AI: Hypes and Doom-sayings

Ever since the industrial revolution, humanity has seen many technological breakthroughs with profound impacts on how we live. Each breakthrough has been met with hype that consecrates the technology as the solution to many of the problems facing mankind, and with fears that demonize it as a source of new problems for society. The same pattern has repeated with the rapid development of artificial intelligence in recent years. There are those who regard AI as the ultimate tool that not only liberates human beings from low-level intellectual work so that they can focus on more advanced and creative activities, but also extends human decision-making by overcoming our intellectual and emotional limitations, such as our inherently limited computational abilities and capacity to handle large amounts of data (Gigerenzer, 2020) and our susceptibility to biased judgements due to our reliance on a range of heuristics (Dale, 2015). There are also those who see AI as the ultimate threat to humanity, as it might become self-conscious and revolt against human beings, as captured in the concept of the technological singularity (Braga & Logan, 2019) and as depicted in many sci-fi films. Trumpeting the benefits of AI as if it were the saviour of humanity and stamping it into the ground on the basis of far-fetched fears could both be wrong. It is more useful and constructive to identify the major realistic concerns about AI that require our serious attention, and one such concern is the bias and discrimination introduced by AI decision-making. Although AI is a powerful tool for improving productivity and living standards, it does not tend to benefit all in an equal manner. Due to both its technical design and the social and political contexts that foster its development, it is bound to favour certain social groups and to bias or discriminate against others.
Acknowledging the bias and discrimination of AI would allow us to work hard to contain and minimize such negative impacts of AI, although we may never eliminate them.

AI: Not So Much A Ghost in the Shell

Before the bias and discrimination of AI can be discussed meaningfully, the concept of AI itself needs some clarification. The AI discussed here is not the idealized or even anthropomorphized kind found in the Ghost in the Shell and The Matrix film series, or Cortana in the recent Halo TV series. Such potent images of AI are often presented to attract more funding or to create marketing hype that excites audiences and consumers and galvanizes the development of the industry (Siegel, 2023). As Crawford (2021, p. 9) has shrewdly pointed out, the humbler term “machine learning” is more frequently used in the computer science community, while “the nomenclature of AI is often embraced during funding application season, when venture capitalists come bearing check-books, or when researchers are seeking press attention for a new scientific result”. Fundamentally, AI as it exists today is still a system of algorithms supported by hardware, one that requires human developers to make “all processes and data to be explicit and formalized” (Crawford, 2021). In other words, the AI we have today still consists of man-made algorithms. What distinguishes AI from other well-designed algorithms is that it can improve “over time by learning from repeated interactions with users and data” (Flew, 2021, p. 83). As such, the application and evolution of AI require at least three key elements: well-designed algorithms, access to large amounts of data, and capital investment to obtain increasingly powerful computational capabilities (Flew, 2021). However, it is exactly because of these three elements that AI tends to be biased and discriminatory.

“Oops, I did it again”, says the AI

Cases of AI that biases or discriminates against users are not rare, and they can be found in many areas of AI application. In 2019, a paper examined the underlying bias and discrimination against African Americans in an algorithm used by the U.S. healthcare system to guide health decision-making (Obermeyer et al., 2019). It found that the algorithm used health costs as a proxy for health needs and, since African Americans tend to have lower levels of health spending due to their disadvantaged socioeconomic conditions, their lower costs were read as lower needs (Obermeyer et al., 2019). As a result, African Americans with the same level of health risk as their white counterparts tend to receive less additional healthcare, and to obtain the same level of additional healthcare they need to have higher health spending than their white counterparts (Obermeyer et al., 2019).
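The proxy problem described above can be illustrated with a toy simulation. This is a minimal sketch, not the algorithm studied by Obermeyer et al. (2019): the spending ratio of 0.7, the cost threshold, the need scale, and all function names are hypothetical assumptions chosen only to show how selecting on cost can disadvantage a group that spends less at the same level of need.

```python
import random

def simulate_patients(n, spend_ratio, seed):
    """Return (need, cost) pairs; cost is true need scaled by the share actually spent."""
    rng = random.Random(seed)
    patients = []
    for _ in range(n):
        need = rng.uniform(0, 10)   # true health need on a hypothetical 0-10 scale
        cost = need * spend_ratio   # observed spending, which the model uses as a proxy
        patients.append((need, cost))
    return patients

# Hypothetical assumption: at equal need, group B spends 70% of what group A spends.
# The same seed gives both groups identical distributions of true need.
group_a = simulate_patients(1000, spend_ratio=1.0, seed=1)
group_b = simulate_patients(1000, spend_ratio=0.7, seed=1)

COST_THRESHOLD = 6.0  # hypothetical cut-off: higher cost means flagged for extra care

def flagged_share(patients):
    """Share of patients whose cost exceeds the threshold."""
    flagged = sum(1 for _, cost in patients if cost > COST_THRESHOLD)
    return flagged / len(patients)

def min_need_to_be_flagged(spend_ratio):
    """True need a patient must reach before their cost crosses the threshold."""
    return COST_THRESHOLD / spend_ratio

share_a = flagged_share(group_a)
share_b = flagged_share(group_b)
print(f"flagged for extra care: A={share_a:.1%}, B={share_b:.1%}")
print(f"need required to be flagged: A={min_need_to_be_flagged(1.0):.2f}, "
      f"B={min_need_to_be_flagged(0.7):.2f}")
```

Although both groups are equally sick by construction, the cost-based rule flags fewer members of group B and requires them to be sicker before they qualify, which mirrors the pattern reported in the study.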

Another case of AI bias and discrimination involves Amazon. Amazon started building an AI tool to help it make screening and hiring decisions in 2014 (Dastin, 2018). However, when the tool was completed and introduced for trial in 2015, it was found that the new system was not rating candidates for software developer positions in a gender-neutral way (Dastin, 2018). Amazon had trained the system on its own employee data from the previous 10 years, and the employees in software developer positions were predominantly male. The AI system picked up this pattern and automatically disfavoured female applicants by detecting key words that indicated an applicant was female, such as “women’s” as in “women’s chess club” or “women’s college” (Dastin, 2018).
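How a model can learn to penalize a word such as “women’s” without ever being told an applicant's gender can be sketched with a deliberately naive scorer. This is not Amazon's system, whose internals are not described in the source; the toy hiring history, the keyword lists, and the weighting rule are all hypothetical assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical toy history of (resume keywords, was_hired). This is not Amazon's data.
history = [
    ({"python", "chess club"}, True),
    ({"java", "robotics"}, True),
    ({"python", "robotics"}, True),
    ({"java", "chess club"}, True),
    ({"python", "women's chess club"}, False),
    ({"java", "women's college"}, False),
    ({"python", "women's college"}, True),
    ({"java", "robotics"}, False),
]

def learn_keyword_weights(records):
    """Weight each keyword by how far its hire rate sits from the overall hire rate."""
    overall = sum(hired for _, hired in records) / len(records)
    seen = defaultdict(int)
    hired_with = defaultdict(int)
    for keywords, hired in records:
        for kw in keywords:
            seen[kw] += 1
            hired_with[kw] += hired
    return {kw: hired_with[kw] / seen[kw] - overall for kw in seen}

def score(keywords, weights):
    """Sum the learned weights of a resume's keywords; unknown keywords count as zero."""
    return sum(weights.get(kw, 0.0) for kw in keywords)

weights = learn_keyword_weights(history)

# Two candidates who differ only in the wording of one activity.
candidate_a = {"python", "chess club"}
candidate_b = {"python", "women's chess club"}
print(score(candidate_a, weights), score(candidate_b, weights))
```

Gender is never an input here. The scorer simply reproduces whatever regularities exist in past decisions, so a keyword that happened to co-occur with rejections ends up with a negative weight.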

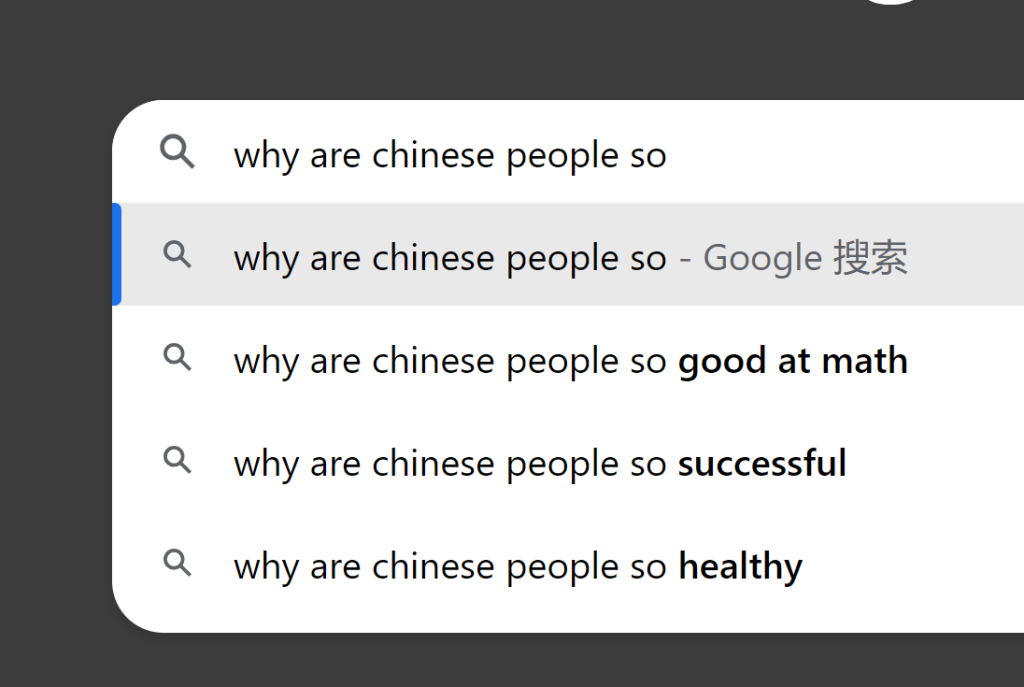

A third case of AI bias and discrimination is Google’s search engine. This is clearly recorded and discussed in Noble (2018), in which the author typed “black girls” into Google Search and was returned numerous websites that pornographize black girls, and was offered a range of derogatory terms such as “loud”, “lazy”, “mean”, “ghetto” and “rude” when typing “why are black people so”. These searches occurred in 2011 and 2013 respectively (Noble, 2018). Nowadays, the Google search engine has become smarter and no longer makes such mistakes. However, stereotyping can still be observed. For example, when the author of this essay typed in “Why are Chinese people so” on April 5, 2024, the top suggestion was “good at math”, which is a strong stereotype of Chinese people (Cvencek et al., 2015).

Thus, although Google has revamped its search engine to avoid autosuggesting search terms that reflect negatively on specific groups of people, the persistence of positive stereotypes suggests that the underlying algorithms run on the same logic and have merely been enhanced to cull the negative results and avoid offending the public.

The Original Sins of AI In Bias and Discrimination

The three cases of AI going off target and introducing bias and discrimination were not randomly picked. Instead, each corresponds to an issue in one of the three elements of AI mentioned in the second section, i.e., the algorithm, the data, and capital. These also correspond to the three sources of AI bias and discrimination identified by Ferrer et al. (2021), which are bias in modelling, bias in training, and bias in application.

According to Ferrer et al. (2021, p. 2), bias can be introduced into an AI system at the modelling stage, such as “through smoothing or regularization parameters to mitigate or compensate for bias in the data” or when “modelling in cases with the usage of objective categories to make subjective judgements”. The discrimination of the U.S. healthcare system algorithm clearly reflects a mistake of the latter kind. Lacking a variable that directly captures the subjective judgement of a population's health risks, the developers of the AI program used an objective category, in this case health cost, as a proxy or substitute. It is normally reasonable to assume that health risks and health costs are positively correlated. However, this assumption completely ignores the social context faced by African Americans of low socioeconomic status, who tend to suppress their health spending due to limited disposable income, regardless of their health risks. This lack of consideration for the situation of African Americans in the modelling process reflects an inherent bias of the developers, who designed the model based on their own assumption that people with higher health risks tend to spend more on healthcare. This assumption might hold for middle-class white Americans who do have sufficient disposable income to spend on healthcare. After all, as Crawford (2021) has indicated, the AI industry is not large and is predominantly controlled by a small number of well-educated people in mostly developed countries. As reported by Paul (2019), the AI industry has a disastrous lack of diversity, as it is an overwhelmingly white and male field. Thus, it is understandable that the AI algorithms developed fundamentally reflect the assumptions and worldviews of white males, to the exclusion of women and ethnic minorities.

Bias, and therefore discrimination, can also be introduced into an AI system at the training stage. As Ferrer et al. (2021) indicated, “if a dataset used for training purposes reflects existing prejudices, algorithms will very likely learn to make the same biased decisions”. This is exactly what happened with the AI hiring tool designed by Amazon. As shown in the case, the data used to train the tool contained an existing gender bias against female software developers, as Amazon had recruited predominantly male software developers over the previous 10 years. This biased data was then fed into the AI hiring tool for training, and the tool quickly learned that female applicants should be disfavoured, although this was never intended by the developers. Since the AI learning process is highly opaque, it is difficult for developers to know exactly what an AI tool has learned and how it arrives at the decisions it does. This may not be that apparent in the Amazon case, but it is in the Netflix Prize, a crowdsourced challenge to improve the accuracy of Netflix's recommendation algorithm by 10%. The winning solution used a technique called SVD (singular value decomposition) that disregards user demographic information, relies only on users' past ratings, and decomposes the matrix of those historical film ratings into row and column vectors (Hallinan & Striphas, 2016). It is impossible for the developers to know what these row and column vectors represent, although they prove to be the best predictors of how users rate films (Hallinan & Striphas, 2016). Thus, on the one hand, an AI algorithm being trained on data can pick up existing patterns of bias and discrimination not intended by its developers, as shown in the Amazon case, while on the other hand the developers cannot necessarily make sense of how the algorithm optimizes its decisions, as shown in the Netflix Prize case.
Combined, they constitute a recipe for disaster in the sense that the developers know that the AI algorithms work for now but do not know how they work or whether they will continue to work in the future or in different contexts.
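The opacity of such factors can be seen even in a tiny example. The sketch below is not the Netflix Prize solution: it uses a hypothetical 4×4 ratings matrix and a plain power iteration, standing in for a library SVD routine, to extract only the single strongest factor after subtracting the average rating. All names and values are assumptions for illustration.

```python
import math

# Hypothetical ratings: rows are users, columns are films, values are 1-5 stars.
ratings = [
    [5.0, 4.0, 1.0, 1.0],
    [4.0, 5.0, 2.0, 1.0],
    [1.0, 1.0, 5.0, 4.0],
    [2.0, 1.0, 4.0, 5.0],
]

def center(matrix):
    """Subtract the overall mean rating so factors describe deviations from average."""
    mean = sum(sum(row) for row in matrix) / (len(matrix) * len(matrix[0]))
    return [[x - mean for x in row] for row in matrix]

def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def top_factor(matrix, iterations=100):
    """Power iteration for the largest singular value and its user and film vectors."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    v = normalize([float(j + 1) for j in range(n_cols)])  # arbitrary starting guess
    for _ in range(iterations):
        # Alternate u = A v and v = A^T u, renormalizing after each step.
        u = normalize([sum(matrix[i][j] * v[j] for j in range(n_cols))
                       for i in range(n_rows)])
        v = normalize([sum(matrix[i][j] * u[i] for i in range(n_rows))
                       for j in range(n_cols)])
    av = [sum(matrix[i][j] * v[j] for j in range(n_cols)) for i in range(n_rows)]
    sigma = math.sqrt(sum(x * x for x in av))
    return [x / sigma for x in av], sigma, v

user_factor, strength, film_factor = top_factor(center(ratings))
print("user factor:", [round(x, 2) for x in user_factor])
print("film factor:", [round(x, 2) for x in film_factor])
```

The factor separates the first two users from the last two, and the first two films from the last two, which is useful for prediction. Nothing in the numbers says what the split means, whether genre, era, or something with no human name at all, and that is the interpretability gap the paragraph above describes.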

A third condition under which bias and discrimination can be introduced into AI systems is in their application, as indicated by Ferrer et al. (2021). As Crawford (2021, p. 8) has argued, AI is a tool that is “ultimately designed to serve existing dominant interests”. As shown in the Google case, the search engine is designed to improve search efficiency or to return attractive content that grabs user attention, and that attention is then turned into a commodity that can be sold to advertisers to maximize the commercial interests of both Google and its advertisers (O’Reilly et al., 2024). As a result, culling the bias and discrimination in its algorithms is neither Google’s obligation as a private business nor in its interest.

Making Peace with AI Bias and Discrimination

For the reasons discussed above, we may not see the elimination of bias and discrimination in AI applications in the short term, or indeed ever, because the social inequalities underlying them may never be removed. However, since we seem unable to stop or escape the rise of AI, we should make continuous efforts to reduce and minimize the impacts of AI bias and discrimination. This will require backbreaking technical work on AI modelling assumptions and on the representativeness of training data, as well as more ambitious plans to address the lack of diversity in the AI industry and the increasing inequality in the distribution of power and wealth brought about by AI.

References

Braga, A., & Logan, R. K. (2019). AI and the singularity: A fallacy or a great opportunity? Information, 10(2), 73.

Crawford, K. (2021). Introduction. In The atlas of AI: Power, politics, and the planetary costs of artificial intelligence (pp. 1-21). Yale University Press.

Cvencek, D., Nasir, N. I. S., O’Connor, K., Wischnia, S., & Meltzoff, A. N. (2015). The development of math–race stereotypes: “They say Chinese people are the best at math”. Journal of Research on Adolescence, 25(4), 630-637.

Dale, S. (2015). Heuristics and biases: The science of decision-making. Business Information Review, 32(2), 93-99.

Dastin, J. (2018). Insight – Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. https://www.reuters.com/article/idUSKCN1MK0AG/

Ferrer, X., Van Nuenen, T., Such, J. M., Coté, M., & Criado, N. (2021). Bias and discrimination in AI: a cross-disciplinary perspective. IEEE Technology and Society Magazine, 40(2), 72-80.

Flew, T. (2021). Issues of Concern. In Regulating Platforms (pp. 79–86). Cambridge: Polity.

Gigerenzer, G. (2020). What is bounded rationality? In Routledge handbook of bounded rationality (pp. 55-69). Routledge.

Hallinan, B., & Striphas, T. (2016). Recommended for you: The Netflix Prize and the production of algorithmic culture. New Media & Society, 18(1), 117-137.

Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447-453.

O’Reilly, T., Strauss, I., & Mazzucato, M. (2024). Algorithmic Attention Rents: A theory of digital platform market power. Data & Policy, 6, e6.

Paul, K. (2019). ‘Disastrous’ lack of diversity in AI industry perpetuates bias, study finds. The Guardian. https://www.theguardian.com/technology/2019/apr/16/artificial-intelligence-lack-diversity-new-york-university-study

Siegel, E. (2023). The AI Hype Cycle Is Distracting Companies. Harvard Business Review. https://hbr.org/2023/06/the-ai-hype-cycle-is-distracting-companies
