Introduction

Digital networking platforms such as social media have made it easier, more efficient and faster for people to share their ideas. However, some of what is shared is hateful and hurtful speech (Alkiviadou, 2019). AI now helps people analyse big data and is a major engine behind the Internet of Things. It brings considerable convenience and many benefits, but it also brings targeted sales pitches, the collection of personal information, and the ability to generate realistic content efficiently, all of which are significant pitfalls (Manheim & Kaplan, 2019). This blog explores how AI amplifies online harm on digital networks, especially social media, and how AI can be used to mitigate that harm. It also sets out the challenges of using AI for this purpose, in order to understand AI as a double-edged sword.

Hate speech and online harm on the Internet

Online harm covers a wide range of issues, including hate speech and other negative experiences that may occur online. These harms can affect individuals as well as communities and may be psychological, social or even physical. Hate speech runs contrary to human rights, and the popularity of the internet has multiplied its spread. The discrimination, prejudice and intimidation fostered by hate speech and online harm make it difficult for individuals to take part in collective life, and they cause great distress (Flew, 2021).

The impact of online victimisation and hate speech on individuals and communities is well documented (Williams & Tregidga, 2014). Online services are booming: the first generation of social networking site (SNS) tools attracted more than 100 million users in less than four years. Social media is becoming an indispensable part of daily life. Digital networking platforms are characterised by freedom and immediacy, which attract ever more users and generate huge amounts of traffic. Platforms such as Facebook and WeChat each have hundreds of millions of monthly active mobile users. As social media penetrates more deeply into people's real lives, people grow more dependent on it, and this gives false information and online harm a serious impact (Zhu et al., 2018). The internet offers users anonymity and high mobility. As a result, problems such as online harassment, hate speech and online fraud run rampant and are difficult to tackle through traditional law enforcement (Banks, 2010). The emergence and development of artificial intelligence (AI) has in turn given rise to new forms of hate speech and online harm.

In what ways does artificial intelligence contribute to online harm

Creating realistic but false AI-generated content that spreads disinformation.

Bontridder & Poullet (2021) argue that AI plays a major role in the current spread of disinformation online. They also argue that this raises moral and human rights concerns and has harmful effects.

Algorithmic bias: prioritising sensational or divisive content.

Verma (2019) argues that we live in an era of algorithms that are steadily evolving and growing more complex. Reviewing Cathy O'Neil's work, Verma highlights her warning that big data is dangerous because it reinforces discrimination and inequality. In particular, such algorithms tend to promote sensational or divisive content.
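The Python sketch below makes the mechanism concrete with a feed ranked purely by engagement. The posts, the numbers and the weights in `engagement_score` are all hypothetical and are not taken from any real platform. The sketch only shows that a ranking which rewards shares and comments, and knows nothing about content, pushes whatever provokes the most reactions to the top.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    likes: int
    shares: int
    comments: int


def engagement_score(post: Post) -> float:
    # Hypothetical weights: shares and comments count as stronger engagement
    # signals than likes. Nothing here inspects what the post actually says.
    return post.likes + 3 * post.shares + 2 * post.comments


def rank_feed(posts: list[Post]) -> list[Post]:
    # Highest engagement first, as in a purely engagement-optimised feed
    return sorted(posts, key=engagement_score, reverse=True)


# Hypothetical example posts and engagement counts
feed = [
    Post("Local library extends its opening hours", likes=120, shares=5, comments=10),
    Post("Divisive post attacking another group", likes=90, shares=60, comments=80),
    Post("Weather update for the weekend", likes=200, shares=2, comments=4),
]

for post in rank_feed(feed):
    print(engagement_score(post), post.text)
```

The score never looks at what a post says. A provocative post that draws many angry replies therefore outranks calmer content, which is one way divisive material can end up amplified.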

Case Study

The New York Times reported that fake, sexually explicit images of Taylor Swift spread rapidly on social media. The images harmed Swift herself and her fans. They also highlighted the need to protect women and sparked a conversation about how to combat the spread of this kind of content. One of the fake images was viewed 47 million times before it was taken down, and the sheer reach of social media makes it difficult to stop such images from spreading further.

Ben Colman, co-founder and chief executive of Reality Defender, a company that detects deepfakes, said after examining the images that they had been created using an AI-driven technique.

(New York Times, January 26, 2024)

Catherine, Princess of Wales, was caught up in a similar furore in 2024. Posts on the social media platform X speculated that Kate might be dead. Kensington Palace stated that she was unable to carry out royal duties while recovering from surgery, but conspiracy theorists still spread the idea that she had disappeared or died. Several internet sleuths claimed that images of the princess had been created with artificial intelligence, in the same way that fakes of public figures such as President Biden have been produced. The episode shows how divided the internet has become over basic facts, and how artificial intelligence can deepen that division (Hsu, New York Times, March 20, 2024).

It is not only public figures who are harmed. Ordinary people also suffer online harm that artificial intelligence makes worse.

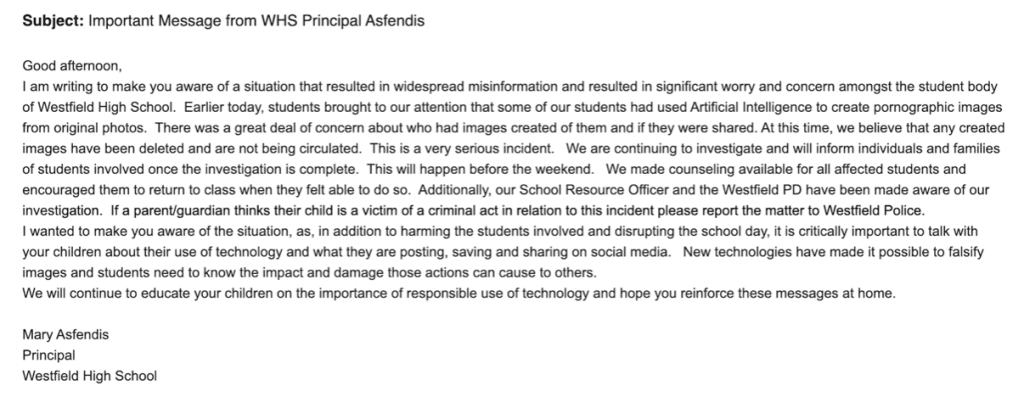

Some boys at Westfield High School used "nudification" apps to turn girls' photos, without their consent, into realistic AI-generated nude images. They then shared these images on online platforms such as Instagram. The school's headmaster said that all school districts are grappling with the challenges and impacts of AI and other technologies. According to the report, experts warn that such images can cause serious mental health problems for the girls targeted. The images can also damage their reputations, threaten their personal safety, and jeopardise their future education and employment. The FBI has warned that it is illegal to distribute AI-generated child sexual abuse material, including sexually explicit images of minors created with AI.

(Singer, New York Times, April 8, 2024)

(Image courtesy of the New York Times)

Artificial intelligence is steadily becoming part of everyday life. Its rapid development has raised alarm on many fronts about how it might be abused, and Elon Musk has argued that government oversight and regulation of AI are essential (Scherer, 2016).

How to Detect and Mitigate Online Harm Using Artificial Intelligence

Online hate speech and online abuse have become commonplace (Loebbecke, Luong, & Obeng-Antwi, 2021). Using AI to detect online hate speech has become a popular research topic in machine learning (Ullmann & Tomalin, 2020).

Natural Language Processing (NLP) techniques for identifying and labelling harmful content.

Sharma, Singh, Kshitiz, Singh and Kukreja (2017) investigated an efficient algorithm for identifying hurtful speech, then analysed the comments it flagged to test whether it was effective. They used NLP and machine learning to analyse social commentary and assess the impact of the speech. They found that the best-performing classifiers can detect cyberbullying on social media.
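Such a pipeline has a general shape: tokenise the text, turn it into word counts, and train a classifier on labelled examples. The Python sketch below shows that shape in a few lines. It is not Sharma et al.'s method. It is a hypothetical multinomial naive Bayes classifier with add-one smoothing, trained on six made-up comments, and a real system would need far more data and careful evaluation.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep simple word tokens (letters and apostrophes)
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesClassifier":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)  # how many training texts per class
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_scores(self, text: str) -> dict[str, float]:
        n_docs = sum(self.doc_counts.values())
        v = len(self.vocab)
        scores = {}
        for c in self.classes:
            score = math.log(self.doc_counts[c] / n_docs)  # log prior of the class
            for word in tokenize(text):
                # Add-one smoothing: unseen words get a small non-zero probability
                count = self.word_counts[c][word] + 1
                score += math.log(count / (self.total_words[c] + v))
            scores[c] = score
        return scores

    def predict(self, text: str) -> str:
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Hypothetical toy training data
train_texts = [
    "you are stupid and worthless",
    "nobody wants you here loser",
    "go away you pathetic idiot",
    "thanks for sharing this great post",
    "what a lovely photo of your dog",
    "congratulations on the new job",
]
train_labels = ["harmful"] * 3 + ["ok"] * 3

clf = NaiveBayesClassifier().fit(train_texts, train_labels)
print(clf.predict("you pathetic loser"), clf.predict("what a great photo"))
```

Working in log probabilities avoids numerical underflow when many small word probabilities are multiplied together.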

By learning from large amounts of data, AI can continually improve its ability to tell different kinds of content apart.

The murder of Drummer Lee Rigby, a British soldier, in Woolwich, London, in 2013 caused a huge reaction on social media. Researchers used tweets posted in its aftermath to train and test a supervised machine-learning text classifier. The classifier distinguishes hateful or antagonistic content targeting race, ethnicity or religion from other content. The study also showed how the classifier's output can be used in statistical models to forecast the likely spread of cyber hate in a sample of Twitter data (Burnap & Williams, 2015).
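Studies like this one judge a classifier on data it was not trained on. The Python sketch below shows that step: a seeded shuffle-and-split of labelled examples, followed by precision, recall and F1 for the "hateful" class. The function names and example labels are hypothetical. They illustrate standard evaluation metrics rather than Burnap and Williams's exact setup.

```python
import random


def train_test_split(examples, test_fraction=0.25, seed=0):
    # Shuffle a copy with a fixed seed so the split is reproducible
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def precision_recall_f1(y_true, y_pred, positive="hateful"):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # correctly flagged
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # wrongly flagged
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical held-out labels and classifier predictions
y_true = ["hateful", "hateful", "other", "other", "hateful", "other"]
y_pred = ["hateful", "other", "other", "hateful", "hateful", "other"]
print(precision_recall_f1(y_true, y_pred))
```

Precision shows how many flagged posts really were hateful. Recall shows how many hateful posts were caught.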

Success Case:

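Jigsaw, a unit of Google, developed a project called the Perspective API. It uses machine learning to score how likely a comment is to be perceived as toxic, which helps platforms flag harmful content and mitigate online harm. Its limits are also documented. Hosseini, Kannan, Zhang and Poovendran (2017) showed that subtly modified text, such as deliberate misspellings, could deceive it into assigning much lower toxicity scores.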
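The sketch below shows how a platform might call Perspective. It builds a request for the API's `comments:analyze` method and reads the TOXICITY summary score from the response. It reflects our understanding of the public Perspective API documentation (the endpoint, `requestedAttributes` and `attributeScores`), so it should be checked against the official docs before use. The API key and the sample response values are placeholders.

```python
import json
import urllib.request

# Endpoint as we understand it from the public Perspective API docs (assumption: verify)
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request_body(text, attributes=("TOXICITY",), languages=("en",)):
    # Each requested attribute maps to an (empty) options object
    return {
        "comment": {"text": text},
        "languages": list(languages),
        "requestedAttributes": {attr: {} for attr in attributes},
    }


def extract_scores(response):
    # Pull the overall (summary) probability for each returned attribute
    return {
        attr: data["summaryScore"]["value"]
        for attr, data in response.get("attributeScores", {}).items()
    }


def analyze(text, api_key):
    # Network call: needs a real API key (placeholder in this sketch)
    body = json.dumps(build_request_body(text)).encode("utf-8")
    req = urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return extract_scores(json.load(resp))


# Placeholder response shaped like the documented format (values are made up)
sample_response = {
    "attributeScores": {"TOXICITY": {"summaryScore": {"value": 0.87, "type": "PROBABILITY"}}}
}
print(extract_scores(sample_response))
```

Only `analyze` touches the network. The other two helpers can be tested offline against a saved response.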

In "Governing hate: Facebook and digital racism", Siapera and Viejo-Otero (2021) note Mark Zuckerberg's view on improving AI. He sees AI that proactively flags potential problems to the review team, and in some cases acts on content automatically, as paramount to enforcing Facebook's policies. Facebook also uses advanced machine learning models to detect hate speech and other online harm in multiple languages. These models are trained on sample content so that they can recognise a wider variety of new content.
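The "flag for review, sometimes act automatically" workflow described above can be sketched as simple thresholding on a model's harm score. The thresholds (0.95 and 0.6), the action names and the `triage` helpers are hypothetical choices for illustration. They are not Facebook's actual rules.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "remove", "human_review" or "allow"
    score: float


def triage(score: float, auto_threshold: float = 0.95, review_threshold: float = 0.6) -> Decision:
    # score: a model's estimated probability that the content is harmful
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability in [0, 1]")
    if score >= auto_threshold:
        return Decision("remove", score)  # confident enough to act automatically
    if score >= review_threshold:
        return Decision("human_review", score)  # proactively report to reviewers
    return Decision("allow", score)


def triage_batch(scored_posts: dict[str, float], **thresholds):
    decisions = {pid: triage(s, **thresholds) for pid, s in scored_posts.items()}
    # Reviewers see the most likely harmful posts first
    review_queue = sorted(
        (pid for pid, d in decisions.items() if d.action == "human_review"),
        key=lambda pid: decisions[pid].score,
        reverse=True,
    )
    return decisions, review_queue


# Hypothetical post IDs and model scores
decisions, queue = triage_batch({"p1": 0.98, "p2": 0.65, "p3": 0.2, "p4": 0.8})
print(queue)
```

Raising `review_threshold` sends fewer posts to human moderators but lets more borderline content through. Where to set it is a policy decision as much as a technical one.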

The use of AI also faces challenges in mitigating hate speech and online harm

AI also has functional and technical shortcomings:

Complexity of languages, cultures and dialects: AI must learn continuously, and recognising harmful content across different languages and regional dialects remains a challenge.

Exacerbating inherent bias: AI models learn from their training data, so any bias in that data can easily carry over into the AI's judgements.

Difficulty in identifying complex scenarios: AI cannot yet take a human perspective and make accurate judgements in situations that require contextual understanding and empathy.
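One common way to check for the bias described in the second point is to compare error rates across groups. For example, an audit can measure how often benign posts written by different groups are wrongly flagged as harmful. The Python sketch below computes a per-group false positive rate. The group names and records are hypothetical.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: (group, is_harmful, flagged_as_harmful) tuples."""
    false_positives = defaultdict(int)
    negatives = defaultdict(int)  # benign posts per group
    for group, truth, pred in records:
        if not truth:
            negatives[group] += 1
            if pred:
                false_positives[group] += 1  # benign post wrongly flagged
    return {g: false_positives[g] / negatives[g] for g in negatives}


# Hypothetical audit records
records = [
    ("group_a", False, False), ("group_a", False, False), ("group_a", False, True),
    ("group_a", False, False), ("group_a", True, True),
    ("group_b", False, True), ("group_b", False, True), ("group_b", False, False),
    ("group_b", False, True), ("group_b", True, True),
]
print(false_positive_rate_by_group(records))
```

Here, benign posts from group_b are wrongly flagged three times as often as those from group_a. A gap like this would suggest the training data or model needs re-examining.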

Conflict between freedom of speech and automated content control:

Flew notes that the UN International Covenant on Civil and Political Rights (ICCPR) guarantees the right to hold opinions without interference and the right to freedom of expression. The Covenant also requires that advocacy of national, racial or religious hatred that constitutes incitement to discrimination, hostility or violence be prohibited by law. Using AI to mitigate hate speech and online harm can therefore come into tension with freedom of expression. Striking the right balance is crucial, both to control online harm and to uphold people's rights.

Conclusion

Digital networks and artificial intelligence are a double-edged sword when it comes to online harm and hate speech. Advances in science, technology and networks are meant to improve the quality of human life. They should not become weapons that violate human rights and spread discrimination and prejudice. AI is both an opportunity and a challenge for society. In the face of the many controversies surrounding it, individuals, platforms, businesses and governments can better counter AI's role in amplifying online harm by working together. Using AI properly also means balancing regulation and freedom. On the one hand, it is necessary to promote freedom of speech, minimise censorship and speak out against hate speech and online harm. On the other, it is necessary to make full use of the law to restrict and sanction hate speech and online harm (Flew, 2021).

REFERENCE LIST

Alkiviadou, N. (2019). Hate speech on social media networks: towards a regulatory framework? Information & Communications Technology Law, 28(1), 19–35. https://doi.org/10.1080/13600834.2018.1494417

Banks, J. (2010). Regulating hate speech online. International Review of Law, Computers & Technology, 24(3), 233–239. https://doi.org/10.1080/13600869.2010.522323

Bontridder, N., & Poullet, Y. (2021). The role of artificial intelligence in disinformation. Data & Policy, 3, e32. https://doi.org/10.1017/dap.2021.20

Burnap, P., & Williams, M. L. (2015). Cyber hate speech on Twitter: An application of machine classification and statistical modeling for policy and decision making. Policy & Internet, 7(3), 223–242. https://doi.org/10.1002/poi3.85

Flew, T. (2022). Regulating platforms. Polity Press.

Hosseini, H., Kannan, S., Zhang, B., & Poovendran, R. (2017). Deceiving Google’s Perspective API built for detecting toxic comments. arXiv. https://doi.org/10.48550/arXiv.1702.08138

Loebbecke, C., Luong, A. C., & Obeng-Antwi, A. (2021). AI for tackling hate speech. In ECIS 2021 Proceedings.

Manheim, K., & Kaplan, L. (2019). Artificial intelligence: Risks to privacy and democracy. Yale Journal of Law and Technology, 21, 106–188.

Scherer, M. U. (2016). Regulating artificial intelligence systems: Risks, challenges, competencies, and strategies. Harvard Journal of Law & Technology, 29(2), 353–400.

Sharma, H. K., Singh, T., Kshitiz, K., Singh, H., & Kukreja, P. (2017). Detecting hate speech and insults on social commentary using NLP and machine learning. Int J Eng Technol Sci Res, 4(12), 279–285.

Siapera, E., & Viejo-Otero, P. (2021). Governing hate: Facebook and digital racism. Television & New Media, 22(2), 112–130.

Ullmann, S., & Tomalin, M. (2020). Quarantining online hate speech: Technical and ethical perspectives. Ethics and Information Technology, 22, 69–80. https://doi.org/10.1007/s10676-019-09516-z

Verma, S. (2019). Weapons of math destruction: How big data increases inequality and threatens democracy. Vikalpa, 44(2), 97–98. https://doi.org/10.1177/0256090919853933

Williams, M. L., & Tregidga, J. (2014). Hate crime victimization in Wales: Psychological and physical impacts across seven hate crime victim types. The British Journal of Criminology, 54(5), 946–967. https://doi.org/10.1093/bjc/azu043

Zhu, H., Wu, H., Cao, J., Fu, G., & Li, H. (2018). Information dissemination model for social media with constant updates. Physica A: Statistical Mechanics and its Applications, 502, 469–482. https://doi.org/10.1016/j.physa.2018.02.142

(This blog was written with the help of grammar-checking software, translation software and ChatGPT.)
