Fired by a Machine: How Amazon’s Algorithm Decides Who Loses Their Job

Imagine waking up one day to find out you’ve been fired, not by your boss or by HR, but by an algorithm. No phone call. No explanation. Just an automated message telling you that you’re no longer eligible to work. That’s exactly what happened to 63-year-old veteran Stephen Normandin, a delivery driver for Amazon Flex, who was dismissed without ever speaking to a human. The system decided he wasn’t efficient enough, and that was the end.

Normandin’s story is not a glitch in the system. It is the system.

Amazon’s warehouse operations are powered by more than just robots and conveyor belts. Behind the scenes, a complex web of algorithms tracks every worker’s movements, including how fast they scan packages, how often they pause for breaks, and how efficiently they move.

Figure 1. Amazon warehouse workers are monitored by algorithmic systems that track every movement and performance metric. (Getty Images)

This data feeds into a productivity scoring system that can, with little or no human oversight, generate automated warnings, disciplinary notes, and ultimately, terminations.

In one Baltimore warehouse, roughly 300 full-time employees were fired in about a year because of “productivity concerns.” Many workers didn’t even know what they had done wrong. As reported by The Verge, the system “automatically generates warnings and terminations, with only rare input from supervisors.”

This new form of workplace automation isn’t just about streamlining logistics; it’s about shifting power. It strips out the messy, human parts of management, such as empathy, context, and dialogue, and replaces them with metrics, thresholds, and cold efficiency. On paper, it might look like progress. But for thousands of workers on the ground, it feels more like being ruled by an unfeeling machine.

As artificial intelligence and algorithmic systems continue to spread across industries, we must ask: What happens when machines manage people? And what happens when those people have no voice in the process?

In this blog post, we’ll explore the rise of algorithmic management through Amazon’s firing system. We’ll examine the ethical and social risks of letting automated systems make life-altering decisions, and why this trend signals a dangerous shift in the future of work.

The Algorithm Behind the Curtain

At Amazon, time isn’t just money; it is a metric. Every second a warehouse worker pauses, whether to stretch, drink water, or simply breathe, is logged. The company’s Time Off Task (TOT) system monitors each employee’s activity in granular detail. Deviate from expected productivity benchmarks, and the system flags you. Rack up enough flags, and the system initiates disciplinary measures, in some cases including termination, without direct human intervention. This is not science fiction. It’s business as usual.
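To make this escalation logic concrete, here is a deliberately simplified Python sketch of how a threshold-and-flag system like the one described above could work. It is not Amazon’s code, whose details are not public: the 30-minute idle limit, the three-step warning ladder, and every name below are hypothetical assumptions chosen purely for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical values: Amazon's real thresholds and escalation steps are not public.
TOT_LIMIT_MINUTES = 30
ESCALATION_LADDER = ["warning", "final warning", "termination"]


@dataclass
class WorkerRecord:
    worker_id: str
    flags: int = 0
    actions: List[str] = field(default_factory=list)


def review_shift(record: WorkerRecord, idle_minutes: float) -> Optional[str]:
    """Flag a shift whose idle time exceeds the limit and escalate automatically.

    No human is consulted, and there is no parameter for the reason behind the idle time.
    """
    if idle_minutes <= TOT_LIMIT_MINUTES:
        return None  # within the allowance: no flag
    record.flags += 1
    # Each new flag moves one rung up the ladder; once at the top, it stays there.
    step = min(record.flags, len(ESCALATION_LADDER)) - 1
    action = ESCALATION_LADDER[step]
    record.actions.append(action)
    return action


# Usage: five shifts, three of them over the limit.
worker = WorkerRecord("A123")
for idle in [12, 41, 35, 8, 52]:
    review_shift(worker, idle)
print(worker.actions)  # ['warning', 'final warning', 'termination']
```

Even in this toy version, the function has no input for why a worker was idle, so every pause counts the same, and three over-limit shifts are enough to end a job.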

In a 2019 investigation, The Verge revealed that Amazon’s automated tracking systems were not just monitoring workers—they were replacing traditional managers by issuing warnings and triggering firings automatically (The Verge, 2019). And workers had little recourse. Many discovered they had been let go via app notifications. Others never received an explanation at all.

Normandin himself was deactivated from Amazon Flex after four years of service because of a low performance rating generated by the app. “There was no appeal process,” he told Bloomberg. “I feel totally helpless” (Bloomberg, 2021).

The logic is clear: automate everything. But this logic assumes that algorithms are better decision-makers than humans. That they are neutral, fair, and objective. That they can manage labor without prejudice. But these assumptions are deeply flawed.

Algorithmic Management Is Not Neutral

Despite its sleek veneer of efficiency, Amazon’s algorithmic management system reflects a broader shift in the digital economy: replacing human judgment with automated metrics and calling it progress. But what happens when that system doesn’t understand nuance, when it fails to distinguish between a temporary slowdown and systemic underperformance, or when it punishes someone simply for being human? In Atlas of AI, Kate Crawford (2021) argues that what we often frame as technical systems are, in reality, deeply political architectures. “Artificial intelligence is neither artificial nor intelligent,” she writes. “It is made from natural resources, fuel, human labor, data, classification, and infrastructures.” In Amazon’s warehouses, the power of this infrastructure is exercised not through conversation or negotiation, but through a screen that delivers numbers—cold, context-free numbers.

Figure 2. An Amazon worker interacting with the digital performance tracking system. The screen records every scan, pause, and gesture to feed into algorithmic management. (Photo by Spencer Platt/Getty Images)

These numbers determine the fate of thousands of workers every day.

One might argue that the use of data makes such decisions more “objective.” But as Helen Nissenbaum (2015) contends, the ethical use of data hinges not only on whether it is accurate but on whether its use respects the norms of the context in which it was collected. Performance data gathered for workflow coordination should not be unilaterally repurposed for employment termination. Doing so violates what Nissenbaum terms contextual integrity, and represents a shift from supportive management to surveillance-driven control.

And this control operates most harshly on those with the least power.

As Marwick and boyd (2018) point out, algorithmic systems rarely serve everyone equally. Marginalised workers, especially people of color, migrants, older employees, and those with disabilities, are often the least able to understand or contest how they are being evaluated. The system doesn’t explain itself, and appeals vanish into a digital void. In these cases, algorithmic governance doesn’t just replace human oversight; it erases human experience altogether.

This systemic invisibility has emotional and material consequences. Workers report high levels of stress, fear of termination, and a pervasive sense of being watched (Goggin et al., 2017). Their labor is reduced to data points, and their value measured in throughput, not humanity. As one former Amazon associate told The Guardian, “You’re not a person there. You’re just another number” (Levin, 2020).

The lack of empathy is built into the system itself. There is no button to explain that you had a migraine, or that your child was sick, or that the scanner froze for a minute. The system does not care. It only measures, penalises, and moves on.

This mirrors what Safiya Noble (2018) calls algorithmic oppression: the reproduction of societal inequalities through supposedly neutral technical systems. While Amazon’s system doesn’t “see” race or class explicitly, it disproportionately harms those who are structurally less likely to meet rigid productivity quotas, including people juggling care responsibilities, dealing with chronic illness, or navigating language barriers. In this way, efficiency becomes a proxy for exclusion.

We must ask: what kind of workplace are we building, if workers can be fired by a machine, with no explanation and no way to be heard? If we wouldn’t allow this level of depersonalisation in a courtroom or a school, why do we tolerate it in employment?

Who Governs the Algorithm? Closing the Accountability Gap

If we’ve reached a point where algorithms can hire, fire, and monitor workers without human oversight, then we also need to ask: who is monitoring the algorithms?

Amazon’s automation model reveals a regulatory vacuum. When a machine makes a bad call, such as misidentifying a productive worker as a slacker, miscalculating idle time, or enforcing productivity goals that ignore physical limitations, who is accountable? The system acts, but no individual can be held responsible. This is not just a technical issue. It’s a democratic one.

This is where the current structures of corporate responsibility and public governance break down. The 2017 Digital Rights in Australia report points out that most people believe governments and tech companies are jointly responsible for protecting digital rights (Goggin et al., 2017). But when a decision is made by an opaque algorithm, it often slips through both regulatory and ethical cracks.

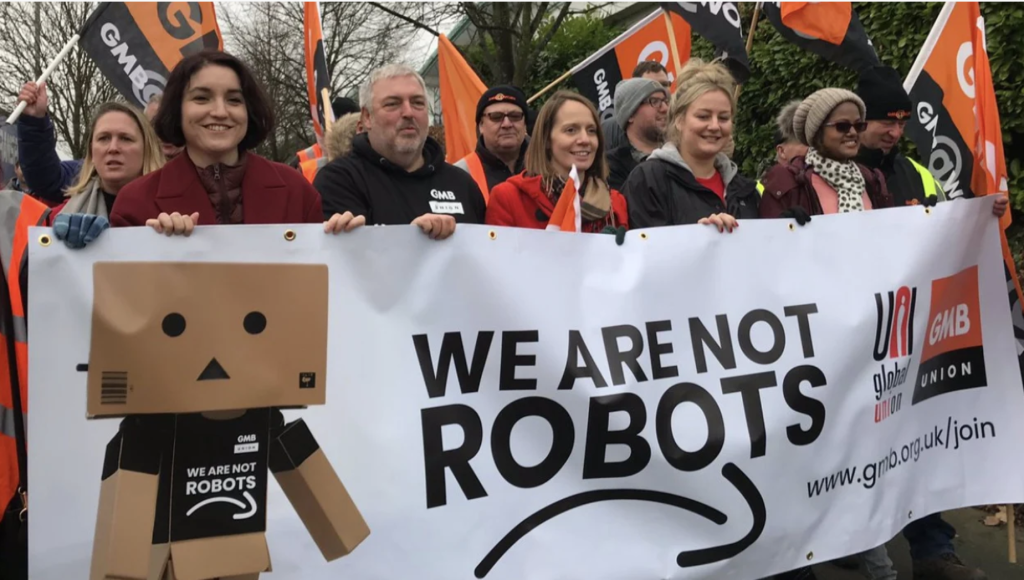

Figure 3. Workers protest Amazon’s automated performance management during a union-led demonstration in the UK. (GMB Union, via UNI Global Union)

Workers are left in a Kafkaesque limbo: no clear avenue for appeal, no transparency, and no human accountability.

To fill this vacuum, governance needs to catch up—not with more tech, but with stronger human-centered policies.

For example, the European Union’s proposed Artificial Intelligence Act classifies high-risk uses of AI—including employment decision-making—as needing mandatory oversight, transparency, and human intervention (European Commission, 2021). Meanwhile, the UNESCO Recommendation on the Ethics of Artificial Intelligence (2021) sets out a global framework for AI governance, calling for protections such as:

Algorithmic explainability

Human oversight in decision-making

Mechanisms for accountability and redress

Special protections for vulnerable and marginalised groups

But none of these safeguards mean anything if they remain policy on paper.

In practice, few jurisdictions enforce these principles when it comes to private-sector platforms like Amazon. What’s needed is not just corporate “AI ethics statements,” but legally binding obligations. That means:

Requiring companies to conduct independent audits of all high-risk automated decision systems (ADS)

Making it mandatory to disclose when workers are subject to automated management

Giving workers a legal right to human review of algorithmic decisions

Imposing fines or liability when algorithmic harms occur

In other words, workers need more than transparency; they need leverage.

Governance must also go beyond technical fixes. It must include structural participation: unions, civil society groups, ethicists, and affected communities should all be part of decision-making around how algorithmic systems are designed and deployed. As Crawford (2021) warns, “AI governance must address the power imbalance between those who build systems and those who are subjected to them.”

Otherwise, algorithmic governance becomes governance without consent.

Ultimately, regulating AI in the workplace is not about stopping innovation. It’s about anchoring innovation in justice. Workers should not be the testing ground for experimental systems that would never be accepted in other domains like healthcare or criminal justice. The same ethical standards must apply.

Because when a machine decides whether you can pay your rent next month, the stakes are too high for silence.

We Need to Talk About Algorithms

In the rush to automate, we’ve been quietly outsourcing decisions that used to be grounded in human judgment, dialogue, and mutual accountability. Hiring, performance reviews, even terminations are no longer conversations. They have become computations. We are replacing relationships with ratings.

Amazon’s algorithmic management is not just an HR innovation; it is a warning sign. When the drive for efficiency becomes embedded in code, when surveillance is dressed up as optimisation, and when labor is reduced to data points, we are no longer simply talking about productivity. We are building a system that is profoundly dehumanising.

But it doesn’t have to be this way.

As users, workers, and citizens, we have a choice. We can continue to accept this quiet erosion of rights in the name of convenience, or we can demand a more just digital future.

Figure 4. “Make Amazon Pay” protest in Germany, symbolising opposition to the dehumanisation of work through automation. (Ver.di Union, 2022)

This means more than just writing ethical codes or holding AI summits. It means embedding structural protections into law. As Crawford (2021) insists, governance must address “not only the systems themselves, but also the power asymmetries they reflect and reinforce.” That includes stronger labor protections, clearer appeal mechanisms, and guaranteed human oversight in any decision that affects a person’s livelihood.

It also means changing the narrative. Algorithms are not neutral tools. They are political artefacts—products of social values, institutional interests, and economic priorities. When deployed without transparency or checks, they don’t just reflect existing inequalities—they amplify them (Noble, 2018).

To counter this, governance must be proactive, participatory, and rooted in human dignity. The UNESCO Recommendation on the Ethics of AI (2021) reminds us that automation should never come at the cost of fairness. AI systems must be designed with safeguards that “respect, protect, and promote human rights, fundamental freedoms, and human dignity.”

That includes the right to explanation, the right to contest decisions, and the right to not be managed solely by machines.

Because justice in the age of algorithms will not be automated. It will be demanded and built from the ground up. If the future of work is algorithmic, then the future of justice must be algorithm-resistant, context-aware, and fundamentally human.

Toward a Fairer Digital Future

Technology can improve efficiency, but it can also mask injustice. When we hand the “power of dismissal” to a system that has no face, no accountability, and no regard for the complexities of human life, we lose more than jobs: we lose an entire mechanism for negotiation, respect, and appeal.

We cannot return to the labor arrangements of the past, but the future of work can be designed to be fairer. If we choose to participate, scrutinise, and question, algorithmic governance can be people-centered rather than exploitative.

A truly progressive society cannot pursue only faster packing speeds; it must also pursue more humane decision-making. What we need is not a system that workers must passively adapt to, but one they help shape.

References

Bloomberg. (2021, June 28). Fired by bot at Amazon: “It’s you against the machine”. Bloomberg. https://www.bloomberg.com/news/features/2021-06-28/amazon-flex-drivers-are-being-fired-by-bots

European Union: Opinion of the European Central Bank of 29 December 2021 on a proposal for a regulation laying down harmonised rules on artificial intelligence (CON/2021/40). (2021). Asia News Monitor.

Goggin, G., Vromen, A., Weatherall, K., Martin, F., Webb, A., Sunman, L., & Bailo, F. (2017). Digital rights in Australia. University of Sydney. http://hdl.handle.net/2123/17587

Labour Heartlands. (n.d.). Amazon workers announce strike date in first ever UK walkout. https://labourheartlands.com/amazon-workers-announce-strike-date-in-first-ever-uk-walkout/

Levin, S. (2020, February 5). “You’re not a person”: Amazon warehouse workers push to unionize. The Guardian. https://www.theguardian.com/technology/2020/feb/05/amazon-warehouse-workers-union-new-york

Marwick, A. E., & boyd, d. (2018). Understanding privacy at the margins. International Journal of Communication, 12, 1157–1165.

Mashable. (n.d.). Amazon workers strike on Black Friday over working conditions. https://mashable.com/article/amazon-workers-black-friday-strike-europe-uk

Nissenbaum, H. (2015). Respecting context to protect privacy: Why meaning matters. Science and Engineering Ethics, 24(3), 831–852. https://doi.org/10.1007/s11948-015-9674-9

Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York University Press.

Portmann, E. (2021). Kate Crawford: Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence: ISBN: 978-0300209570, Yale University Press (6. April 2021), 336 S., € 21.99. Informatik-Spektrum, 44(4), 299–301. https://doi.org/10.1007/s00287-021-01385-5

Tribune Magazine. (2022, January). Amazon’s algorithm is human resources management tech dressed up as AI. https://tribunemag.co.uk/2022/01/amazon-algorithm-human-resource-management-tech-worker-surveillance

UNESCO. (2021). Recommendation on the ethics of artificial intelligence. https://unesdoc.unesco.org/ark:/48223/pf0000381137

Vincent, J. (2019, April 25). How Amazon automatically tracks and fires warehouse workers. The Verge. https://www.theverge.com/2019/4/25/18516004/amazon-warehouse-workers-productivity-firing-automation
