The world’s richest man, who calls himself a “free speech absolutist,” took control of a platform with hundreds of millions of users and brought about sweeping changes. (Reuters: Brian Snyder; Reuters: Carlos Barria)

The $8 Prank

On a November night in 2022, a verified Twitter account impersonating pharmaceutical giant Eli Lilly tweeted: “We are excited to announce insulin is now free.” It was fake. An impersonator had paid $8 for a blue checkmark, but the fallout was very real. Eli Lilly’s share price fell, ad campaigns were pulled, and with much of Twitter’s moderation staff already laid off, the platform was too hollowed out to react swiftly. The incident became a symbol of the chaos that followed Elon Musk’s $44 billion takeover of Twitter (since rebranded as “X”) just two weeks earlier.

A fake Eli Lilly account, verified with Twitter Blue, tweeted that insulin was free.

Musk had promised to champion free speech and reinvent the platform. The self-proclaimed “free speech absolutist” and world’s richest man took control of an app used by hundreds of millions—and swiftly set about reshaping it. Within months, Twitter underwent mass layoffs, rapid policy shifts, and controversial feature rollouts, raising urgent questions about what Musk’s version of Twitter means for privacy, safety, and digital rights in the online public square.

This blog post unpacks Musk’s governance of Twitter through that lens—examining how his changes have affected users’ privacy and security, and what they signal for the broader digital rights landscape. We’ll start with the facts, then move into the deeper context, arguing that beneath the rhetoric of “free speech,” Musk’s Twitter has often undermined the very protections users depend on.

Musk’s Free Speech Vision: A Double-Edged Sword

Elon Musk has cast himself as a staunch defender of free expression. He lifted bans on controversial users like Donald Trump, stopped enforcing the platform’s COVID-19 misinformation policy, and removed explicit protections for transgender users from its hateful conduct policy (CNN, 2023). To Musk and his supporters, these moves represent a win for open dialogue. But critics warn that loosening content moderation opens the floodgates to harassment and the unchecked spread of falsehoods.

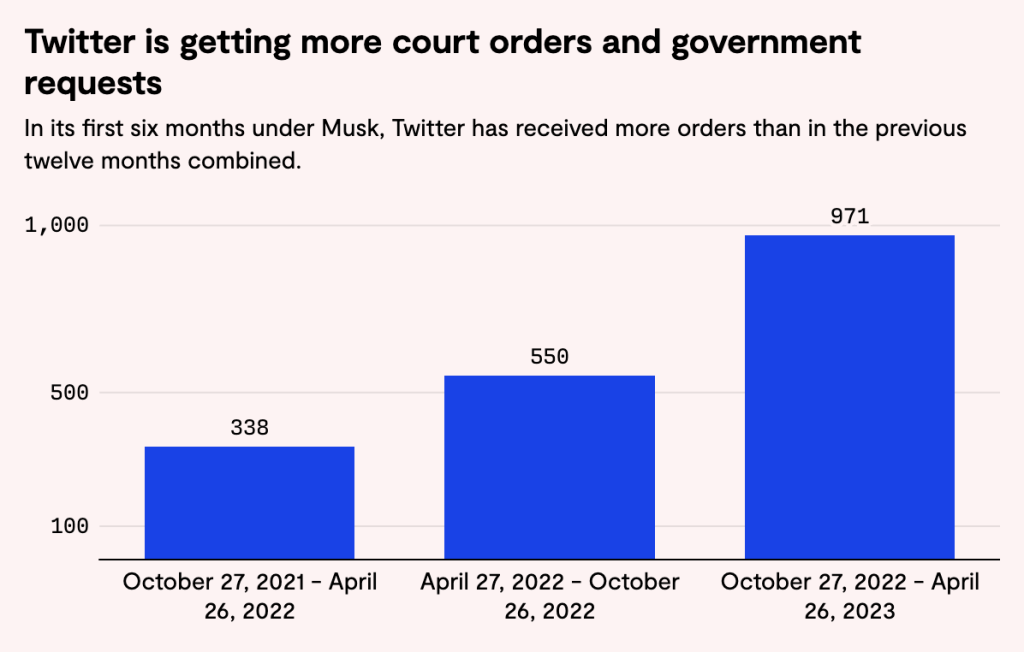

Meanwhile, Musk’s approach reveals stark contradictions. Even as he championed free speech publicly, Twitter’s compliance with government censorship demands surged under his leadership. In the first six months after the takeover, Twitter received 971 government requests for content takedowns or user data disclosures and complied with 808 of them (roughly 83%), a sharp rise from its previous compliance rate of about 50% (Brandom, 2023). Many of these requests came from governments with authoritarian leanings, such as Turkey and India. In Turkey, Twitter even agreed to restrict content to avoid being blocked nationwide.

These contradictions expose a deeper tension in Musk’s version of free speech: whose voices are amplified, and whose are silenced? While restoring high-profile accounts, Musk also suspended journalists who displeased him, including reporters who had covered an account that tracked his private jet using publicly available flight data, which he framed as a security threat. This selective enforcement raises concerns about arbitrary rule-making and highlights how personal preferences can dictate the boundaries of acceptable speech.

For all the lofty rhetoric, content moderation under Musk appears ad hoc and opaque. Digital rights advocates argue that under his leadership, Twitter’s rules are guided more by impulse than principle. Scholar Nicolas Suzor (2019) puts it bluntly: platforms like Twitter wield “broad discretion to make and enforce rules in any way they see fit,” often with little accountability or transparency (pp. 10–24). Musk’s Twitter only reinforces these concerns, as policies are announced via tweets or quietly updated web pages, leaving users and even employees to guess what is or isn’t allowed on any given day.

Chart: Rest of World. Source: Lumen Database.

Privacy and Security: Chaos and Compliance Cracks

Privacy, the cornerstone of digital rights, has come under intense threat since Elon Musk took over Twitter. Within weeks, key privacy and security officers resigned, citing fears that Musk’s decisions might breach an existing agreement with the U.S. Federal Trade Commission (FTC) (The Verge, 2022). That agreement, reached in 2022 when Twitter paid a $150 million penalty for using phone numbers and email addresses collected for account security to target ads, required the company to submit to strict privacy oversight. After the acquisition, however, internal safeguards reportedly crumbled. Engineers were allegedly instructed to grant system access to unvetted external parties, including Musk’s personal contacts. A Justice Department filing later described the situation as “chaotic,” with unclear procedures and ignored protocols (Milmo, 2023). Musk reportedly bypassed standard security reviews in his rush to roll out new features, exposing user data to heightened risk.

The FTC launched an investigation into whether Musk’s Twitter had violated the agreement. Instead of cooperating, his team pushed back, dismissing the agency’s inquiries as excessive. This resistance sparked alarm over whether Musk was willing to prioritize user privacy at all. With top privacy officials gone, compliance mechanisms dismantled, and regulatory oversight openly challenged, users now face growing uncertainty: Is their data still safe on X? Experts warn that the platform may be more vulnerable than ever. As media theorist Terry Flew (2021) argues, protecting personal data is now central to platform governance (pp. 72–79). Yet Musk’s apparent disregard for privacy obligations underscores how cost-cutting and disruption can put user safety at serious risk.

The Decline of Safety on X

Under Elon Musk’s leadership, X (formerly Twitter) has taken a series of controversial steps that many argue have compromised user safety. In early 2023, the platform announced that SMS-based two-factor authentication (2FA) would be restricted to paying subscribers (Tanna, 2023). Non-subscribers were given 30 days to switch to another method, such as an authenticator app or a security key, or have 2FA turned off on their accounts. Musk defended the move as a cost-cutting, anti-spam measure, but cybersecurity experts called it reckless, arguing that it effectively put a basic form of account protection behind a paywall.

And that wasn’t the only red flag. The introduction of paid verification triggered a wave of impersonation. Anyone could buy a blue checkmark, enabling fake accounts to pose as celebrities, corporations, and even government agencies. It wasn’t just satire—some impersonations, like the fake Eli Lilly account that falsely announced free insulin, caused real-world damage.

Internally, Twitter’s safety infrastructure crumbled. Musk slashed over half the company’s workforce and dissolved the Trust and Safety Council—a group of outside experts advising on harassment, child exploitation, and suicide prevention. With moderation capacity gutted, the platform became more vulnerable to abuse and misinformation. Critics saw this as a clear shift toward autocratic decision-making over collaborative governance.

Researchers at UC Berkeley found that the weekly rate of hate speech on the platform was roughly 50% higher in the months after Musk’s takeover than before it, with a marked rise in attacks targeting marginalized groups (Hickey et al., 2025; Manke, 2025). Musk claimed that impressions of hate content had dropped, but the peer-reviewed data told another story: a sustained spike in offensive and targeted posts. In this light, “safety” isn’t just about passwords; it’s about whether people can exist online without fear of abuse.

The Governance Problem: Who Makes the Rules at X?

One of the most striking features of Elon Musk’s reign over Twitter—now rebranded as X—is how vividly it illustrates a broader issue in platform governance: the unchecked power of private individuals over public discourse.

Before Musk’s takeover, Twitter at least maintained a set of established policies, enforcement protocols, and a modicum of transparency. Flawed though they were, these systems offered some consistency. After Musk assumed control, however, these mechanisms were swiftly dismantled. Platform policy began to emerge not from formal channels but from late-night tweets and off-the-cuff public polls. Famously, he used a Twitter poll to decide whether to reinstate Donald Trump’s account—or even whether he should step down as CEO. While such polls created a façade of user participation, the final say always remained Musk’s alone.

As legal scholar Nicolas Suzor (2019) observed, social media platforms wield enormous power over our ability to speak, yet they operate with almost no obligation to be democratic or transparent (pp. 15–16). Musk’s Twitter exemplifies this danger. With governance centralized in the hands of a single figure, decisions became erratic and personal. In one case, the platform abruptly banned links to competing services like Mastodon without warning—only to reverse the decision a day later following public outcry.

This kind of impulsive rule-making not only breeds confusion, it undermines user trust. The case of X shows what happens when platforms act more like private fiefdoms than public forums: governance becomes volatile, accountability disappears, and the boundaries of free expression are redrawn on a whim.

The Shift Toward Decentralization

Interestingly, the chaos surrounding X (formerly Twitter) has pushed many users toward decentralized alternatives like Mastodon and Bluesky. Since 2022, journalists, academics, and activists have begun establishing their presence on these community-driven platforms, seeking digital spaces built on transparency and shared governance. Unlike X’s top-down model, these alternatives aim to distribute control more evenly between users and communities. This shift highlights a broader understanding of digital rights—one that goes beyond free expression to include the freedom to choose platforms not dominated by monopolies. Yet despite growing interest in decentralized networks, X remains a central arena for global conversation. Its sheer scale and influence make it difficult to replace, meaning Musk’s decisions continue to shape digital public life in profound ways.

The New Reality: Digital Rights in the Musk Era

Photo illustration: CNN/Jordan Vonderhaar/Bloomberg/Getty Images/Jonathan Ernst/Reuters/Michel Euler/AP

In just over a year, Musk has dramatically reshaped the governance of one of the world’s largest social platforms, with far-reaching consequences for privacy, safety, and digital rights. On one hand, he delivered on parts of his promise to “open up” the platform, loosening content moderation rules and giving selected journalists access to internal communications about past moderation decisions (the so-called “Twitter Files”). But the costs of his vision are mounting.

- Privacy Erosion: Internal safeguards and compliance processes were disrupted, leading regulators to worry that X is violating privacy commitments. Users are less confident that their data (emails, phone numbers, DMs) will be protected. Musk’s aversion to regulation highlights how platform owners’ priorities (e.g., rapid innovation, cost-cutting) may put user privacy protections on the back burner.

- Security Setbacks: Instead of strengthening security, Musk’s changes have often weakened it, from charging for SMS-based two-factor authentication to enabling impersonation scams. Reports of harassment and hate speech spiked, while engineering layoffs led to outages and technical glitches. Trust in the platform’s basic security eroded.

- Free Speech vs. Manipulation: Musk’s absolutist stance has revived previously silenced voices, but it has also amplified harmful content that silences others. His selective enforcement – suppressing speech when it conflicts with business interests or political expediency – reveals that freedom of speech often depends on who’s in power, rather than any consistent principles.

- Platform Power Exposed: Perhaps most importantly, Musk’s ownership highlights the enormous, unchecked influence of tech moguls over digital public life. As Flew (2021) warns, platform power encompasses market dominance, content control, and data ownership (pp. 79–86).

Musk acquired a public-facing service and reshaped it in his image—with no democratic process. One man’s rules replaced what little collective governance Twitter once had. Ultimately, this saga offers a stark reminder: today’s digital rights are less about enforceable protections and more about the whims of a billionaire CEO.

Conclusion: A Cautionary Tale

Elon Musk’s takeover of Twitter has unfolded as a real-time experiment in platform governance, showing what happens when the rules of social media are rewritten at the whim of a single owner. For users, the Musk era has been a double-edged sword: greater freedom for some has come at the cost of rising chaos, harm, and regulatory uncertainty. It’s a stark reminder that digital rights—privacy, safety, and freedom of expression—can be profoundly shaped, for better or worse, by the policies of privately owned platforms. Musk once described Twitter as the “de facto public town square.” If that’s true, then safeguarding the public interest—not just a billionaire’s vision—becomes critically important.

As we navigate the Musk-Twitter era and beyond, one lesson stands out: platform governance needs stronger accountability. This could take the form of tighter external regulation to curb the unchecked power of tech giants, such as the EU’s Digital Services Act, which imposes transparency, content-moderation, and risk-assessment obligations on large platforms and works alongside data protection law such as the GDPR. It could also involve platforms adopting more transparent, inclusive decision-making processes, something Musk has gestured toward with Twitter polls, though meaningful power-sharing has yet to materialize. At the very least, the upheaval at X has sparked greater public awareness of digital rights, fueling debate over what we should expect from the companies that mediate our online lives.

Finally, Musk has undoubtedly left his mark on Twitter—but perhaps not in the way he intended. While he championed free speech, he also unintentionally underscored the importance of privacy protections, thoughtful safety protocols, and balanced rulemaking for sustaining healthy digital communities. The Musk era at Twitter is still unfolding, but it already stands as a cautionary tale in tech governance—a vivid illustration of how fragile our digital rights can become when concentrated in the hands of one individual. The current state of affairs on X reflects this uneasy truth: a landscape of new possibilities and new risks, born from a high-stakes experiment in who gets to write the rules of the internet.

References

Flew, T. (2021). Regulating Platforms. Cambridge: Polity Press.

Suzor, N. P. (2019). Lawless: The Secret Rules That Govern Our Digital Lives. Cambridge: Cambridge University Press.

Hickey, D., Singh, L., et al. (2025). X under Musk’s leadership: Substantial hate and no reduction in inauthentic activity. PLOS ONE, 20(2), e0313293. https://doi.org/10.1371/journal.pone.0313293

Milmo, D. (2023). Twitter chaos after Elon Musk takeover may have violated privacy order, DoJ alleges. The Guardian.

Brandom, R. (2023). Twitter is complying with more government demands under Elon Musk. Rest of World.

Dang, S. (2022). Twitter dissolves Trust and Safety Council. Reuters.

Tanna, S. (2023). Twitter to charge users to secure accounts via text message. Reuters.

CNN. (2023). Twitter removes transgender protections from hateful conduct policy. Published on CBS News Sacramento.

Manke, K. (2025). Study finds persistent spike in hate speech on X. Berkeley News.

Notopoulos, K. (2023). The guy behind a fake insulin tweet celebrated Eli Lilly’s price reduction. BuzzFeed News.

Wikipedia. (2023). Twitter Blue verification controversy (section on impersonation). Retrieved November 2023, from Wikipedia.
