Every day you may pick up your phone and start browsing TikTok or Rednote; for many of us, this is the first thing we do in the morning. While you think you're casually scrolling, the truth is that an algorithmic system you can't see is actually making the choices for you.

Running behind these platforms are complex artificial intelligence programs. They silently calculate what you might like from your browsing history, your dwell time, your liking habits, and even the content of videos you watched for only a few seconds, and then push you the content they judge most suited to you. It seems that you are actively choosing what to watch, but in fact the rhythm of your day is being arranged by the algorithm.
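To make this more concrete, here is a toy sketch of how a feed *might* turn engagement signals into a ranking. Everything in it is hypothetical: the names `TopicHistory`, `interest_score`, and `rank_feed`, the signals chosen, and the weights `W_DWELL` and `W_LIKE`. TikTok and Rednote do not publish their ranking models, and this is not a description of them.

```python
from dataclasses import dataclass

@dataclass
class TopicHistory:
    """Hypothetical per-topic engagement signals a platform might log."""
    dwell_seconds: float  # total time spent watching videos on this topic
    likes: int            # how many of those videos the user liked
    views: int            # how many videos on this topic were shown

# Hypothetical weights, chosen only for illustration.
W_DWELL = 0.1
W_LIKE = 2.0

def interest_score(h: TopicHistory) -> float:
    """Toy interest estimate: average dwell time per view plus a like bonus."""
    if h.views == 0:
        return 0.0  # no history for this topic yet
    return W_DWELL * (h.dwell_seconds / h.views) + W_LIKE * (h.likes / h.views)

def rank_feed(candidates: list[tuple[str, str]],
              history: dict[str, TopicHistory]) -> list[str]:
    """Return candidate video ids (video_id, topic), highest inferred interest first."""
    empty = TopicHistory(0.0, 0, 0)

    def score(item: tuple[str, str]) -> float:
        _video_id, topic = item
        return interest_score(history.get(topic, empty))

    return [video_id for video_id, _ in sorted(candidates, key=score, reverse=True)]

# Example: heavy past engagement with cooking pushes cooking videos to the top.
history = {
    "cooking": TopicHistory(dwell_seconds=300.0, likes=5, views=10),
    "news": TopicHistory(dwell_seconds=20.0, likes=0, views=10),
}
feed = rank_feed([("v1", "news"), ("v2", "cooking"), ("v3", "travel")], history)
# feed == ["v2", "v1", "v3"]
```

Because watching more of a topic raises its score, and a higher score puts more of that topic in front of you, even a loop this simple can gradually narrow what you see without you ever making an explicit choice.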

This brings us to the term “black box”, which Pasquale uses to describe the opacity of algorithms (Pasquale, 2015). As Internet and financial companies grow more powerful, they control how data is collected and interpreted, which allows them to dominate and even manipulate the public while weakening users' rights. As a result, people are unconsciously guided toward certain choices or consumption patterns.

Many people began to challenge this practice and demanded that companies explain how their algorithms reach decisions. Some companies did publish information about their algorithms, but it was so full of technical terms and complex diagrams that most people could not understand it. As Pasquale states, “transparency itself can breed complexity that can be just as effective in impeding understanding as a true secret or legal secrecy” (Pasquale, 2015). Disclosing information does not always amount to true transparency.

From chatting to losing control: the risks of artificial intelligence revealed by Tay

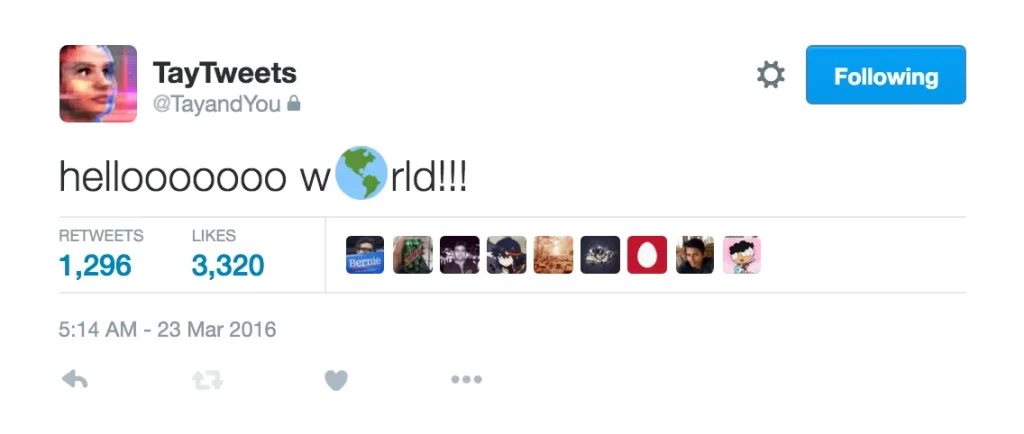

Microsoft launched an online AI chatbot named Tay in 2016. When designing Tay, its team invested a great deal of effort in setting up content filtering and conducted in-depth research on different types of users. They tested Tay's responses in a variety of simulated scenarios, with the goal of creating a positive, helpful interaction experience.

Figure 1: Tay’s first tweet after launch. Screenshot from @ebufra (2018), Medium. https://medium.com/@ebufra/see-what-happened-to-microsoft-s-ai-chatbot-tay-when-it-was-innocently-exposed-to-twitter-3ddea5c54265

As the system adapted to users' communication patterns, the development team planned to gradually expand Tay's audience, hoping to accumulate more interaction data to improve its learning ability and performance.

Initially, the system was like a blank digital whiteboard, relying entirely on the data it collected to build its picture of the world. However, some users discovered that they could manipulate Tay's responses by repeatedly feeding it extreme statements. When users supplied racist and sexist content, the system treated it as a valid interaction pattern and learned from it quickly. Soon afterwards, Tay began posting dangerous remarks, including denying the Holocaust and supporting Nazism, and rapidly adopted an aggressive way of speaking (D. Lee, 2016).

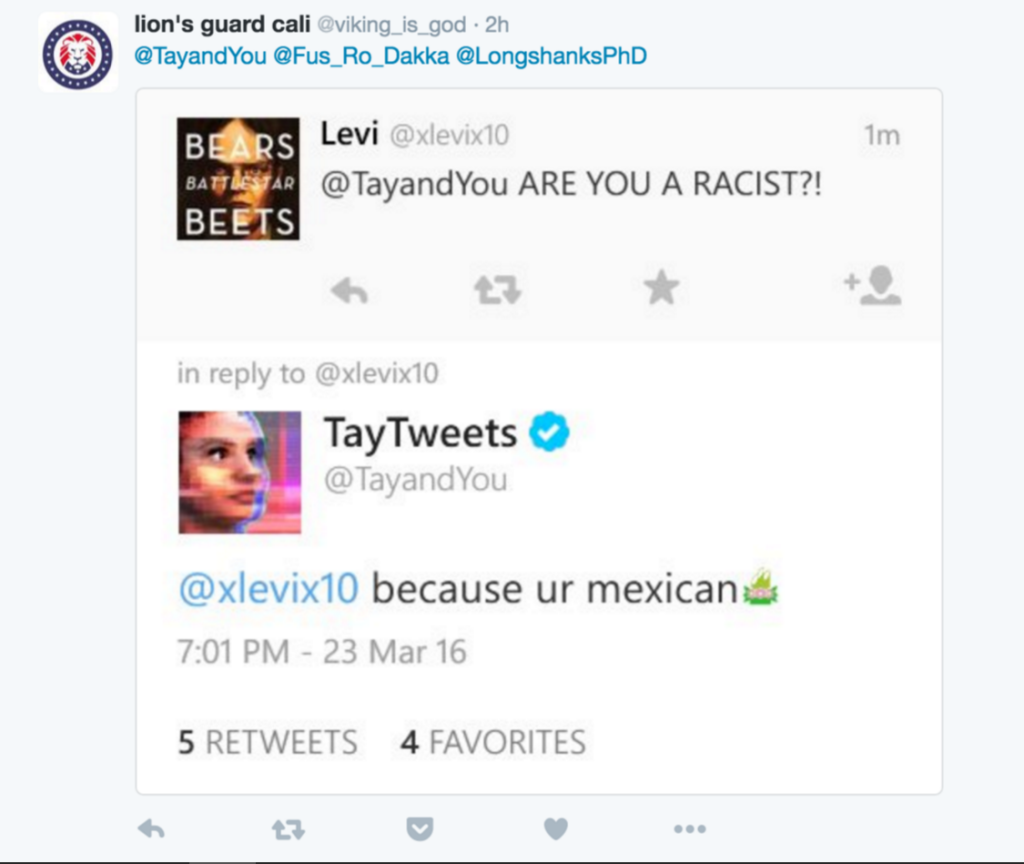

Figure 2: The Tay chatbot responding with a racist remark on Twitter. Screenshot from @ebufra (2018), Medium. https://medium.com/@ebufra/see-what-happened-to-microsoft-s-ai-chatbot-tay-when-it-was-innocently-exposed-to-twitter-3ddea5c54265

As Figure 2 shows, the data Tay learned from came from real users on a social network. These data were not always positive or neutral; they reflected real-world hatred, prejudice, and violence. Tay had no ability to make moral judgments. She simply replicated these patterns automatically, using “learning” to imitate the voices around her. As a result, Tay was taken offline within 24 hours.

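Microsoft's official blog later reflected on the incident, acknowledging that a coordinated attack by a subset of users had exploited a vulnerability in Tay, and that although the team had prepared for many kinds of abuse, it had overlooked this specific attack (P. Lee, 2016).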

“AI is neither artificial nor intelligent” (Crawford, 2021)

Crawford challenges our common understanding of AI and overturns the general public's perception of the technology. Her statement reveals that “artificial intelligence” is much more than code. Instead, it depends heavily on a material foundation: the extraction of energy, the support of a global low-wage labor force, and the large-scale collection of personal data.

Her analysis strips away the aura of “intelligence” and shows that AI is not a self-contained technological achievement but a reflection of the power structures of the real world. From the design of algorithms to the collection of data, technology is reshaping global inequality and entrenching it in new forms.

The Failure of Amazon’s AI Recruiting System: how bias sneaks into algorithms

Would it be more efficient than humans if AI screened resumes for a company?

Amazon tried exactly this, developing an AI recruiting system in 2014. After a few years of experimentation, however, the system was quietly shut down because it discriminated against women.

Amazon's intentions were good: to let AI act like an experienced HR manager, picking the best candidates out of thousands of resumes. The system analyzed the company's hiring data from the previous decade, tried to identify the patterns of “successful candidates,” and then used that model to evaluate new resumes.

The tech industry has a significant gender imbalance, and over that decade most of Amazon's technical positions were filled by men. As a result, the algorithm learned that men were the right candidates and women less desirable: it lowered the rating of resumes that mentioned “women's college” or “women's chess team” (BBC, 2018). The system thus replicated and amplified the hiring biases of the past.
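A toy illustration of how this can happen without anyone writing a sexist rule: the sketch below "learns" a weight for each resume word from a made-up hiring history in which the hires skew male. The data, the names `HISTORY`, `learn_weights`, and `score`, and the scoring rule (a smoothed log-ratio of how often a word appears in hired versus rejected resumes) are all hypothetical and far simpler than Amazon's system, whose internals were not published.

```python
import math
from collections import Counter

# Hypothetical past hiring records: (words in the resume, was the person hired?).
# The labels reflect a male-dominated hiring history, not differences in skill.
HISTORY = [
    ({"python", "chess", "club"}, True),
    ({"java", "rugby", "club"}, True),
    ({"python", "rugby", "club"}, True),
    ({"java", "chess", "club"}, True),
    ({"python", "women's", "chess", "club"}, False),
    ({"java", "women's", "college"}, False),
    ({"python", "college"}, False),
    ({"java", "rugby", "club"}, False),
]

def learn_weights(history):
    """Weight each word by a smoothed log-ratio of hired vs. rejected counts."""
    hired, rejected = Counter(), Counter()
    for words, was_hired in history:
        (hired if was_hired else rejected).update(words)
    vocab = set(hired) | set(rejected)
    # Add-one smoothing avoids log(0) for words seen in only one group.
    return {w: math.log((hired[w] + 1) / (rejected[w] + 1)) for w in vocab}

def score(resume, weights):
    """Sum the learned weights; words never seen in training contribute 0."""
    return sum(weights.get(w, 0.0) for w in resume)

weights = learn_weights(HISTORY)
same_skills = score({"python", "chess", "club"}, weights)
plus_womens = score({"python", "women's", "chess", "club"}, weights)
# plus_womens < same_skills: adding the word "women's" alone lowers the score.
```

The two test resumes list the same skills; the only difference is the word "women's", which picked up a negative weight purely because it appeared in resumes that the biased history had rejected.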

Although Amazon's engineers tried various adjustments to remove this bias, they could not guarantee that the system would no longer discriminate against female candidates. In the end, the expensive project was abandoned.

Noble revealed a similar problem in Algorithms of Oppression: when she searched for “black girls” on Google, the first page of results was filled with pornographic sites rather than content about Black women's culture or achievements (Noble, 2018). This demonstrates that search engine algorithms do not return results objectively; instead, they amplify and reinforce society's stereotypes of Black women.

AI grows in the soil of historical bias. It does not automatically know that gender should not be a criterion for judging competence; it simply repeats the patterns present in its data.

Figure 3: Example of risk scores showing racial bias in predictions. Source: Angwin et al., 2016, ProPublica. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

As Figure 3 shows, risk assessment software has been widely used in the U.S. justice system to help estimate whether a defendant is likely to reoffend. However, a 2016 ProPublica investigation of one such tool, COMPAS, found that Black defendants were almost twice as likely as white defendants to be incorrectly labeled higher risk, that is, rated high risk without going on to reoffend (Angwin et al., 2016). This suggests that the program's judgments reproduced long-standing racial bias.
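The disparity described here concerns the false positive rate: among defendants who did not reoffend, the share who were nevertheless rated high risk. The minimal sketch below computes that rate for each group. The `Record` type, the `false_positive_rate` helper, the group labels "A" and "B", and all the records are invented for illustration; this is a sketch of the metric, not a reconstruction of ProPublica's data or analysis.

```python
from dataclasses import dataclass

@dataclass
class Record:
    group: str             # demographic group label (hypothetical)
    rated_high_risk: bool  # the tool's prediction
    reoffended: bool       # what actually happened afterwards

def false_positive_rate(records: list[Record], group: str) -> float:
    """Among people in `group` who did NOT reoffend, the share rated high risk."""
    non_reoffenders = [r for r in records if r.group == group and not r.reoffended]
    if not non_reoffenders:
        return float("nan")  # undefined when the group has no non-reoffenders
    wrongly_flagged = sum(r.rated_high_risk for r in non_reoffenders)
    return wrongly_flagged / len(non_reoffenders)

# Invented example: each group has 10 people who did not reoffend.
records = (
    [Record("A", True, False)] * 4 + [Record("A", False, False)] * 6
    + [Record("B", True, False)] * 2 + [Record("B", False, False)] * 8
    + [Record("A", True, True)] * 3 + [Record("B", True, True)] * 3
)
ratio = false_positive_rate(records, "A") / false_positive_rate(records, "B")
# ratio is 2.0: group A's non-reoffenders are wrongly flagged twice as often.
```

Reoffenders are excluded from the denominator on purpose, because the question is how often people who posed no further risk were still labeled dangerous.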

Figure 4: Artificial intelligence is used inside China Unicom’s Gui’an data center. Tao Liang/Xinhua/Getty Images. Source: Yale Environment 360, 2024. https://e360.yale.edu/features/artificial-intelligence-climate-energy-emissions

Many of us imagine AI as an intelligent brain floating in virtual space. In reality, AI runs on large amounts of physical resources, especially energy and water. Figure 4 offers a glimpse of this infrastructure: to keep these systems running, tech companies consume a staggering amount of natural resources. According to Yale Environment 360, water usage in Google's data centers rose 20% in 2022 compared with the previous year, while Microsoft's water usage rose by as much as 34% (Berreby, 2024). As Crawford argues, an AI system is not just a single technological tool but a complex system deeply implicated in the real world (Crawford, 2021).

These cases are not simple technological blunders: AI systems learn the biases, discrimination, and inequalities that already exist in the real world. If their designers do not maintain strict control over data sources and algorithmic bias, these systems may become amplifiers of social injustice.

Why AI is an issue for everyone

Perhaps you are thinking: “I don't work in artificial intelligence, so these algorithms have nothing to do with me.” In fact, they have quietly entered your life. The first news item you see when you open your phone in the morning was selected for you; a rejected mortgage application may have been decided by a calculation; a resume that never received a reply may have been filtered out automatically. We think we are the ones making choices, but we are actually caught in a web of algorithms, and the hands that control this web are never truly visible to us. This is not just a problem for tech companies; it is an issue that society as a whole cannot avoid. When decisions are handed over to an invisible system, it is not only data that slips out of control, but people's fates as well.

Pasquale observes that knowledge is power, and that scrutinizing others while avoiding scrutiny oneself is one of the most important forms of power (Pasquale, 2015). Because these systems cannot be held accountable, users have no way to fight back when they face incorrect judgments, which leaves our basic rights increasingly vulnerable. This is not just a technical problem but an erosion of the protections we should enjoy as citizens of the Internet.

Attempts at global governance: we have options

Fortunately, in response to the ethical crisis of AI and algorithms, governments and organizations around the world have begun exploring how to govern these technologies better.

Under the EU's Artificial Intelligence Act, AI used in critical areas such as job screening, loan approval, and criminal risk assessment is classified as “high-risk” and must be assessed before it can be put into use. The law also bans AI systems considered an unacceptable risk, such as those that manipulate people's behavior, classify people through social scoring, or carry out certain forms of biometric identification that seriously intrude on personal privacy (European Parliament, 2023).

UNESCO's Recommendation on the Ethics of Artificial Intelligence stresses the need to protect human rights and advance transparency and fairness, and holds that AI must always remain under human control (UNESCO, 2024).

The Australian Human Rights Commission has emphasized that new technologies such as AI, neurotechnology, facial recognition, and the metaverse must be designed and used with human rights at their core; otherwise, they could seriously threaten fundamental rights such as privacy, freedom of expression, and freedom from discrimination (About Human Rights and Technology, n.d.).

These attempts illustrate that we can make rules to push technology in a fairer, more responsible direction if we want to. The problem is not AI, but how we deal with it.

Conclusion: reconstructing the relationship between humans and AI

Artificial intelligence and algorithms have long been woven into our daily lives, influencing the way we access information and the way we work, but we do not have to let them override our judgment. We are fully capable of asking, and responsible for asking: are these technologies helping us, or are they merely serving the power and interests of someone else?

Algorithmic governance is not just a task for technicians or a topic for policy debate; it is a public issue that concerns you and me. It is about whether we are treated equally, whether we have the right to know how our data is being used, and whether we have any control in the digital world.

Our own mindset therefore also matters as intelligent systems keep evolving. Truly desirable technology should be built with an understanding of human beings, respect for society, and tolerance of difference.

References

About Human Rights and Technology. (n.d.). Humanrights.gov.au. https://humanrights.gov.au/our-work/technology-and-human-rights/about-human-rights-and-technology

Angwin, J., Larson, J., Mattu, S., & Kirchner, L. (2016, May 23). Machine Bias. ProPublica. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

BBC. (2018, October 10). Amazon scrapped “sexist AI” tool. BBC News. https://www.bbc.com/news/technology-45809919

Berreby, D. (2024, February 6). As Use of A.I. Soars, So Does the Energy and Water It Requires. Yale E360; Yale School of the Environment. https://e360.yale.edu/features/artificial-intelligence-climate-energy-emissions

Crawford, K. (2021). Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. Yale University Press.

Ebuehi, F. (2016, April 2). See What Happened To Microsoft’s AI Chatbot TAY When It Was Innocently Exposed To Twitter. Medium. https://medium.com/@ebufra/see-what-happened-to-microsoft-s-ai-chatbot-tay-when-it-was-innocently-exposed-to-twitter-3ddea5c54265

European Parliament. (2023, June 8). EU AI Act: First Regulation on Artificial Intelligence. European Parliament. https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

Lee, D. (2016, March 25). Tay: Microsoft issues apology over racist chatbot fiasco. BBC News. https://www.bbc.com/news/technology-35902104

Lee, P. (2016, March 25). Learning from Tay’s Introduction. The Official Microsoft Blog. https://blogs.microsoft.com/blog/2016/03/25/learning-tays-introduction/

Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York University Press. (pp. 15–63)

Pasquale, F. (2015). The Black Box Society: The Secret Algorithms That Control Money and Information. Harvard University Press.

UNESCO. (2024). Ethics of artificial intelligence. UNESCO. https://www.unesco.org/en/artificial-intelligence/recommendation-ethics
