As the internet has developed, social media has often been regarded as a product of democratic development, because it gives people a free space to share their views and ideas. People can access diverse information more easily and quickly. Most importantly, the internet has created a new form of public participation in which everyone has an equal opportunity to take part in social discussion (Jorgensen & Zuleta, 2020). As Barlow (1996) stated, people should be able to express their beliefs freely wherever they are, without fear of being silenced or forced into conformity. Unfortunately, in real cyberspace, hate speech, harassment, and extremism are steadily eroding online spaces.

What are hate speech and online harms?

Hate speech is not simply offensive language but a form of expression that causes substantial harm, which may appear immediately or accumulate over a long period. It typically targets vulnerable groups defined by shared characteristics, often ones that cannot be changed, such as race or gender. Those who engage in it attach socially disliked traits to the target group, explicitly or implicitly, and portray that group as a public enemy in order to justify their hatred (Sinpeng et al., 2021).

Online harm takes several forms. Cyberbullying involves attacking children or adolescents through online platforms, causing them emotional distress. Adult cyber abuse targets individuals aged 18 and over and causes physical or psychological harm through the dissemination of harmful content. Image-based abuse involves sharing, or threatening to share, private images without consent, which violates personal privacy. Online harm also includes the dissemination of illegal and restricted content, such as child sexual abuse material, terrorist material, or extreme violence (Office of the eSafety Commissioner, n.d.). Much of this content is illegal, and it also poses a serious threat to social stability. In short, the misuse of the internet’s capacity for rapid dissemination has caused varying degrees of harm to many victims.

The Collapse of Utopia in Cyberspace: The Dilemma of Freedom of Speech

Some users regard platforms such as Facebook and Instagram as guarantors of free speech. In 2012, Tony Wang, then general manager of Twitter UK, described the platform as “the free speech wing of the free speech party” (Halliday, 2012). However, with events such as #Gamergate, negative ideas spread widely on social media. After many groups were attacked and harassed online, users began to question whether the ideal of free expression was workable, because some people were using the internet as a means of spreading hatred. Massanari (2017) suggests that the design and governance of social media platforms may contribute to toxic technocultures: algorithms and profit-driven business models make it more likely that hateful content will be surfaced and widely disseminated.

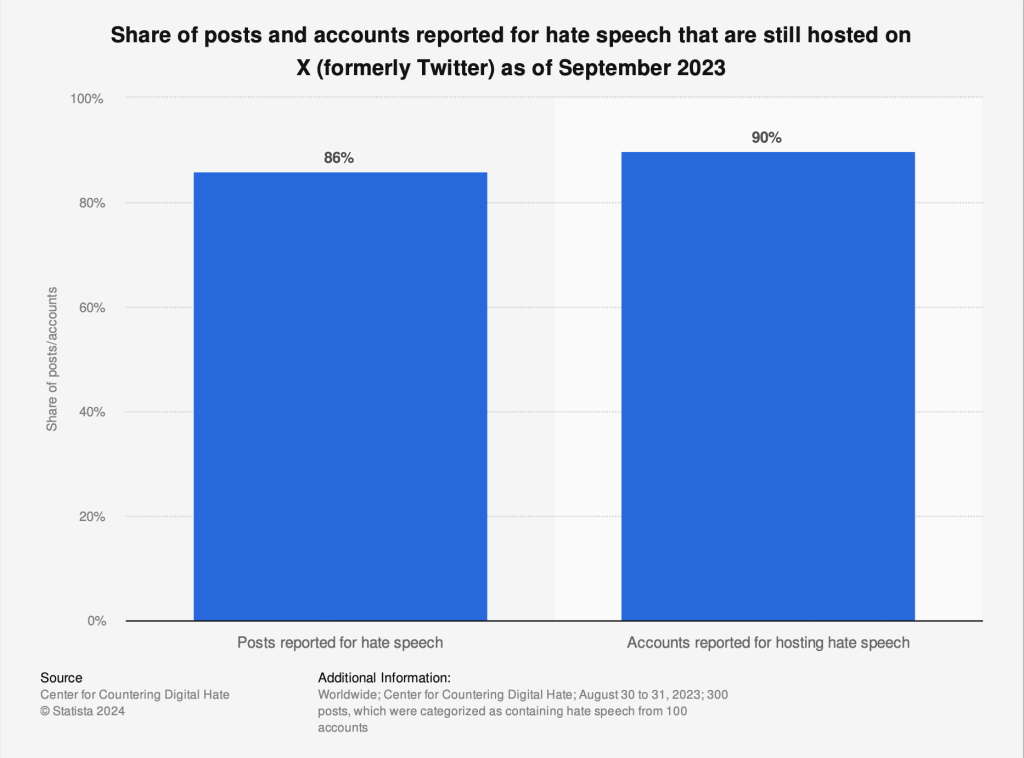

Data show that, as of September 2023, the majority of posts reported for hate speech on X (formerly Twitter) remained on the platform, and 90% of accounts reported for spreading hate speech were still active. This suggests that X has not effectively moderated hateful content on its platform (Center for Countering Digital Hate, 2023).

Case study – Gamergate Campaign: The actual harms caused by hate speech

In 2014, Eron Gjoni published a blog post about his breakup with his ex-girlfriend, game developer Zoe Quinn. According to Stuart (2014), Gjoni claimed that Quinn had frequently lied and suggested that the success of her game “Depression Quest” was partly due to improper relationships with game journalists. Within a short time, many people who described themselves as concerned about ethics in games journalism began using the hashtag “#Gamergate” to criticize the gaming media and voice their dissatisfaction. The hashtag soon became a tool for attacking Quinn and other female game developers, the feminist critic Anita Sarkeesian, and their male allies (Massanari, 2017). Sarkeesian received a threat of a large-scale violent attack against women at a university where she was due to speak, and the game developer Brianna Wu was subjected to abuse from Twitter users. Beyond these threats, the victims’ personal information, such as home addresses and telephone numbers, was published online. As a result, Quinn had to move out of her home and cancel all her public appearances. As the campaign escalated, many other opponents of Gamergate were also continuously harassed, and they expressed disappointment that the harassers were neither stopped nor punished (Greengard, 2025).

Milo Yiannopoulos, a supporter of Gamergate, argued that the campaign was an attempt by feminists and left-wing online activists to use “victim culture” to restrict public discussion. He questioned whether feminists were truly in danger and claimed that they had distorted video game culture. In short, he framed Gamergate as a struggle to defend free speech, resist feminist intervention, and oppose political correctness (Ng, 2015). Others strongly disagreed. According to Wingfield (2014), Gamergate supporters claimed to be concerned about ethics in games journalism, yet most of the attacks targeted women, especially those who openly criticized sexism in the gaming industry. This harassment also reflects long-standing male dominance in the gaming industry and in player culture: some male players respond with rejection and hostility because they feel alienated as game culture becomes more diverse. The gaming industry as a whole therefore needs to take effective measures to create a more inclusive and secure online environment for its users.

Responsibilities of the platform: Shortcomings in the regulatory system

In today’s cyberspace, social media platforms face not only hate speech but also the large-scale spread of other harmful content, such as misinformation, terrorist material, and obscenity. This highlights the need for platforms to take responsibility, engage in continuous self-regulation, and raise their standards. The House of Commons Home Affairs Committee (2017) pointed out that companies and institutions advertising on YouTube found their advertisements displayed alongside offensive content, including videos linked to terrorist organizations, because Google exercised little basic scrutiny over ad placement. This suggests that a large amount of extremist content still escapes platforms’ attention and is allowed to spread. By contrast, Google can remove YouTube videos for copyright infringement quickly, yet hate speech and illegal content have not been addressed with comparable urgency.

Similar problems frequently arise on Facebook, where there is a clear tension between the platform’s commercial goals and the public good. If Facebook made its algorithm safer, user engagement might fall: people would spend less time on the platform, and ad click-through rates would decline. Notably, Facebook’s own internal research found that Instagram’s negative impact on teenagers amounted to real harm rather than a minor effect, and that Instagram was significantly more harmful than other platforms (Pelley, 2021). In short, these platforms appear to prioritize profit margins over the protection of users and their social responsibilities. At the same time, Al-Zaman (2024) points to an ongoing tension between guaranteeing freedom of speech and imposing strict censorship.

The core purpose of social media is to provide a channel for people to exchange ideas and take part in public discussion, and excessive control over it may raise concerns about suppressing free speech. Given this dual character, stakeholders broadly agree that social media platforms need appropriate regulation.

Platform self-regulation

Taking Meta as an example, the company states that it aims to encourage active and respectful communication on Facebook, Instagram, Messenger, and Threads, to build a healthy online community, and to help people around the world connect. Meta has established community standards that specify which content may or may not be published, in order to prevent its services from being abused (Meta Transparency Center, n.d.).

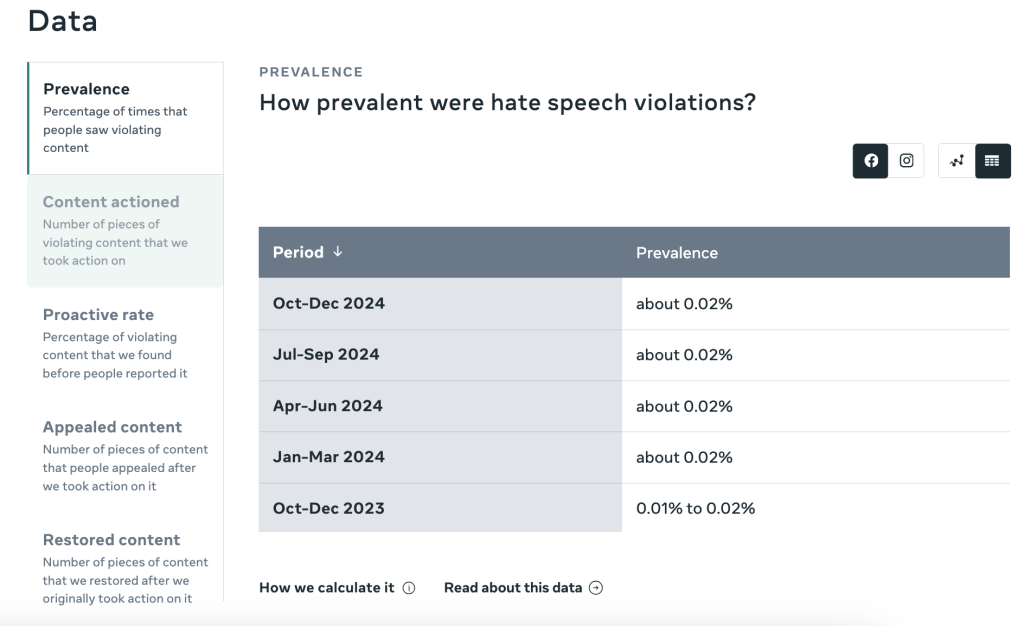

In response to hateful conduct, Meta prohibits attacks on people based on protected characteristics such as race, ethnicity, and national origin, and removes content that violates this policy. Its policy distinguishes between hate speech and the permissible expression of viewpoints. Meta also employs more than 15,000 content reviewers worldwide to assess whether content violates its rules and to keep users safe. In addition, users can report content they believe involves hatred at any time; reviewers prioritize reports according to their severity and respond to users as quickly as possible. Importantly, Meta publishes enforcement data, such as how often people see violating content, how much violating content has been actioned, and the proactive rate (Meta Transparency Center, n.d.). This transparency may strengthen user trust, improve the platform’s image, and demonstrate its social responsibility. The data can also be used by research institutions and news organizations to analyze the impact of social platforms on society, and regularly analyzing these reports can help Meta itself identify and correct loopholes.

Fighting against online harms: Government actions

As harmful content continues to spread online, governments around the world have begun to take the issue seriously. Legislators and regulators are responding with a variety of measures, such as passing new laws, strengthening platform oversight, and working with platforms to build a healthier online environment. Under Australia’s Online Safety Act 2021, the eSafety Commissioner can require platforms to remove certain illegal or harmful online content within 24 hours of receiving a removal notice (Australian Government, 2024). This pressures platforms to act quickly and to address harmful behavior at its source rather than responding passively. In the UK, Ofcom is the regulator responsible for overseeing platforms’ compliance with their user-protection duties under the Online Safety Act. Companies that breach the rules can be fined up to £18 million or 10% of their qualifying worldwide revenue, whichever is greater, and in some circumstances senior managers can face criminal action (Government of the United Kingdom, 2025). Furthermore, the EU’s Digital Services Act requires very large online platforms and search engines to assess their services, algorithms, and functionalities for potential systemic risks. These platforms must also publish regular reports and allow regulators to scrutinize their algorithms and content moderation rules (Kaesling & Wolf, 2025). Together, these measures push platforms to take on more responsibility, shifting from passive remedies to proactive prevention and reducing online harms.

Conclusion: Moving towards the future

Hate speech and online harms are not accidental. Design flaws in platforms, social inequality, and capital’s excessive pursuit of profit can all contribute to the frequent occurrence of hate incidents. Digital platforms already occupy a dominant position in people’s lives, and they should not shirk their social responsibilities; they should protect the dignity and safety of every user. Verbal abuse needs to be regulated without infringing on people’s right to freedom of speech. Relying solely on corporate self-regulation or after-the-fact remedies is not enough. Relevant authorities should establish a more comprehensive legal framework, require transparent algorithmic mechanisms, and focus on protecting the rights and interests of vulnerable groups. Every user should also take responsibility for their own behavior and speech, refusing to tolerate the spread of hate speech and instead sharing and seeking out more positive content. Governments, companies, civil society organizations, and users should work together to create a civil, orderly, open, and inclusive cyberspace and prevent hatred from eroding people’s lives.

References

Al-Zaman, Md. S. (2024). Patterns and trends of global social media censorship: Insights from 76 countries. International Communication Gazette. https://doi.org/10.1177/17480485241288768

Australian Government. (2024). Statutory Review of the Online Safety Act 2021 Issues paper (p. 19). https://www.infrastructure.gov.au/sites/default/files/documents/online-safety-act-2021-review-issues-paper-26-april-2024.pdf

Barlow, J. P. (1996, February 8). A Declaration of the Independence of Cyberspace. Electronic Frontier Foundation. https://www.eff.org/cyberspace-independence

Center for Countering Digital Hate. (2023, September 13). Share of posts and accounts reported for hate speech that are still hosted on X (formerly Twitter) as of September 2023 [Graph]. Statista. https://www.statista.com/statistics/1462960/posts-accounts-hate-speech-hosted-x-twitter/

Government of the United Kingdom. (2025, March 17). Online Safety Act: explainer. GOV.UK. https://www.gov.uk/government/publications/online-safety-act-explainer/online-safety-act-explainer

Greengard, S. (2025, March 18). Gamergate. Britannica. https://www.britannica.com/topic/Gamergate-campaign

Halliday, J. (2012, March 23). Twitter’s Tony Wang: “We are the free speech wing of the free speech party.” The Guardian. https://www.theguardian.com/media/2012/mar/22/twitter-tony-wang-free-speech

House of Commons Home Affairs Committee. (2017). Hate Crime: Abuse, Hate, and Extremism Online (p. 10). https://publications.parliament.uk/pa/cm201617/cmselect/cmhaff/609/609.pdf

Jorgensen, R. F., & Zuleta, L. (2020). Private Governance of Freedom of Expression on Social Media Platforms: EU content regulation through the lens of human rights standards. Nordicom Review, 41(1), 51–67. https://doi.org/10.2478/nor-2020-0003

Kaesling, K., & Wolf, A. (2025). Sustainability and Risk Management under the Digital Services Act: A Touchstone for the Interpretation of “Systemic Risks.” GRUR International, 74(2), 119–131. https://doi.org/10.1093/grurint/ikae156

Massanari, A. (2017). #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346. https://doi.org/10.1177/1461444815608807

Meta Transparency Center. (n.d.). Hateful Conduct. Retrieved April 7, 2025, from https://transparency.meta.com/en-gb/policies/community-standards/hate-speech/

Ng, D. (2015, October 29). Gamergate advocate Milo Yiannopoulos blames feminists for SXSW debacle. Los Angeles Times. https://www.latimes.com/entertainment/la-et-milo-yiannopoulos-gamergate-feminists-20151028-story.html

Office of the eSafety Commissioner. (n.d.). Home page. https://www.esafety.gov.au/

Pelley, S. (2021, October 4). Whistleblower: Facebook is misleading the public on progress against hate speech, violence, misinformation. CBS News. https://www.cbsnews.com/news/facebook-whistleblower-frances-haugen-misinformation-public-60-minutes-2021-10-03/

Sinpeng, A., Martin, F. R., Gelber, K., & Shields, K. (2021). Facebook: Regulating Hate Speech in the Asia Pacific (pp. 6–7). Department of Media and Communications, The University of Sydney.

Stuart, K. (2014, December 4). Zoe Quinn: “All Gamergate has done is ruin people’s lives.” The Guardian. https://www.theguardian.com/technology/2014/dec/03/zoe-quinn-gamergate-interview

Wingfield, N. (2014, October 15). Feminist Critics of Video Games Facing Threats in “GamerGate” Campaign. The New York Times. https://www.nytimes.com/2014/10/16/technology/gamergate-women-video-game-threats-anita-sarkeesian.html
