Figure 1: Families of the victims of the South Korean plane crash cry out in grief for their lost loved ones. From "They lost their families in a plane crash – then came the online hate", 2025, BBC News. (https://www.bbc.com/news/articles/cx28n141209o)

Have you seen attacks on the families of victims in the comment sections of disaster news? Have you noticed that some users build their own pleasure on the pain of others? Are platforms responsible for the consequences of online attacks?

INTRODUCTION

First, we need to define what cyber violence is. Many people's first answer would be hate speech, but that is incomplete. Hate speech has been defined as speech that ‘expresses, encourages, stirs up, or incites hatred against a group of individuals distinguished by a particular feature or set of features such as race, ethnicity, gender, religion, nationality, and sexual orientation’ (Parekh, 2012, p. 40; Flew, 2021). There is no denying that hate speech is often the trigger for online harms, but cyber violence is by no means limited to it. “In particular, cyber-violence encompasses hate speech, threats, stalking, harassment, sexual remarks, vulgar language, and cyber-bullying.” (Muaadh Mukred et al., 2024) Hate speech can therefore be considered one form of online harm. Legal definitions, meanwhile, tend to centre on the individual: “However, the emphasis in this legislation is upon individualised harms, which are defined under s 234 as ‘physical or psychological harm’.” (Farrand, 2024) That is to say, behaviours that harm individuals, including verbal abuse, harassment and doxxing (so-called "human flesh searches"), all count as online harms.

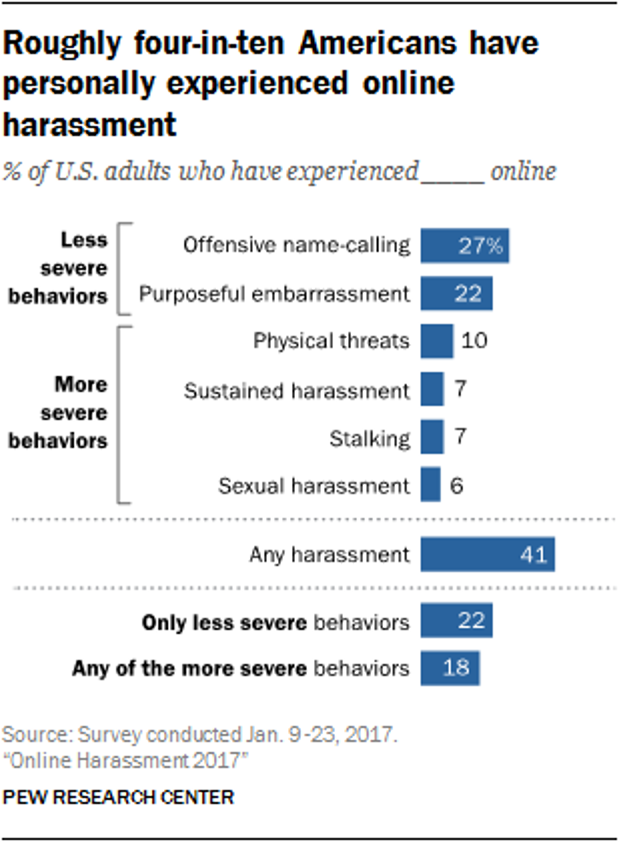

Online harms are now a major cause for concern. In a 2017 report by the Pew Research Center in the US, “41% of internet users claimed to have experienced online harassment” (Maeve Duggan, 2017). This is a very large share: roughly four in ten respondents reported having faced online harassment.

Figure 2: Research data on online harassment experienced by Americans. (https://www.pewresearch.org/internet/2017/07/11/online-harassment-2017/)

Among the causes of online harm, the tacit approval and even guidance of platforms is a key problem that cannot be ignored. The major platforms seem to follow a principle of “traffic first”, condoning the spread of malicious content for the sake of traffic and failing to deal properly with online harm. On Facebook, for example, page administrators said that the platform did not remove the material they reported and that they felt powerless over the process of flagging hate speech (Sinpeng, 2021). Does such behaviour mean we are acquiescing to a distorted digital culture? How can we confront it and change it?

CASE STUDY 1: Can Disaster Events Also Be Bait For Platforms To Attract Traffic?

Figure 3: Police arrested six users for posting violent online comments in response to the plane crash. (Ng, 2025) (https://www.bbc.com/news/articles/cx28n141209o)

In the aftermath of the Jeju Air plane crash at South Korea’s Muan International Airport, the online atmosphere, which should have been one of shared concern for the families who lost their loved ones, took a strange turn. Many users began to attack them online, including but not limited to attacks on the supposed gender of the crew and malicious speculation about the families. The platforms also allowed these attacks to spread and even distilled them into trending terms to attract searches from the public.

Online harms on display

After the incident, some netizens posted a series of hateful comments against women, claiming that the pilot and co-pilot were both women and that this was what caused the crash. The rumour spread widely, “even fueling a surge of misogynistic comments. Both were identified as male.” (Choi Jeong-yoon, 2025) Hate speech aimed at women on the basis of a false rumour, when both pilots were in fact men, can trigger a surge in misogyny and divert attention from the incident itself.

Other internet users attacked the families of the victims directly and were eventually arrested by the police. According to news reports, one 30-year-old man posted on social media, “Think about how much the compensation for all those people are, families with multiple deaths must be thrilled.” (Choi Jeong-yoon, 2025) Hate speech and false statements like these divert the public’s attention and attack grieving family members. It is worth noting that online harm is not limited to the moment it is inflicted; over time it turns into harm to the victim's body and mind.

Problems with the platforms

Beyond the users who attacked others in the wake of this disaster, the platforms did not do a good job of dealing with violent speech. “Authorities have taken down at least 427 such posts.” (Ng, 2025) With such a large number of offensive posts, the platforms can be said to have allowed them to spread and proliferate, to the extent that the police eventually had to step in and arrest the users concerned. Wouldn’t the platforms have prevented more harm if they had blocked these posts in the first place on the grounds that they attacked others?

We all have the right to freedom of speech. As John Perry Barlow said, “We are creating a world where anyone, anywhere may express his or her beliefs, no matter how singular, without fear of being coerced into silence or conformity.” (Barlow, 1996) But does the right to free speech justify platform inaction and internet users hurting others? What’s more, “freedom of speech” should not be used as an excuse to let cyber violence gradually turn into real injuries to the body and mind of the families.

Critical thinking

The reason for the platforms' inaction, or even acquiescence, is probably that platforms nowadays pursue the principle of “traffic first”. Does this mean that, as long as comments bring users and views to the platform, they can be allowed to grow without boundaries? Shouldn’t the platforms be held responsible for the harmful consequences of these comments? What should be done to improve this situation?

CASE STUDY 2: The Platform’s Inaction Or Even ‘Negative’ Guidance Has Led To More Online Harms!

Figure 4: A video describing how online abuse pushed Zhao Lusi towards depression. (팝콘뉴스, 2025) (https://youtu.be/YoWlQq2jqL4?si=P7ukQqtoTNNzfUA1)

Figure 5: Photo showing a frail Zhao in a wheelchair. Photo from: Baidu (South China Morning Post, 2025) (https://www.scmp.com/news/people-culture/china-personalities/article/3293184/china-actress-claims-verbal-physical-abuse-talent-agency-over-failure-land-roles)

If ordinary people can’t stop online attacks because they don’t have a strong team to protect them, are celebrities free from online attacks? The answer is no, and the platform’s algorithmic recommendations are causing even more online attacks to come their way.

Event Showcase

Chinese star Zhao Lusi suffered from somatized depression in 2024 and shared her recovery process on Weibo. After recovering, she took part in filming the variety show “Little Courage” free of charge, intending to bring positive energy and encouragement to people with similar experiences, yet she was met with many unwarranted attacks on the internet. Celebrities, as public figures, have high exposure and influence, which also makes them prime targets of cyber violence.

Some of the attackers claimed that Zhao Lusi was publicising her illness and her recovery from depression, rather than simply seeing a doctor, in order to attract attention and even earn more money. These attacks dealt another mental blow to Zhao while she was still recovering. Even before this incident, she had received many abusive comments from netizens, such as insults about her “big face” and “poor line delivery”.

The causes of depression are many and varied, but hurtful comments online inevitably affect the people they target. Internet users need to restrain themselves. It is wrong to judge another person’s behaviour on the basis of one's own assumptions, and a single attacking comment online has the potential to turn into a real-life injury.

Responsibilities On the Part of the Platform

We often find that a major reason for shifts in the direction of netizens' comments is the guidance of platform algorithms: the hot search lists on Weibo and TikTok, for example, strongly steer what users see and discuss. “Without it, digital platform services risk becoming dysfunctional and toxic, resulting in negative impacts on users and broader communities.” (Working Paper 1: Literature Summary – Harms and Risks of Algorithms | the Digital Platform Regulators Forum (DP-REG), n.d.) We expect platforms’ algorithms to provide a secure and efficient environment, but it is clear that platforms are not currently using their algorithms as well as we would want.

Figure 6: Most of the Weibo hot search list is about Zhao Lusi’s condition. (https://pop.inquirer.net/373506/zhao-lusis-fans-demand-accountability-from-weibo-after-platform-allegedly-fueled-harmful-online-criticism)

Because the platform’s algorithm prioritises extreme comments in the effort to grow “traffic”, it recommends more violent comments and ignores the fact that both ordinary people and celebrities should be protected. In the case of Zhao Lusi, many fans felt that Weibo took advantage of her recovery journey to gain traffic but failed to protect her properly.

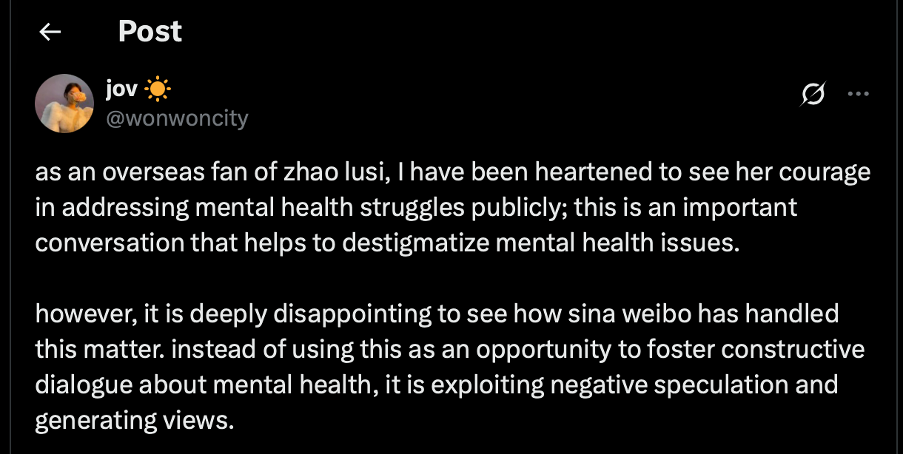

Figure 7: A screenshot of comments from Zhao Lusi’s overseas fans about Weibo's unfriendly approach.

This overseas fan of Zhao Lusi commented on the way Weibo handled the issue, pointing out that the various trending topics the platform created around her condition were not only unnecessary but also used her suffering as bait to lure people into commenting and bashing her. Algorithmic recommendation that has platforms “doing whatever it takes” to attract traffic is a mechanism that needs to change urgently, or it could hurt more people in the future.

Critical thinking

Online harms and “malicious” algorithmic recommendations from platforms are not specific to a particular group of people; they are a problem that anyone may experience. Celebrities like Zhao Lusi have legal teams to protect their rights and interests and are still pushed into the firing line by platform algorithms, so how can ordinary people defend themselves against online harms? Platforms therefore need to work out how to protect users while maintaining freedom of speech, so that online harms do not affect users' daily lives or their physical and mental health. This is the area platforms need to strengthen. So how exactly should platforms change, rather than letting these problems go unchecked or even fuelling them simply for the sake of their own traffic?

CONCLUSION

In the current era of big data, digital platforms hold an increasingly important position. They should follow the needs of the times and develop into public service platforms that serve everyone’s life.

However, the keyboard has gradually turned from a tool for creation into a weapon for hurting people online, and platforms have gradually become a breeding ground for violence. Social media platforms often use algorithmic push mechanisms that prioritise controversial and emotional content in order to gain traffic, and such “traffic-first” mechanisms amplify violence online, whether or not that is the intention.

“The largest platforms increasingly function as public infrastructure even as they involve private companies.” (Flew, 2021) To provide users with a more secure and comfortable online environment in the future, digital platforms cannot define themselves as just ordinary companies; they have to become platforms that carry social responsibility, reduce online harm and eliminate hate speech.

To change this serious situation, governments need to improve their legal frameworks and strictly define the boundaries of online harm and hate speech. At the same time, an independent network regulator should be set up to provide transparent and open oversight, and the platforms' “traffic-first” service concept should be curbed as far as possible at the legal level. Platforms should also promptly optimise the design of their algorithms to reduce the pushing of extreme or emotional content, and improve their reporting and review mechanisms, so that the spread and proliferation of harmful content is reduced at the source. As ordinary internet users, we should also improve our own digital literacy, refuse to be “keyboard warriors”, and refuse to take part in any form of online violence.

As difficult as it is, cyber violence is not an insurmountable problem, but solving it requires all of us to come together and make positive changes. May we all be free from online harm and enjoy a comfortable online environment in the future!

References

Barlow, J. P. (1996, February 8). A Declaration of the Independence of Cyberspace. Electronic Frontier Foundation. https://www.eff.org/cyberspace-independence

Choi Jeong-yoon. (2025, January 13). Why are Jeju Air crash victims’ families targeted by online hate? – The Korea Herald. The Korea Herald. https://www.koreaherald.com/article/10389191

Farrand, B. (2024). How do we understand online harms? The impact of conceptual divides on regulatory divergence between the Online Safety Act and Digital Services Act. Journal of Media Law, 1–23. https://doi.org/10.1080/17577632.2024.2357463

Flew, T. (2021). Regulating Platforms. Polity Press.

Maeve Duggan. (2017, July 11). Online harassment 2017. Pew Research Center: Internet, Science & Tech. https://www.pewresearch.org/internet/2017/07/11/online-harassment-2017/

Muaadh Mukred, Umi Asma Mokhtar, Fahad Abdullah Moafa, Abdu Gumaei, Ali Safaa Sadiq, & Abdulaleem Al-Othmani. (2024). The roots of digital aggression: Exploring cyber-violence through a systematic literature review. International Journal of Information Management Data Insights, 4(2), 100281. https://doi.org/10.1016/j.jjimei.2024.100281

Ng, K. (2025, March 1). Jeju Air: Families attacked online after South Korea plane crash. BBC News. https://www.bbc.com/news/articles/cx28n141209o

Parekh, B. (2012). Is there a case for banning hate speech? In M. Herz and P. Molnar (Eds.), The Content and Context of Hate Speech: Rethinking Regulation and Responses (pp. 37–56). Cambridge: Cambridge University Press.

Sinpeng, A. (2021). Facebook: Regulating Hate Speech in the Asia Pacific. https://r2pasiapacific.org/files/7099/2021_Facebook_hate_speech_Asia_report.pdf

South China Morning Post. (2025, January 9). South China Morning Post. https://www.scmp.com/news/people-culture/china-personalities/article/3293184/china-actress-claims-verbal-physical-abuse-talent-agency-over-failure-land-roles

Working Paper 1: Literature summary – Harms and risks of algorithms | The Digital Platform Regulators Forum (DP-REG). (n.d.). Dp-Reg.gov.au. Retrieved April 16, 2024, from https://dp-reg.gov.au/publications/working-paper-1-literature-summary-harms-and-risks-algorithms

팝콘뉴스. (2025, January 5). “Zhao Lusi Faces Attacks—Here’s Why!” YouTube. https://www.youtube.com/watch?v=YoWlQq2jqL4
