In today’s digital landscape, where viral dances and life hacks coexist with political propaganda and hate-fueled rhetoric, TikTok has emerged as both a cultural phenomenon and a battleground for online harm. This platform, celebrated for its addictive algorithm and creative potential, has also become a fertile ground for hate speech, misinformation, and real-world violence. But why does a platform designed for entertainment keep making headlines for all the wrong reasons? And more importantly—who stands to gain from this toxic environment while vulnerable communities pay the price?

Introduction: The Double-Edged Sword of TikTok’s Virality

“Hate speech goes against human rights principles, and the scope for its circulation has increased exponentially in the online world” (Flew, 2021). When TikTok exploded globally during the COVID-19 pandemic, it brought with it a new era of short-form video content that prioritized engagement above all else. With over 325 million users in Southeast Asia alone (Kumar, 2023), the platform’s influence is undeniable. But beneath the surface of viral challenges and influencer trends lies a darker reality: TikTok has become a hub for hate speech, extremist ideologies, and coordinated disinformation campaigns that spill into real-world harm.

What makes TikTok particularly dangerous isn’t just the hateful content itself, but how the platform’s design amplifies it. Unlike traditional social media, TikTok’s “For You Page” (FYP) algorithm learns user preferences with frightening precision, often pushing polarizing content to maximize watch time. This isn’t an accident—it’s by design. And while TikTok, like other platforms, claims to combat hate speech, enforcement remains inconsistent at best, and complicit at worst.

This blog post will critically examine TikTok’s role in spreading hate speech, analyzing whose interests are served by the status quo, how historical and technological trends have led us here, and what this means for public discourse in an increasingly fractured digital world.

Questioning Assumptions: Who Really Benefits from TikTok’s Hate Speech Problem?

The Myth of Neutral Platforms

A common assumption is that social media platforms like TikTok are neutral spaces: blank slates where users dictate content. But this ignores how algorithms actively shape what we see. TikTok’s recommendation system doesn’t just reflect user preferences; it manipulates them by prioritizing engagement over safety. Researcher Nuurrianti Jalli found that during elections in Malaysia and Indonesia, TikTok became flooded with ethno-religious hate speech, including false claims accusing political opponents of communism and inciting violence against minority groups (Kumar, 2023). Despite clear violations of TikTok’s policies, this content remained online for extended periods, suggesting that the platform’s moderation efforts are either under-resourced or deprioritized in certain regions.

Why does this happen? One reason is that outrage drives engagement, and engagement drives profit. When hateful content goes viral, TikTok benefits from increased user retention and ad revenue, even as marginalized communities bear the brunt of the harm.

The Political Economy of Hate: Who Gains from the Chaos?

Another overlooked perspective is how political and corporate actors exploit TikTok’s lax enforcement. In Southeast Asia, Jalli notes that scrutiny of TikTok is weaker compared to Western markets due to “regulatory frameworks, enforcement capabilities, cultural perceptions of free speech, and more” (Kumar, 2023). This allows governments and political operatives to use the platform as a tool for propaganda. For example, during Malaysia’s elections, “cybertroopers” (paid online propagandists) flooded TikTok with content priming voters to associate Chinese political parties with historical violence, using hashtags like #13mei to revive traumatic memories of the 1969 Sino-Malay riots (Kumar, 2023).

Similarly, in the U.S., far-right accounts like Libs of TikTok have used the platform to amplify anti-LGBTQ+ hate, falsely labeling educators and healthcare providers as “groomers” and inciting harassment campaigns against children’s hospitals (Wikipedia, 2025). These accounts often enjoy impunity until media pressure forces TikTok to act—Libs of TikTok was eventually banned, but only after it had already radicalized millions (Wikipedia, 2025). The delay in enforcement suggests that platforms tolerate hate speech when it aligns with the interests of powerful political factions or generates controversy that boosts engagement.

Missing Perspectives: The Voices Silenced by Algorithmic Amplification

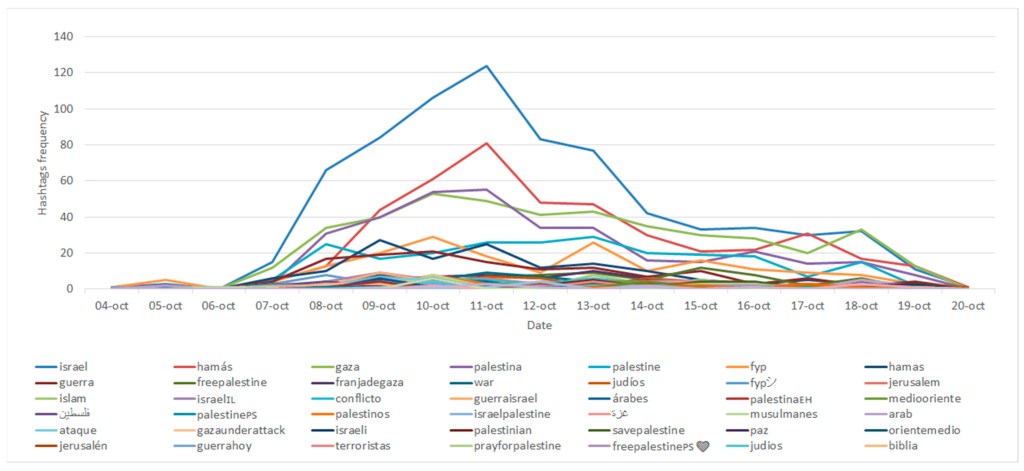

While extremists and propagandists flourish on TikTok, the voices most affected by hate speech—minorities, activists, and vulnerable youth—are often sidelined in policy discussions. For example, during the 2023 Israel-Hamas conflict, researchers found a surge in hate speech on TikTok, with young users exposed to polarized and dehumanizing rhetoric (González, 2024). Yet, the narratives dominating the platform tended to reflect geopolitical agendas rather than the experiences of civilians caught in the crossfire. It’s clear that TikTok’s system tends to push content that sparks conflict, while quieter, more thoughtful voices get lost in the noise.

Contextual Understanding: How History and Technology Shaped TikTok’s Hate Speech Crisis

A Brief History of Platform Liability (Or Lack Thereof)

If you want to know why TikTok has such a hard time handling hate speech, it helps to look at the bigger picture of social media rules. Unlike traditional media, which can be held legally responsible for harmful content, platforms like TikTok have long been protected by Section 230 of the U.S. Communications Decency Act and similar laws elsewhere, meaning they aren’t liable for what users post. This legal loophole has created a strange incentive: platforms can benefit financially from content that sparks outrage without having to deal with the fallout.

The Algorithmic Arms Race: Why TikTok Is Especially Vulnerable

TikTok’s design worsens hate speech in ways that older platforms like Facebook and Twitter did not. Its recommendation algorithm quickly picks up on users’ hidden biases and then serves them increasingly extreme content just to keep them watching. A study of young TikTok users during the Middle East conflict showed that the app’s recommendation system boosted polarizing views, gradually nudging users toward more radical opinions (González, 2024). This so-called “rabbit hole” effect is especially dangerous for young people who may not have the media literacy needed to judge what they see.

Moreover, TikTok’s focus on viral trends often lets hate speech hide behind memes or challenges. For instance, the “Super Straight” trend, which began as a transphobic meme on TikTok, quickly spread to 4chan and was co-opted by neo-Nazis using imagery resembling the SS logo (Guide, 2023). Because TikTok’s moderators often struggle to distinguish between humor and hate, such trends can gain massive traction before being flagged.

Global Inequalities in Moderation

TikTok’s handling of hate speech also reflects broader disparities in how platforms treat different regions. While the U.S. and EU face stricter scrutiny, Jalli’s research shows that Southeast Asian markets receive less attention from TikTok’s trust and safety teams, despite being hotspots for election-related disinformation and ethno-religious violence (Kumar, 2023). This disparity mirrors findings from Global Witness, which revealed that social media companies consistently enforce policies more rigorously in Western countries than in the Global South (Mapping, 2023). The result is a two-tiered system where users in marginalized regions are left unprotected against hate speech that could destabilize their societies.

Interpretation & Argument: Why TikTok’s Hate Speech Problem Matters Beyond the Screen

From Online Hate to Real-World Violence

The most urgent takeaway is that TikTok hate speech isn’t confined to digital spaces—it spills into physical harm. In Malaysia, Jalli documented how anti-Chinese propaganda on TikTok led to real-world protests and clashes with security forces (Kumar, 2023). In the U.S., Libs of TikTok’s posts preceded bomb threats against children’s hospitals offering gender-affirming care (Wikipedia, 2025). These cases underscore how online rhetoric can mobilize violent actors, especially when platforms fail to intervene early.

The Erosion of Democratic Discourse

Hate speech on TikTok also corrodes democratic processes by drowning out factual debate with emotional manipulation. According to Kumar (2023), research shows that hate speech increases during elections. Political figures often take advantage of TikTok’s algorithm to launch attacks on their opponents and stir up sectarian tensions. This undermines informed voting and replaces policy discussions with identity-based fearmongering. When platforms prioritize engagement over truth, democracy itself becomes collateral damage.

The Business of Outrage: How TikTok Profits from Polarization

Ultimately, TikTok’s hate speech crisis is a feature, not a bug, of its business model. Like all social media platforms, TikTok profits from attention, and nothing captures attention like outrage. A study of TikTok conversation during the 2023 escalation of the Middle East conflict found that hate speech intensified engagement, rewarding creators who leaned into polarizing narratives (González, 2024). Until platforms face meaningful financial penalties for hosting harmful content, their incentives will remain misaligned with user safety.

Engagement with Evidence: Case Studies of TikTok’s Failures

Case Study 1: Election Interference in Malaysia and Indonesia

Jalli’s research shows how TikTok was used as a tool during Southeast Asian elections. Before Malaysia’s 2022 polls, pro-government groups filled the platform with false claims that linked opposition parties to communist sympathies—a narrative that added fuel to the already volatile political climate. One video by @Hussen_Zulkarai falsely accused the Democratic Action Party of communist ties and remained online despite violating TikTok’s policies (Kumar, 2023). Similarly, in Indonesia, ethno-religious hate speech targeting candidates surged months before the 2024 election, priming voters for division (Kumar, 2023).

These examples reveal three key failures:

- Delayed moderation: Content violating policies persisted for extended periods.

- Algorithmic amplification: Hashtags like #13mei trended despite promoting historical trauma.

- Geographic bias: TikTok’s enforcement was weaker in Southeast Asia than in Western markets.

Case Study 2: Anti-LGBTQ+ Radicalization and the “Groomer” Hoax

The anti-LGBTQ+ “groomer” conspiracy, which falsely equates queer people with pedophiles, exploded on TikTok before spreading to other platforms. GLAAD’s guide documents how terms like “LGBTP” (falsely appending “P” for “pedophile” to the LGBTQ acronym) originated on 4chan but gained traction through TikTok memes (Guide, 2023). Far-right accounts like Libs of TikTok then repurposed this rhetoric to harass educators and doctors, with real-world consequences: after Libs of TikTok targeted Boston Children’s Hospital, the hospital received bomb threats (Wikipedia, 2025).

TikTok’s belated bans on “groomer” rhetoric (Guide, 2023) came only after the damage was done, illustrating how platforms allow hate speech to metastasize before acting.

Case Study 3: The Israel-Hamas War and Algorithmic Polarization

During the 2023 Israel-Hamas conflict, researchers analyzed 17,654 TikTok comments, finding that hate speech spiked as the algorithm pushed users toward more extreme viewpoints (González, 2024). Young users, many uninformed about the conflict’s history, were fed a diet of dehumanizing rhetoric with little context. This case shows how TikTok’s algorithm exploits complex geopolitical issues for engagement, privileging sensationalism over nuance.

Comparison & Contrast: How TikTok Stacks Up Against Other Platforms

TikTok vs. Meta: Similar Problems, Different Tactics

While Meta (Facebook/Instagram) also struggles with hate speech, its longer-form content allows for slightly more contextual moderation. TikTok’s short videos, by contrast, are harder to analyze for nuanced hate speech, making moderation more reactive. However, both platforms share a pattern of uneven enforcement. Global Witness found that Meta approved hate speech ads in Norway despite policy bans (Mapping, 2023), mirroring TikTok’s failures in Southeast Asia (Kumar, 2023).

The Global Disparity in Enforcement

Across all platforms, a troubling pattern emerges: hate speech enforcement is strongest in Western countries and weakest in the Global South. In Myanmar, for instance, Facebook’s failure to curb anti-Rohingya hate speech contributed to genocide (Mapping, 2023). Similarly, TikTok’s light touch in Malaysia and Indonesia (Kumar, 2023) suggests that platforms still treat non-Western users as expendable.

Conclusion: Where Do We Go From Here?

TikTok’s hate speech epidemic is not inevitable; it is the result of design choices and profit-driven negligence. While the platform has occasionally updated its policies (Hern, 2020), these efforts remain inconsistent and reactive. To create meaningful change, we must:

- Demand algorithmic transparency: TikTok should disclose how its recommendation system works and allow independent audits.

- Push for global equity in moderation: Enforcement must be rigorous worldwide, not just in wealthy markets.

- Hold platforms legally accountable: Laws like the EU’s Digital Services Act are a start, but stronger liability frameworks are needed.

- Invest in media literacy: Users, especially youth, need tools to critically evaluate content (Kumar, 2023).

The stakes couldn’t be higher. As TikTok becomes a primary news source for young people, its role in shaping public discourse—for better or worse—will only grow. Without urgent action, we risk normalizing a digital landscape where hate is just another viral trend.

References

Flew, T. (2021). Regulating platforms [VitalSource Bookshelf version]. Retrieved from https://bookshelf.vitalsource.com/books/9781509537099

Kumar, R. (2023). “Hate speech can be found on TikTok at any time. But its frequency spikes in elections.” Reuters Institute for the Study of Journalism. Retrieved April 6, 2025, from https://reutersinstitute.politics.ox.ac.uk/news/hate-speech-can-be-found-tiktok-any-time-its-frequency-spikes-elections

Wikipedia contributors. (2025). Libs of TikTok. In Wikipedia, The Free Encyclopedia. https://en.wikipedia.org/w/index.php?title=Libs_of_TikTok&oldid=1284128275

González-Esteban, J.-L., Lopez-Rico, C. M., Morales-Pino, L., & Sabater-Quinto, F. (2024). Intensification of Hate Speech, Based on the Conversation Generated on TikTok during the Escalation of the War in the Middle East in 2023. Social Sciences, 13(1), 49. https://doi.org/10.3390/socsci13010049

Hern, A. (2020, October 21). TikTok expands hate speech ban. The Guardian. https://www.theguardian.com/technology/2020/oct/21/tiktok-expands-hate-speech-ban

Guide to anti-LGBTQ online hate and disinformation. (2023, June 12). GLAAD. https://glaad.org/smsi/anti-lgbtq-online-hate-speech-disinformation-guid

Mapping social media platforms’ failure to tackle hate and disinformation. (2023, September 15). Global Witness. Retrieved April 6, 2025, from https://globalwitness.org/en/campaigns/digital-threats/a-world-of-online-hate-and-lies-mapping-our-investigations-into-social-media-platforms-failure-to-tackle-hate-and-disinformation/
