Who is spying on you? Take back control over your digital privacy.

Have you ever had that weird feeling that the internet knows too much about you? Maybe you’ve searched online for new shoes, and then your Instagram or TikTok feed is flooded with shoe ads. Or maybe you talk to a friend about a trip, and immediately after, travel deals pop up on your social media. According to a 2024 report by The Australian, nearly half of Australians described the advertisements on platforms owned by Meta, such as Facebook and Instagram, as “creepy,” suspecting these platforms may be surveilling them.

Figure 1. Screenshot from Instagram, 2025.

You might be asking, “Is my phone listening to me?” In the modern era, your information is constantly being collected, analyzed, and sold, and you’re not the only one affected. AI systems track your activity, gather information about you, and predict your choices. How do data tracking and algorithms change our privacy and freedom? Most of us do not manage our digital privacy well, yet it matters more than ever: it bears on your rights, your freedoms, and how the modern world will operate. This blog will discuss what has changed, why it’s critical, and how to regain control of your information.

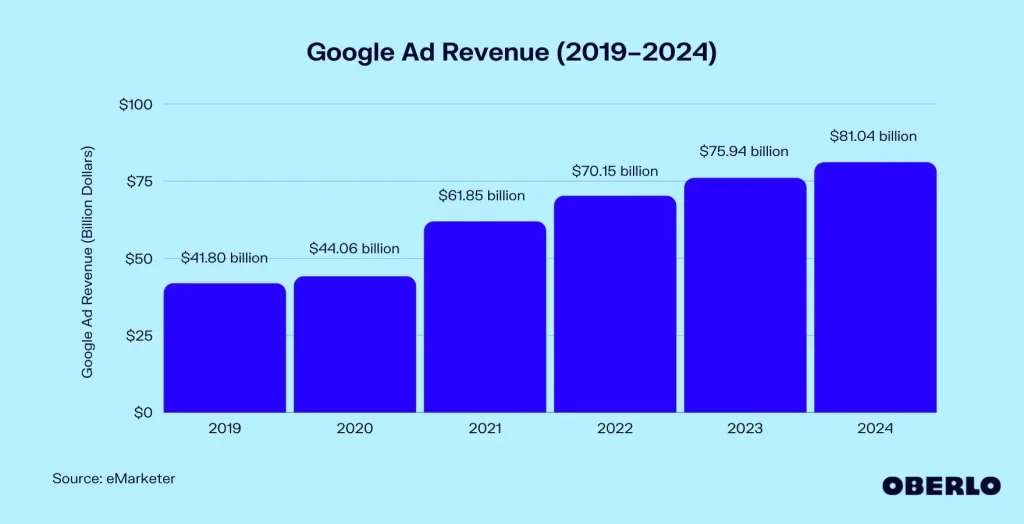

Privacy isn’t just about closing the curtains or password-protecting your phone. It’s about the power to control your personal information: who has access to it, how they use it, and how it affects your life. As Terry Flew (2021) highlights in Regulating Platforms, the data economy is built on collecting your digital footprint. Every click, swipe, and scroll generates data about you: your likes and dislikes, political opinions, financial situation, and health issues. Platforms like Facebook and Google prosper because they know exactly how to target your interests and concerns. Google’s ad revenue, for instance, reached $237 billion in 2022, driven mainly by hyper-targeted ads built on user data.

Figure 2. Google Ad Revenue (2019–2024).

Image source: eMarketer, via Oberlo. Retrieved from https://images.app.goo.gl/frkeE29FQVcwn3Gk9

Your personal information is monetized in ways that significantly affect your opportunities and your personal freedom. Imagine being denied health insurance because a company looked at the data on your supermarket loyalty card and suspected you might have heart disease. Sounds ridiculous, right? It is already taking place. Privacy is more than a preference; your freedom and dignity depend on it. Even more alarming is the common lack of transparency about how these systems operate. They are frequently controlled by a few tech giants, who decide what data to gather, how to process it, and how to use it (Suzor, 2019). We generate data constantly, but we have little control over how it is used or processed. Companies silently gather information about us when we use apps, buy online, and shop in stores. According to Helen Nissenbaum (2018), privacy violations happen when our information is used in ways we neither consented to nor expect, a concept she calls the violation of “contextual integrity.”

Your fitness tracker might, for instance, share your health information with third-party advertisers, changing the health plan offers you receive or are denied. According to 2023 research, Fitbit sold user information to insurance companies, raising ethical issues about the re-identification of “anonymous” information. Similarly, supermarket loyalty programs collect detailed information about your eating habits. Without your consent, these companies then sell or share the personal details of your life with organizations you have never dealt with directly.

You might be wondering, “How do these companies gather my data?” It’s simpler and scarier than you think. An invisible “digital breadcrumb” is left behind by almost every action you perform. Important data points are generated by your online browsing history, your Netflix viewing habits, the GPS on your phone, and even the Wi-Fi networks you frequently connect to. Companies then piece together these scattered fragments to build incredibly detailed profiles of your personal life and habits (Suzor, 2019). For instance, when you download a seemingly harmless game or photo editing app, it might quietly request access to your contacts, camera, or location. Most users grant these permissions without hesitation. Your contacts may then be uploaded to a remote server, your photos may be analyzed for advertising or facial recognition, or your exact movements may be tracked 24/7 for targeted advertisements. In 2020, TikTok acknowledged that its app had been reading clipboard data on iOS devices, potentially exposing sensitive text and copied passwords.

Figure 3. Screenshot from TikTok video, 2024. Retrieved from https://vt.tiktok.com/ZSrUGuwxJ/

Generally speaking, people do not understand these processes, and they are not even made aware of them. Why does an app want access to your camera if it’s just a quick puzzle game?

Surveillance also extends beyond the internet. To capture consumer behavior, surveillance technology is increasingly being used in public spaces, in retail stores, and on roads. For instance, facial recognition devices in stores record how long you linger over a particular product. This data can be cross-referenced with your online data to create a seamless monitoring loop in which real-world behavior drives targeted ads online. This blending of offline and online information, known as digitization, turns every aspect of our everyday lives into tangible, valuable data. The process is rarely transparent. How often does anyone read those lengthy privacy notices? Hardly ever. Yet the terms buried in that fine print authorize extensive data collection practices, which most of us would find unsettling if we knew how far they went. Most of us must acknowledge that, without our explicit consent, our digital identities and behavioral patterns are being relentlessly collected and exploited.

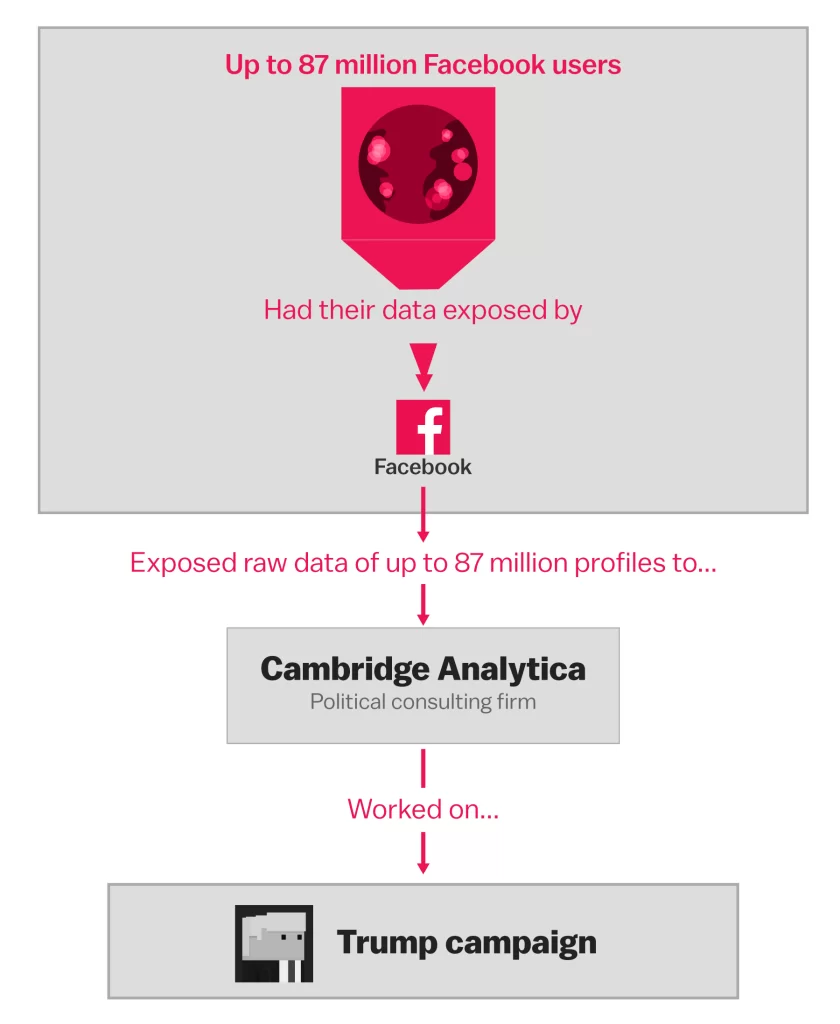

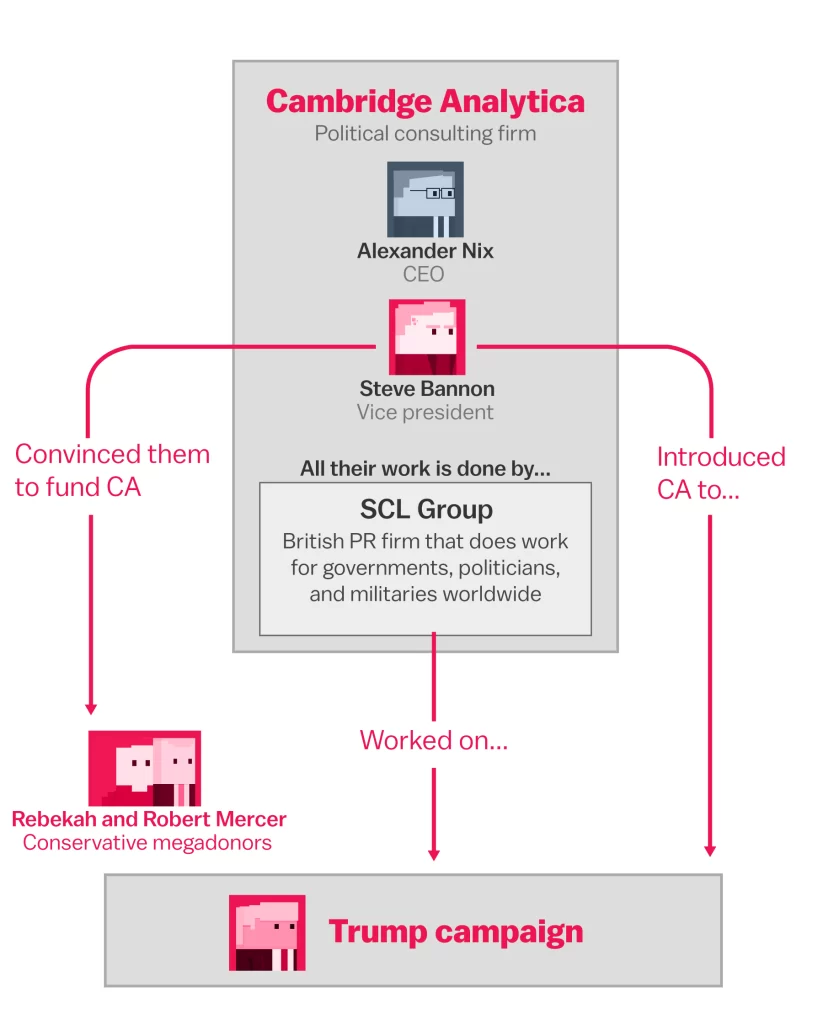

As a case study, let’s look at a well-known example of data misuse: the Cambridge Analytica scandal. When it broke in 2018, the scandal exposed a disturbing truth: a political consulting firm had collected personal data from 87 million Facebook users without their permission (Goggin et al., 2017).

Figure 4. Image source: Retrieved from https://images.app.goo.gl/3Np5Vo3GWABo3Cr98.

Figure 5. Image source: Retrieved from https://images.app.goo.gl/6FHHDe5A9Xb8PM8b9.

The process by which the data was leaked

However, the issue involved more than just data misuse. The company used likes and shares to create hyper-targeted political ads, particularly during the 2016 U.S. presidential election and the Brexit referendum. This is more than merely another privacy breach: our most intimate details can be quietly turned into weapons against democracy itself. What must be protected is not just personal privacy but the integrity of political decision-making. Cambridge Analytica, however, is just the start. Similar data grabs occur routinely across a wide range of apps and platforms. Take the case of LinkedIn’s settlement in 2023, where it agreed to pay $13 million to settle allegations that it had secretly used user data to improve its advertising techniques. These are symptoms of a growing illness rather than isolated events. We are only just beginning to understand how society is being shaped by the steady erosion of digital privacy. Privacy is the foundation of free speech, warns media scholar Kari Karppinen (2017). We naturally censor ourselves when we are aware that institutions or companies are monitoring us. That controversial opinion goes unposted. That sensitive health search never gets made. This self-censorship eventually reduces the diversity of viewpoints that open debate in a healthy democracy requires.

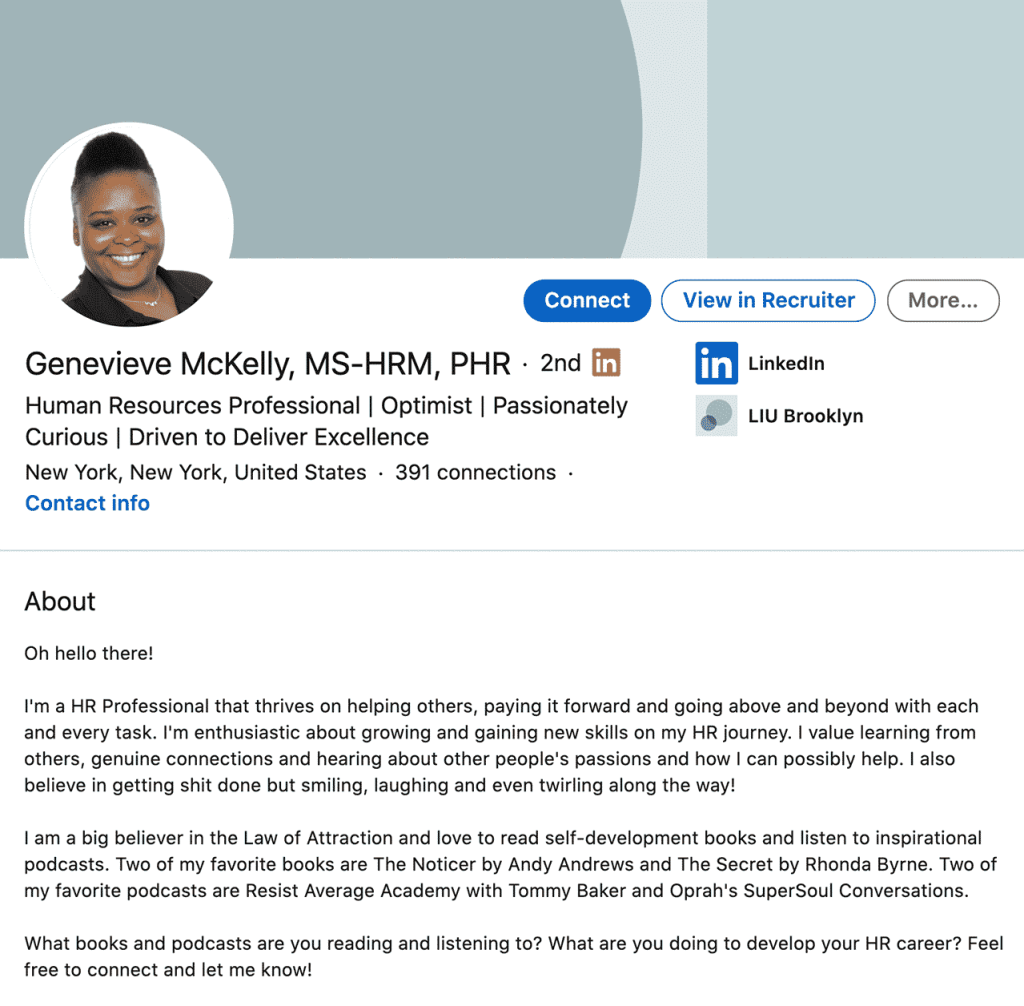

“What’s the real harm, though?” you may ask. “So what if businesses know my meal choices?” The risk goes well beyond targeted advertising. Consider a college applicant searching online for depression treatments. Through data brokers, admissions committees could gain access to that private search history, shaping their view before they even meet the student. According to a 2023 study published in the Journal of Business and Psychology, job candidates who openly discussed their experiences with anxiety or depression on LinkedIn were perceived by recruiters as less emotionally stable and conscientious, potentially harming their employment prospects.

Figure 6. Image source: City Personnel. Retrieved from https://images.app.goo.gl/Zqv4QfuHZvWNJrm77.

Next, there’s identity theft: with billions of data points sitting in poorly secured corporate databases, a single leaked email from your social media accounts may give thieves the keys to your financial life. The effects also extend beyond individual harms. Picture a society where people avoid exploring controversial issues for fear of algorithmic judgment and where marginalized groups hesitate to organize online. This isn’t science fiction; it’s the direction we’re headed without strong privacy protections, because dissenting perspectives are stifled before they are ever expressed.

Can laws slow the tide? According to communications scholar Flew (2021), governments must take responsibility for leading this charge. The GDPR in Europe provides a blueprint, granting users the right to request that their data be deleted and requiring businesses to disclose the information they hold. The EU’s AI Act expands on this by addressing discrimination and regulating high-risk systems. Countries from Australia to Canada are following suit, trying to balance business innovation with fundamental human rights. Although imperfect, these measures represent significant first steps toward restoring our digital autonomy.

Rather than relying only on government or corporate efforts, individuals have considerable power to protect their own digital privacy. Review and adjust your privacy settings across social media platforms to reduce data sharing. Exercise caution when downloading apps: why would a simple app require access to your location or personal information? Explore alternative search engines that prioritize user privacy and don’t track browsing activity. For added protection, be sure to clear your browser history and cookies regularly. Taking these steps on your own can improve your digital privacy and security and significantly reduce the amount of online tracking that occurs. Just as important, educate yourself and those around you about how businesses gather information, so that you can make better-informed decisions and demand stronger protections for your data.

Privacy shapes our future as well, not just our present. Children today often do not realize that their online activities result in the collection of their data. Today’s approach to privacy lays the foundation for privacy norms that will last for generations. If we fail to establish boundaries and safeguards that stop aggressive data practices from reaching younger people, the result may be a world where privacy violations are normalized as acceptable behaviour. Teaching young people about their privacy rights makes it easier for them to protect themselves and helps ensure that the internet remains a safe space for expressing ideas and opinions. Efficiency and personalization are often cited as the advantages of AI and algorithms in the marketplace, but we also need to take into account how they affect society. Regaining control over our online presence starts with small steps like these.

Privacy is more than a personal decision. In today’s technology-saturated world, it is a fundamental right that requires strong protection. People should understand its value: it defends our rights and democratic principles, and it serves as the foundation of our sense of dignity. Governments and people like us, who understand this all too well, share a responsibility for how information is collected and used. Even though it may seem like the average person can’t directly control tech companies’ actions, we can start the conversation by raising awareness of our digital rights and using tools to protect our privacy. We must press both governments and businesses to raise their standards for transparency and to recognize that the public has a right to be informed and to have a say in how information is used. We must expect more of ourselves, our peers, and our governments. Before casually clicking “Accept all cookies,” pause and consider how valuable your privacy is and who benefits from it. Simple acts like this, multiplied across millions of people, can transform the online world while defending our rights. Politicians and tech giants bear responsibility for the erosion of those rights, but so does everyone else, through our awareness and participation. In the end, our society is shaped by these shadowy actors. They must demonstrate fairness, accountability, and transparency. Every careful choice we make about sharing our data is important. Every time we learn about these issues and tell others, we help protect privacy step by step.

References

Flew, T. (2021). Regulating platforms. Polity Press.

Goggin, G., Vromen, A., Weatherall, K., Martin, F., Webb, A., Sunman, L., & Bailo, F. (2017). Digital rights in Australia: Executive summary and digital rights: What are they and why do they matter now? University of Sydney. https://ses.library.usyd.edu.au/handle/2123/17587

Karppinen, K. (2017). Human rights and the digital. In H. Tumber & S. Waisbord (Eds.), Routledge companion to media and human rights (pp. 95–103). Routledge.

Nissenbaum, H. (2018). Respecting context to protect privacy: Why meaning matters. Science and Engineering Ethics, 24(3), 831–852. https://doi.org/10.1007/s11948-015-9674-9

Suzor, N. P. (2019). Lawless: The secret rules that govern our lives (pp. 10–24). Cambridge University Press.
