Why was your meme deleted? My foreign friend posted a sarcastic political meme on Twitter, and his account was frozen for 24 hours. At the same time, we found that Facebook allowed a group of apparently violent posts to spread for three days.

This made me wonder what went wrong with AI content review. What criteria does it use to judge content?

It’s not simply a matter of platform rules. There were many similar incidents in 2023. Meta’s AI system mistakenly deleted video footage of a hospital bombing in Gaza, citing “violent content”, while Twitter’s relaxed moderation after Musk took over led to a surge in hate speech. It’s fair to say that we live in an era where “algorithms make the difference between life and death”. Yet almost no one understands how these “digital referees” work.

How does AI content review work? (And why it always goes wrong)

Imagine that today’s AI content review is like a social media platform hiring a security guard who is not a native speaker and who shows poor judgment in many situations. For example, it may fail to distinguish between “killing the game” (a sports term) and “killing someone”, and it may block breast cancer awareness advertisements and pro-breastfeeding content because they contain “nudity”. Yet it cannot tell which emojis refer to the drug trade.

This is the current state of AI content review, which relies mainly on two technologies. The first is keyword filtering. As described above, it cannot tell “kill the game” from “kill someone”. It is like playing an overly sensitive game of Minesweeper: because it cannot distinguish the specific meanings of words, it indiscriminately blocks posts containing similar terms such as “vaccine” and “anti-vaccine”. The second technology is image recognition, and here too AI content review is full of loopholes. There is no lack of major scandals.
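The weakness of keyword filtering can be illustrated with a minimal sketch. It is not any platform's real system: the blocklist, the function name `naive_keyword_filter` and the sample posts are all hypothetical, chosen only to mirror the examples in the text.

```python
# Hypothetical blocklist; real platforms do not publish theirs.
BLOCKED_KEYWORDS = ["kill", "vaccine"]


def naive_keyword_filter(post: str) -> list[str]:
    """Return every blocked keyword found anywhere in the post.

    The check is a plain substring match, so it knows nothing about
    context, intent, or the difference between a word and its opposite.
    """
    text = post.lower()
    return [keyword for keyword in BLOCKED_KEYWORDS if keyword in text]


sample_posts = [
    "We are going to kill the game tonight!",     # sports slang
    "I will kill someone.",                       # an actual threat
    "Where can I book my vaccine appointment?",   # health information
    "Anti-vaccine rumours are spreading again.",  # reporting on misinformation
]

for post in sample_posts:
    hits = naive_keyword_filter(post)
    verdict = "BLOCK" if hits else "ALLOW"
    print(f"{verdict:5} {hits} <- {post}")
```

All four sample posts are blocked, because the filter only sees the matching substring and treats the harmless post and the harmful one the same way.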

In 2021, Facebook’s AI recognition system labeled a video featuring Black men as “primates”. Google had also tagged photos of two users of African descent as “gorillas” in 2015. The incident quickly sparked an outcry, and Google immediately said it would fix the problem. More than two years later, however, Wired found that Google’s solution simply prevented any image from being tagged as a gorilla, chimpanzee or monkey. Google subsequently confirmed that it had removed those labels from its search results to fix the error.

Even worse, there is an algorithmic bias that cannot be ignored. Studies have shown that AI is much less accurate at judging posts written by African Americans than posts written by white users. Leading AI models are 1.5 times more likely to label tweets written by African Americans as “offensive” than other tweets. This result is caused not by technical flaws but by the fact that 80% of the training data sample came from the North American white community, and the team members responsible for the training were predominantly well-educated, comfortably-off white men aged 30 to 40.

But prejudice is not just about race. It also deeply affects the global flow of information.

CASE STUDY 1: Threads and the experiment of “safe” moderation

If Twitter has turned into a “Wild West of free speech”, then Meta’s Threads in 2023 looked more like a “science experiment in algorithmic censorship”.

Threads seemed like a fresh start–a social platform with “healthier conversation”. But things quickly took a turn.

Let’s imagine that we have just joined Threads, excited for a fresh, drama-free alternative to Twitter. You post something about sex education and suddenly it is shadow-banned (hidden from public view while appearing normal to the author). Your friend in Turkey shares a meme criticizing the government and suddenly it also disappears without a trace. Meanwhile, your Arabic-speaking colleague cannot even greet people normally because the AI thinks it is hate speech.

Threads promised a “healthier” social media experience, but its AI moderation quickly became overkill:

- Over-filtering (excessive removal of content due to overly sensitive algorithms) blocked even harmless educational content.

- Cultural blind spots (AI errors caused by lack of diverse training data) led to innocent words being flagged as hate speech.

- Political interference caused posts on certain topics, such as the Turkish elections, to disappear.

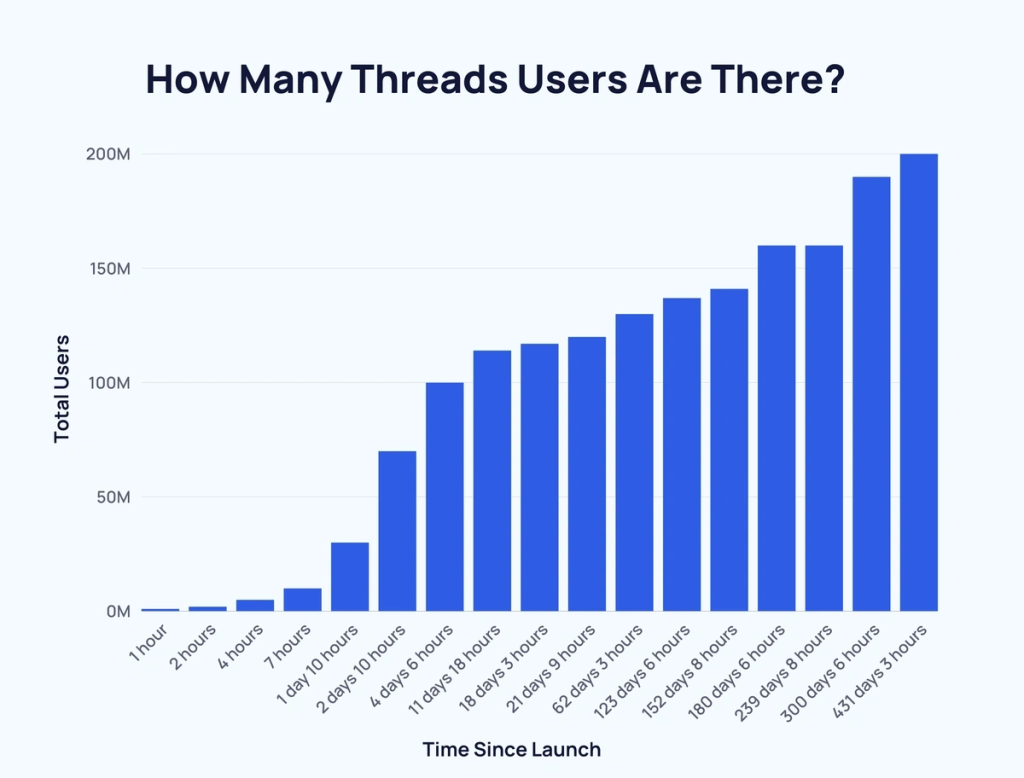

According to Sensor Tower research, Threads’ daily active users fell by more than 80% to just 8 million, an 82% drop from the peak. That’s not just a failed experiment. That’s a warning signal for every platform that over-moderates.

This case shows that overly permissive freedom of speech is harmful, but over-regulation can be equally harmful.

AI content review: Protection or systematic silencing?

AI censorship doesn’t just remove harmful content. It decides who gets to have a voice. Research repeatedly finds that the communities being silenced are minorities.

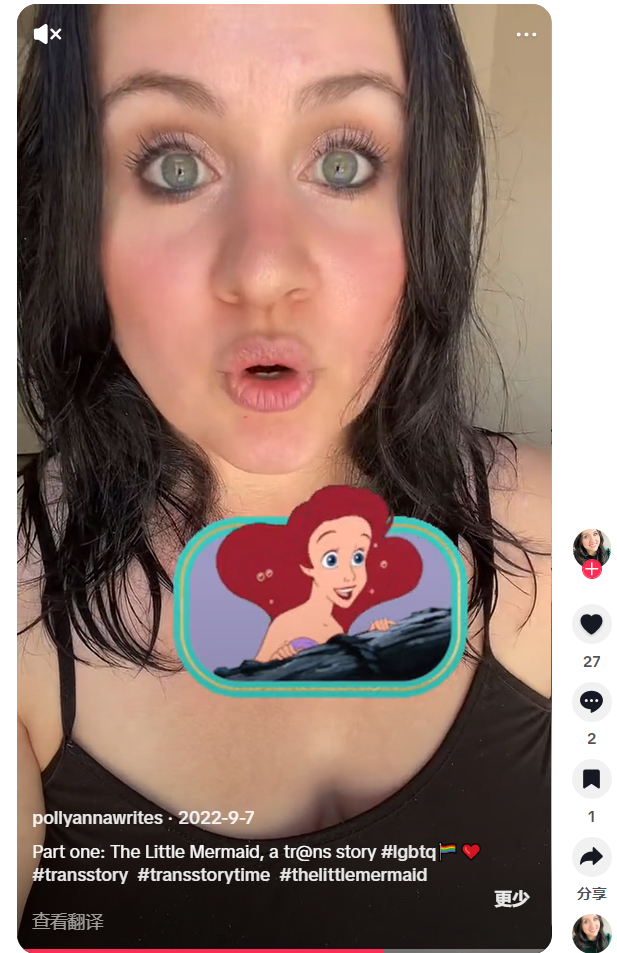

For some groups, having a voice sometimes means having to bypass or fight AI censorship. For example, transgender creators often have to write “trans” as “tr@ns” just to avoid automatic blocking, while Palestinian journalists have to replace “terrorist” with “terrier” to report on air strikes. When a system designed to protect users instead forces them to self-censor just to be heard, AI content review is not just flawed. It is failing the very people who need to speak out most.

Research suggests that public discussion is gradually becoming trapped in its safety zone as time goes on. In other words, cyberspace is becoming more and more closed because of excessive content censorship and regulation, which makes it difficult for people to discuss sensitive and controversial topics.

To avoid being misjudged or penalized, a large number of users have stopped discussing sensitive social issues, and healthcare professionals avoid sharing information about vaccines online. Public online discussion becomes bland and lacks diversity. Algorithmic intervention in sensitive issues such as political controversies, social movements, or individual rights has produced a culture of self-censorship. People fear that any complex or even “righteous” discussion will be flagged by the system and then lead to content being removed or accounts being penalized. This makes our online debates less vibrant and limits the diversity needed for public discourse.

Last but not least, who is making censorship rules?

Most tech companies claim that AI review is objective, but we all know that this is not the case. AI does not judge for itself what is harmful. Its standards come from training data provided by human beings and from review rules that are often in the hands of an elite few.

Large tech companies don’t generally make their AI review standards public. But we can piece together clues through leaked documents, whistle-blower testimonies and research studies. For example, on some platforms human reviewers are mainly from North America and Europe, and their cultural backgrounds influence their understanding of certain words and symbols. This explains why AI often mistakes the Buddhist swastika for a hate symbol and why certain non-English words and symbols are often misjudged as hate speech.

Possible way out

Many social media platforms’ content review algorithms are still closed. Users often don’t know why their posts are deleted or blocked, and they don’t have access to clear explanations or effective complaint channels. This leaves users helpless and makes it difficult for outsiders to monitor the platforms.

Even though the EU’s Digital Services Act (DSA) requires platforms to disclose some of their review standards and provide a channel for users to request manual review, the information is still obscure, lacking in specific details, and hard for ordinary users to understand.

CASE STUDY 2: Reddit’s Moderator Autonomy: A Solution to AI Moderation Failures?

Unlike many other platforms, Reddit has taken a radically different approach. It doesn’t rely on AI or corporate oversight. Instead of a top-down content moderation system, Reddit empowers community moderators to operate with a high degree of autonomy and to shape their communities based on cultural context and user expectations rather than rigid AI rules.

As long as they adhere to Reddit’s core guidelines, Reddit’s moderators have significant freedom in governing their respective spaces. This decentralized model avoids the bias and misjudgment that AI review can show in cross-cultural and multilingual environments.

This system allows for greater flexibility and cultural sensitivity. But it’s far from perfect, and the biggest issue is labor cost.

Reddit’s moderators (volunteer community managers who enforce content rules) collectively contribute 466 hours of unpaid work every day. Added up over a year, that amounts to about $3.4 million in free labor (about 2.8% of Reddit’s annual revenue).
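To get a feel for the scale of these figures, here is a back-of-the-envelope calculation. It assumes that the $3.4 million is an annual total and that moderators work all 365 days of the year. The implied hourly rate and implied revenue are derived here for illustration and are not stated in the source.

```python
# Figures taken from the text above.
HOURS_PER_DAY = 466              # unpaid moderator hours per day
LABOR_VALUE_USD = 3_400_000      # assumed to be an annual total
SHARE_OF_REVENUE = 0.028         # 2.8% of Reddit's annual revenue

# Assumption: moderation happens every day of a 365-day year.
hours_per_year = HOURS_PER_DAY * 365

# Derived values (not in the source, only implied by its numbers).
implied_hourly_rate = LABOR_VALUE_USD / hours_per_year
implied_annual_revenue = LABOR_VALUE_USD / SHARE_OF_REVENUE

print(f"Hours per year:         {hours_per_year:,}")
print(f"Implied hourly rate:    ${implied_hourly_rate:.2f}")
print(f"Implied annual revenue: ${implied_annual_revenue:,.0f}")
```

Under these assumptions the volunteer work comes to about 170,000 hours a year, valued at roughly $20 per hour, against an implied annual revenue of roughly $121 million.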

But it also raises a series of questions. Is a platform that relies on unpaid labor in the long term sustainable? Moderators’ management abilities are uneven, so can they really deliver fair content review?

Moreover, decentralization also brings some challenges. While the system avoids some disadvantages of AI moderation, it introduces potential biases from individual moderators. Some may enforce rules too strictly and others may turn a blind eye to certain behaviors. This inconsistent moderation also damages fairness, leaving users dissatisfied with both over-moderation and selective enforcement.

This decentralized model also weakens the company’s control. Because power is largely in the hands of moderators, it is difficult for Reddit to implement sweeping changes across the platform. When facing government regulation or pressure from advertisers in particular, Reddit has to negotiate with its own moderators. This was particularly evident in the 2023 subreddit protests over API pricing changes.

So, can we say that the Reddit model is the answer to AI moderation failures? I think it is more of a trade-off, with Reddit prioritizing community autonomy over algorithmic control. This makes content governance more diverse and more in line with the different cultural backgrounds of each community. But at the same time, it leaves moderators with a heavy workload and brings the problem of inconsistent moderation, which may affect the user experience in other ways.

In any case, Reddit’s experiment at least proves that AI review is not the only option and that human judgment, despite its flaws, is still a good solution.

What kind of digital public space do we need?

Figure 6: Social Media Platforms

As AI content review becomes a focus of public attention, we will discuss the following two questions.

Who gets to decide what speech is acceptable? Should AI be the final judge?

Instead of treating immediate deletion as the preferred solution, platforms should adopt a “harm prevention” approach that prioritizes context, intent, and dialogue over blanket censorship. AI moderation should not function as an opaque operation that instantly deletes content at the first sign of controversy.

The AI content review mechanism should be designed as a tool that can manage content while also leaving room for diverse discussion.

To ensure fairness, review norms must mandate that algorithm training data be diverse. Platforms should also incorporate minority languages and relatively niche cultural backgrounds instead of defaulting to mainstream standards. As I mentioned before, AI content review systems trained mainly on English data often make mistakes when analyzing non-English content, leading to misunderstandings and incorrect reviews of certain words or cultural expressions.

The most important thing is that users must have the right to know and the right to appeal against the decisions made by artificial intelligence. Many platforms rely entirely on automated systems to remove content and ban accounts but fail to provide clear explanations or effective appeal mechanisms and channels. This leaves users in an awkward and helpless situation. The transparency of artificial intelligence review is not only a moral issue but also a necessary condition for building user trust.

If AI content review is to take part in regulating public discourse, it should at least meet the standards of manual review: it should review the information we create fairly and impartially, and provide suitable explanations when faced with questions and concerns.

Our posts were not deleted because of a simple technical mistake. Platforms use algorithms and censorship systems to limit the topics we can see and to determine which topics may be openly discussed. In the end, they use this to influence how we think and act.

We can’t just act as spectators. Rather, we should actively participate and raise concerns, demanding that the AI content review process become transparent and fair and that users have the right to know and the right to appeal, in order to create an open and diverse platform.

References:

Andrejevic, M. (2019). ‘Automated Culture’, in Automated Media. London: Routledge, pp. 44-72.

Al-Shifa Hospital. (2023).

https://transparency.meta.com/zh-cn/oversight/oversight-board-cases/al-shifa-hospital

Cardillo, A. (2024). Number Of Threads Users.

https://explodingtopics.com/blog/threads-users

Crawford, K. (2021). The Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. New Haven, CT: Yale University Press, pp. 1-21.

Facebook mistakenly labels Black men ‘primates’. (2021).

https://www.aljazeera.com/news/2021/9/4/facebook-mistakenly-labels-black-men-primates

Flew, T. (2021). Regulating Platforms. Cambridge: Polity, pp. 79-86.

Ghaffary, S. (2019). The algorithms that detect hate speech online are biased against black people.

Hao, K. (2021). How Facebook got addicted to spreading misinformation.

https://www.technologyreview.com/2021/03/11/1020600/facebook-responsible-ai-misinformation

Gagliardone, I., Gal, D., Alves, T., & Martinez, G. (2015). Countering online hate speech.

Just, N. & Latzer, M. (2017). ‘Governance by algorithms: reality construction by algorithmic selection on the Internet’, Media, Culture & Society 39(2), pp. 238-258.

Keenan, N. (2023). Navigating the Impact of AI on Social Media: Pros and Cons

https://www.bornsocial.co/post/impact-of-ai-on-social-media

Meta’s Broken Promises. (2023).

McGirt, E., & Vanian, J. (2021). Bias in A.I. is a big, thorny, ethical issue.

https://fortune.com/2021/11/12/bias-ai-ethical-issue-racism-data-technology

Noble, S. U. (2018). ‘A society, searching’, in Algorithms of Oppression: How search engines reinforce racism. New York: New York University Press, pp. 15-63.

Norris, P. (2023). Cancel culture: Heterodox self-censorship or the curious case of the dog-which-didn’t-bark. HKS Working Paper No. RWP23-020.

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4516336

Pasquale, F. (2015). ‘The Need to Know’, in The Black Box Society: the secret algorithms that control money and information. Cambridge: Harvard University Press, pp.1-18.

Rivera, G. (2023). Threads’ user base has plummeted more than 80%. Meta’s app ended July with just 8 million daily active users.

https://www.businessinsider.com/threads-meta-app-decrease-daily-active-users-mark-zuckerberg-2023-8

The Musk Bump: Quantifying the rise in hate speech under Elon Musk. (2022).

Tumas, A. (2024). Exploring the Downside: Disadvantages of AI in Social Media
