Author: Yihe Zhang

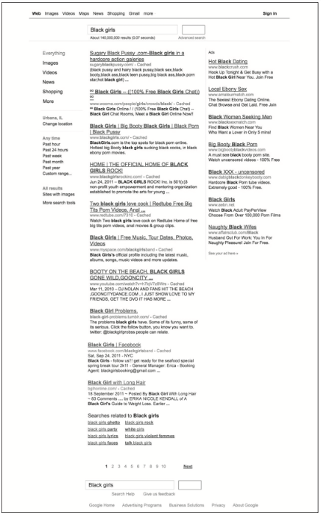

You would expect a search for “Black girls” to bring up information on school achievements, inspiring figures, or youth programs. For years, pornography came up instead. This was not a mistake. It was the algorithm doing exactly what it was told.

It is 2025, and most of us still consider Google an unbiased fountain of information. From medicine, news and dinner recipes to politics, we rely on Google for everything. But the harsh reality is that Google is not merely answering our questions. It is moulding and manipulating our perspective of the world, often in troubling ways. Can you imagine Google tagging an image of a Black couple as ‘gorillas’? A BBC report (2015) covered Google’s apology for exactly this blunder, and over the years the most trusted search engine has been repeatedly criticised for the racist connotations of its results. Cases like this suggest that the algorithms behind search and social media platforms carry racial stereotypes from society into digital spaces and spread them further.

The purpose of this blog isn’t to rant about search engines. In fact, they are useful and even indispensable in the digital world we live in. However, there is one question we dread asking ourselves: can we put our trust in the algorithm? Once you scratch the surface, you quickly uncover something far more troubling than simply awful search results. It becomes glaringly obvious that those results are rooted in a deeply biased and discriminatory system.

Search Isn’t Neutral: It’s a Mirror—and a Megaphone

Neutrality is a myth, particularly in the context of search engines. Google portrays itself as an unbiased librarian, simply sorting through the gazillion pages available on the internet and serving you the best possible answer based on popularity or relevance. But as Safiya Noble argues in her book Algorithms of Oppression (2018), search engines do more than reflect biases. They amplify them. The book highlights the issues faced by people from racially diverse backgrounds and shows how dominant digital media platforms oppress marginalised communities that hold less power.

Take the infamous example: when Noble searched “Black girls” in 2011, the results were almost exclusively pornographic. In comparison, “white girls” returned prom dresses, fashion tips and a slew of selfies. This was not an isolated glitch. It was a peek into how algorithms sort, rank and prioritise information based not on truth or justice, but on commercial logic and user behaviour. It also shows how Black identity is positioned in the digital space: search results reduce it to race-based stereotypes and then reinforce those stereotypes.
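To make the idea of ranking by user behaviour concrete, here is a minimal toy sketch, not Google’s actual method. It assumes a made-up rule: whichever result is ranked first gets 70 new clicks per round and the other gets 30, simply because of its position. The function names, the result labels and all the numbers are hypothetical.

```python
def simulate_click_feedback(initial_clicks, rounds=10, top_clicks=70, lower_clicks=30):
    """Toy model (hypothetical): rank by past clicks, then let position decide new clicks."""
    clicks = dict(initial_clicks)  # copy so the caller's data is not modified
    for _ in range(rounds):
        # Rank results by the clicks they have accumulated so far.
        ranking = sorted(clicks, key=clicks.get, reverse=True)
        # The top-ranked result gets more new clicks just for being on top.
        for position, result in enumerate(ranking):
            clicks[result] += top_clicks if position == 0 else lower_clicks
    return clicks


def share(clicks, result):
    """Fraction of all clicks that went to one result."""
    return clicks[result] / sum(clicks.values())


# Assumed starting point: result_a has only a slight lead over result_b.
start = {"result_a": 55, "result_b": 45}
end = simulate_click_feedback(start)

print(f"result_a share before: {share(start, 'result_a'):.2f}")
print(f"result_a share after:  {share(end, 'result_a'):.2f}")
```

In this toy model, a small early lead grows into a large one without anyone judging which result is more accurate or fair. That is the sense in which a ranking system can amplify a bias rather than merely reflect it.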

Google does give us the internet, but it does so through a pernicious lens. One study notes that algorithmic oppression works through complex, intertwined social relations while gatekeeping gendered hierarchies: the way algorithms rank results helps construct identity categories such as race and gender, and the gatekeepers hold the power. The tagging of a Black couple as gorillas raised exactly these questions about what the technology affords and what it means for society. A podcast by Molly Kaplan makes the point that racial stereotyping is not a glitch in Google. Rather, it replicates the discrimination already present in digital media. Algorithmic oppression is therefore the invisible governor of our digital experience. And once you know this, you cannot unknow it.

Why Does This Take Place? The Finances (and the Data)

One factor we often neglect here is that Google harvests our data. Legal scholar Frank Pasquale explains in The Black Box Society (2015) that companies such as Google and Facebook profit from the data they collect by running it through private algorithms. He points to the economic, political, and social agendas behind the ads suggested to us on social media. The more clicks, the greater the value.

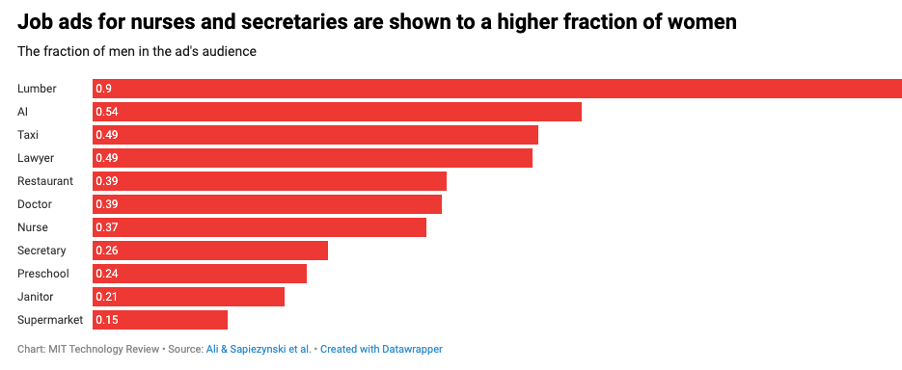

In this context, an NBC report discusses how Facebook’s advertising system promotes racial stereotyping: White women are prioritised when job offers are delivered, while Black women are typically represented in the media as secretaries to White people.

If you think this is not enough to call it algorithmic oppression, I don’t know what would be. Another report, by MIT Technology Review, describes how job advertisements for taxi drivers and janitors are shown disproportionately to minority communities, whereas ads for jobs like nurse and secretary are shown mostly to women.

When search results veer into offensive, shocking, or sensational territory, nothing has gone wrong with the equation. What looks like a bug tends to be a feature. These results are rewarded by a system that optimises for attention, even if they contain misinformation, and even if they prove misleading or damaging. One study places racial capitalism at the core of the oppressive voices in digital media: racially charged voices are optimised and reconstructed to promote a hyper-commercialised space that empowers corporate monopolies. Algorithmic oppression is therefore real. Big media corporations like Google and Facebook exploit marginalised identities, commercialising racialised identities to sustain their profits.

We do not understand how these systems work, nor were we ever in a position to choose these black boxes. Pasquale describes a modern society in which secret algorithms govern reputation, search, and finance. Note that these systems reach well beyond search, serving tasks that range from hiring to loan approvals. As discussed above, the monopoly of media platforms, and their targeting of advertisements according to preconceived notions of which jobs suit which gender and race, clearly indicates how algorithmic culture in digital media dominates marginalised communities in exchange for monetary gain.

The Paradox of AI Algorithms and the Objectivity of Technology

Most people assume that a computer making a decision is impartial and free of human prejudice. However, Kate Crawford makes it clear in her book Atlas of AI that an AI system is only as objective as the data it is based on. The Internet is filled with all forms of bigotry and lies, and because AI algorithms learn from Internet data, they absorb those biases, which then become part of the system.

AI is not simply software. As Kate Crawford points out, it is an entire machinery with a material body of its own. It relies on harvesting data, labour and other resources from the periphery, usually from less advantaged societies. The same applies to features such as Google’s search suggestions and YouTube’s recommendation engine. These do not exist in a bubble devoid of social inputs. They are systems rooted in inequality.

One of Google’s goals is to provide quality content that satisfies viewers, while using viewer feedback to make its platforms better. A cautionary example of this attempt gone wrong is YouTube. YouTube’s recommendation engine has come under fire for pushing people towards more radical content on politics, race, and gender. A user may choose to watch one mainstream anti-feminist video, only to find themselves bombarded with a deluge of anti-feminist and even misogynist content, all thanks to the platform’s engagement-driven algorithms.

A Vox article pays special attention to the connection between AI and humans in order to trace the source of algorithmic bias, a system in which prejudice is built in and used to favour one group over another. The writer argues that systems built on data produced by humans become biased because human prejudice is transferred into the technology.

This raises a significant question about how neutral AI and automated algorithmic systems really are, given how they reinforce racial stereotyping in the cases of Facebook and Google’s search engine discussed above. A sustainable solution is therefore needed to address these issues at the ground level.

An Issue of Power and Not Simply Technology

You may ask: is it not society that is at fault here? If people are clicking on harmful content, does that not mean the public is hungry for it? That is partly true, but far from the whole story.

As Pasquale and Noble argue, the issue is not simply what users engage with. It is also how platforms decide to operate and how they make money from those decisions. Google can step in and do something. It can alter its algorithms to give preference to authoritative and fair content. It does that for health-related searches, and to curb misinformation during elections. But for issues of identity and representation? The track record is much more disturbing.

Marginalised groups also do not have equal access to the digital seats of power. If online visibility is based on clicks, backlinks, and ad revenue, then groups with fewer of these resources are silenced further. A UN report has highlighted that marginalised voices are excluded from the digital public sphere. Thus the algorithm does not only mirror power, it reproduces it. This also challenges the claim that technology alone produces bias. Third parties, such as members of the dominant class and the media platforms themselves, actively engage with and feed the biased attitudes of ordinary people, which makes digital spaces one-dimensional and oppressive for marginalised communities.

From Curiosity to Extremism: A Global View of Algorithmic Oppression

Let us look at one more side: other cultures and their belief systems. Because digital platforms are an extension of society, bias is observable in other countries too, which undercuts the claim that digital media is a free space. A study of Chinese social media (Zhou, 2024) highlights that foreign women are perceived as less threatening than foreign men, a perception rooted in patriarchal dominance. In this way, patriarchal norms suppress women’s identities by stereotyping their digital existence as weak. Stereotyping and racism therefore exist across countries, pose significant challenges for people’s identities, and extend the reach of stereotyping on social media.

What Should We Do?

As individuals, our first responsibility is to raise awareness in our own circles about the racial and gendered stereotyping that dominates our digital space. Ground-level awareness among marginalised people, together with voices that support them, can build pressure for policy reform, which would be more effective at curbing the monopolistic and commercialised behaviour of digital media providers. Since bias in AI reflects human attitudes toward marginalised communities based on race and gender, raising awareness can also challenge people’s existing hatred or bias toward racially diverse communities, and so bring about systemic change in AI, in algorithms, and in their relationship with society.

Conclusion

In conclusion, what the scholars and the reports describe is concerning: marginalised communities face oppression that should be eradicated. Even though the hyper-commercialisation of racial content ensures the profitability of media companies, and can be counted as a success of their AI and automated search processes, it is a severe problem for racially ‘othered’ communities, because it produces derogatory representations of their existence in digital society.

So, from now on, when you search for a controversial topic or anything related to racial identity, always ask yourself: ‘Did I really want to see this content?’ Or is it just a display of the power of automated technology to override what we expect from AI?

References

American Civil Liberties Union. (2021, October 14). Glitch in the Code: Black Girls and Algorithmic Justice. American Civil Liberties Union. https://www.aclu.org/podcast/glitch-in-the-code-black-girls-and-algorithmic-justice

BBC. (2015, July 1). Google apologises for racist blunder. BBC News. https://www.bbc.com/news/technology-33347866

Cakmak, M. C., Agarwal, N., & Oni, R. (2024). The bias beneath: analyzing drift in YouTube’s algorithmic recommendations. Social Network Analysis and Mining, 14(1), 171. https://doi.org/10.1007/s13278-024-01343-5

Govenden, P. (2024). Disrupting the Neoliberal Capitalist Media Agenda in South Africa. tripleC: Communication, Capitalism & Critique. Open Access Journal for a Global Sustainable Information Society, 22(2), 567-591. https://doi.org/10.31269/triplec.v22i2.1497

Hao, K. (2019, April 5). Facebook’s ad-serving algorithm discriminates by gender and race. MIT Technology Review. https://www.technologyreview.com/2019/04/05/1175/facebook-algorithm-discriminates-ai-bias/

Heilweil, R. (2020, February 18). Why algorithms can be racist and sexist. Vox. https://www.vox.com/recode/2020/2/18/21121286/algorithms-bias-discrimination-facial-recognition-transparency

Illing, S. (2018, April 3). How Google and search engines are making us more racist. Vox. https://www.vox.com/2018/4/3/17168256/google-racism-algorithms-technology

Noble, S. U. (2018). A society, searching. In Algorithms of oppression: How search engines reinforce racism (pp. 15-63). New York University Press.

Pasquale, F. (2015). Introduction: The need to know. In The Black Box Society: The Secret Algorithms That Control Money and Information (pp. 1-18). Harvard University Press. http://www.jstor.org/stable/j.ctt13x0hch.3

Reuters. (2019, April 4). Facebook’s ad system pushes stereotypes in housing, jobs, study says. NBC News. https://www.nbcnews.com/tech/social-media/facebook-s-ad-system-pushes-stereotypes-housing-jobs-study-says-n990791

UN Women. (2023, February 24). Power on: How we can supercharge an equitable digital future. UN Women – Headquarters. https://www.unwomen.org/en/news-stories/explainer/2023/02/power-on-how-we-can-supercharge-an-equitable-digital-future

West, S. M. (2017). Raging against the machine: Network gatekeeping and collective action on social media platforms. Media and Communication, 5(3), 28-36. https://doi.org/10.17645/mac.v5i3.989

Zhou, Z. B. (2024). Patriarchal racism: the convergence of anti-blackness and gender tension on Chinese social media. Information, Communication & Society, 27(2), 223-239. https://doi.org/10.1080/1369118X.2023.2193252
