Introduction

In today’s digital world, hate speech is a growing concern around the world. While the Internet used to be seen as a safe space for free speech, it has now become a dangerous place where people may encounter extreme and violent content. The line between protecting our rights and ensuring a safe environment has blurred. One example is Elon Musk’s takeover of Twitter, which triggered a debate about how major platforms should regulate speech. In this blog, we are going to explore the impact of hate speech, the role platforms play, and how we can find a balance between free speech and responsibility.

What is hate speech?

When it comes to hate speech, many people think of haters on the Internet, and that can indeed be part of it. But the definition is much broader: hate speech expresses or encourages hatred towards a specific group of people based on their race, religion, gender, or any other label attached to them (Parekh, 2012, p. 40). As a result, many people receive hatred for no reason of their own making.

Hate speech also has three key features. First, it targets a specific group. Second, it slanders that group by attributing undesirable traits to it; the aim is to blame the group, even if the information is false. Third, it legitimizes hostility towards the group, as we can see in the hostility Jewish people face (Parekh, 2012, p. 40).

Hate speech is not only speech that is violent or emotive towards others (Flew, 2021, p. 116) or that might lead to public violence. It can also be expressed in subtle, even unconscious ways. For example, implicit bias uses neutral-sounding language but reinforces stereotypes or discrimination, such as the claim that some races are born lazy. Sarcastic humor is another serious issue: some people spread hate through jokes, demeaning a group under the guise of humor.

What are the problems and challenges?

Offline, hate speech tends to have a negative impact only within a small area. On digital platforms, however, it can spread rapidly and go viral through social media, potentially leading to real-world violence or social instability. News reports show many cases that can be traced back to online hate speech and that eventually developed into real terrorist attacks.

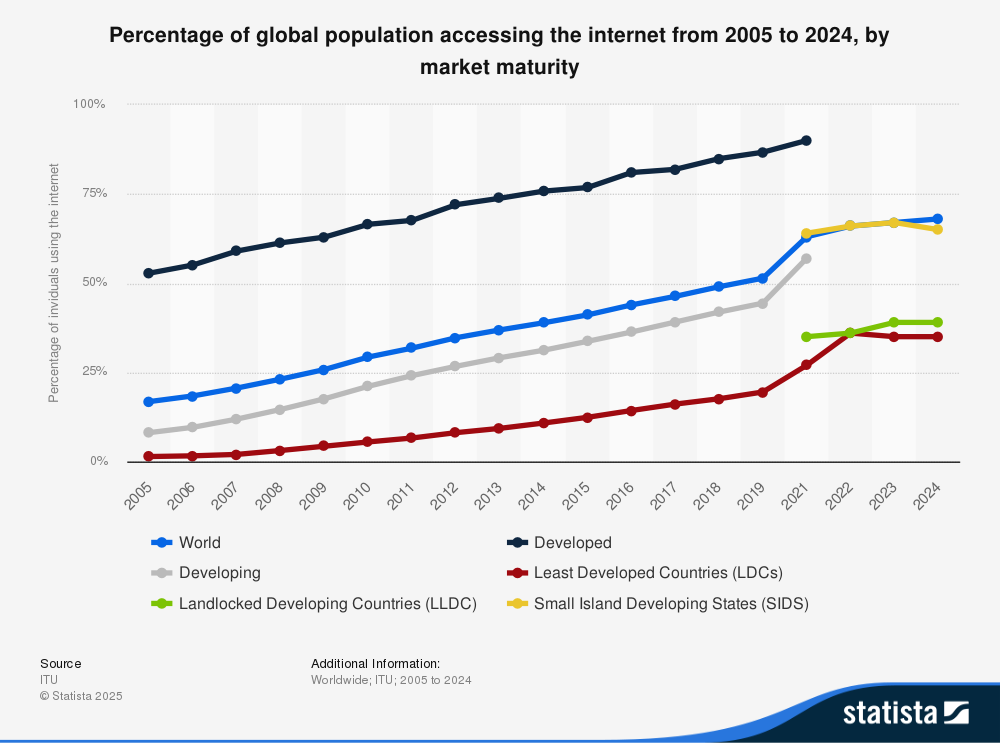

In the early days of the Internet, we could say whatever we wanted. At that time, most people who could afford a computer belonged to the social elite, and many wealthy families and scholars used the Internet to explore issues in great depth. These early users were generally well educated, tried to follow basic rules, and communicated logically. Internet users also coined the word “Netiquette”, combining “Net” and “Etiquette”, and the writer Virginia Shea published a book of that name to teach Internet users how to communicate respectfully when they are not face to face (Shea, 1995). According to Statista, in 2005 over half of Internet users were from developed countries (Statista, 2024).

Back to the topic: when we look at the users who post hate speech, some are trolls who are simply seeking attention. Others do not stop at talking online; once they are encouraged by others, they may actually do something dangerous to society. This is why many social media companies work hard to prevent hate speech on their platforms.

However, in some countries such as the United States, people invoke the right to freedom of speech against these companies, arguing that platforms should let users say whatever they want. As a private company, Twitter has the right to set its content rules and user agreements without negotiation. In some countries, platforms that fail to regulate hate speech face legal liability; Germany, for example, enforces a strict anti-hate-speech law through NetzDG (Bundestag, 2018). We can also notice that YouTube quickly removes videos from its website when it detects copyright issues, but it does not act at the same speed when it comes to toxic content.

The role of social media in the spread of hate speech

Almost all social media companies use algorithmic recommendation systems to make sure the content they push to users is what those users want. This is the main reason why hate speech can spread virally on the Internet.

Hate speech usually has a strong emotional impact and can easily trigger emotional reactions in users, such as anger and fear. Algorithmic recommendation systems usually evaluate users’ interests through their reactions, such as comments, likes, or shares. As a result, these systems tend to promote content that triggers strong reactions, including hate speech and extreme viewpoints (Whittaker, 2021). Even if you comment only to condemn someone, the system will assume that you like this kind of content because you interacted with it, and it will eventually push more content on the same topic. This is why we see so much clickbait now: the more conflict a post contains, the more engagement and profit it generates.
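
The mechanism described above can be illustrated with a small Python sketch. It is only a toy model under stated assumptions: the `Post` class, the `WEIGHTS` values, and the example posts are hypothetical and are not taken from any real platform. The point it shows is that a score built only from likes, comments, and shares cannot tell an approving reaction from a condemning one.

```python
from dataclasses import dataclass

# Hypothetical weights, assumed for illustration only.
WEIGHTS = {"likes": 1.0, "comments": 2.0, "shares": 3.0}


@dataclass
class Post:
    title: str
    likes: int = 0
    comments: int = 0
    shares: int = 0


def engagement_score(post: Post) -> float:
    """Weighted sum of interactions; it ignores whether a reaction is positive or negative."""
    return (WEIGHTS["likes"] * post.likes
            + WEIGHTS["comments"] * post.comments
            + WEIGHTS["shares"] * post.shares)


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


calm = Post("Local library extends opening hours", likes=40, comments=5, shares=2)
inflammatory = Post("Inflammatory post about a minority group", likes=10, comments=30, shares=8)

feed = rank_feed([calm, inflammatory])

# A user who comments only to condemn the post still adds to its score.
score_before = engagement_score(inflammatory)
inflammatory.comments += 1
score_after = engagement_score(inflammatory)

print([p.title for p in feed])
print(score_before, score_after)
```

In this toy example the inflammatory post ranks first even though it has fewer likes, because comments and shares weigh more, and the condemning comment raises its score further.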

Another phenomenon worth noting is the “filter bubble” proposed by Eli Pariser. When an algorithmic recommendation system only shows people what they are already interested in, users remain in a world they already accept (Pariser, 2011, p. 8), which gradually narrows their perspective. In such an enclosed information environment, users only encounter like-minded groups and communities with extreme views, where hate speech is reinforced again and again.

Therefore, regulating hate speech has become a huge and unavoidable issue for social media platforms.

Case study: changes by Elon Musk

In the first 12 hours after Elon Musk took over Twitter in 2022, hate speech on the platform jumped 500% (NBC Bay Area, 2022). As soon as he became the CEO of Twitter, he carried out a series of measures, including layoffs: almost half of the employees were dismissed to reduce costs, many of them responsible for content moderation. Several senior figures were also dismissed, including the chief executive, the chief financial officer, and the head of legal policy, trust, and safety (Milmo et al., 2022).

According to a report in The New York Times, Elon Musk described himself as a “free speech absolutist”, so he reinstated many previously suspended accounts, including accounts classified as belonging to a terror group by the U.S. government and that of Donald Trump, who had been banned for inciting violence.

The result was that, after he bought Twitter, slurs against Black Americans on the platform jumped from an average of 1,282 per day to 3,786 per day, slurs against gay men rose from 2,506 to 3,964 per day, and those against Jews or Judaism increased by more than 61% (Frenkel, 2022). Many sponsors and advertisers worried about their investments, and some pulled out of the platform, costing Twitter a significant amount of revenue.
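
To put the daily counts above on the same scale as the percentage figure, the relative increase can be computed directly. This is just a small arithmetic sketch in Python; the function name `percent_increase` is my own, and the inputs are the daily averages quoted in the paragraph above.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    return (after - before) / before * 100


# Daily averages quoted above (Frenkel, 2022).
black_americans = percent_increase(1282, 3786)
gay_men = percent_increase(2506, 3964)

print(f"Slurs against Black Americans: +{black_americans:.0f}%")  # about +195%
print(f"Slurs against gay men: +{gay_men:.0f}%")                  # about +58%
```

In other words, slurs against Black Americans roughly tripled, while slurs against gay men rose by a little under 60%.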

After Elon Musk took over Twitter, the platform’s content moderation policy changed dramatically, which directly affected the platform’s environment and its social responsibility. Although he advocated free speech and relaxed content moderation, he did not fully consider the relationship between rights and responsibilities, which led to the viral spread of hate speech and extreme content.

First of all, Twitter dismissed many employees and weakened the content moderation team, which led to an immediate rise in inappropriate content. The platform’s moderation rules were relaxed, and many controversial accounts were reinstated, especially those of right-wing figures, which helped hate speech and extreme viewpoints spread widely. Aggressive content targeting minorities increased significantly, making the online environment unsafe.

Secondly, Musk strongly promoted free speech but ignored the social responsibility that comes with running such a large company, especially given how fast information spreads. Without regulation, hate speech and misinformation spread easily. The trust of advertisers and users declines, since nobody wants their ads to appear in a toxic environment, and this affects Twitter’s economic stability.

Besides, Musk also introduced a paid verification system, “Twitter Blue”, which made the platform more commercialized. Although this can bring Twitter more profit in the short term, it creates other problems, such as fake official accounts and fake news. People who do not know that a blue check mark can be bought for only a few dollars may treat it as a sign of authority. The system therefore leaves a huge loophole that makes it easier for malicious accounts to impersonate public figures or institutions, further contributing to the spread of confusion and disinformation.

Finally, the biggest challenge for Twitter is to find the balance between free speech and platform responsibility. When limits on speech are loosened, the risks of hate speech, fake news, and extreme content need to be considered carefully.

Where is the future?

Through this case, we can see that effective regulation and moderation are not just policy tools; they are essential safeguards for our digital public sphere. This raises an important question: how should digital platforms develop in the future?

Regulation by the platforms themselves is the obvious answer, but it is clearly not enough. We can also explore the role and responsibility of users. In an anonymous, fragmented information environment, users are more likely to lose their sense of responsibility and shame, and emotional speech is easily released. We can therefore encourage platforms to design prompts that help users calm down. For example, platforms can teach basic rules and manners when users register an account or join a community. Some platforms already display a notice before users post sensitive content, such as “Are you sure you want to say this?”
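
A pre-post notice like this could be sketched roughly as follows. This is a deliberately naive illustration, not how any real platform detects sensitive content: the `FLAGGED_TERMS` set and both function names are hypothetical placeholders, and a real system would need a far more careful detector than a word list.

```python
from typing import Optional

# Hypothetical placeholder terms, assumed for illustration only.
FLAGGED_TERMS = {"idiot", "stupid"}


def needs_confirmation(draft: str) -> bool:
    """Return True if the draft contains any flagged term (case-insensitive)."""
    words = {word.strip(".,!?").lower() for word in draft.split()}
    return not words.isdisjoint(FLAGGED_TERMS)


def notice_for(draft: str) -> Optional[str]:
    """Return the notice to show before posting, or None if no notice is needed."""
    if needs_confirmation(draft):
        return "Are you sure you want to say this?"
    return None


print(notice_for("You are an idiot!"))
print(notice_for("Nice photo"))
```

The design choice here is that the post is not blocked; the user is only asked to pause and confirm, which keeps the decision in their hands.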

We can also approach the issue from a psychological perspective by analyzing the behavior of people who post hate speech and the logic behind it: why they post it, what motivates them, what they are seeking, and what social impact it has. From this perspective, we can also improve platform recommendation systems, reducing the impact of filter bubbles or pushing positive content when the system detects warning signs during use.

Beyond policy and user responsibility, technology also plays a crucial part in both the spread and the moderation of hate speech. Rapidly developing AI has become a source of hope in many industries, and social media is no exception. An algorithmic moderation system can significantly reduce the work and cost compared to human content moderation. The Guardian recently reported that Meta was sued by moderators in Kenya who had been diagnosed with PTSD after being exposed to extreme content for low pay (Booth, 2024). If AI could take over these jobs, workers in developing countries would no longer have to be treated this way. However, how to define hate speech, and the risk that algorithms themselves help spread it, remain challenges for research.

Globally, different countries have different policies and practices for dealing with hate speech. For example, the European Union passed the Digital Services Act (European Commission, 2022), which asks platforms to take more responsibility, enforce content removal, and enhance transparency. We should also consider how policies and practices across different regions can be balanced on a global scale.

Conclusion

Through the case of Twitter under Elon Musk’s leadership, we can see that a platform can shape the online environment and influence the real world through its technical and policy decisions. Relying on the platform alone is not enough; otherwise, harmful content can spread freely in the name of free speech, creating a toxic space for users. Both platforms and users must take responsibility. Platforms need to develop smarter and more ethical systems, and users need a sense of self-regulation. At the same time, policies like the DSA show that governments also have a role to play. The future of the digital space depends on collaboration between technology, regulation, and human judgment to build a healthy environment.

Reference list

Frenkel, S. (2022, December 2). Hate Speech’s Rise on Twitter Is Unprecedented, Researchers Find. The New York Times. https://www.nytimes.com/2022/12/02/technology/twitter-hate-speech.html

Milmo, D., Jolly, J., Hern, A., & Paul, K. (2022, October 29). Elon Musk completes Twitter takeover amid hate speech concerns. The Guardian. https://www.theguardian.com/technology/2022/oct/28/elon-musk-twitter-hate-speech-concerns-stock-exchange-deal

Statista. (2024, May). Percentage of global population accessing the internet from 2005 to 2024, by market maturity. https://www.statista.com/statistics/209096/share-of-internet-users-worldwide-by-market-maturity/

NBC Bay Area [@NBCBayArea]. (2022, November 1). Hate Speech on Twitter Jumps 500% in First 12 Hours of Elon Musk Takeover: Report [Video]. YouTube. https://www.youtube.com/watch?v=pb8lZ_lOZBQ

Booth, R. (2024, December 19). More than 140 Kenya Facebook moderators diagnosed with severe PTSD. The Guardian. https://www.theguardian.com/media/2024/dec/18/kenya-facebook-moderators-sue-after-diagnoses-of-severe-ptsd

Parekh, B. (2012). Is there a case for banning hate speech? In M. Herz and P. Molnar (eds), The Content and Context of Hate Speech: Rethinking Regulation and Responses (pp. 37–56). Cambridge: Cambridge University Press.

Flew, T. (2021). Regulating Platforms (p. 116). Polity Press.

European Commission. (2022). The Digital Services Act package. https://digital-strategy.ec.europa.eu/en/policies/digital-services-act-package

Bundestag. (2018). Network Enforcement Act Regulatory Fining Guidelines: Guidelines on setting regulatory fines within the scope of the Network Enforcement Act (Netzwerkdurchsetzungsgesetz - NetzDG). https://www.bundesjustizamt.de/SharedDocs/Downloads/DE/NetzDG/Leitlinien_Geldbussen_en.pdf?__blob=publicationFile&v=3&utm

Shea, V. (1995). Netiquette. Utrecht: Bruna Informatica.

Whittaker, J., et al. (2021, June 30). Recommender Systems and the Amplification of Extremist Content. Internet Policy Review, 10(2). https://policyreview.info/articles/analysis/recommender-systems-and-amplification-extremist-content?utm

Pariser, E. (2011). The Filter Bubble: What the Internet Is Hiding from You (p. 8). New York: Penguin Press.
