Two important events occurred in China in 2024. The first was that the 144-hour visa-free transit policy made travel to China a popular topic on YouTube and other social media platforms. A blogger's videos of traveling in China drew far more traffic than the same blogger's videos of traveling in other countries. The comment sections shifted from “Did you take money from the Communist Party of China?” to “see the real China through these videos”. From these videos and comments, I felt the differences in thinking patterns across cultural contexts, and I also came to realize how deeply news media and social platforms shape people’s cognition. As a carrier of voice, the news media is the most important means of social education.

The second was that a large number of “social media refugees” poured into Xiaohongshu, China’s popular social media platform, most of them Americans coming from TikTok. Although one reason is that the U.S. government is trying to ban TikTok in the United States through legislation, I think the root cause is that people who have escaped from “The Truman Show” no longer want to be tools of political struggle. Capital controls the media narrative, and the “information cocoon” created by algorithmic recommendation makes things even harder for people. Many refugees who fled to Xiaohongshu (known in English as Little Red Book) learn about the real China from real Chinese people instead of from the media. Of course, some of the information they find may still be wrong, but to a certain extent this exposes the illusions in many reports by authoritative Western media.

However, fake news is no longer fabricated only in writing. With AI, it can present a world that is both true and false in more diverse forms, such as pictures, video, and audio. In the face of such a huge challenge, how should people identify it? How should government governance be advanced?

Information Is a Weapon

Nowadays, fake news is not only used in political struggles but has also become a weapon of war. For example, in the Russia-Ukraine war, false information is used as a “war weapon”. It includes memes, fake videos, AI-generated content, and distorted facts, and it is spread through social platforms to create chaos and manipulate international public opinion.

Last year, some residents of Kharkiv, Ukraine, received a text message that appeared to come from government officials, asking them to flee before the Russian army surrounded the city. The alert carried the logo of the Ukrainian National Emergency Service Center as well as an infographic of safe escape routes. The local police were the first to identify it as fake news, and the director and other government officials responded promptly. Even so, some panic among residents was inevitable, and people who missed the correction might have fallen into the enemy’s trap. The Ukrainian side said that Russia not only launched fierce attacks through mobile phone text messages but also turned social media into a breeding ground for fake news. On social media alone, the Ukrainian authorities classify more than 2,000 posts on average as false information about the war. At the same time, Moscow accused Ukraine and Western countries of launching a complex information war against Russia. Ukrainian officials admitted that they did use online campaigns to try to raise anti-war sentiment among the Russian people, and that Ukraine’s digital army also relies on exaggeration or selective information to boost morale and to win support from the international community.

It can be seen that both sides are waging an information war, but from their different national positions, each describes its own dissemination of false information as “strategic communication” (Flew, 2021). The result is a large amount of content full of lies, which makes it almost impossible for people to tell what is true and what is false. This has developed at a speed that far outpaces traditional forms of governance and media supervision. Led by state actors, official institutions directly plan the content and use telecommunications technology and social platform algorithms to draw anti-war groups and conspiracy theorists into the discussion.

False information is remarkably effective as a strategic communication weapon. It not only strengthens geopolitical bias but also deepens the confrontation between Russia and the EU and NATO countries that stand behind Ukraine. The war in Ukraine may be one of the most obvious examples of the militarization of fake news, which has become a psychological weapon that confuses global audiences. Today’s social platforms act as amplifiers, drawing on their data infrastructure and AI-driven recommendation mechanisms. These platforms prioritize engagement over accuracy (Crawford, 2021). From this, we can see the failure of platform governance. Platforms are not neutral tools, and their algorithms tend to amplify emotional and sensational content (Flew, 2021). The bigger problem is the collapse of public trust. It is difficult for ordinary readers to tell forged expert reports from real news. In the long run, this fake news will cause the public’s trust in international institutions and authoritative media to drop. In addition, I have doubts about the source report for this case. Russia has also been attacked by information warfare, but in this report I can find only two descriptions of that kind, while most of the report voices Ukraine’s grievances. It is undeniable that media reports are also biased by national position, so most of the time I don’t think authoritative media is equivalent to real news. On the contrary, it may use the most rigorous editing methods to produce the most inflammatory words, so it has become difficult for people to obtain the truth in the digital age.

False “Facts”

Nowadays, AI can generate highly realistic false videos, audio, pictures, and other content that forge people’s remarks, behaviors, or event scenes. The media may fail to identify false or misleading information because of technical limitations, or may deliberately disguise this content as news for political purposes. Deepfakes provide a technical upgrade for fake news. False information has changed from simple text falsification to sensory deception, which makes it significantly more deceptive and more credible (Festré & Garrouste, 2015).

During the 2020 U.S. election, the Washington Post reported on a fake Biden voice recording that called for a boycott of mail-in ballots (Flew, 2021). In 2023, an extremely realistic deepfake video of Trump calling for the violent expulsion of illegal immigrants went viral on TikTok and Telegram. In 2024, Trump and his supporters shared a large number of false videos and images of Harris showing things that never happened in real life.

In recent years, AI technology has advanced explosively. Deepfakes have broken through the limitations of traditional fake news. They no longer rely only on text or pictures of vague origin, but directly stimulate the nervous system through audio-visual content and weaken the audience’s critical thinking about information through emotional reactions.

In addition, open-source AI tools keep lowering the cost of producing false information. Social platform algorithms give priority to controversial topics, and this hot content accelerates the viral spread of fake news.

Traditional identification methods, such as manual verification, struggle to cope with the sheer volume of AI content. AI detection tools for deepfakes are no more advanced than the technology that generates them, so the hot period of a news story may have passed before a fake video is even identified. This poses a big challenge to the mechanisms for identifying fake news.

Fake News and Hate Speech

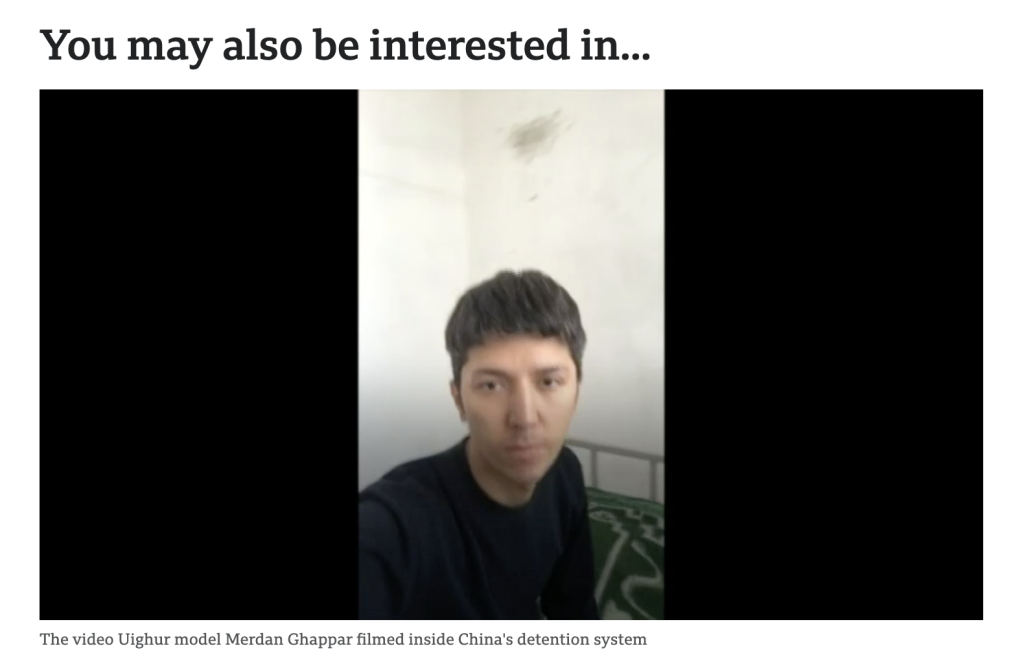

In 2021, some Western countries and institutions accused China of “forced labor” and “genocide” against the Uyghur people in Xinjiang, especially in the production of Xinjiang cotton. These accusations led international brands (such as H&M and Nike) to announce that they would stop using Xinjiang cotton, which subsequently triggered a strong public backlash and boycott in China.

Many reports of “forced labor” in Xinjiang come from unverified videos or so-called reports, which lack authenticity and transparency and have even been accused of political motives. Some media cited data selectively in their reports, ignoring the fact that cotton production in Xinjiang is highly mechanized, which made the coverage even more misleading. Social media platforms have become the main channel for the dissemination of fake news, and algorithms push inflammatory content first, which quickly stirs up public emotions.

As a result, a large amount of hate speech has been generated. Some Western media and politicians have used the Xinjiang cotton incident to incite hostility toward China and strengthen geopolitical confrontation. In China, there is also nationalist sentiment against international brands and Western countries, and some of those remarks are hateful. Fake news incites public sentiment, while hate speech further exacerbates social division and ideological antagonism. The interaction between the two has affected the public’s perception of events and has also had a profound impact on the business decisions of international brands and on diplomatic relations between countries.

Governance Dilemma

Deepfakes have been used as a political weapon to create scandals and divide voters, which has caused the public’s trust in government to collapse to a certain extent. When governments use automated tools for identification, those tools may misjudge content, and excessive censorship may violate users’ freedom of speech and cause greater legal and ethical disputes.

Australia introduced a bill to combat misinformation and disinformation in 2024, but it was widely criticized for potentially restricting freedom of speech. In November of the same year, the government announced the withdrawal of the bill on the grounds that it lacked sufficient support to pass. In 2023, China’s “Regulations on the Administration of Deep Synthesis of Internet Information Services” came into effect, requiring labels on AI-generated content. Platforms, enterprises, or individuals that fail to comply with the regulations are held responsible.

China can adopt a top-down model of strong supervision to regulate online content through legal means, but no one can guarantee that this is a successful governance measure. After all, users on Xiaohongshu still cannot tell which texts or pictures were generated by AI. Australia, for its part, ran into disputes over freedom of speech that blocked its legislation.

The European Union strengthens platform responsibilities through the Digital Services Act (DSA), the United States relies on the First Amendment to avoid content review, and developing countries lack legislative resources. This fragmentation allows cross-border false information to flow freely through regulatory gaps. Rebuilding information governance requires turning fragmented control into collaborative governance through international cooperation. The United Nations Global Digital Compact (2023) proposes to establish a transnational false information database and to require platforms to share data on malicious account networks. However, China, Russia, and other countries opposed the clause on the grounds of “information sovereignty”, which stalled progress.

Humanistic Reflection

The explosion of information, and an environment in which true and false are hard to tell apart, have led to cognitive confusion. When the amount of information exceeds the processing capacity of the human brain, the brain may subconsciously adopt simplification strategies, such as focusing only on the title or on superficial information, and stop analyzing the content in depth. This is the current situation of most people, but as this habit develops, the ability to screen information declines, and it becomes even harder to distinguish real news from fake news.

We have entered the era of traffic supremacy. Trending topics rise and fall like the stock market, and there are always new hot spots covering old topics. In the face of the overwhelming flow of information, people may lose their sensitivity to new information and accept it blindly, without the brain ever activating its ability to distinguish. Especially when false information is mixed with the truth, people’s alertness may gradually weaken, and they may even choose to ignore information altogether to reduce the psychological burden.

When true and false information is intertwined and authenticity cannot be quickly confirmed, people begin to question all sources of information, as if a judge could see no difference between the defendant and the plaintiff. This general sense of distrust makes information screening more complex and affects the judgment of individuals and society.

Excessive exposure to emotional and inflammatory content makes people more easily swayed by emotion and more likely to ignore facts, which directly weakens their critical thinking.

In summary, the government still has a long way to go in dealing with fake news and information manipulation.

References

Crawford, K. (2021). The atlas of AI: Power, politics, and the planetary costs of artificial intelligence. New Haven, CT: Yale University Press, pp. 1–21.

Donovan, J. (2024, February 1). Fake Biden robocall to U.S. voters highlights how easy it is to make deepfakes. The Conversation. Retrieved from The Conversation. https://lsj.com.au/articles/fake-biden-robocall-to-u-s-voters-highlights-how-easy-it-is-to-make-deepfakes/

Evans, J. (2023, December 27). Experts warn of AI’s impact on 2024 US election as deepfakes go mainstream and social media guardrails fade. ABC News. Retrieved from ABC News. https://www.abc.net.au/news/2023-12-27/ai-impact-2024-us-election-deepfakes-social-media-misinformation/103267342?utm_campaign=abc_news_web&utm_content=link&utm_medium=content_shared&utm_source=abc_news_web

Evans, J. (2024, November 24). Laws to regulate misinformation online abandoned. ABC News. Retrieved from ABC News. https://www.abc.net.au/news/2024-11-24/laws-to-regulate-misinformation-online-abandoned/104640488?utm_source=chatgpt.com

Festré, A., & Garrouste, P. (2015). The “economics of attention”: A history of economic thought perspective. Œconomia – History, Methodology, Philosophy, 5(1), 3–36.

Flew, T. (2021). Regulating platforms. Cambridge, UK: Polity Press, 79–91.

Kroeger, A. (2021, March 27). Xinjiang cotton: How do I know if it’s in my jeans? BBC News. Retrieved from BBC News. https://www.bbc.com/news/world-asia-china-56535822

Mak, T., & Temple-Raston, D. (2020, October 1). Where are the deepfakes in this presidential election? NPR Morning Edition. Retrieved from NPR. https://www.npr.org/2020/10/01/918223033/where-are-the-deepfakes-in-this-presidential-election

Zou, M. (2023, June 1). China’s deepfake regulations: Navigating security, misinformation and innovation [Event]. Oxford Martin School & Online. Retrieved from Oxford Martin School. https://www.oxfordmartin.ox.ac.uk/events/chinas-deepfake-regulations?utm_source=chatgpt.com
