I. When ‘God Is Dead’ Meets the Algorithm Era: We Are Losing a Shared Truth

With a flick of a finger while scrolling through their phones, members of Generation Z may experience several cognitive earthquakes at once: a conspiracy-theory video claiming that the Wuhan outbreak coincided with the deployment of 5G base stations[1], an authoritative WHO report concluding that the virus most likely had a natural origin, heated comment-section debates about a British engineer diagnosed with COVID, and even inflammatory tweets alleging that the Notre-Dame fire was Muslim retaliation. These conflicting fragments of ‘truth’ make up the cognitive breakfast of digital natives.

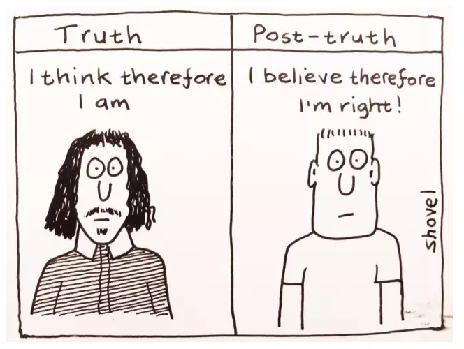

Author R. Keyes named this phenomenon in his book The Post-Truth Era: when emotional appeals replace fact-checking as the primary force shaping public opinion, society falls into an epistemological crisis in which the public loses the ability to distinguish truth from political fiction, undermining the foundations of democracy (Keyes, 2004)[2]. In 2016, the year Oxford Dictionaries named ‘post-truth’ its Word of the Year, 78% of the false claims made during the Brexit referendum came from the Leave campaign, and during the U.S. presidential election Trump’s team averaged 5.3 unverified allegations per day (Oxford Dictionary Editorial Department, 2016)[3]. Together, these events heralded a new era in which emotion outweighs facts.

Traditional media once acted like priests in a church, defining reality for everyone through front-page editorials. In the algorithm age, however, every smartphone screen has become a mini-chapel: Trump’s tweets serve as gospel radio, influencer livestreams resemble revival tents, and family WeChat groups circulate folk versions of ‘scripture’. This dramatic shift toward decentralized communication has dismantled the model described by a classic theory of communication, K. Lewin’s gatekeeper theory (Lewin, 1947)[4]. As traditional media lost their role as information filters, conspiracy theories and ‘alternative facts’ began to proliferate. When short videos claiming that Japan’s nuclear wastewater causes marine life to mutate appear on trending lists alongside scientists’ test reports, and when the rumor that drinking diluted bleach kills COVID-19 spreads wildly through WhatsApp groups, we finally realize that people do not reject the truth outright; they simply prefer to believe ‘facts’ that align with their emotional stance, however flawed those facts may be.

This cognitive revolution is more radical than Nietzsche’s ‘God is dead’. If the collapse of church domes merely brought truth down from its altar, today a billion smartphones are fragmenting cognition through filter bubbles: algorithms tailor information to user preferences, producing what C. Sunstein called the echo chamber effect (Sunstein, 2006)[5]. With more than 60% of Gen Z getting their news from TikTok[6], N. Postman’s warning that we are ‘amusing ourselves to death’ is evolving into ‘dying of emotion’: complex social issues are compressed into 15-second adversarial clips, and the space for rational discussion collapses amid likes and dislikes.

II. Case Study on Trump: When the State Apparatus Becomes a Lie Factory

If ordinary people are struggling to stay afloat in the tidal wave of information, the colossal waves engineered by institutions of power are deliberately sweeping everything away.

Scholar Y. Benkler’s definition of disinformation is as precise as a scalpel: disinformation is the ‘dissemination of explicitly false or misleading information’, connected to larger propaganda strategies ‘designed to manipulate a target population by affecting its beliefs, attitudes, or preferences in order to obtain behaviour compliant with political goals of the propagandist’ (Benkler et al., 2018, pp. 29, 32)[7]. This systematic machinery of deception was on full display in the White House’s meticulously orchestrated controversy over condom funding.

From Fabricated Issues to Political Narratives

A typical operation was the White House’s fabricated international aid scandal: it claimed to have canceled funding for a condom program in Gaza, Palestine, when the aid actually canceled was health funding for Gaza Province in Mozambique. The process follows a fixed template. First, an ordinary policy is distorted into a sensitive issue, for example by forcibly linking health aid to terrorism. Next, far-right media amplify it into a culture-war symbol, so that factual clarifications are drowned out by emotionally charged sharing. Finally, the event is recast as a political achievement. The Trump administration used the same tactic in 2018 with its exaggerated ‘migrant caravan crisis’[8], pushing policy agendas by manufacturing a threat.

Institutionalized Lie Production

At the bureaucratic level, language itself is systematically altered. For example, the U.S. Department of Agriculture once rebranded adjustments to school lunch nutrition standards as a fight against the invasion of ‘woke’ culture, wrapping technical decisions in ideological packaging[9]. In science, data cherry-picking has emerged: health authorities selectively cited a handful of vaccine injury cases from the 1970s (accounting for less than 0.1%) while ignoring CDC safety reports covering 120 million vaccine recipients. The judiciary has likewise devolved into an image battleground: footage of the 2021 Capitol riot, edited to remove scenes of police being assaulted, was circulated under the #PoliticalPrisoners hashtag, leading 52% of Trump supporters to believe that the related convictions were political persecution (Pew Research Center, “Perceptions of Judicial Bias Report,” 2024).

The Complete Collapse of Defense Mechanisms

When those in power manufacture lies themselves, the immune system of a democratic society begins to disintegrate. Judicial credibility plummets: Republicans’ trust in the courts has dropped from 32% to 19%, directly leading parties in 44% of election-related lawsuits to refuse mediation. Media oversight fails as fringe outlets such as The American Truth Network infiltrate the White House press corps, and mainstream media find themselves compelled to cite their reports, creating a pipeline for laundering lies. Technology platforms’ defenses crumble: after Musk acquired Twitter and cut 70% of its content moderation staff, the survival time of political misinformation grew from 3 hours to 27 hours (Stanford Internet Observatory data). This systemic collapse bears out Professor Benkler’s warning: when the state becomes a source of disinformation, society loses its fundamental antibodies against deception.

III. Finding the Compass in the Fog: Rebuilding the Triple Defense of Cognitive Immunity

The world is waging an unprecedented war of cognitive defense, with little success. The EU requires social platforms to label suspicious content as ‘requires verification’, Germany’s Kiel University has developed an AI lie detector with 99% accuracy, and China handles 120,000 rumors a year; yet in practice these seemingly robust digital defenses suffer from two fatal flaws:

The first challenge is AI’s dilemma as a textual inquisitor. When algorithms become the arbiters of truth, machines’ misreadings of human language cause new harms. Meta’s 2023 report[10] revealed that its moderation system wrongly deletes one in every three pieces of genuine information, because AI still cannot grasp distinctly human forms of expression such as irony and metaphor. This mechanized content filtering is creating a chilling effect for the digital age.

The second challenge is the viral spread of emotion. A 2018 MIT study[11] uncovered a harsh reality: false news reaches people about six times faster than true news. Social platforms’ core algorithms remain, in essence, emotion amplifiers. When rumors such as ‘Japan’s nuclear wastewater causes marine life to mutate’ trigger mass panic, even red flags raised by verification systems cannot stop people from frenzied, adrenaline-driven sharing. This exposes a deep-seated conflict between artificial intelligence and human instinct: AI relies on massive data to calculate probabilities (the Kiel University system, for instance, requires millions of labeled samples to reach 99% accuracy), while human communication is perpetually governed by dopamine. When algorithms precisely trigger primal emotions such as fear and anger, rational thought often surrenders the instant one taps ‘Share’.

To survive this war, ordinary people must master new rules. The first step is to break out of the information comfort zone: compare BBC and Fox News headlines on immigration policy, and use DuckDuckGo’s private search to surface content that personalized algorithms would otherwise hide. The second step is to build fact-checking habits: when you encounter a ‘Shocking!’ headline, immediately search for the claim together with the word ‘rumor’, and verify political claims on PolitiFact (which rated Trump’s ‘election fraud’ claims ‘Pants on Fire’). Finally, resist instinctive biases: make ‘I easily believe information that aligns with my views’ your phone wallpaper, and impose a 24-hour delay before sharing opinions you agree with. This cognitive redundancy strategy is consistent with Nobel laureate D. Kahneman’s approach to countering intuitive biases: deliberately exposing yourself to opposing information activates the brain’s rational thinking system (Kahneman et al., 2011)[12].

IV. The Awakening of Players: Standing Firm in the Flood of Information

We will never return to the days when newspaper front pages defined the truth, but we can learn from the survival wisdom of sharks, which have thrived for more than 400 million years without ever changing course because of rumors on trending lists. As ‘amusing ourselves to death’ meets post-truth politics, we should ask ourselves three soul-searching questions before reposting: Does this content make me physically angry? Is its source as traceable as a food expiration date? And, most crucially, who stands to benefit from my repost?

As communication scholar D. McQuail warned, the true threat to democracy is not lies themselves but the public’s collective indifference to the truth (McQuail, 2010)[13]. We may never attain a god’s-eye view, but at the very least we can make sure that the notorious maxim often attributed to Joseph Goebbels, ‘A lie repeated a thousand times becomes the truth’, does not complete its thousandth repetition on our timelines.

- Bruns, A., Harrington, S., & Hurcombe, E. (2020). “Corona? 5G? or both?”: the dynamics of COVID-19/5G conspiracy theories on Facebook. Media International Australia, 177(1), 12–29. https://doi.org/10.1177/1329878X20946113 ↩︎

- Keyes, R. (2004). The Post-Truth Era. St. Martin’s Press. ↩︎

- The New York Times Chinese Edition (纽约时报中文网). (2016). Post-truth | The truth about ‘post-truth’ [in Chinese]. Available at: https://cn.nytimes.com/culture/20161207/tc07wod-post-truth/ ↩︎

- Lewin, K. (1947). Frontiers in Group Dynamics: Concept, Method and Reality in Social Science; Social Equilibria and Social Change. Human Relations, 1(1), 5–41. https://doi.org/10.1177/001872674700100103 ↩︎

- Sunstein, C. R. (2006). Infotopia. Oxford University Press. ↩︎

- TopMarketing. (2024). 2024 U.S. election: Political advertising enters the meme era [in Chinese]. Itopmarketing.com. https://www.itopmarketing.com/info18626 ↩︎

- Ferreira, R. R. (2020). Benkler, Y., Faris, R., & Roberts, H. (2018). Network propaganda: Manipulation, disinformation, and radicalization in American politics. Oxford: Oxford University Press. Mediapolis – Revista de Comunicação, Jornalismo E Espaço Público, 11, 103–105. https://doi.org/10.14195/2183-6019_11_7 ↩︎

- [American Studies] Can Trump’s intimidation stop the advance of the “migrant caravan”? [in Chinese]. (2018, November 29). Sohu.com. https://www.sohu.com/a/278565711_619333 ↩︎

- U.S. adjusts school lunch standards to reduce waste [in Chinese]. (2017). Xinhuanet.com. http://www.xinhuanet.com//world/2017-05/02/c_1120904279.htm ↩︎

- Form 10-K (NASDAQ:META). (2023). https://annualreport.stocklight.com/nasdaq/meta/23578439.pdf ↩︎

- Vosoughi, S., Roy, D., & Aral, S. (2018). The Spread of True and False News Online. Science, 359(6380), 1146–1151. https://www.science.org/doi/10.1126/science.aap9559 ↩︎

- Kahneman, D., Lovallo, D., & Sibony, O. (2011). Before you make that big decision. Harvard Business Review, 89(6), 50–60. ↩︎

- Pisani, J. R. (1985). Mass Communication Theory. McQuail, Denis. London: Sage Publications, 1983. 245 Pp. $25 (h), $12.50 (P). Journal of Advertising, 14(4), 62–63. https://doi.org/10.1080/00913367.1985.10672976 ↩︎
