Is what you see really what you see?

Imagine a video on social media: a well-known scientist is speaking, with a formal laboratory in the background. Her “voice” and body language make the speech seem authentic and credible. The video, however, is a deepfake generated by AI. More frightening still, AI lets ordinary people create and spread false messages at scale and at low cost.

Sometimes information does not even need to be fabricated; selective editing is enough. Take the video of US President Biden appearing distracted at the 2024 G7 summit. In the clip Biden looks absent-minded, but he was in fact looking at a skydiver who was landing nearby. Most of the comments under the video accuse Biden of being a president who could lose his judgment at any moment. This “out of context” technique does not alter the footage itself, yet it can still steer public opinion in a misleading direction.

These two examples reflect a growing crisis of trust. False information is no longer just a sensational headline or an unscientific rumor; it now passes as a more confusing kind of ‘real information’. AI deepfakes, maliciously edited videos and pictures, misleading headlines, fragmentary data citations and other new forms of false information have gradually become part of the digital environment.

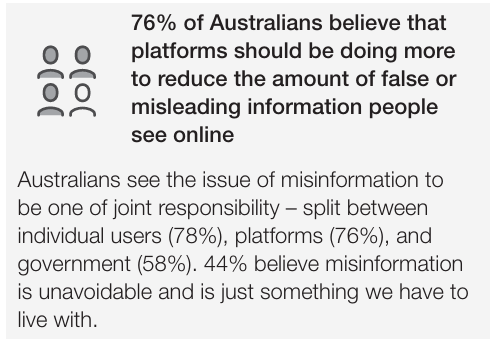

The real question is how we should deal with it. Through platform governance, or through policy making and enforcement? By relying on the public, or on the authorities? False information has become harder to govern in a big data environment: it caters to people’s tastes and shows them what they want to see, which makes it harder for them to tell truth from falsehood.

Misinformation, Disinformation and Fake News

False information keeps evolving as the digital society develops. It is no longer limited to rumors or news stories, but takes on forms that are harder to identify. AI deepfakes make fabrication easier, and misleading headlines, out-of-context video clips, old news presented as current, and other kinds of false content fill the digital community with an atmosphere of falsehood. As Terry Flew notes:

It is increasingly common to refer to ‘misinformation’ and ‘disinformation’ rather than to ‘fake news’. Misinformation is defined as ‘communication of false information without intent to deceive, manipulate, or otherwise obtain an outcome’. By contrast, disinformation is defined as ‘dissemination of explicitly false or misleading information’ and connected to larger propaganda strategies ‘designed to manipulate a target population by affecting its beliefs, attitudes, or preferences in order to obtain behaviour compliant with political goals of the propagandist’.

In reality, disinformation and misinformation are intertwined: once disinformation has been intentionally created by an organization or individual, it quickly turns into large-scale misinformation when ordinary users believe it and share it widely.

So why does this information have such a strong ability to spread?

As the key intermediary, the platform promotes content posted by users according to quantitative measures such as click-through rate, number of comments and number of reposts. In other words, once enough people view a user’s post, the platform pushes that post to even more people.

AI Fake News at War

In October 2023, armed conflict broke out between Hamas and Israel. In the following days, a large volume of news reports, pictures and videos about the conflict was posted online, including visually shocking footage of attacks and explosions.

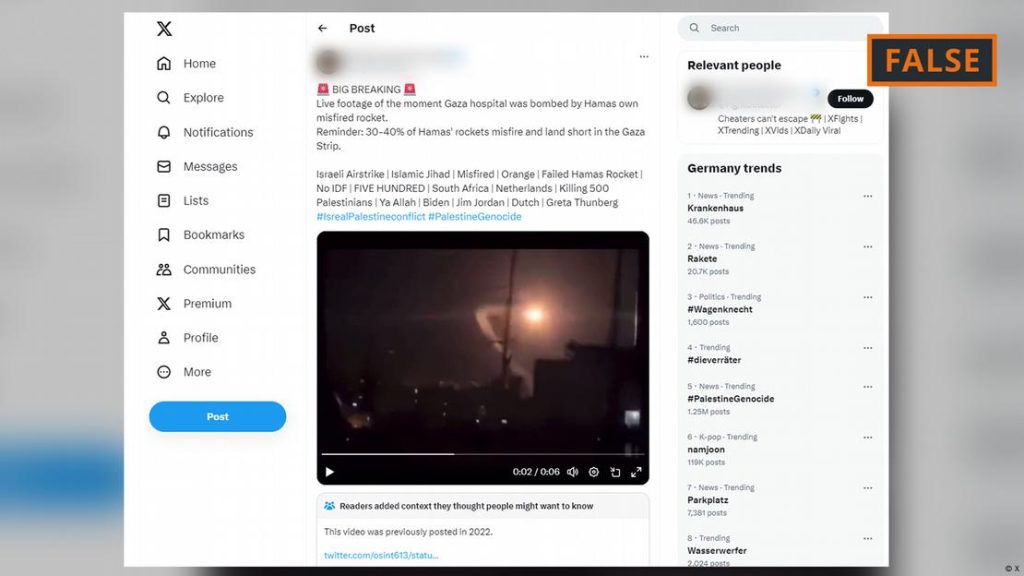

Among this information, one video spread online very quickly; it purported to show an Israeli military airstrike on a hospital. It set off an immediate storm of international public opinion, and accusations online kept coming. However, a subsequent investigation by fact-checkers found that the video had been shot in Syria at an earlier time and then repackaged and edited to pass as footage of the Israeli airstrike. Similar content appeared on X (formerly Twitter), such as verified accounts posting supposed official statements about the Israeli military, and much of it had been synthesized with AI deepfake tools. Before this information was debunked, it had already caused an uproar online, quickly filling the information vacuum.

Some people have turned AI into a digital weapon. This false content stirs people’s emotions by appealing to morality and feeling. In the early stage of a global crisis, it can spread rapidly with the help of the public’s emotional response and the platform’s algorithms. Even if officials later debunk the rumors, the first impression has already taken hold in the public’s mind.

From the platform’s perspective, X is barely able to cope. Its subscription mechanism allows anyone to get a verified account by paying eight dollars a month, and the platform also boosts posts from these accounts. This means that users can post at will and bad actors can profit from false information. At the same time, looser content moderation has let false information circulate even more freely on X. According to NewsGuard, a company that rates the reliability of online news sources, nearly three-quarters of the 250 most popular posts on X promoting false or unsubstantiated narratives about the conflict were posted by accounts with subscription checkmarks.

In other words, AI brings more than fabrication. Those who use it can also exploit platform rules to their advantage and treat online public opinion as a new battlefield. If platform governance cannot keep up with the speed of AI, the harm caused by false information online may turn into real-world harm.

Hidden manipulation of out-of-context quotes

Unlike AI deepfakes, out-of-context quotations are taken from real material. This type of information is sometimes more deceptive because the excerpt itself is genuine, yet the fragment cannot convey the meaning of the original and may even express the opposite. This is disinformation by distortion.

In April 2024, US President Biden appeared to shake hands with thin air after a campaign speech. A large number of edited videos then appeared on social media, with titles along the lines of “Biden shakes hands with a ghost” or with personal attacks. These edited videos and pictures were viewed and shared widely. The comment sections were shaped by the framing as well, with most comments mocking or questioning the president’s mental state.

In the full version of the video, however, Biden is gesturing to audience members outside the frame, not to empty air. The clip itself is real, but the choice of cut points and a leading title prime the audience to misread it. Nothing in the footage is altered, yet the audience is still misled.

This is also what makes out-of-context misinformation so hard to identify: it requires no change to the content, only editing and a striking title that stirs the audience’s emotions. With the help of platform algorithms, this type of content spreads easily.

What is more complicated is that this type of misinformation may also come from ordinary users who clip a provocative fragment simply to attract “traffic” to themselves. Traditional platform governance tools such as fact-checking or content removal struggle to handle this material quickly enough to stop it from spreading.

False information does not always start with fabrication; it can also start from the facts themselves. Out-of-context quotation, misleading editing and emotional packaging can easily pull the audience into a storm of public opinion, which makes this one of the most challenging problems in modern information governance.

Is the platform a helper of false information?

In the exposure logic of social platforms, truth or falsehood is never the priority; popularity is. Only content that is attractive enough to users is recommended to more people. The platform uses multiple quantitative measures, such as views, click-through rate, viewing time, shares, comments and reposts, to put the highest-traffic content in front of the audience first.

Emotional and conflict-laden content is more likely to attract attention. Whether it is an AI deepfake or an out-of-context fragment, as long as it attracts user attention, the platform algorithm will help it spread. The more users click and engage, the more homepages the content appears on.
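To make this logic concrete, here is a minimal Python sketch of an engagement-only ranker. It is an illustration, not any real platform's algorithm: the `Post` fields, the weights in `WEIGHTS`, and the two sample posts are all hypothetical. The point it shows is simply that a score built only from clicks, watch time, shares and comments never looks at whether the content is accurate.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    views: int
    clicks: int
    avg_watch_seconds: float
    shares: int
    comments: int
    is_accurate: bool  # stored for illustration; the ranker never reads it


# Hypothetical weights, chosen only for this example.
WEIGHTS = {"ctr": 100.0, "watch": 1.0, "shares": 0.05, "comments": 0.02}


def engagement_score(post: Post) -> float:
    """Score a post from engagement signals alone."""
    ctr = post.clicks / post.views if post.views else 0.0
    return (
        WEIGHTS["ctr"] * ctr
        + WEIGHTS["watch"] * post.avg_watch_seconds
        + WEIGHTS["shares"] * post.shares
        + WEIGHTS["comments"] * post.comments
    )


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


# Two made-up posts: a misleading clip with heavy engagement
# and an accurate fact-check with modest engagement.
clip = Post("Out-of-context clip", 10_000, 2_500, 20.0, 900, 1_200, False)
check = Post("Fact-check of the clip", 10_000, 400, 35.0, 60, 45, True)

for post in rank_feed([check, clip]):
    print(post.title, round(engagement_score(post), 1))
```

In this toy setup the misleading clip is ranked above the fact-check, and changing `is_accurate` would not move either post, because the score has no term for accuracy.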

Looking at how platform algorithms work, however, the platform does not deliberately spread false information; it lacks the ability to judge the authenticity of information immediately. By the time information has spread across the global network, fact-checking may have only just begun. Even if an official correction later proves the information false, few people pay attention to it, because their first impression of the original content has already formed.

The platform’s algorithms do not prevent the spread of false information; they help it go viral, which makes the platform an inadvertent amplifier of misinformation.

Governance dilemma: When will policies catch up?

The harm of false information is immediate, but policy is often reactive. One of the biggest dilemmas of digital governance today is that policy making lags behind.

In 2022, the EU adopted the Digital Services Act to regulate online intermediaries and platforms and to curb illegal and harmful activity online, including the spread of false information. The EU has also introduced the AI Act, a comprehensive framework for regulating artificial intelligence. These policies show that authorities are working on countermeasures to false information.

However, implementing these policies still faces many challenges. First, platforms are transnational, and EU law is very difficult to enforce across different countries. Second, policy making and revision cannot keep up with the speed of information dissemination: before legislation is passed, false information has already spread around the world.

In addition, freedom of speech must be considered when governing false content. If policy is too harsh, it will provoke public dissatisfaction and distrust of the platform. Platforms themselves are also unwilling to give up user traffic. A bridge of trust needs to be built among the public, platforms and government to stabilize the information environment.

Future: Collaborative Governance and Public Responsibility

In the current information ecosystem, it remains difficult for government policy and platform moderation to achieve fast and accurate governance. Future governance requires collaboration among multiple parties: governments formulate transparent and fair policies; platforms take responsibility for moderation and update their algorithms; technology companies strengthen the detection and labeling of AI deepfakes; and the public keeps a clear head amid the noise of public opinion. The public is not only a receiver of information but also a disseminator of it, so strengthening each user’s information literacy also helps digital information to be governed quickly.

Information governance relies not only on policies and tools but, more importantly, on the attitude of each participant. Cultivating the ability to judge what is true and a sense of responsibility for the public sphere is also a top priority.

Conclusion: Rebuilding trust in the truth

Today’s false information no longer looks like false information; it wears disguises that catch people off guard. Deepfakes, out-of-context editing and platform algorithms are reshaping our understanding of modern digital society.

The purpose of governance is not only to clean up the online environment but, more importantly, to help people judge, think and choose in such a vast ocean of information. Only by establishing trusted channels of communication among the parties involved can governance improve.
