Have you ever had this experience: you originally intended to “scroll through TikTok for five minutes,” but before you realized it, an hour had silently slipped away? Or perhaps, when you were feeling down, bored, or anxious, the app delivered a video that matched your mood perfectly, leaving you surprised or even slightly uncomfortable? This strange feeling of “how does it understand me so well” isn’t magic. It is the work of algorithms operating precisely behind the scenes.

In fact, TikTok’s algorithmic functions have long since gone beyond mere entertainment. Social media platforms use technologies such as emotional analysis and predictive data analytics to directly influence user preferences (Andrejevic, 2013a, as cited in Flew, 2021). The platform does not just provide content; it also shapes how we perceive the world and modifies our behavioral patterns.

What makes these algorithmic systems particularly powerful is their adaptive learning capability. Every scroll, pause, and reply becomes valuable feedback that refines the platform’s understanding of not just what content you enjoy, but when and how you prefer to consume it. This temporal dimension allows TikTok to identify your daily patterns. The algorithm doesn’t just track your explicit preferences; it also maps your emotional vulnerabilities, identifying when you’re most susceptible to specific content themes.

Are You Scrolling or Being Scrolled?

Remember when social media was about following friends and family? Back then, information flows were built by our own hands: who to follow, what to see, what to block—users had relatively clear control. TikTok, through its “For You Page” (FYP), has completely subverted this model. Unlike Instagram or Facebook, its homepage isn’t a content stream composed of accounts you follow but relies on a precise algorithmic recommendation mechanism that has silently taken control of this power.

This approach means that the information users encounter depends on what the platform pushes rather than on personal choice. Moreover, the goal isn’t purely to entertain but to generate profit by collecting user behavior data to build commercial profiles.

Think about what this means: in less time than it takes to watch a movie, TikTok has already decided what kind of person you are, what you believe, and what you should continue to see. Even though these processes happen silently, they can substantially change how we think and make decisions.

The Real Purpose Behind the Screen: You Are the Product

Although TikTok has never publicly disclosed the complete operational mechanism of its recommendation engine, outsiders have gained a relatively deep understanding of its algorithmic logic through academic research, data leaks, and reverse engineering. The system does not only suggest content based on users’ “likes”; it also tracks every small user action on the platform to construct a detailed “behavioral portrait.”

Factors that TikTok’s algorithm focuses on include:

- Interaction metrics: The TikTok algorithm examines video watch durations along with user interactions such as likes and comments to make evaluations.

- Content features: The algorithm examines content features like tags and background music styles from videos you have interacted with.

- Completion rate: The algorithm pays attention to whether users finish watching videos or just quickly swipe past them.

- User data: The algorithm considers user demographic information including geographic location and device type along with language settings.

(Newberry, 2025)
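
As a rough illustration of how signals like these could be combined, the sketch below folds completion rate, likes, comments, and tag overlap into a single score. It is not TikTok’s actual formula, which has not been disclosed: the `Interaction` fields, the `WEIGHTS` values, and the `engagement_score` function are all hypothetical, and user data such as location or device type is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """One viewing event. All fields are illustrative, not TikTok's schema."""
    watch_seconds: float
    video_seconds: float
    liked: bool
    commented: bool
    tag_overlap: float  # 0..1 share of tags matching the user's past interests


# Hypothetical weights, chosen only for illustration.
WEIGHTS = {"completion": 0.5, "like": 0.2, "comment": 0.2, "tags": 0.1}


def engagement_score(event: Interaction) -> float:
    """Combine the signals into a single score between 0 and 1."""
    completion = min(event.watch_seconds / event.video_seconds, 1.0)
    return (
        WEIGHTS["completion"] * completion
        + WEIGHTS["like"] * float(event.liked)
        + WEIGHTS["comment"] * float(event.commented)
        + WEIGHTS["tags"] * event.tag_overlap
    )


watched_fully = Interaction(30.0, 30.0, liked=True, commented=False, tag_overlap=0.5)
swiped_away = Interaction(2.0, 30.0, liked=False, commented=False, tag_overlap=0.5)

# A fully watched, liked video outscores one that was swiped past.
print(engagement_score(watched_fully) > engagement_score(swiped_away))  # True
```

Even in this toy version, a quick swipe carries information: the low completion rate lowers the score without the user ever pressing a button.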

Data collection operates continuously and gathers very detailed information, yet most users have little visibility into the process. They remain unaware of its full extent because the system silently records secondary behaviors such as mouse movement trajectories and page dwell time. The platform’s privacy policies typically use vague wording like “improving user experience” to obscure the actual commercial purpose of data collection.

Opacity is also reflected in the “black box” nature of algorithmic decisions. Platforms list recommendation algorithm parameters as trade secrets, making it difficult even for content reviewers to grasp specific standards. Zuboff (2019, as cited in Flew, 2021) points out that this opacity allows platforms to construct a “controllable emotional economy” that covertly guides user behavior. Srnicek’s research (2017, as cited in Flew, 2021) also shows that platforms only provide “algorithmic matching promises” to advertisers rather than transparent data usage mechanisms.

This operational model results in vague and changeable content review standards and creates a serious information asymmetry: users passively provide data without understanding the rules that govern their experience. It is precisely this opacity that leads to the power imbalance between platforms and users:

From an economic perspective, platforms monopolize the commercial value of user data, gaining benefits through precise advertising and personalized content pushing, while users cannot benefit from it; from a cognitive perspective, platforms use the rhetoric of “personalized service” to make surveillance seem justified, creating the illusion of “voluntarily surrendering data,” thereby further consolidating this unequal relationship.

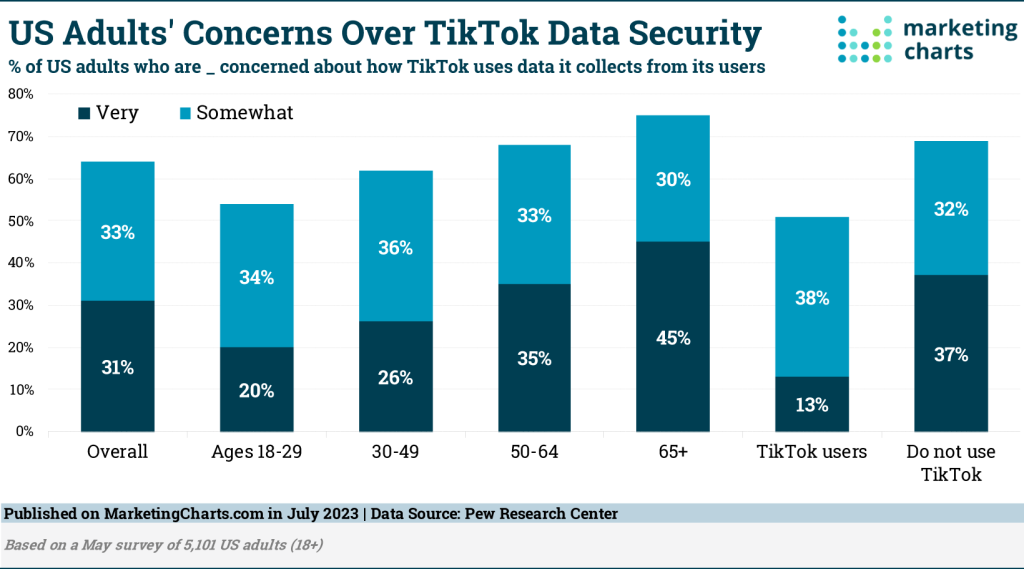

Moreover, this systemic opacity has triggered significant social anxiety. A 2023 Pew Research Center study (as cited in Marketing Charts, 2023) showed that 64% of American adults expressed concern about how TikTok uses the data it collects, with 31% “very concerned” and 33% “somewhat concerned.”

Concern peaked at 75% among adults aged 65 and above, whereas only 54% of those aged 18 to 29 expressed concern. In this survey, older respondents reported greater data security concerns than younger ones. The pattern suggests that trust in new platforms differs across generations, a difference that feeds wider discussions about data privacy.

Zuboff’s 2019 analysis explains that surveillance capitalism turns collected user data into monetizable digital assets and profits from concealed data collection. This exploitation relies on users’ “voluntary ignorance,” as the TikTok case shows: more than half of those surveyed express data security concerns, yet anyone who wants to use the platform must still agree to its terms, which demonstrates the coercive nature of power structures in the digital age.

Filter Bubbles: Your Personal Reality Tunnel

A filter bubble forms when algorithms select content based on previous user behavior, which limits exposure to diverse information. Recommendation systems on digital platforms analyze engagement data and present content that users are likely to interact with. The result is repetitive suggestions that strengthen existing preferences. Users get locked into a self-perpetuating cycle: they see viewpoints that confirm what they already believe while other information is filtered out, which intensifies cognitive bias and strengthens group polarization.

On TikTok, this phenomenon occurs particularly fast. The platform uses early user activity data to quickly steer individuals onto a distinct “content track.” The algorithm identifies which content captures your attention and then delivers similar content frequently, reinforcing interests that may have started as accidental and building persistent information barriers.
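
The reinforcement loop described here can be sketched with a small deterministic simulation. This is a toy model under stated assumptions, not a description of TikTok’s system: the `simulate_feed` function, the multiplicative update rule, the `learning_rate` value, and the topic names are all hypothetical. The feed starts out evenly split, and each round a topic’s share grows in proportion to how often the user engages with it.

```python
def simulate_feed(engagement, rounds=50, learning_rate=0.5):
    """Return the feed's topic shares before and after each round.

    engagement maps topic -> probability that the user engages with it.
    Each round, every share is multiplied by (1 + learning_rate * engagement)
    and the shares are renormalized so they sum to 1.
    """
    topics = list(engagement)
    shares = {t: 1.0 / len(topics) for t in topics}
    history = [dict(shares)]
    for _ in range(rounds):
        boosted = {
            t: shares[t] * (1.0 + learning_rate * engagement[t]) for t in topics
        }
        total = sum(boosted.values())
        shares = {t: boosted[t] / total for t in topics}
        history.append(dict(shares))
    return history


# A modest, possibly accidental, preference for one topic.
user = {"cooking": 0.40, "news": 0.30, "sports": 0.30, "music": 0.30}
history = simulate_feed(user)

print("cooking share at start:", round(history[0]["cooking"], 2))
print("cooking share at end:  ", round(history[-1]["cooking"], 2))
```

Under these assumptions, a small difference in engagement compounds round after round until one topic takes up well over half of the feed, which is the narrowing effect the filter bubble argument describes.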

This isn’t just theoretical extrapolation. Ibrahim et al. (2025) ran 323 TikTok algorithm audit experiments in three U.S. states during the 2024 presidential race and found that the platform quickly prioritizes partisan video content following user interactions. The recommendations were also skewed: Republican accounts received 11.8% more same-party content than Democratic accounts did, while Democratic accounts saw 7.5% more content from the opposite party, indicating an uneven distribution in political recommendations.

Emotional Content and Psychological Impact

TikTok’s algorithm not only creates filter bubbles but also hides a more covert and more dangerous tendency: it systematically amplifies extremely emotional content. In the attention economy, evoking emotional responses from users is the most effective means of maintaining engagement.

The algorithmic structure of platforms aims to boost user interaction, yet it poses severe mental health risks for adolescent users. According to the American Psychological Association (2024), adolescents react more strongly to intense online content, and prolonged exposure can harm their cognitive development and emotional regulation. This lines up with TikTok’s mechanism of amplifying extreme emotional content and constitutes a systemic risk.

With monthly active users exceeding 1.5 billion in 2024, TikTok has become one of the main channels through which Generation Z obtains information. However, its recommendation mechanism poses unique risks to adolescents. The Center for Countering Digital Hate (CCDH, 2022) found that new users receive substantial amounts of eating disorder and self-harm content immediately after registration, with related videos appearing every 39 seconds on average. Continuous exposure to such intense emotional content increases adolescents’ mental health challenges.

More seriously, TikTok’s “Blackout Challenge” has led to multiple minors dying from asphyxiation after imitating it, prompting public scrutiny of the platform’s recommendation mechanism. Victims’ families said that the algorithm pushed this dangerous content to their children based on their interaction records (Hall, 2025). This reveals a deeper problem: when user engagement is the system’s single optimization target, it may promote dangerous content, which creates significant risks for adolescent users.

What’s particularly alarming is how these algorithmic systems exploit adolescents’ developmental vulnerabilities. During this critical neurological development period, teenagers have heightened sensitivity to social stimuli and peer validation while simultaneously possessing underdeveloped impulse control mechanisms. TikTok’s algorithm exploits users’ psychological tendencies through content that creates intense emotional reactions which boost engagement rates. Millions of developing minds become subjects of unregulated psychological study through targeted exploitation that operates without clear rules or effective age verification methods.

Regaining Control: What Can We Do?

However complex and influential social media algorithms are, they cannot rob us of our autonomy entirely. Specific strategies can help us take back much of the control over our digital lives. Here are five effective response methods:

First, cultivate algorithm awareness. Set time limits for applications and remain vigilant while browsing: when you meet emotionally charged content, ask whether it provides real value or was surfaced by the algorithm to trigger an emotional response.

Second, actively expand information dimensions. Investigate different content areas beyond your typical interests and subscribe to creators who present unique views. When people choose what information to consume purposefully, they break through algorithm-generated content filters and build stronger analytical thinking skills.

Third, use platform control tools to their full potential. The “not interested” feature is the most straightforward way for users to correct the algorithm. Timely feedback about excessive or repeated content teaches the recommendation system to refine its suggestions.
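
To make the idea of corrective feedback concrete, here is a minimal sketch of how a “not interested” signal might shift a feed. How TikTok actually processes this signal is not public, so the `mark_not_interested` function, the `penalty` factor, and the topic shares below are assumptions made purely for illustration.

```python
def mark_not_interested(shares, topic, penalty=0.5):
    """Scale one topic's share by `penalty`, then renormalize to sum to 1."""
    if topic not in shares:
        raise KeyError(topic)
    adjusted = dict(shares)  # leave the caller's mapping untouched
    adjusted[topic] *= penalty
    total = sum(adjusted.values())
    return {t: value / total for t, value in adjusted.items()}


# Hypothetical feed that has drifted toward a single theme.
feed = {"diet": 0.6, "news": 0.2, "music": 0.2}
after = mark_not_interested(feed, "diet")

print({topic: round(share, 2) for topic, share in after.items()})
```

In this toy model each tap shrinks the unwanted topic and hands its share to everything else, so repeated feedback has a cumulative effect.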

Fourth, establish regular “digital detox” mechanisms. Schedule a monthly 24- to 48-hour period free from digital devices, which helps decrease algorithm dependence and restore your natural attention span.

Fifth, participate in digital rights advocacy. Advocate for platforms to make basic algorithm rules public and for the formation of independent oversight organizations. Tech companies are more likely to improve their algorithmic ethics when users demand it in large numbers.

These strategies aim to transform passive, algorithm-controlled consumers into active participants who exercise digital independence, helping us break through platform algorithm control and reshape the power dynamics between people and technology.

Community-based resistance is a vital counterweight to the dominant power of algorithmic control. Local discussion groups that exchange knowledge collectively help individuals build stronger critical media literacy.

To systematically disrupt recommendation patterns, families and friend circles can adopt regular “algorithm interruption practices,” such as deliberately sharing content that runs against what the algorithm would predict. Educational institutions need to design digital citizenship courses that teach students how algorithmic manipulation works and how to maintain their autonomy in spaces governed by algorithms.

Reclaiming Control Over Digital Life

The TikTok algorithm exemplifies how technology can reshape our perception of reality: invisible forces shape what content we consume, influence our political perspectives, and regulate our emotional responses. Like Clever Hans, the horse that in the early 20th century seemed to solve arithmetic problems but was in fact responding to his questioners’ unconscious cues (Crawford, 2021), contemporary algorithms “learn” from our unconscious feedback, such as dwell time. Once we realize that every frown or light laugh might become training data, we can become more careful about managing our digital body language.

As citizens of the digital age, we cannot remain passive recipients of algorithms. By consciously using platforms, actively diversifying information sources, regularly detoxing digitally, and supporting greater algorithmic transparency, each of us can regain some control. Next time you feel bored and reach for TikTok, pause for a moment to consider: are you making a deliberate choice to enter this environment, or are you following routines that have been meticulously crafted for you? Regaining power over your digital existence starts with this basic decision.

References

American Psychological Association. (2024). APA recommendations for healthy teen video viewing. https://www.apa.org/topics/social-media-internet/healthy-teen-video-viewing

Center for Countering Digital Hate. (2022, December 15). TikTok bombards teens with self-harm and eating disorder content within minutes of joining the platform. https://counterhate.com/blog/tiktok-bombards-teens-with-self-harm-and-eating-disorder-content-within-minutes-of-joining-the-platform/

Crawford, K. (2021). The atlas of AI: Power, politics, and the planetary costs of artificial intelligence (pp. 1–21). Yale University Press.

Flew, T. (2021). Regulating platforms (pp. 79–86). John Wiley & Sons.

Hall, R. (2025, February 8). Parents sue TikTok over child deaths allegedly caused by ‘blackout challenge’. The Guardian. https://www.theguardian.com/technology/2025/feb/07/tiktok-sued-over-deaths-of-children-said-to-have-attempted-blackout-challenge

Ibrahim, H., Jang, H. D., Aldahoul, N., Kaufman, A. R., Rahwan, T., & Zaki, Y. (2025). TikTok’s recommendations skewed towards Republican content during the 2024 U.S. presidential race. arXiv. https://doi.org/10.48550/arXiv.2501.17831

Marketing Charts. (2023). Most Americans are concerned about TikTok’s data security. https://www.marketingcharts.com/digital/social-media-230359

Newberry, C. (2025, April 9). How the TikTok algorithm ranks content in 2025 + tips for visibility. Hootsuite Blog. https://blog.hootsuite.com/tiktok-algorithm/
