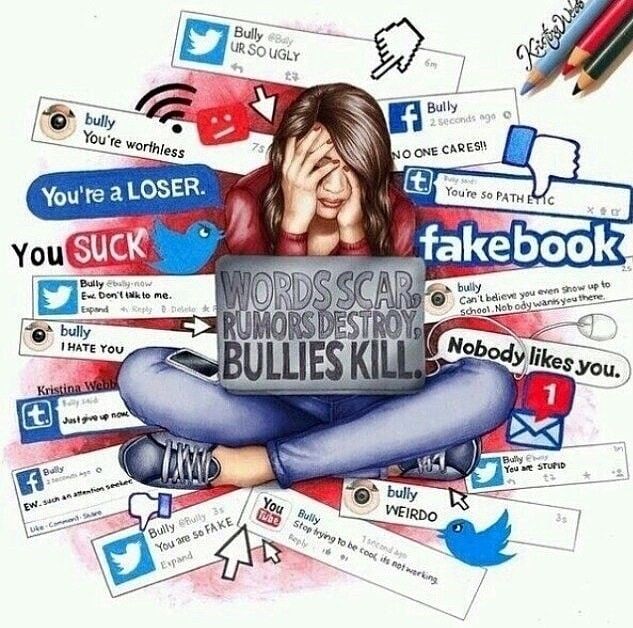

Figure 1: An illustration of hate speech on digital social platforms leading to the online bullying of an individual. Image via Pinterest.

Hate Speech – An escalating concern of digital violence

We have entered an era of digital social media. With the rapid growth of social media platforms over the past decade, hate speech has become a form of harassment that almost every netizen risks encountering. According to the Pew Research Center in the United States, around 41% of Internet users have encountered some kind of online abuse, including but not limited to physical threats, sexual harassment, and stalking (Duggan, 2017). No one is immune to hateful content online, from ordinary users to celebrities. This article unpacks how hate speech on social media platforms affects both everyday users and celebrities. We will examine its psychological roots, viral transmission patterns, and real-world consequences, and reflect on the challenges of digital moderation and governance of social media platforms. At the end of the article, we will discuss the balance between freedom of speech and moderate content regulation to gain some insight into the dilemmas of contemporary digital platform governance in an increasingly complex social context.

Introduction

To understand hate speech on digital social media in depth, we start with its definition and then look at what is happening from two perspectives: that of ordinary individuals and that of celebrities and the brands they work with.

According to Parekh, hate speech is speech that ‘expresses, encourages, stirs up, or incites hatred against a group of individuals distinguished by a particular feature or set of features such as race, ethnicity, gender, religion, nationality, and sexual orientation’ (Parekh, 2012). As Flew notes, hate speech encourages hostility in society and violates the human dignity of the target group (Flew, 2021). What’s more, its poisonous influence is not a one-off hurt but a harm that may last a long time. Tirrell describes it as ‘a slow-acting poison that accumulates in its effects’ (Tirrell, 2017). Generally speaking, hate speech is language that is intentionally harmful (Davidson et al., 2017): a deliberate and damaging attack on human dignity and identity.

Individuals suffering from hate speech: It can be any of us

Hate speech against individuals is common, and it often depends on the label other netizens attach to you. Sometimes people are jealous of the rich, or simply of things they do not have themselves, whether material or abstract, such as happiness. Sometimes people hate what they see as ‘heresy’: sexual and gender minorities, for example, are still considered ‘abnormal’ by some. According to Sinpeng et al., people with minority sexual and gender identities, such as lesbian, gay, bisexual, transgender, queer, intersex, and asexual (hereafter LGBTQ+) individuals, may experience a great deal of hate speech online (Sinpeng et al., 2021). Examples include vomiting emojis posted in response to gay wedding images and accusations that homosexuality is a disease that should be treated rather than celebrated.

The situation is comparable to discrimination based on region and race. Even on Chinese social media today, people of color are still associated with stereotypes of ‘violence,’ ‘sexual words,’ etc. People from central China, such as Henan Province, may be the target of malicious jokes about ‘stealing manhole covers,’ while people from Shanghai may be taunted with ‘Here comes our billionaire. How many houses do you own?’ Although most people in Henan Province live comfortably and not every Shanghai resident lives in luxury, both encounter hate speech based on the labels other netizens attach to their hometowns.

It is common for prejudice against a group to be applied to an individual at first sight as a snap judgment. People voice irresponsible opinions and vent negative emotions at their targets while hiding behind anonymous accounts. So far there seem to be few effective ways to prevent and regulate such behavior or to hold perpetrators accountable. Although various countries are introducing laws, which is progress, it still takes a long time for a perpetrator to be punished and for a victim to be compensated.

Hate speech targeting celebrities

Being a celebrity nowadays is definitely not an easy job. If you only see the glamorous parts, such as millions of followers on social media, endless likes on casual posts, and fashion brands competing for collaborations, you are blinded by the fantasy. Celebrities live under a microscope, especially in today’s hyper-connected social media landscape. Their every move is followed not only by fans but also observed and judged by anti-fans.

Take Tello Díaz and Martínez-Valerio’s case study of hate speech directed at Spanish female actors as an example: the hate speech that celebrities encounter includes misogyny, sexual harassment, animosity, and hostile criticism of their talent (Tello Díaz & Martínez-Valerio, 2025).

Sexual harassment, as mentioned above, is common: women often receive disgusting comments even on posts whose pictures contain no sexual innuendo, and female celebrities face this even more. Such comments are often tied to doubts about the celebrity’s real talent. Her acting skills and musical achievements attract vicious speculation, and any success in her career is likely to be dismissed as the result of exploiting her gender.

Hate speech may spread from the comments on a celebrity’s own social media account to the official account of any brand the celebrity works with. For instance, MIDO, a Swiss watch brand in the Swatch Group, received hundreds of hate speech comments under a celebrity product seeding post on Little Red Book, a major social media platform in China. The comments included “Boycott this brand from now on,” “Ewww!” and “Never gonna buy a watch from this brand.”

Figure 2: Hate speech comments on a celebrity product seeding post on MIDO’s official Little Red Book account. Image via the Little Red Book comment area.

These voices trouble brands and companies. When choosing collaborators, the public relations team always researches the celebrities beforehand to make sure they are not involved in scandals concerning basic benchmarks such as morality and professional attitude, so as to avoid a public opinion crisis for the brand. However, hate speech commenters post negative comments regardless of the occasion. Most of the time, the comment area of the brand’s post falls victim to arguments between fans. Fans of rival celebrities sometimes post negative and humiliating comments under posts featuring their idol’s competitor, and the number and scale of these comments suggest that they are planned and organized.

Their main motivation, the psychological root of this behavior, appears to be to sabotage the collaboration on purpose and to spread a negative impression among members of the public who do not know the backstory. The transmission pattern is viral: commenters appear and write unfriendly comments in groups, share them among fan communities online, and encourage others to join the witch hunt. As for real-world consequences, the harms of hate speech described at the beginning of this article fall on celebrities too, and at a scale many times greater than for ordinary people.

The collaborating brand, however, becomes another target of this viral spread. Should it take down the post featuring the celebrity once the post comes under attack? Most brands will not, because they at least need time to investigate whether the celebrity really is involved in a serious, unforgivable scandal or whether the comments are just anti-fans venting their anger. When brands find that they are victims of such a carnival of venting, the tricky problem is how to deal with the hate speech comments that have already been posted. Deleting them all is not a good choice, because anti-fans watch carefully how brands react. Once they find that the comments have been deleted, they are likely to start another round of incendiary speech, accusing the brand of bullying ordinary users and suppressing their freedom of speech, which plunges the brand into a media firestorm.

What’s the role of digital platforms in this?

How to respond to online hate speech effectively and keep the harm to a minimum is a difficult task facing all digital platforms. Where is the boundary between freedom of expression and its excess, and to what extent can users be regulated?

The core mechanism of most digital platforms is commercial content moderation. Roberts defines four basic types of work situations for professional moderators: in-house, boutiques, call centers, and microlabor websites (Roberts, 2019). The key categories of content they deal with include nudity or sexual content, violent or graphic content, hateful content, threats and spam, misleading metadata, scams, etc. (Roberts, 2019).

Digital platforms, as places where multiple media intermediaries gather, are responsible for having clear policies and rules governing user behavior. Most major platforms do have mechanisms of their own; the question is whether the rules are actionable and mature. In the Little Red Book case above, the platform’s algorithm had no mechanism for automatically detecting hate speech that lacks obviously abusive words that can be blocked, such as ‘F-words.’ The MIDO brand team could only contact the Little Red Book team, report the hate speech comments manually, and wait for the platform’s manual review. Even then, the outcome was that the platform chose to move the hate-speech-like comments to the bottom of the comment area and promote the positive and neutral ones. Moderation of hate speech on digital platforms is often done through both computational tools and human review. The MIDO case shows that these two methods need to be combined more closely.
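The combination of automated filtering and human review described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any platform's actual system: the `BLOCKLIST` words, the `Comment` fields, the `triage` and `order_comments` functions, and the report threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical blocklist; a real platform would maintain a far larger one.
BLOCKLIST = {"idiot", "trash"}


@dataclass
class Comment:
    text: str
    reports: int = 0       # manual reports, e.g. filed by a brand team
    demoted: bool = False  # set by a human reviewer after review


def triage(comment: Comment, report_threshold: int = 1) -> str:
    """Return 'block', 'human_review', or 'publish' for a comment."""
    words = {w.strip(".,!?\"'").lower() for w in comment.text.split()}
    if words & BLOCKLIST:
        return "block"         # automated step: explicit abusive word found
    if comment.reports >= report_threshold:
        return "human_review"  # implicit hostility is left to a person
    return "publish"


def order_comments(comments: list[Comment]) -> list[Comment]:
    """Keep the original order, but move demoted comments to the bottom."""
    return sorted(comments, key=lambda c: c.demoted)  # sorted() is stable


boycott = Comment("Never gonna buy a watch from this brand", reports=1)
print(triage(Comment("You are trash!")))  # explicit word, caught automatically
print(triage(boycott))                    # no explicit word, needs a report
```

A comment such as "Never gonna buy a watch from this brand" contains nothing a word filter can catch, so in this sketch it only reaches a reviewer once someone reports it, which mirrors the manual reporting the MIDO team had to rely on.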

Still, some platforms lack manual intervention. According to Massanari, Reddit’s inaction implicitly allows toxic behavior such as anti-feminist and racist activism to spread on its platform (Massanari, 2017). From this perspective, the case shows the limits of a platform’s rule setting: rules and moderation may be biased by the rule setters behind them.

To set broad and fair common rules for behavior on digital platforms, governments should make a joint effort and take the lead. Digital platforms may need a basic benchmark as a cornerstone, on top of which each platform can add its own rules. While this is a real challenge given the scale of the project, it is even more difficult to decide to what extent users’ opinions should be involved. Some platforms have made attempts. In 2009, Facebook claimed that users would have direct input on the terms of service, which are considered “the governing document” for Facebook users worldwide, provided that 30% of active users voted for changes to take effect (Suzor, 2019). However, in one application of this rule, even though over 660,000 users voted, they accounted for less than 1% of the total user base. Facebook ended up rolling back its commitment to direct user input. How to fully consider users’ opinions and turn them into real action in platform regulation still requires much exploration.
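The gap between turnout and threshold in the Facebook vote can be made concrete with a little arithmetic. The Python sketch below uses only the three figures quoted above (a 30% threshold, about 660,000 votes, and turnout under 1%). The variable names are illustrative, and because the exact size of the user base is not given here, the results are bounds rather than exact values.

```python
from fractions import Fraction

VOTES_CAST = 660_000                # "over 660,000 users voted"
TURNOUT_CEILING = Fraction(1, 100)  # turnout was "less than 1%"
THRESHOLD = Fraction(30, 100)       # share of active users required

# If 660,000 votes are under 1% of users, the user base must exceed this.
min_user_base = VOTES_CAST / TURNOUT_CEILING

# Votes needed to reach the 30% threshold for a base of at least that size.
min_votes_required = min_user_base * THRESHOLD

# Upper bound on the share of the required votes that was actually cast.
max_share_of_requirement = VOTES_CAST / min_votes_required

print(f"User base: more than {int(min_user_base):,}")
print(f"Votes required: more than {int(min_votes_required):,}")
print(f"Share of requirement reached: under {float(max_share_of_requirement):.1%}")
```

On these figures, turnout would have needed to be more than thirty times higher for the vote to take effect.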

Conclusion

To sum up, as we march into an ever-changing digital age with a rather complex context, it is clear that hate speech can harm every individual, no matter who they are. The pain may last long, like a slow-acting poison that attacks and destroys human dignity and identity. Looking at digital platforms in general, we find familiar situations that we may encounter as ordinary users, while the MIDO case gives a glimpse of the dilemma facing both celebrities and brands. Digital platforms have a duty to work with governments to establish modern regulation that allows users to express their opinions while controlling hate speech effectively, fairly, and transparently.

As for ourselves, I suggest that we keep a kind and friendly attitude and do not direct hate speech at anyone, even if he or she holds a different view. After all, hate speech may happen to any of us, whether we are somebody or nobody.

References

Davidson, T., Warmsley, D., Macy, M., & Weber, I. (2017). Automated hate speech detection and the problem of offensive language. Proceedings of the International AAAI Conference on Web and Social Media, 11(1), 512–515.

Duggan, M. (2017, July 11). Online harassment 2017. Pew Research Center. https://www.pewresearch.org/internet/2017/07/11/online-harassment-2017

Flew, T. (2021). Hate speech and online abuse. In Regulating platforms (pp. 91–96). Polity.

Massanari, A. (2017). Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346. https://doi.org/10.1177/1461444815608807

Parekh, B. (2012). Is there a case for banning hate speech? In M. Herz & P. Molnar (Eds.), The content and context of hate speech: Rethinking regulation and responses (pp. 37–56). Cambridge University Press.

Roberts, S. T. (2019). Behind the screen: Content moderation in the shadows of social media (1st ed.). Yale University Press.

Sinpeng, A., Martin, F., Gelber, K., & Shields, K. (2021). Facebook: Regulating hate speech in the Asia Pacific. University of Sydney and University of Queensland. https://r2pasiapacific.org/files/7099/2021_Facebook_hate_speech_Asia_report.pdf

Suzor, N. P. (2019). Who makes the rules? In Lawless: The secret rules that govern our lives (pp. 10–24). Cambridge University Press.

Tello Díaz, L., & Martínez-Valerio, L. (2025). Hate speech directed at Spanish female actors: Penélope Cruz—A case study. Social Inclusion, 13. https://doi.org/10.17645/si.9250

Tirrell, L. (2017). Toxic speech: Toward an epidemiology of discursive harm. Philosophical Topics, 45(2), 139–161. https://doi.org/10.5840/philtopics201745214
