Introduction

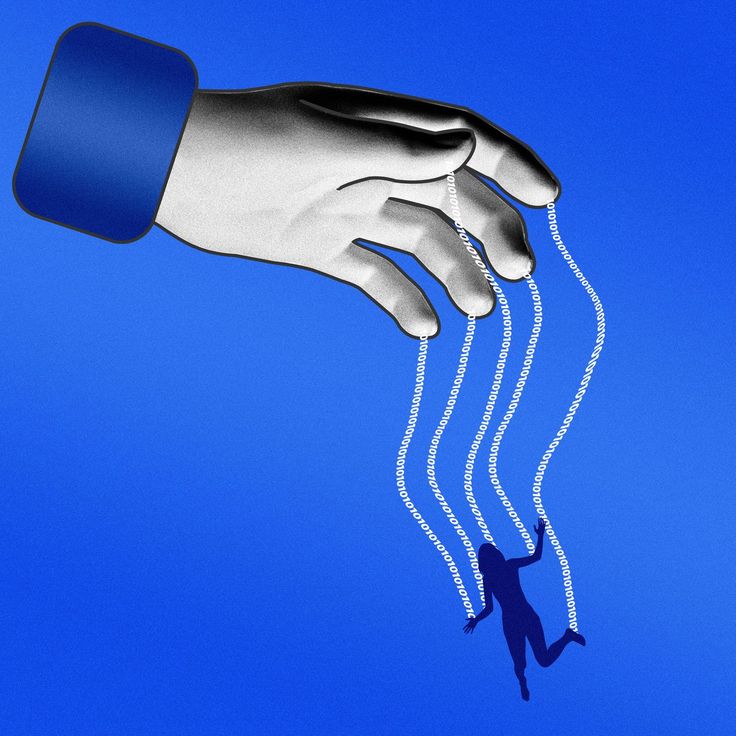

Almost before we realized it, artificial intelligence has grown quickly and matured. Not long ago, AI appeared in newspaper articles as a “breakthrough achievement,” either the product of an experimental field or the subject of a science fiction movie. Today, AI is a buzzword that has quietly moved from the edge of technology into almost every part of our lives. The videos or news you see, and even your emotions and judgments about the world, may gradually be shaped by algorithms. Behind such efficient and accurate content delivery on the Internet lies the platforms’ massive collection and analysis of user behavior. It is worth asking how these algorithms work. Are they fair and transparent, and how can they be controlled and governed? In this blog, we explore how AI and algorithms are evolving from intelligent tools into governors. AI is not only reshaping the structure of the web but also reconfiguring the “reality” we share, which profoundly affects our cognition.

AI in Everyday Life: From Tools to Decision Makers

Artificial Intelligence (AI) is no longer a futuristic fantasy; it is deeply rooted in our daily lives. From unlocking our phones with facial recognition in the morning to the playlists Spotify recommends, and from Google searches to personalized feeds on Facebook and Instagram, AI systems silently guide our choices and behavior. These technologies are often described as tools that serve humans. However, when social platforms continually analyze our clicks, searches, likes, and viewing time to recommend content we are likely to enjoy, they have already become decision-makers. Users end up trapped in filter bubbles and predictable patterns of behavior, and they have little way of knowing how much data platforms collect to refine their algorithms.

TikTok’s recommendation feed is widely considered one of the most powerful algorithms in the social media market. For entertainment, TikTok pushes videos based on what people like, analyzing signals such as how long we stay on a video and whether we like it, share it, or ask to see less of it, to continually optimize the content stream. Over time, the algorithm does not just reflect our interests; it reshapes them. We start seeing more similar videos and recurring ideas, which gradually influence our worldview.

On social and political matters, TikTok can use algorithmic bias to steer public opinion more than Instagram or YouTube do. Dolan (2025) reports on research in which videos were collected by searching keywords associated with content critical of the Chinese government. More than half of the 300 videos collected on Instagram and YouTube contained such content, compared with only a few percent, 2.5% or 8.7%, on TikTok (para. 8). TikTok’s results stem from the way it filters what users see in response to their searches: irrelevant videos are pushed in their place, burying sensitive topics. In addition, TikTok also manipulates content that portrays China positively.

“The researchers found a positive correlation between time spent on TikTok and favorable views of China. These relationships held even when controlling for time spent on other social media platforms and demographic variables such as age, gender, ethnicity, and political affiliation” (Dolan, 2025, para. 16).

Whether promoting China or shaping the world’s perception of it, TikTok feeds a wide variety of data into its algorithms, keeping it at the leading edge of personalized video recommendation.
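To make the engagement signals mentioned earlier (how long we stay on a video, whether we like or share it) more concrete, here is a toy sketch in Python. It is not TikTok’s actual system, whose details are not public; the `View` record, the signal names, and the `WEIGHTS` values are hypothetical assumptions chosen only to show how such signals can be turned into a ranking.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class View:
    """One logged interaction between a user and a video (hypothetical schema)."""
    topic: str
    watch_fraction: float  # share of the video actually watched, 0.0 to 1.0
    liked: bool
    shared: bool


# Hypothetical weights for illustration only; real platforms do not publish theirs.
WEIGHTS = {"watch": 1.0, "like": 0.5, "share": 0.8}


def topic_scores(history: list[View]) -> dict[str, float]:
    """Sum a weighted engagement signal per topic over the viewing history."""
    scores: dict[str, float] = {}
    for v in history:
        signal = (
            WEIGHTS["watch"] * v.watch_fraction
            + WEIGHTS["like"] * v.liked    # True counts as 1, False as 0
            + WEIGHTS["share"] * v.shared
        )
        scores[v.topic] = scores.get(v.topic, 0.0) + signal
    return scores


def rank_candidates(candidates: list[tuple[str, str]], history: list[View]) -> list[tuple[str, str]]:
    """Order (video_id, topic) pairs so topics the user engaged with most come first."""
    scores = topic_scores(history)
    # Topics the user never engaged with score 0.0 and sink to the bottom.
    return sorted(candidates, key=lambda c: scores.get(c[1], 0.0), reverse=True)


history = [
    View("cooking", 0.9, liked=True, shared=False),
    View("politics", 0.2, liked=False, shared=False),
    View("cooking", 1.0, liked=False, shared=True),
]
candidates = [("v1", "politics"), ("v2", "cooking"), ("v3", "travel")]
print([vid for vid, _ in rank_candidates(candidates, history)])  # ['v2', 'v1', 'v3']
```

Because every new interaction feeds back into the scores, topics the user already watches keep rising to the top, which is one simple way to see how such a system can reshape, and not just reflect, our interests.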

We rarely think about why a piece of content appears in front of us or wonder why an item is recommended. This unconscious acceptance has allowed AI to gain ever more control over our digital lives, especially since, as Crawford (2021) argues, AI systems are deeply embedded in political, economic, and environmental power structures (p. 8). What looks like a thoughtful recommendation engine is in fact a tool for attention capture, behavioral prediction, and social influence. In the case of TikTok, a China-backed app, the recommendations often also hint at the values the company is trying to convey. Regardless of the platform, the algorithmic manipulation behind it has already set the answer by the time people go looking for content that interests them. From this perspective, AI has evolved from an assistant into a governor. Instead of relying on laws or regulations, AI algorithms achieve soft control over society through the micro-guidance of daily choices. The more internet users rely on these systems, the more power they lose, often without realizing it.

Who’s Really in Charge? When Platforms Quietly Rule Our News Feed

Social media platforms are no longer simply neutral tools for entertainment. They now act as powerful, invisible rulers of the digital world. Their algorithms have become opaque decision-making systems without ever asking our permission. Through invisible rule-making, platforms decide what content is visible to us, what content is pushed into the stream, and what content is hidden. These choices gradually mold our attention, our options, and even our thoughts. Just and Latzer (2017) describe this as algorithmic governance: invisible rules that slowly shape our lives and the social order (pp. 245–246). It is ironic that while these platforms are becoming more influential, we know almost nothing about how they work and even less about what oversight mechanisms are in place.

As Pasquale (2015) points out, the “black box” describes a system whose inner workings are hidden, in society as well as in technology. Data collection by internet companies raises concerns about user control and privacy, and while some secrecy can be justified, the combination of secretive companies and ever-closer scrutiny of individuals undermines democracy. Algorithms are now deeply involved in how we access information, from search results to news feeds and short-video recommendations. The key point is that these mechanisms are not made public, so we cannot challenge them. As a result, platforms have accumulated vast “soft power” while remaining accountable to almost no one.

Facebook’s news feed algorithm, for instance, tends to favor content that provokes strong emotions or controversy, because such content is more likely to attract clicks and comments. This may keep us on the platform longer, but it can also spread misinformation and even deepen social conflict. The problem is that we have no way of knowing why Facebook is pushing this content. What we see is what the platform “thinks” we should watch, not what we actively choose. This kind of opaque content sorting gradually erodes our freedom to think and search without our realizing it.

YouTube presents a similar picture. Its recommendation system constantly steers you toward more similar content based on your viewing habits. Once users start clicking on a particular topic, subsequent recommendations may become progressively more extreme. For example, after searching for popular-science videos about food and health, you may end up with a home page full of pseudoscience or even conspiracy theory videos.
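The narrowing effect described for YouTube can be illustrated with an even simpler toy simulation. It assumes, purely hypothetically, a recommender that always shows the single most-clicked topic and a user who always clicks whatever is shown; real recommendation systems are far more complex and are not public, and the function name `simulate_feedback_loop` is made up for this sketch.

```python
from __future__ import annotations

from collections import Counter


def simulate_feedback_loop(initial_clicks: dict[str, int], rounds: int) -> tuple[Counter, list[float]]:
    """Toy model: each round the feed shows the most-clicked topic and the user clicks it."""
    clicks = Counter(initial_clicks)
    shares = []  # share of all clicks held by the recommended topic after each round
    for _ in range(rounds):
        # Hypothetical greedy rule: recommend whatever has been clicked most so far.
        top_topic, _count = clicks.most_common(1)[0]
        # Assume the user clicks what is shown, which feeds the next recommendation.
        clicks[top_topic] += 1
        shares.append(clicks[top_topic] / sum(clicks.values()))
    return clicks, shares


clicks, shares = simulate_feedback_loop({"science": 3, "food": 2, "health": 2}, rounds=10)
print(dict(clicks))  # {'science': 13, 'food': 2, 'health': 2}
print([round(s, 2) for s in shares])
```

Starting from a modest lead of 3 clicks out of 7, “science” ends up with 13 of 17 clicks after ten rounds, while the other topics are never shown again. Under these assumptions, nothing in the loop ever pushes the user back toward variety.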

Unlike government agencies, the algorithmic systems of social media platforms are bound by no comparable rules, regulations, or face-to-face accountability. When algorithms go wrong, for example by pushing inaccurate content or making certain voices “disappear,” we have little recourse. Platforms can quietly update their algorithms without telling us what has changed. Moreover, many algorithms are self-learning and constantly optimize themselves on new data, so even their underlying mechanisms are hidden from us.

Arguably, most platforms’ algorithms are designed not to serve the community and the public but to serve commercial interests. Platforms want you to click on ads and watch them for a few more seconds, leaving more data to feed back to the company. Their primary concern is not the diversity, fairness, or authenticity of information but how to keep you hooked on content so they can sell more ads. Even a simple game app illustrates this: it makes you watch ads before you can collect a reward or finish the game. The underlying goal is to keep users on the ads for as long as possible, whatever the ads are.

Such black-box online systems are transferring power from the public to the servers of large corporations. If we as internet users do not intervene, monitor, or create new rules, algorithmic rule will only grow more powerful. In a digital world where information flows are traded, trending topics are manipulated, and responsibility is shifted, what we find online is not always reality but the version of the world the platforms want us to see. After all, the online experience we have today is by design. The key question, then, is who will regulate these “governors” that manipulate our information lives. Being aware of this is the first step toward taking back ownership.

Public Participation and Future Imaginaries: What Can We Do?

Recognizing that non-transparent algorithms orchestrate our digital experiences is only the first step. The immediate question remains: how do we, as users and citizens, regain ownership in this algorithm-driven environment?

It is easy to feel powerless in the face of massive tech platforms and opaque algorithms. These systems are written, tested, and optimized behind closed doors, and the data they use is almost beyond our control. However, this does not mean that we are completely out of the loop. In fact, everyday users have a role to play. This is where public participation comes in, not just as a buzzword but as a tangible step toward restoring autonomy in the digital world.

When creators post non-entertainment videos on TikTok or Instagram, such as commentary on social issues or national politics, the algorithms often treat those videos unfairly. The algorithm may automatically flag and delete a video without explanation or restrict its reach. Nevertheless, every user can help hold platforms to account, and some already have. Reynolds and Hallinan (2024) show that on YouTube, for example, creators speak up for themselves and their audiences by making videos that expose the platform’s practices. With users’ support, this can pressure the platform into correcting its algorithms, achieving what the authors call user-generated accountability (p. 5108). Whether users are fighting unfairness or appealing for change, collective action by the community can lead platforms to listen.

We can also imagine a better future. Algorithms are, at bottom, programmed and launched by humans. If we value technology only for being faster, more innovative, and more profitable, algorithms will develop in that direction. But if we imagine algorithms that are fairer, more transparent, and more respectful, we can push them in a better direction. The next step after imagining is practice. We can demand that platforms disclose how their recommendation algorithms work. We can support policies that push for algorithmic transparency and independent inspection. It is also essential that we improve our information literacy in daily life: recognizing fake news and understanding which information is “fed” to us by the platforms and which we genuinely want to read. It may seem like a small action, but it is a step toward taking back control of our digital lives. In the future, we should not simply be dictated to; we should engage with platforms so that they understand and respect us better. A better system is something we can build together.

Conclusion

Algorithms have quietly changed our daily lives and the way we access information on social media. From TikTok’s curated content to Facebook’s news feed, these platforms guide our interests, thoughts, and perceptions through data. However, the more influential algorithms become, the less control users have over the online world. We cannot accept this reality in silence. We can challenge algorithmic systems by actively encouraging public participation and pushing platforms to change their algorithms. When we take back the initiative in the digital world, we can browse information that truly interests us and receive more objective and accurate information.

References

Crawford, K. (2021). Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence (pp. 1–21). Yale University Press.

Dolan, E. W. (2025, February 3). Algorithmic manipulation? TikTok use predicts positive views of China’s human rights record. PsyPost. https://www.psypost.org/algorithmic-manipulation-tiktok-use-predicts-positive-views-of-chinas-human-rights-record/

Just, N., & Latzer, M. (2017). Governance by algorithms: Reality construction by algorithmic selection on the Internet. Media, Culture & Society, 39(2), 238–258.

Pasquale, F. (2015). The need to know. In The black box society: The secret algorithms that control money and information (pp. 1–18). Harvard University Press.

Reynolds, C., & Hallinan, B. (2024). User-generated accountability: Public participation in algorithmic governance on YouTube. New Media & Society, 26(9), 5107–5129.

Veed Studio. (2020, February 22). How does the TikTok algorithm work – Reverse engineering TikTok’s content flow [Video]. YouTube. https://www.youtube.com/watch?v=pLqHuhQxW1M
