Unprecedented experience: voting with just a tap

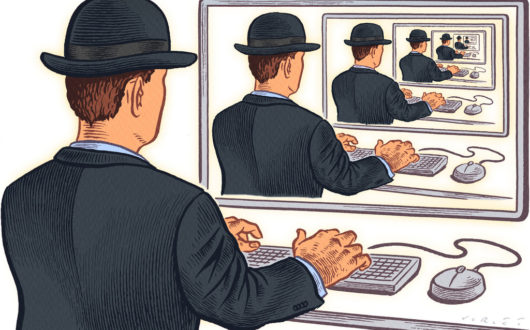

In many ways, social media has ushered citizens into a new era. Unlike traditional media such as newspapers, which offer only a one-way channel for obtaining information, social media allows citizens to interact almost without limit. Citizens have gained convenience through social media in many areas: obtaining information, communicating and socializing with others, expressing opinions, commenting, and more. The aspect I want to discuss today is political rights on social media. Social media lets us take part in discussions about elections with just a few taps on a screen. Behind this convenience, however, lies a form of manipulation that is hard to detect and difficult to regulate. So I want to raise a question: when we move our fingers to vote, are invisible hands beside us steering them toward the choices they want?

The case I explore here is the 2024 US presidential election. More precisely, I want to examine what impact speech on social media platforms has on politics, using this election as the example. In my view, the election shows that speech on social media had a significant political impact, and my research also revealed gaps in how that speech is regulated. I will first analyze the social media context of the case, then explain why social media influences politics, and finally look ahead to the future development of social media.

Social platforms: the new battlefield of modern political campaigns

The 2024 U.S. presidential election shows how important social media has become to politics and to mobilizing voters (Papageorgiou, 2025). A Pew Research Center poll found that more than half of Americans get news from social media (Papageorgiou, 2025). Men are more likely to use Reddit, X (formerly Twitter), and YouTube, while women are more likely to use TikTok, Instagram, and Facebook (Papageorgiou, 2025). X stands out when it comes to politics: about 60% of X users follow politics or political topics on the platform (Papageorgiou, 2025). As a result, X became central to the 2024 campaign, especially under the leadership of Elon Musk, who emphasizes free speech (Papageorgiou, 2025). Republican X users are more likely than Democratic X users to believe that this emphasis on free speech is good for democracy (Papageorgiou, 2025). Even so, both parties heavily promoted their positions on the platform, taking advantage of its unique reach and user dynamics (Papageorgiou, 2025).

Traditional journalism, on the other hand, is steadily losing ground. According to the OECD, the global print advertising market shrank by almost half between 2019 and 2024 (Papageorgiou, 2025), a heavy blow to news organizations. The U.S. Census Bureau has also recorded a major shift from print to digital media (Papageorgiou, 2025). For example, U.S. print newspaper readership fell by 14% in 2023 (Papageorgiou, 2025). Digital readership, subscriptions, and page views for U.S. online newspapers have also declined sharply (Papageorgiou, 2025). This suggests that people are losing trust in traditional media, which many see as highly biased and divisive (Papageorgiou, 2025). This falling trust further strengthens social media's position as the preferred venue for political engagement (Papageorgiou, 2025).

Even though X had said it would expand its team, Musk's X laid off half of that team a week before the November 2024 election (Action, 2025). Fewer tools were available to enforce the platform's rules, and the rules themselves restricted less speech (Action, 2025). Posts presenting "unofficial evidence of election deception" or "claiming victory before the election results are certified" had previously been prohibited on X (Action, 2025). In addition, the company reduced the penalties for breaking its rules (Action, 2025). Whatever the platform, political information should be moderated more strictly in the run-up to a US election, yet Musk did the opposite. Loosening the rules on X at this critical juncture makes it difficult to believe that he remained impartial toward the election.

The platform's political weight predates Musk. Trump's use of Twitter has been one of the most important features of his leadership (Benkler et al., 2018). He used it to speak directly to the public, especially to his tens of millions of followers (Benkler et al., 2018). At times, Trump used Twitter to try to control the media, the executive branch, the legislature, or even his opponents, doing so by addressing his followers on the platform (Benkler et al., 2018).

The “protective umbrella” of American social media platforms: Section 230 of the Communications Act

In the United States, social media platforms can let public discourse run so unchecked because they rely on a law that is crucial to them: Section 230 of the Communications Act of 1934 (Wikipedia contributors, 2025). Nunziato (2023) describes it as "The main legislation applicable to general platform liability in the United States, which exempts platforms from various forms of liability for hosting third-party content." An analogy helps illustrate the relationship between this law and social media platforms. Think of the platforms as gangs that force people to trade on their terms and accept their discipline, and of Section 230 as police who collude with these "gangs" and turn a blind eye to how they manage public opinion. It is as if a gang sent people to sell drugs on the street and the police merely issued verbal warnings instead of sending the offenders to prison to receive the punishment they deserve. In practice, Section 230 protects platforms from being sued for failing to take down harmful material, even though they have the editorial tools to do so (Blaszczyk, 2024). In my view, platforms that fail to fulfill their moderation responsibilities should face more serious penalties, such as heavy fines or even exclusion from the social media market altogether. It is this lack of clear penalties that lets major platforms keep acting as accomplices in spreading false or harmful content. For example, even if a huge platform such as YouTube is repeatedly and plainly warned that it is hosting harmful material (such as ISIS propaganda videos), it remains protected from accountability (Nunziato, 2023).

In contrast, the EU's Digital Services Act (DSA) states that once an online platform receives reliable notice that illegal content exists on its service, it must "promptly" remove that content or face liability (Nunziato, 2023). Section 230, by comparison, attaches no preconditions to a platform's liability exemption (Nunziato, 2023). The DSA's "notice and action" provisions therefore stand in stark contrast to Section 230, which has no comparable procedure (Nunziato, 2023). Both the United States and Europe allow more freedom of speech than China, yet the EU has acted like a mature older sibling, stepping up to protect the environment of social media platforms.

Social platforms put people in an “echo chamber”

According to an audit study by Ye et al. (2024), in the run-up to the 2024 US presidential election, both left-leaning and right-leaning users on X saw more accounts that supported their political views and fewer accounts that supported opposing views. In other words, people on X see more content favoring their preferred political positions and rarely encounter statements from the other side. This suggests that users' political beliefs can be shaped by X's recommendation algorithm. Algorithms on social media sites personalize content, showing people what they are likely to find relevant. This creates an "information cocoon" in which users mostly encounter views they already hold. As a result, people may see only content that supports their own viewpoint and almost never anything that challenges it (Della Guitara Putri et al., 2024).

As Ye et al. (2024) note, X's algorithm amplifies like-minded accounts from outside a user's network while lowering the visibility of opposing views. Algorithmic recommendation can not only strengthen echo chamber effects in social networks but also make the content shared within those networks more cognitively homogeneous (Ye et al., 2024). Ye et al. (2024) also suggest that influential voices outside established institutions, such as verified political analysts and powerful public figures, may intensify these echo chamber effects by spreading fear and false information. The combination of social media, algorithmic news curation, bots, artificial intelligence, and big data analytics is making our echo chambers of bias stronger, eroding signals of credibility, and generally making it harder for us to understand the world and govern ourselves as rational democracies (Benkler et al., 2018). This tendency can entrench polarization, making it increasingly difficult for people to understand and empathize with those who disagree or to engage in productive discussion (Della Guitara Putri et al., 2024). Filter bubbles further contribute to social polarization by deepening divisions between people with opposing views (Della Guitara Putri et al., 2024). Users who are mostly exposed to material that reinforces their dominant opinion are less likely to understand or empathize with opposing perspectives (Della Guitara Putri et al., 2024). This can lower the quality of public debate and prolong hostility (Della Guitara Putri et al., 2024). These echo chambers limit our exposure to different opinions, viewpoints, and ways of thinking, creating an intellectual environment in which critical thinking and open-mindedness are frequently sacrificed to cognitive biases (Della Guitara Putri et al., 2024).

An exploration of the future development of social media platforms in the United States

With the development of technology and the spread of the Internet, more and more people rely on social media for information, especially during elections, because online news updates faster than newspapers. However, my research left me concerned about how social media platforms handle information during elections. Companies like Meta, TikTok, Twitter, and YouTube lack adequate policies, processes, AI systems, and staff to substantially reduce the harm that occurs before, during, and after elections (Benavidez, 2023). To change the current speech environment on social media, the key is to make each platform more accountable. Benavidez (2023) argues that platforms should make their policies more transparent and regularly share key metrics with researchers, journalists, lawmakers, and the public. Platforms should also publish quarterly reports on important trends, such as reports on viral content and network analyses (Benavidez, 2023). Moreover, they should give researchers and others affordable and comprehensive API access (Benavidez, 2023). I hope that when the next election year comes, social platforms will show the sense of responsibility expected of them; otherwise, the online speech environment will continue to threaten fair democracy.

References

Action, D. (2025, March 24). United States election briefing. Digital Action. https://digitalaction.co/united-states-election-briefing/

Benavidez, N. (2023). Big tech backslide: How social-media rollbacks endanger democracy ahead of the 2024 elections. Free Press. https://www.freepress.net/sites/default/files/2023-12/free_press_report_big_tech_backslide.pdf

Benkler, Y., Faris, R., & Roberts, H. (2018). Network propaganda: Manipulation, disinformation, and radicalization in American politics. Oxford University Press.

Blaszczyk, M. (2024). Section 230 reform, liberalism, and their discontents. California Western Law Review, 60(2), Article 2. https://scholarlycommons.law.cwsl.edu/cwlr/vol60/iss2/2

Della Guitara Putri, S., Purnomo, E. P., & Khairunissa, T. (2024). Echo chambers and algorithmic bias: The homogenization of online culture in a smart society. SHS Web of Conferences, 202, 05001. https://doi.org/10.1051/shsconf/202420205001

Nunziato, D. C. (2023). The Digital Services Act and the Brussels effect on platform content moderation. Chicago Journal of International Law, 24(1), 115–127.

Papageorgiou, M. (2025, January 14). Social media, disinformation, and AI: Transforming the landscape of the 2024 U.S. presidential political campaigns. The SAIS Review of International Affairs. https://saisreview.sais.jhu.edu/social-media-disinformation-and-ai-transforming-the-landscape-of-the-2024-u-s-presidential-political-campaigns/

Wikipedia contributors. (2025, April 13). Section 230. Wikipedia. https://en.wikipedia.org/wiki/Section_230

Ye, J., Luceri, L., & Ferrara, E. (2024). Auditing political exposure bias: Algorithmic amplification on Twitter/X approaching the 2024 U.S. presidential election. arXiv. https://doi.org/10.48550/arxiv.2411.01852
