The rapid development of artificial intelligence (AI) is profoundly changing our lives, and human society has entered a new, intelligent era. AI refers to technologies that simulate aspects of human intelligence: they can analyze massive amounts of data, recognize patterns, and carry out a wide range of instructions and tasks. Its applications span many fields, such as intelligent customer service in the service industry, autonomous driving in transportation, and AI-assisted drug research in medicine. In daily life, people not only enjoy smart homes but also use AI to work more efficiently, for example when writing, searching for information, or analyzing data. However, while AI brings many conveniences, it also brings challenges and negative impacts, such as ethical problems and laws that lag behind the technology. AI is not only a technology but also a product of social practice and institutionalization (Crawford, 2021, p. 8). In other words, its development cannot be separated from the institutional frameworks that human society has built over a long period of time. The development of AI in this new era therefore requires innovative governance, grounded in legal norms and global collaboration.

The Game of AI Governance: Who’s in Charge?

Discussions of AI governance involve several core stakeholders, and each tends to have different demands. Governments are concerned with social stability and want to use AI to strengthen control. Enterprises pursue technological innovation and hope to develop AI rapidly and maximize commercial returns, while keeping regulatory restrictions to a minimum. The public is more concerned with AI’s impact on privacy and employment, and worries about the ethical risks and social inequality that misuse of the technology could bring. For instance, some AI recruitment tools have been found to favor male candidates. With such diverse demands, AI governance becomes a complex process of negotiation. Amid these competing interests, some issues are also overlooked. For example, training AI requires large amounts of data, and these data often contain a great deal of users’ personal information. Lack of transparency is therefore a key problem in current AI development: users often do not know when or how their data were collected.

The Development and Challenges of AI Governance

The governance of artificial intelligence has evolved from technological exploration toward systematic regulation. As early as the mid-20th century, pioneers such as Alan Turing laid out the concept of AI. Over the following decades, the continuous evolution of computing technology shaped today’s digital world (LeanIX GmbH, 2024).

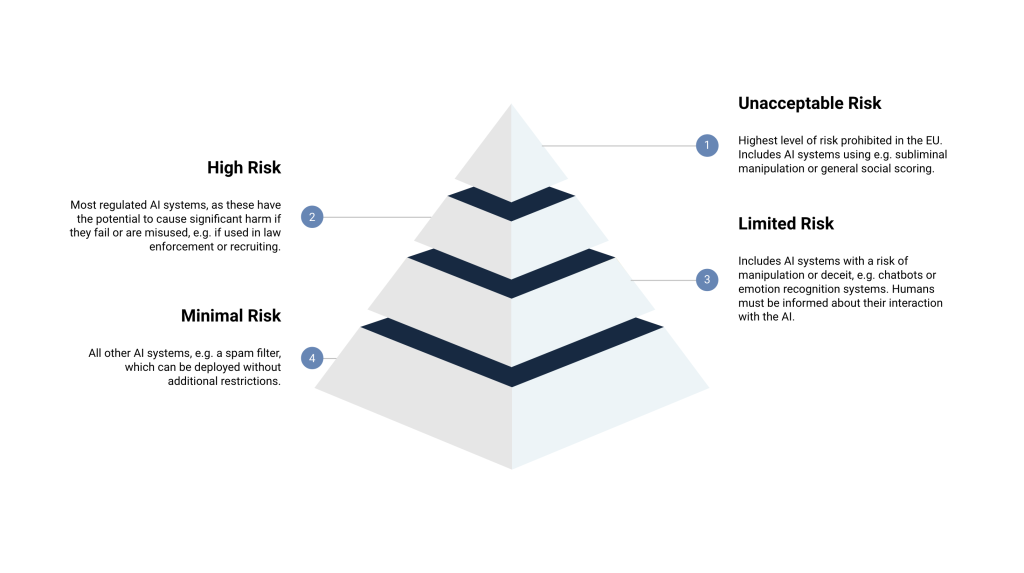

In the early days, when the technology was far less advanced, AI was mainly a laboratory research project, and there was no mechanism for governing it. As the technology advanced rapidly, however, AI began to be applied in high-stakes fields such as medical diagnosis and military decision-making, which raised many ethical issues. Algorithmic bias and discrimination, as well as AI’s impact on the job market, have all aroused widespread social concern. Facing these challenges, the international community gradually recognized the need for a regulatory framework for AI. In April 2021, the European Commission proposed the AI Act, a law designed specifically to govern AI. Its aim is to make AI safer, more transparent, and more human-centric (European Union, 2021).

The development of AI is not merely a technical issue but also a social one, closely tied to broader social trends. It profoundly affects issues such as privacy rights and employment, and it has attracted widespread public attention. Data abuse, algorithmic bias, and the automation of traditional jobs all place greater demands on social governance. Algorithmic systems are complex systems with interrelated parts, and their overall behavior is difficult to predict, which poses challenges for governance (Just & Latzer, 2017). Because AI governance involves multiple interests, problems such as algorithmic discrimination and insufficient regulation may exacerbate social conflict. To meet the challenges posed by this emerging technology, legal and ethical systems need to be updated continually. In the context of globalization, AI has also intensified cross-border cooperation and competition. The European Union, the United States, the United Kingdom, and others are competing for technological leadership and the right to set standards. Because different countries have different regulations, international cooperation toward shared legal standards is needed.

The New Era of Governance: EU AI Act

The EU reached political agreement on the AI Act in December 2023 and formally adopted it in 2024, creating a unified framework for regulating AI across its member states. As the world’s first comprehensive legislation specifically targeting AI, it sets out detailed rules on safety, privacy, transparency, and related issues. It classifies AI systems by level of risk, imposes strict requirements on high-risk systems, and clarifies the responsibilities of the relevant parties and the applicable regulatory standards (Zaidan & Ibrahim, 2024). Beyond assigning clear responsibilities to the companies that develop AI, it establishes a complete set of supervisory rules. These cover matters such as whether a system passes conformity assessment and which authorities oversee it. Before the Act, AI regulation differed significantly among countries, which risked a fragmented landscape. The new regulation sets a common standard, so that AI development finally has a single set of rules to follow. For example, high-risk AI that affects personal safety, such as autonomous driving technology, must undergo more rigorous safety testing. Developers must assess risks comprehensively, complete the required assessment, and ensure that the system is safe and reliable. If a serious incident occurs, they must submit detailed reports, which helps improve the technology.

The EU’s new AI rules are intended to bring about many changes and to create an open and transparent environment for AI governance. By making AI systems safer, they should let people use them with more confidence, especially in important fields such as recruitment, healthcare, and transportation. Some AI systems used in recruitment were previously found to be biased, for instance by favoring men. Strict assessment of whether such algorithms discriminate may make AI-assisted hiring fairer. Regulators face a trade-off here. Overly strict rules might slow technological development and leave enterprises unable to keep pace globally. With no rules at all, however, AI is easily misused, which harms people’s rights and interests and erodes public trust in technology. The regulation therefore seeks a balance. In simple terms, low-risk AI can operate freely with few restrictions. AI systems used in important fields that may affect personal safety, such as autonomous driving and medical diagnosis, must undergo strict review to minimize the risk of harm. In this way, the regulation aims both to let AI technology keep developing and to let people use it with peace of mind.

Zaidan and Ibrahim (2024) also note that although AI has brought many benefits, it also causes problems, such as being exploited by some companies purely for profit. These problems have led many countries to recognize that laws are needed to govern AI. Because the EU has wide influence as a lawmaker, other countries may emulate its regulations, which could help manage AI better at a global level. The Act is intended to steer AI in a better direction, one in which technology serves society. Some companies may need to spend more on testing, but in return they avoid ethical risks and improve the quality and reliability of their products. Will this slow AI innovation? Perhaps to some extent. However, the purpose of the Act is not to limit AI’s growth but to make AI development more stable. Responsible development matters more than speed at all costs. Although the Act will raise R&D costs for some enterprises, it provides a direction for the healthy development of AI and for building a sustainable AI ecosystem.

The Different Paths of the EU and China

The EU and China have taken two different approaches to AI legislation. The EU has developed a comprehensive law and aims to govern AI in a unified, transparent, and safe manner. It sorts AI systems into several risk levels, and high-risk applications must comply with strict requirements. Fields such as autonomous driving and healthcare call for particular caution. The EU places great importance on human rights, transparency, and market supervision, and hopes to balance innovation with rules.

China, by contrast, has taken a more gradual approach. It issues separate regulations for specific AI applications, mainly under the supervision of government departments. It aims both to encourage innovation and to keep AI under control, and it requires that AI development comply with the requirements of the Chinese Communist Party. It places greater emphasis on national security, social stability, and information control. It demands that AI-generated content be true and reliable and clearly labeled as AI-generated, and it stresses that platforms bear primary responsibility. China also intends to set regulatory rules according to the degree of risk and to apply different management measures to different situations. In this way, it neither suppresses innovation nor lets risks run unchecked (Chen, 2024).

The EU values democracy and the rule of law, and it seeks to work with other countries to shape global rules for AI. China, on the other hand, prioritizes security. It holds that AI development should benefit the country and align with national priorities, and it promotes its rules through projects such as the “Belt and Road Initiative”. In short, the EU wants an AI world that is rule-based and open, while China wants AI to contribute to economic development and to remain under control. Although the two approaches differ considerably, each side holds to its key concerns and may still reach consensus with the other in some areas. Protecting personal privacy and preventing AI systems from misusing data, for example, is a concern of the EU and an area to which China is paying growing attention. If the two sides cooperated to formulate some common rules, global issues might be addressed more effectively.

Conclusion

The rapid development of artificial intelligence has brought unprecedented opportunities and challenges, and its governance has become a focus of global attention. Different stakeholders (governments, enterprises, and the public) have different demands for AI, which makes governance highly complex. The European Union took the lead in establishing a comprehensive regulatory framework through the “Artificial Intelligence Act”, which emphasizes transparency, safety, and the protection of human rights. China, in contrast, has adopted a gradual legislative approach that focuses on national security and social stability while encouraging technological innovation. Although the two paths differ, both reflect the importance attached to managing AI risk. Looking ahead, global collaboration and the coordination of rules will be of great significance. Only through cross-border cooperation can a sustainable AI ecosystem be built that is both innovative and environmentally friendly. The EU and China may learn from each other’s experience and together address the far-reaching impacts of this technological revolution. This requires not only flexible laws but also cooperation among industries and countries, so that shared rules and standards are gradually established. Only in this way can AI be guided along a safe, reliable, and socially responsible path of development.

Reference List

Chen, Q. (2024, February 7). China’s emerging approach to regulating general-purpose artificial intelligence: Balancing innovation and control. Asia Society Policy Institute. https://asiasociety.org/policy-institute/chinas-emerging-approach-regulating-general-purpose-artificial-intelligence-balancing-innovation-and

Crawford, K. (2021). The atlas of AI: Power, politics, and the planetary costs of artificial intelligence. Yale University Press.

European Union. (2021). The EU Artificial Intelligence Act. https://artificialintelligenceact.eu/

Just, N., & Latzer, M. (2017). Governance by algorithms: Reality construction by algorithmic selection on the Internet. Media, Culture & Society, 39(2), 238–258. https://doi.org/10.1177/0163443716643157

LeanIX GmbH. (2024). Complete history of AI. LeanIX. https://www.leanix.net/en/wiki/ai-governance/history-of-ai

Zaidan, E., & Ibrahim, I. A. (2024). AI governance in a complex and rapidly changing regulatory landscape: A global perspective. Humanities and Social Sciences Communications, 11(1), 1–18. https://doi.org/10.1057/s41599-024-03560-x
