Introduction: When a Cry for Justice Becomes a Target for Hate

On May 23, 2023, a heartbreaking accident shook Wuhan Hongqiao Primary School: a first grader was struck and killed by a teacher’s car. In the wake of this unimaginable loss, the child’s mother, Ms. Yang, fought for justice and dignity. What should have been a moment of public sympathy quickly turned into a storm of online hostility. On June 2, after relentless online abuse, she jumped from a building and took her own life. The tragedy has since sparked a national conversation about the failures of digital governance and the human cost of unchecked cyberbullying.

How Grief Became a Spectacle: The Anatomy of Online Abuse

As Ms. Yang sought answers and accountability for her son’s death, the internet turned into a courtroom—and she became the defendant.

The Cruel Doubts: Instead of support, Ms. Yang faced a flood of accusations. Some questioned her motives, accusing her of “dramatizing” the incident or exploiting the tragedy for financial gain. Others mocked her clothing, speculating that she didn’t “look sad enough” to be a grieving mother.

Personal Attacks: Insults came next. Her appearance, her behavior, even her grief were picked apart. She was labeled “a fine actress” and deemed “unworthy of sympathy.” One commenter chillingly suggested she “deserved it.”

Secondary Trauma: As her story went viral, so did falsehoods. Baseless rumors claimed she was in a financial dispute with the school and accused her of trying to extort money by leveraging her child’s death. These conspiracy theories rubbed salt into an already gaping wound and deepened her emotional isolation.

A Mother’s Breaking Point: When Silence Meets Suffering

After Ms. Yang’s death, family members came forward, shedding light on the profound toll online harassment had taken on her—and the direct link it had to her final act.

1. Crushed by the Weight of the Internet: Ms. Yang was already burdened with unbearable grief. The relentless hatred, suspicion, and digital cruelty deepened that grief and made it darker. Over time, the constant bombardment wore her down and fed a spiral of depression and anxiety.

2. Alone in the Fight for Justice: While seeking justice for her child, Ms. Yang found herself isolated: without support from school officials, abandoned by local authorities, and misunderstood by wider society. The justice she sought was slow to arrive. As the online abuse intensified, her hope faded. In the end, she saw no other way out.

Cyberbullying: The Invisible Weapon That Kills

In today’s digital society, online hate has become more than just background noise—it’s an insidious threat, corroding public spaces and social values with alarming speed. Often dismissed as “just words,” cyber violence operates in the shadows, its anonymity making it all the more dangerous—and harder to hold accountable.

According to researchers Carlson and Frazer (2018), the damage caused by online abuse runs far deeper than insults. The real danger lies in its lasting impact on a victim’s mental health: prolonged exposure to hate speech and personal attacks is closely linked to anxiety, depression, and, tragically, suicidal ideation. The harm does not stop with individuals, either. It chips away at the trust and empathy within communities, leaving emotional scars that ripple across society. Ms. Yang’s story is a stark example. While she was struggling to cope with the loss of her child, she became the target of faceless tormentors. They criticized her clothes, her appearance, even her pain. Rumors questioned her motives and tarnished her character. This was no longer “criticism.” It was a relentless psychological attack, one that pushed her over the edge.

And she’s not the only one.

In 2020, Japanese wrestler Hana Kimura died by suicide after being targeted over her appearance on a reality show. In 2019, South Korean singer and actress Sulli (Choi Jin-ri) met the same fate following months of rumors and ridicule. In 2021, French influencer Mava Flosa ended her life after enduring prolonged harassment online. Each of these stories is a stark reminder that cyberbullying is not just cruel. It is deadly.

The Mechanics of Digital Cruelty: Why Cyber Violence Is So Dangerous

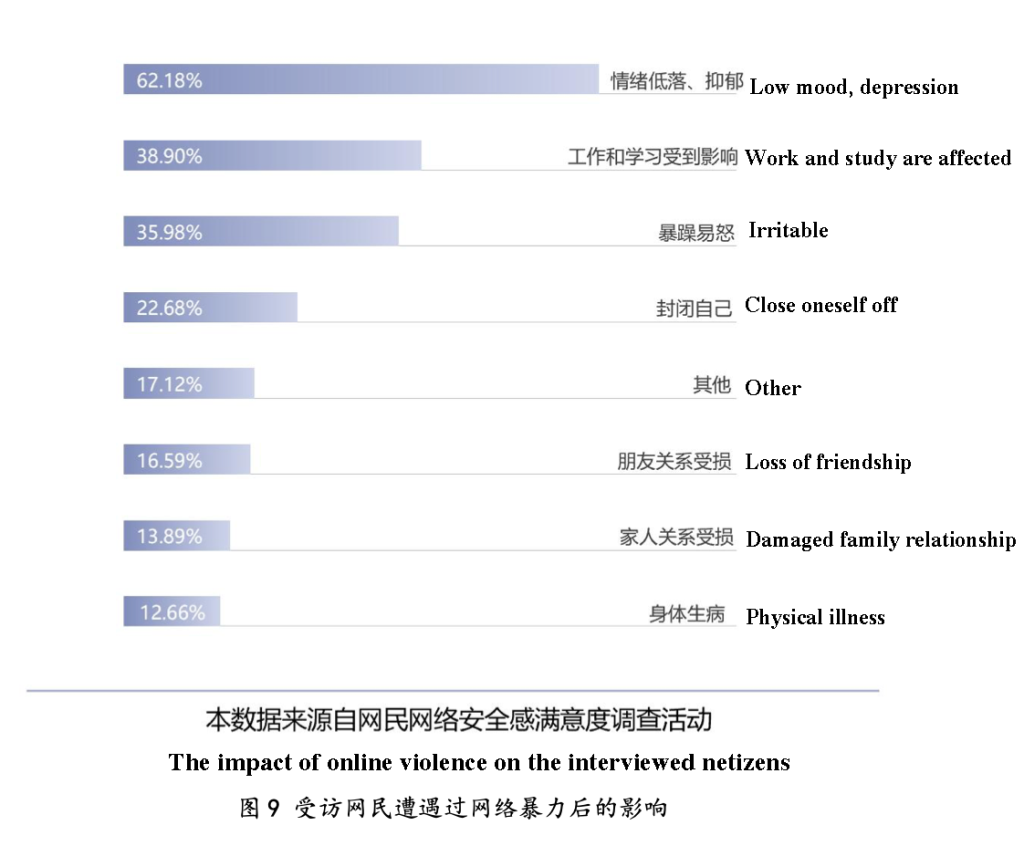

According to the “2022 National Internet Users’ Satisfaction with Internet Security” special survey report on “Prevention and Control of Internet Violence and Internet Civilization,” online violence has a range of psychological and physical effects on victims:

- 62.18% of victims experienced psychological problems such as depression;

- 38.90% of victims said that cyberbullying affected their work or studies;

- 35.98% of victims became irritable;

- more than 10% of victims withdrew from others, saw their relationships with family and friends damaged, or developed physical illness.

To truly understand the threat of cyberbullying, one must look beyond the surface. The danger lies not only in the words themselves but in how they are spread, multiplied, and tacitly accepted by society, which is what makes this seemingly “invisible” violence so insidious.

Anonymity is one of the most dangerous drivers of cyberbullying. Hiding behind a virtual identity, people often lose empathy, their sense of responsibility fades, and cruelty comes easily. The group dynamics of online violence then let the situation spiral out of control: a single malicious comment can trigger a wave of verbal attacks, and this “violence integration” effect quickly erodes the group’s moral boundaries (Strathern et al., 2020), allowing abuse to run rampant. At the same time, digital media let rumors and hate speech spread at alarming speed. In Ms. Yang’s case, rumors and attacks about her exploded online within a few days, leaving her no room to respond and no way out. Under the pressure of public opinion, she became increasingly isolated.

More worrying still, psychological abuse, unlike physical abuse, leaves no visible traces, yet the damage it causes is just as real and can be just as deadly. Society often dismisses victims’ emotional responses as “oversensitivity,” ignoring or even denying their pain. Ms. Yang’s death is a stark warning that underestimating trauma can have irreversible consequences.

The deeper problem is the role of the platforms themselves. As researcher Matamoros-Fernández argues in her concept of “platformed racism,” the algorithmic mechanisms of social media platforms often inadvertently facilitate the spread of harmful content. Without effective intervention mechanisms, these engagement-centered systems have become hotbeds of hate and cyberbullying.

Ultimately, this is not just a matter of individual moral failure but a public health crisis and a collective failure of digital governance. If we want to avoid the next tragedy, governments, tech platforms, and the public must all work together and take up their share of responsibility.

When Tech Fails Humanity

For all the power they wield, today’s digital platforms are still struggling with one of the internet’s most urgent issues: hate speech. According to media theorist Terry Flew (2021), there’s a critical gap in how these platforms handle online abuse. While they champion free speech and user engagement, they often fall short when it comes to protecting users from harm. Too often, algorithms prioritize what gets clicks over what’s right. In this attention economy, outrage travels faster than empathy.

Take Ms. Yang’s case. After her story went viral and she became the target of online mobs, the platform eventually stepped in—banning over 600 accounts. But by then, the damage was done. The intervention came too late to make a difference. This kind of after-the-fact action reveals a deeper problem: the absence of proactive systems. There was no early-warning mechanism. No meaningful victim protection. Just a slow and insufficient response to a fast-moving, devastating crisis.

Silence in Practice: What the Wuhan Case Reveals

Ms. Yang’s case is more than a personal tragedy—it’s a glaring case study in the failures of digital governance. Her experience brings to light four major issues plaguing the way platforms handle online hate:

1. The Gray Area of Hate Speech: How do you define hate speech across languages, cultures, and contexts? Research on how Facebook regulates hate speech in the Asia Pacific (Sinpeng et al., 2021) shows that hate speech is deeply nuanced and context-dependent. Yet platforms still rely on broad, global definitions that fail to capture the real impact on victims. Meta, for example, frequently touts its commitment to combating online hate, but in practice its vague policies offer little real protection to users in situations like Ms. Yang’s.

2. Broken Reporting Systems: Researchers Carlson and Frazer have noted a worrying trend: many online communities lack moderators who are trained to identify and handle hate speech effectively. In the Wuhan case, this became painfully obvious. There were no clear ways for Ms. Yang—or the public—to report the abuse she endured. No safety net. No feedback. Just silence.

3. Dodging Accountability: The concept of “Platformed Racism,” introduced by Matamoros-Fernández (2017), holds that tech platforms don’t just allow hateful content to exist—they can amplify it. Whether by design or neglect, algorithms often push sensational, divisive posts to the top, further fueling cyber violence.

4. Profit and Politics Over Protection: As reported by Social Media Today (2025), companies like Meta tend to tread carefully around politically or economically sensitive topics. When a story like Ms. Yang’s goes viral, platforms may avoid stepping in, fearing backlash. That hesitation enables hate to spread unchecked.

“How the platform’s design, algorithms, and governance mechanisms inadvertently contribute to the proliferation of racism.”

— Matamoros-Fernández

📰What Platforms Must Do

Fighting cyber violence isn’t just about naming the problem—it’s about fixing it. And that requires everyone at the table: platforms, governments, and ordinary users alike.

Fix the Filters

Digital platforms need to strengthen their content moderation systems. It’s not enough to rely on slow, inconsistent reviews—hate speech must be flagged and removed swiftly and accurately.

Make Reporting Easier

Victims shouldn’t have to jump through hoops to get help. Platforms must streamline reporting tools so users can quickly and safely raise the alarm.

Partner with the People Who Know Best

Tech companies must work hand-in-hand with government agencies and civil society groups to build smarter, more compassionate systems for handling abuse.

👤What the Public Can Do

Learn Before You Post

Raising our collective internet literacy is essential. Knowing how to express views respectfully—and when not to engage—is the first step toward ending online cruelty.

Be an Active Bystander

If you see abuse, report it. Silence fuels cyber violence. Taking action can break the cycle.

Stand With Victims

Show empathy. Offer support. Often, just knowing someone sees and believes them can help a victim hang on.

Building a safer, healthier internet isn’t the responsibility of one person or platform. It’s a shared mission—and one we can’t afford to ignore.

Conclusion: Never Again

Ms. Yang’s heartbreaking story is more than a singular tragedy. It is a mirror held up to our digital age. Her death exposed cracks in every corner of our online world: failing regulations, toothless platform governance, a fragile social support system, and a dangerously low level of digital literacy. But at its core, this is a human story, a reminder that behind every screen is a real person fighting battles we may never see. To prevent tragedies like this from happening again, we need more than outrage. We need action.

🏛️Governments must introduce clearer, stronger legislation to protect users.

🧷Platforms must take real responsibility, not just after harm is done, but before.

👤Communities must educate themselves and stand against hate. Especially for young people, digital literacy isn’t optional—it’s survival.

And perhaps most importantly, we must never let stories like Ms. Yang’s fade into silence. Her tragedy should not be a fleeting headline. It should be a turning point: a call to reform how we manage the digital world, how we treat one another online, and how we respond to cruelty with compassion. Only through shared commitment and deep societal change can we make the internet a safer, more humane space for everyone.

References:

Carlson, B., & Frazer, R. (2018). Social Media Mob: Being Indigenous Online. Macquarie University. https://researchers.mq.edu.au/en/publications/social-media-mob-being-indigenous-online

Flew, T. (2021). Regulating Platforms. Polity Press.

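Matamoros-Fernández, A. (2017). Platformed racism: The mediation and circulation of an Australian race-based controversy on Twitter, Facebook and YouTube. Information, Communication & Society, 20(6), 930–946. https://doi.org/10.1080/1369118X.2017.1293130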

Meta Transparency. (2025). Hate Speech – Community Standards.

Sinpeng, A., Martin, F., Gelber, K., & Shields, K. (2021). Facebook: Regulating Hate Speech in the Asia Pacific. University of Sydney & University of Queensland. https://r2pasiapacific.org/files/7099/2021_Facebook_hate_speech_Asia_report.pdf

Social Media Today. (2025). Meta Political Content Update.

Strathern, W., Schoenfeld, M., Ghawi, R., & Pfeffer, J. (2020). Against the Others! Detecting Moral Outrage in Social Media Networks. arXiv preprint arXiv:2010.07237. https://doi.org/10.48550/arXiv.2010.07237

Appendixes:

Figure 1 Netizens’ comments on Ms. Yang’s fight for justice: https://weibo.com/2230913455/4908286140221529

Figure 2 Hana Kimura’s photos from the competition and her company’s obituary: https://m.thepaper.cn/wifiKey_detail.jsp?contid=7561391&from=wifiKey&utm_source=chatgpt.com#

Figure 3 The impact of online violence on the interviewed netizens: https://www.iscn.org.cn/uploadfile/2023/0104/9.pdf?utm_source=chatgpt.com

Video Wuhan mother jumps to death, Who is responsible? https://www.youtube.com/watch?v=bdZNXfBhvA8&rco=1
