As technology develops, many of us have noticed something in daily life: whether we are shopping online, watching videos or news on social media, or even choosing what to eat, we can almost always find options we like very quickly. Sometimes we simply open an app and the home page already shows exactly what we were looking for.

This is not a coincidence. It is the work of artificial intelligence (AI) and recommender system algorithms. These algorithms analyze our behavior, such as the types of content we have liked and searched for in the past, to predict and recommend content we are likely to enjoy.

What looks like a user-friendly feature for solving everyday problems can quietly change the way users make choices and shape their perceptions. In this blog, I will explain how AI and recommender system algorithms work, how they can inadvertently steer users’ choices, and how governance and policy can restrict the way these algorithms operate.

The Development and Popularization of AI

In recent years, AI has moved out of research labs that once seemed out of reach and into people’s daily lives. People are now surrounded by AI applications at almost every moment, from online shopping to voice assistants such as Siri, and these applications can improve their quality of life.

Mobile phones are one of the most common channels through which AI has become popular. It is hard to find a modern smartphone that does not use AI, and many features that people use all the time without noticing are powered by it. The camera, for example, uses AI to optimize image quality. The home pages of many apps also make content recommendations based on user preferences: platforms such as Netflix and TikTok use complex recommender system algorithms to suggest personalized video content based on each user’s past data and preferences (Hallinan & Striphas, 2016).

What Is a Recommender System Algorithm and How Does It Work?

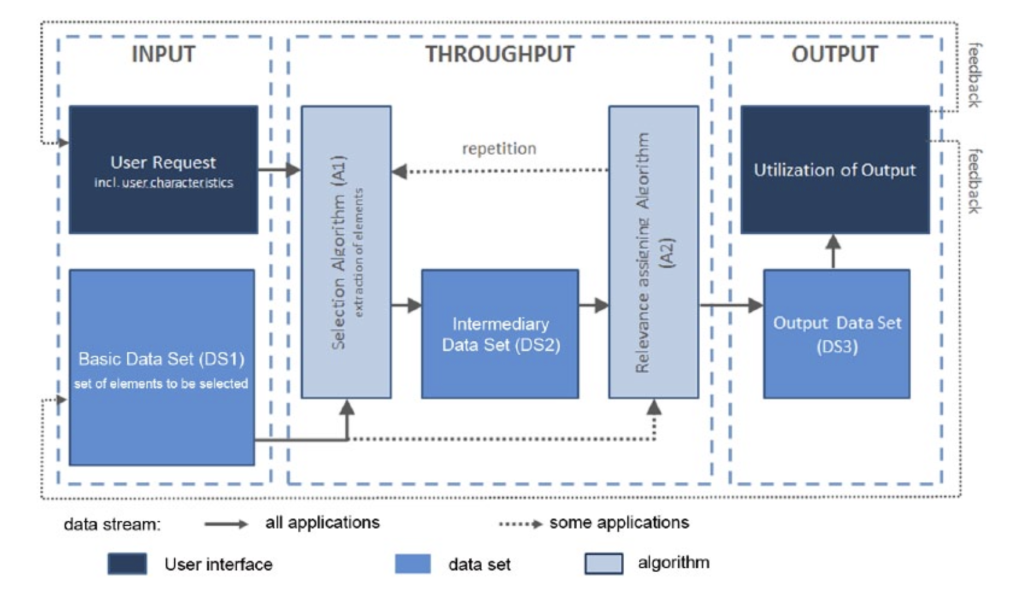

What is a recommender system algorithm? We can think of an algorithm as a series of steps that eventually solves a problem or accomplishes a task. Take an everyday example: assembling furniture. When we buy a piece of furniture, the box contains all the parts we need and an instruction manual. The manual plays the role of the algorithm: it tells us what to do next and guides us step by step through the assembly (Flew, 2021, p. 82).

The first step of a recommender system algorithm resembles the starting point of furniture assembly: just as we begin with all the parts and tools, the recommender system begins with user data and historical behavioral information.

In the second step, we usually read the instructions to understand how each stage is completed. Likewise, the recommender algorithm begins applying its model rules to process the input data.

Next, we assemble the furniture by following the steps in the instructions in the correct order. Similarly, the recommender system analyzes the user data and behavioral information collected in the first step, applies techniques such as content analysis to match users with items they are likely to like, and generates a list of recommendations tailored to each user.
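The matching in these first three steps can be sketched in a few lines of Python. This is a minimal content-based example, assuming each item is described by hand-made tag weights and the user’s history is simply a list of items they liked; the catalogue, tags, and function names are hypothetical, and real platforms use far richer data and learned models. The user profile is the sum of the tags of the liked items, and unseen items are ranked by cosine similarity to that profile.

```python
import math

# Hypothetical catalogue: each item is described by tag weights
# (step 1: the "parts" the algorithm starts with).
ITEMS = {
    "action_movie": {"action": 1.0, "thriller": 0.5},
    "spy_thriller": {"action": 0.6, "thriller": 1.0},
    "romcom": {"romance": 1.0, "comedy": 0.8},
    "stand_up": {"comedy": 1.0},
}


def cosine(a, b):
    """Cosine similarity between two sparse tag vectors stored as dicts."""
    dot = sum(w * b.get(tag, 0.0) for tag, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_profile(history):
    """Step 2: turn the user's history into one preference vector by summing tag weights."""
    profile = {}
    for item_id in history:
        for tag, w in ITEMS[item_id].items():
            profile[tag] = profile.get(tag, 0.0) + w
    return profile


def recommend(history, k=2):
    """Step 3: score items the user has not seen against the profile and return the top k."""
    profile = build_profile(history)
    candidates = [item for item in ITEMS if item not in history]
    candidates.sort(key=lambda item: cosine(profile, ITEMS[item]), reverse=True)
    return candidates[:k]


print(recommend(["action_movie"]))  # → ['spy_thriller', 'romcom']
```

Cosine similarity is used here because it compares the direction of two tag vectors rather than their size, so a user with a long history is not automatically matched to items with many tags.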

If a problem appears during assembly, we may adjust the position of components or connecting points to make the furniture more stable (Just & Latzer, 2016). A recommender system works the same way: it adjusts its recommendations according to which ones users favor and other feedback they give, improving the quality of future recommendations.
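The adjustment step can be illustrated with a simple update rule. This sketch assumes a hypothetical profile of per-category weights between 0 and 1: each click pulls a category’s weight toward 1 and each skip pulls it toward 0 by a fixed rate. It is only one easy-to-follow way to fold feedback into a profile, not the method any particular platform uses.

```python
# Hypothetical user profile: one weight per content category (higher = more preferred).
profile = {"cooking": 0.5, "travel": 0.5, "sports": 0.5}


def update_profile(profile, category, clicked, rate=0.2):
    """Move one category's weight toward 1 on a click and toward 0 on a skip."""
    target = 1.0 if clicked else 0.0
    profile[category] += rate * (target - profile[category])
    return profile


def rank(profile):
    """Order categories by current weight, i.e. what the next page would show first."""
    return sorted(profile, key=profile.get, reverse=True)


# Feedback from one session: the user clicks travel twice and skips sports once.
update_profile(profile, "travel", clicked=True)
update_profile(profile, "travel", clicked=True)
update_profile(profile, "sports", clicked=False)
print(rank(profile))  # → ['travel', 'cooking', 'sports']
```

After this session the travel weight rises from 0.5 to 0.68 and the sports weight falls to 0.4, so the next page is reordered accordingly. A smaller `rate` would make the profile react more slowly to individual clicks.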

Finally, just as we end up with finished furniture, users see on their own home pages the content that the system predicts will interest them.

How Recommender System Algorithms Influence People’s Choices and Behavior

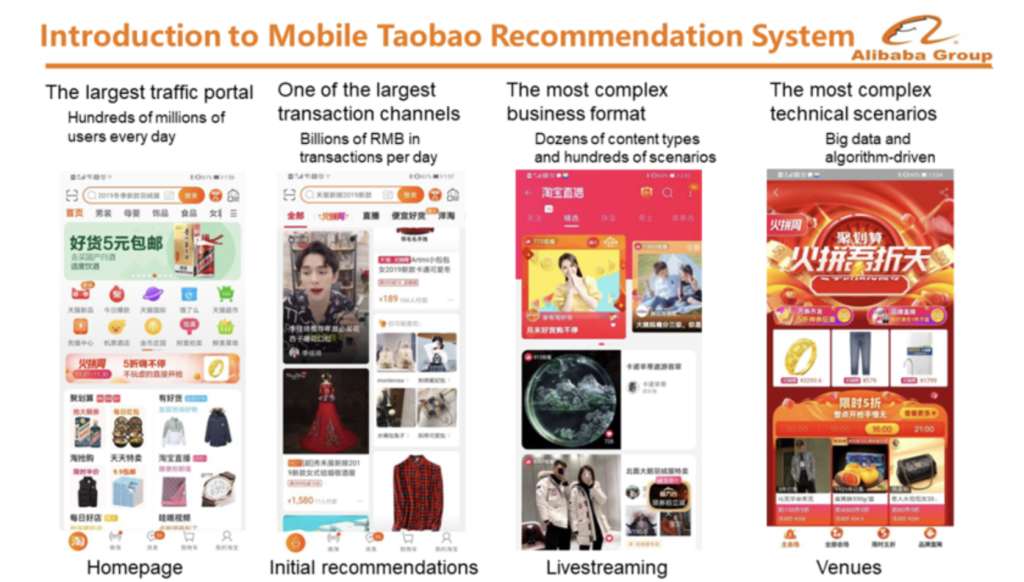

As people come into more contact with AI, many find that their choices are, to some extent, influenced by recommender system algorithms. One of the most common examples is online shopping, where sites such as Amazon and Taobao are among the largest platforms.

On Taobao, when a user browses or searches for a product, the algorithm recommends related products based on the user’s search and browsing history. After the user places an order, it also recommends products related to the purchased item at the bottom of the order page. For example, if you buy a camera, it will immediately recommend memory cards, camera bags, and other related products (How Taobao Applies AI to Personalized Recommendations, 2021). This increases the chances of cross-selling, and at the same time it nudges users, in a way most people accept as normal, toward buying goods they may not actually need.
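A common, simple way to produce “bought together” suggestions is to count how often items appear in the same order. The sketch below is not Taobao’s method, which is far more complex; the order history and function names are hypothetical and only show how co-purchase counts turn a camera purchase into memory card and camera bag suggestions.

```python
from collections import Counter, defaultdict
from itertools import permutations

# Hypothetical order history: each order lists the items bought together.
ORDERS = [
    ["camera", "memory_card"],
    ["camera", "memory_card", "camera_bag"],
    ["camera", "memory_card"],
    ["camera", "camera_bag"],
    ["camera", "tripod"],
    ["memory_card", "usb_reader"],
]


def co_purchase_counts(orders):
    """For every item, count how often each other item appears in the same order."""
    counts = defaultdict(Counter)
    for order in orders:
        for a, b in permutations(order, 2):
            counts[a][b] += 1
    return counts


def bought_together(item, orders, k=2):
    """Return up to k items most often bought alongside `item`."""
    return [other for other, _ in co_purchase_counts(orders)[item].most_common(k)]


print(bought_together("camera", ORDERS))  # → ['memory_card', 'camera_bag']
```

Because the counts come only from what earlier shoppers bought, the suggestions favor common accessory bundles whether or not this particular buyer needs them.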

Although these algorithms were initially thought to bring convenience and efficiency, the “excessive” involvement of algorithms in people’s lives has gradually led to a number of impacts and consequences.

Impacts and Consequences of Recommender System Algorithms

The first consequence is the filter bubble. Because recommender system algorithms recommend only content related to a user’s interests, based on data collected in the past, users can become trapped in filter bubbles. This reduces their exposure to new information and different perspectives and affects the openness and inclusiveness of online society as a whole.

There have also been gender-differentiated filter bubbles in the comment sections of Douyin, the Chinese version of TikTok. For example, when a video is likely to provoke a debate between men and women with different viewpoints, most female users see only comments made by or supporting women, while male users see comments made by or supporting men. Although this approach may keep men and women from arguing online, it worsens social problems by confining most users to filter bubbles, as if their freedom were being restricted and their thinking manipulated.
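The narrowing effect of a filter bubble can be seen in a toy simulation. It assumes a hypothetical user who clicks everything shown and a feed filled from whichever topic has the most clicks so far; the optional `explore_slots` parameter reserves a few slots for other topics. Neither rule describes how TikTok, Douyin, or any other platform actually ranks content.

```python
def simulate(initial_clicks, rounds, feed_size=5, explore_slots=0):
    """Toy feedback loop. Each round the feed is filled from the topic with the most
    clicks so far, except `explore_slots` slots shared among the other topics.
    Assumes the user clicks every item shown. Returns each topic's share of clicks."""
    clicks = dict(initial_clicks)  # copy so the caller's dict is not changed
    for _ in range(rounds):
        top = max(clicks, key=clicks.get)
        others = [topic for topic in clicks if topic != top]
        clicks[top] += feed_size - explore_slots
        for slot in range(explore_slots):
            clicks[others[slot % len(others)]] += 1
    total = sum(clicks.values())
    return {topic: round(n / total, 2) for topic, n in clicks.items()}


start = {"news": 3, "sports": 2, "science": 2}
print(simulate(start, rounds=10))                   # → {'news': 0.93, 'sports': 0.04, 'science': 0.04}
print(simulate(start, rounds=10, explore_slots=2))  # → {'news': 0.58, 'sports': 0.21, 'science': 0.21}
```

With pure “more of the same” ranking, a topic that started with 3 of 7 clicks ends up with over 90% of them after ten rounds. Reserving two of five slots for other topics keeps it near 58%, which is one simple way a system could deliberately widen exposure.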

At the same time, there are privacy and security concerns: because recommender algorithms are so heavily “involved” in people’s lives, many people feel that their privacy is being violated and that they are being treated with bias.

Privacy Management and Bias

As described above, the first step of the algorithm is to take in the user’s data. To do this, algorithms collect a large amount of information about the user, including not only personal details and behavioral preferences but sometimes even biometric data. Because this data is so personal, it raises concerns that it could be exploited for profit or exposed.

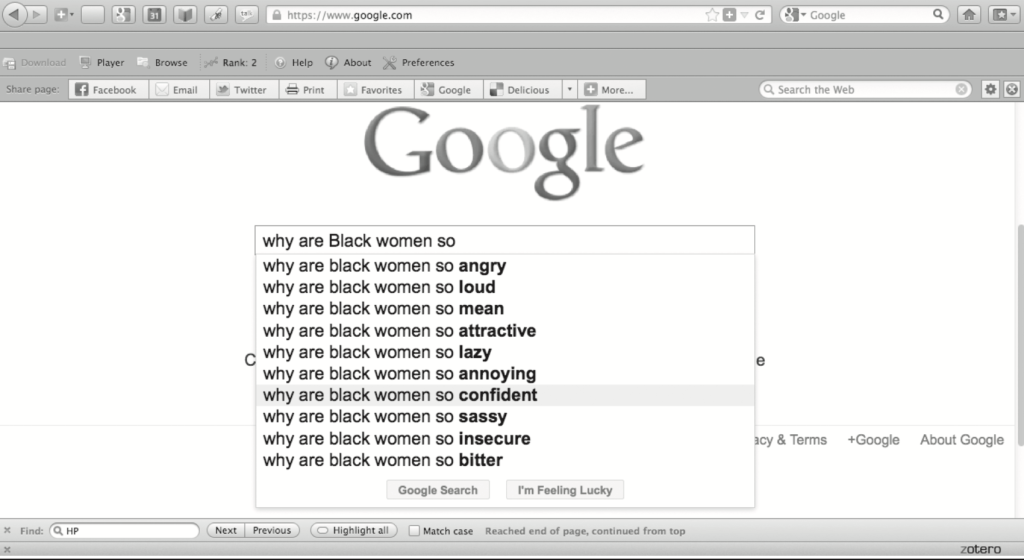

Bias is another problem that has only recently drawn wide attention. The gender-differentiated filter bubbles mentioned above are just one result of bias. A well-known example of bias in Google’s search engine appears in Safiya Umoja Noble’s book Algorithms of Oppression (2018, pp. 15–63). Noble discusses a United Nations campaign that used real Google search autosuggestions to show how pervasive sexism and racism are in the algorithm’s suggestions. She also shows that searches related to Black women and girls returned links to pornographic websites.

There are several reasons why an algorithm may be biased. The first is the data the algorithm takes in at the beginning. Recommendation algorithms usually rely on users’ past data to calculate what to recommend, and if this data contains biases, the algorithm may reproduce them from its very first steps, much like the stereotypes people hold. For example, if the viewing data for a movie comes mainly from viewers in one region, the algorithm may keep recommending a series of similar movies to viewers in that region, ignoring the more diverse needs and preferences of other viewers.
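A popularity-only recommender shows how skewed input data passes straight through to the output. The viewing log below is hypothetical and deliberately lopsided: eight of its ten rows come from region A. Ranking by overall view count therefore recommends region A’s favorite to everyone, even though region B’s viewers watched something else.

```python
from collections import Counter

# Hypothetical viewing log of (viewer_region, movie); 8 of 10 rows come from region A.
LOG = ([("A", "movie_x")] * 6
       + [("A", "movie_y")] * 2
       + [("B", "movie_z")] * 2)


def top_movie(log):
    """Recommend the single most-watched movie, ignoring who watched it."""
    return Counter(movie for _, movie in log).most_common(1)[0][0]


def top_movie_for_region(log, region):
    """Most-watched movie among viewers from one region only."""
    return Counter(movie for r, movie in log if r == region).most_common(1)[0][0]


print(top_movie(LOG))                  # → movie_x (region A's favorite, shown to everyone)
print(top_movie_for_region(LOG, "B"))  # → movie_z (what region B actually watched)
```

Nothing in `top_movie` is explicitly unfair; the skew comes entirely from who is represented in the data it learns from.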

Some of the decisions designers make when building a recommender system may also cause algorithmic bias (Noble, 2018, p. 35). Once bias is present in the initial input data, it leads to biased initial recommendations, and every user response to those recommendations, such as clicking or watching, then reinforces that bias. Because a feedback loop sits at the core of the algorithm, users’ reactions cause the bias to be reproduced and amplified again and again.
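The feedback loop can be sketched with two equally good items. The assumptions are hypothetical: the item with more past clicks is always shown first, and the first position collects 70 of every 100 new clicks regardless of quality.

```python
def feedback_loop(clicks_a, clicks_b, rounds, users_per_round=100, top_slot_clicks=70):
    """Two equally good items. Whichever has more past clicks is shown first, and the
    first slot receives `top_slot_clicks` of each round's clicks. Returns the click
    gap (a minus b) after every round."""
    gaps = []
    for _ in range(rounds):
        if clicks_a >= clicks_b:
            clicks_a += top_slot_clicks
            clicks_b += users_per_round - top_slot_clicks
        else:
            clicks_b += top_slot_clicks
            clicks_a += users_per_round - top_slot_clicks
        gaps.append(clicks_a - clicks_b)
    return gaps


print(feedback_loop(55, 45, rounds=5))  # → [50, 90, 130, 170, 210]
```

A head start of only 10 clicks, for example from biased initial data, grows by 40 clicks every round, even though by assumption neither item is better than the other.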

Finally, existing problems in society also contribute to algorithmic bias (Noble, 2018, p. 45). Because recommendation algorithms learn from data, they may replicate the biases already present in society. In the case of Google’s search box, people reveal their opinions and ideas to the algorithm through what they search for. When a large part of society shares the same bias and searches for related content, the algorithm learns from this data and uses it to guide other people, including minority groups, toward accepting those biases.
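Frequency-based autocomplete is a simple way to see how majority input becomes a suggestion shown to everyone. The query log and placeholder queries below are hypothetical, and Google’s real autocomplete is far more complex and applies its own policies; the sketch only shows that ranking suggestions by how often they were typed reproduces whatever most users typed.

```python
from collections import Counter


def autocomplete(query_log, prefix, k=3):
    """Suggest up to k past queries starting with `prefix`, most frequently typed first."""
    matches = Counter(q for q in query_log if q.startswith(prefix))
    return [query for query, _ in matches.most_common(k)]


# Hypothetical log: placeholder text stands in for real queries.
QUERY_LOG = (["group x is <view shared by many users>"] * 8
             + ["group x is <view shared by a few users>"] * 2
             + ["weather tomorrow"] * 5)

print(autocomplete(QUERY_LOG, "group x is", k=1))  # → ['group x is <view shared by many users>']
```

Every user who types the same prefix sees the majority view first, which in turn makes that view more likely to be searched again.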

Governance and Policy

If these biases and influences are not effectively limited and corrected, they will have a negative impact on society. Governance and policy on AI and recommendation algorithms are evolving. Different countries and organizations are also developing policies and regulations for better use of AI technology.

1. The EU’s General Data Protection Regulation (GDPR)

The GDPR, which took effect in 2018, protects the personal data of people in the EU. It sets strict rules, such as requirements for explicit consent and data minimization, and gives individuals the right to access, correct, and delete their data (GDPR Compliance | Seekom, 2018).

2. Consumer Privacy Protection in the United States

In the United States, consumer privacy is regulated mainly by state laws, such as the California Consumer Privacy Act (CCPA), which follows principles similar to those of the GDPR. The U.S. Federal Trade Commission also regulates how companies use consumer data (Murray, 2023).

3. China’s Data Security and Personal Information Protection Laws

In 2021, China implemented the Data Security Law and the Personal Information Protection Law to enhance security and transparency in data processing (Luo, 2023).

4. Guiding principles and frameworks of international organizations

The Organisation for Economic Co-operation and Development (OECD) has published its Principles on Artificial Intelligence, which emphasize transparency, security, fairness, and accountability in AI systems (OECD, 2022).

AI governance is a way to ensure that AI is operated and used in line with ethical and compliance standards (Hiter, 2023). Good governance helps an organization meet regulatory standards, safeguard customer data, and get the most out of its AI. By putting an AI governance framework in place, an organization can prioritize data security and privacy while still achieving its AI goals more effectively.

Conclusion

In recent years, AI and recommender system algorithms have become increasingly integrated into daily life, with a subtle but significant impact on users’ perceptions and choices. These algorithms use a user’s history to predict and recommend content, which adds convenience but may also stifle free thought by creating “filter bubbles.” These bubbles limit exposure to opposing viewpoints and can make online interaction less open and inclusive. The collection of large amounts of personal data also raises privacy and security concerns. Bias in algorithmic recommendations, which can arise from skewed initial data or existing social prejudices, can reinforce stereotypes and discrimination. Legislation such as the GDPR in the EU, the CCPA in the US, and China’s data security and personal information protection laws aims to strengthen data privacy and system transparency in response to these problems. Nevertheless, as AI technology changes rapidly, strong governance remains essential for reducing bias and protecting user rights.

References

Flew, T. (2021). Regulating platforms (pp. 79–86). Polity Press.

GDPR Compliance | Seekom. (2018, May 21). https://www.seekom.com/about-seekom-nz-property-management-channel-manager/gdpr-compliance/

Hallinan, B., & Striphas, T. (2016). Recommended for you: The Netflix Prize and the production of algorithmic culture. New Media & Society, 18(1), 117–137. https://doi.org/10.1177/1461444814538646

Hiter, S. (2023, December 2). AI Policy and Governance: What You Need to Know. eWEEK. https://www.eweek.com/artificial-intelligence/ai-policy-and-governance/

How Taobao Applies AI to Personalized Recommendations. (2021, April 1). Alibaba Cloud Community. https://www.alibabacloud.com/blog/how-taobao-applies-ai-to-personalized-recommendations_597556

Just, N., & Latzer, M. (2016). Governance by algorithms: reality construction by algorithmic selection on the Internet. Media, Culture & Society, 39(2), 238–258. https://doi.org/10.1177/0163443716643157

Luo, D. (2023, November 3). China – Data Protection Overview. DataGuidance. https://www.dataguidance.com/notes/china-data-protection-overview

Murray, C. (2023, April 21). U.S. Data Privacy Protection Laws: A Comprehensive Guide. Forbes.

Noble, S. U. (2018). Algorithms of Oppression: How Search Engines Reinforce Racism (pp. 15–63). New York University Press.

OECD. (2022). Artificial intelligence. https://www.oecd.org/digital/artificial-intelligence/
