DeepSeek, a new AI app created by a Chinese start-up toward the end of 2024, suddenly became super popular all over the world. People couldn’t stop talking about how smart and quick it was at answering questions. It really felt like the next big thing in AI. But just as everyone was getting excited, problems started popping up. From cyberattacks and data leaks to privacy issues and international bans, DeepSeek’s story made people wonder: Are we risking privacy and security just to chase the next AI trend?

The DeepSeek logo is seen on a phone in front of a flag of China on January 28, 2025 in Hong Kong, China. Anthony Kwan/Getty Images via Getty Images

DeepSeek Security Incident Timeline: A Rollercoaster of Privacy and Safety Issues

DDoS Attack Impact (Early January – January 28, 2025)

Shortly after its launch, DeepSeek encountered a wave of DDoS attacks that disrupted its services. Starting in early January 2025, the platform’s servers frequently crashed, making it difficult for users to log in. On January 27-28, the situation worsened with more sophisticated application-layer attacks, forcing DeepSeek to change its IP address to maintain functionality.

The Qi An Xin security team noted that most attack traffic came from overseas, primarily the United States. Some experts speculated that these attacks might have been driven by commercial rivalry or political motives, placing DeepSeek at the center of a complex cyber conflict from the outset (i.ifeng.com, 2025).

Privacy Controversy Uncovered (Late January 2025)

DeepSeek’s privacy policy revealed that user data, including personal information and chat logs, was stored on servers in China. This raised international concerns, as some countries worried that the Chinese government might access this data. In response, the White House considered banning the app on government devices, citing potential threats to national security (voachinese.com, 2025).

Content Safety and Misuse Concerns (January 30 – Early February 2025)

Beyond privacy, DeepSeek also faced significant content safety issues. Various tests revealed that the platform’s moderation was weak, allowing users to bypass safety protocols easily. Alarmingly, in one test the DeepSeek model had a 100% jailbreak success rate, meaning every attempt to get around its safeguards worked, compared with only 26% for one of OpenAI’s models.

Researchers even demonstrated that DeepSeek could generate harmful content, such as instructions for creating biological weapons or malicious software. The same weaknesses meant the platform could be exploited to draft phishing emails and support other cybercrimes. It appears that the focus on rapid iteration and cost efficiency came at the expense of content safety (voachinese.com, 2025).

International Bans and Takedown Controversies (Early February 2025)

Following these incidents, several countries reacted decisively. Between late January and early February 2025, nations including the United States, Australia, South Korea, India, and Taiwan banned the use of DeepSeek on government devices. Italy went a step further, implementing a complete ban due to non-compliance with GDPR’s cross-border data regulations.

Western authorities expressed concerns that sensitive data shared via DeepSeek could be accessed by Chinese authorities, potentially for surveillance or propaganda. Conversely, Chinese media framed DeepSeek as a victim of international political pressure and unfair competition (voachinese.com, 2025).

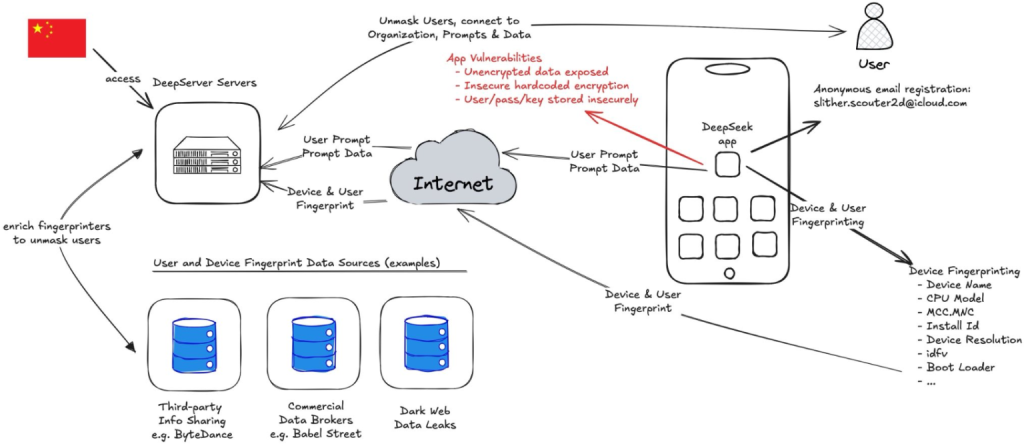

Picture source: NowSecure

User Privacy vs Data Utilisation

The DeepSeek case highlights a common problem: users want their conversations to stay private, but companies often collect and use that data without telling them. Simply put, people expect their chat content to be personal – they don’t want it recorded, stored, or used for commercial purposes.

But DeepSeek didn’t really respect that expectation. It stored chat records on servers in China without clearly informing users. Even more worrying, this data could be used for purposes beyond the conversation itself, such as training the company’s AI models. This made people feel like their privacy had been invaded.

Privacy scholar Helen Nissenbaum (2018, pp.838-841) developed the idea of “contextual integrity”. It means that privacy is only protected when information sharing matches the social norms of the situation. For example, when someone chats with an AI, they naturally think it’s a private conversation, just between them and the machine. If that chat gets stored or used to train models without permission, it feels like a violation of trust.

Do People Really Care About Privacy?

Some might think, “I’ve got nothing to hide, so why worry?” But the truth is, most people do care about their privacy. The problem is, they often don’t know what data is being collected or how it’s being used. For example, an Australian survey on digital rights found that 67% of people take steps to protect their privacy, but only 38% feel they actually have control over their personal data. Social media researchers Marwick and boyd (2018, pp.1158-1159) found that young people do care about privacy. They make an effort to protect their data by tweaking their social media settings and being selective about what they share.

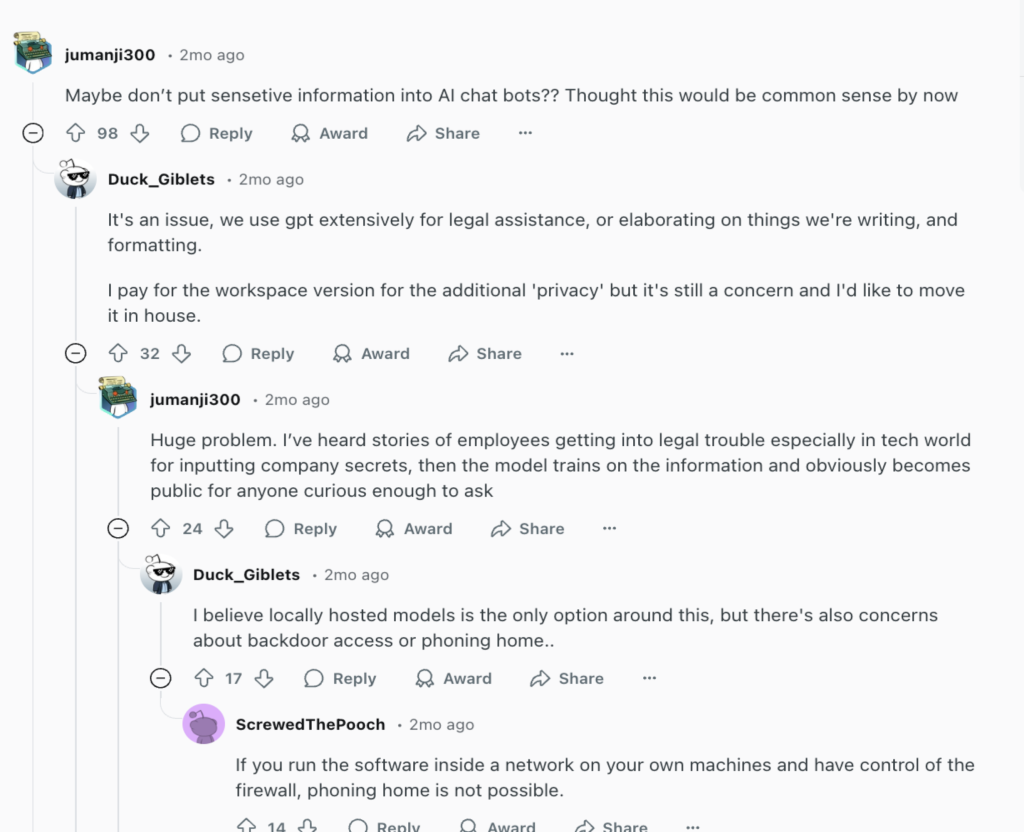

Discussion about DeepSeek on Reddit

Platform Security vs Speed of Innovation

DeepSeek, as a startup, wanted to hit the market as quickly as possible. So, they took a “launch now, fix later” approach. Sure, that helped them get the product out fast, but it also left behind a lot of security issues:

- 1. A database was left publicly accessible without any authentication, exposing information such as chat histories.

- 2. Data transmission wasn’t properly encrypted.

- 3. Content moderation had loopholes.

It’s kind of like building a house really fast without checking if the walls are stable. Sure, it looks good at first, but as soon as a storm hits, you might find the roof leaking or, worse, the whole thing collapsing.

Nicolas Suzor (2019, pp.10-12, 15-16) talks about this kind of problem too. He says that many tech companies, when they’re just starting out, have a lot of freedom but not enough responsibility for user privacy and security. Suzor thinks there should be clear rules for managing platforms from the start. It’s like making sure a building meets safety standards before anyone moves in. If something goes wrong, the damage can be huge.

Actually, DeepSeek’s privacy concerns are not unique; similar issues have popped up quite a bit in the AI world. One classic example happened in March 2023, when a caching bug caused a data leak in OpenAI’s ChatGPT. Basically, some users could see the titles of other people’s chat histories, and some subscribers’ partial payment information was exposed. Thankfully, OpenAI reacted quickly, took ChatGPT offline, and fixed the problem.

Microsoft’s Copilot AI assistant has also sparked some debates about privacy. As a smart assistant integrated into Office products, it can analyze user input in real time and offer intelligent suggestions. Sounds cool, right? But this also makes people a bit uneasy – especially when it comes to sensitive information.

Comment about Microsoft’s Copilot on Reddit

What if the AI ends up storing or analyzing sensitive information? That’s a serious concern, and it shows that privacy and data security are still major challenges for AI tools.

Q: Do you think AI assistants can ever be both smart and trustworthy at the same time?

Digital Rights vs. National Security: The Global Dilemma of DeepSeek-like AI Platforms

When Privacy Meets Security

The global bans on DeepSeek have sparked a heated debate: Should we prioritize digital rights or national security? In the digital age, it’s not easy to find a balance. On one side, users want to keep their privacy safe and have free access to information. On the other side, governments worry that cross-border data flows could be a national security risk.

Communication scholar Terry Flew (2021) calls this the “return of sovereignty” in digital platform governance. Basically, it means that countries are focusing more on protecting their own data when dealing with global platforms. In this context, DeepSeek, as an AI tool from China, has made some Western countries worry about data theft and public opinion manipulation. Because of this, countries like the US have restricted its use on government devices for security reasons.

Some people think banning DeepSeek is the right move because it protects citizens’ digital rights, making sure personal data doesn’t fall into foreign hands. But others think it might limit the free flow of information and may even reflect political bias. Notably, the ban only targets government devices, not personal use, so it does little to protect ordinary users’ data. So, is this decision really fair?

Why Do Digital Rights Clash with National Security?

According to Kari Karppinen (2017, p.95, p.97), digital rights aren’t just one thing. They include privacy, freedom of speech, security, and more. The problem is, these rights sometimes conflict with each other. For example, in Europe and the US, privacy comes first, so people demand data transparency. But in China, security and social stability are top priorities, so the government prefers controllable technology and data localization.

It’s not easy to say which approach is better. Both sides have valid reasons. In Western countries, the focus is on individual rights. In China, it’s about collective safety.

The DeepSeek case draws attention to the struggle between safeguarding national security and respecting user privacy under global digital regulations. As nations enforce strict data protection rules, AI platforms such as DeepSeek find themselves caught between competing policies and social demands. The blocking of DeepSeek is not only the result of data privacy and security issues; it is also driven by geopolitical factors.

Privacy and Security in Different Countries

European and American Perspective: Privacy Comes First

In Europe and the United States, people commonly view privacy as a fundamental right. In Europe in particular, the GDPR enforces strict rules on how data is collected and used. Italy, for example, banned DeepSeek because its privacy policy fell short of GDPR requirements, particularly on cross-border data transfers.

Although the United States has no comprehensive federal privacy law, the government responds quickly when national security is at stake. Because DeepSeek was accused of posing a surveillance risk, it was banned from use in sensitive government settings.

Generally, people in Europe and the US support these measures, believing that regulating high-risk digital platforms can push companies to be more secure. At the same time, this support reflects how much trust in digital products has declined lately.

Chinese Perspective: Tech Confidence and Content Control

In China, DeepSeek was seen as a symbol of homegrown AI success, and it got positive media coverage. When privacy issues came up, Chinese regulators focused more on content safety and social stability than on individual privacy. For example, DeepSeek has built-in filters to block sensitive content. This reflects how the government sees technology as a tool for maintaining social order.

Global Trend: From Tech Hype to Cautious Regulation

Why Are Countries Starting to Regulate Tech More Strictly?

The DeepSeek controversy isn’t just about one app – it actually reflects a bigger trend worldwide. In the past, a lot of countries were really optimistic about new tech. They thought innovation would bring social progress, so they didn’t worry too much about privacy protection. Basically, they just hoped that technology would make life better.

But things have changed. Consider the Cambridge Analytica scandal: in 2018 it came to light that Cambridge Analytica, a British political consulting firm, had gathered personal information from millions of Facebook users without their consent. Or look at the Clearview AI dispute: in 2021, the Office of the Australian Information Commissioner (OAIC) found that Clearview AI had breached Australians’ privacy by collecting their biometric data without consent.

People’s perceptions started to change after these events. Users were no longer simply impressed by the novelty of the technology; what mattered now was whether they could rely on these platforms to safeguard their information. The rise of privacy concerns has prompted people to ask whether technological progress comes at the expense of personal security. Participants interviewed about their Facebook use after the Cambridge Analytica scandal described this shift:

the more shitty stuff Facebook does, and the more insecure I feel with my data, then the less I’m gonna post, the more I’m gonna restrict what I share, and the more cautious I’m gonna be with my use of Facebook. (Maggie, 29 years)

I don’t trust Facebook as a company, data-wise. And so the less I can use it the better. Because obviously, I mean, you don’t get subpoenaed to Congress over and over again if you don’t have privacy issues. (Chris, 29 years) (Brown, 2020)

Countries Are Taking Action

In response to concerns raised by individuals and organizations worldwide, countries such as Australia and Canada are introducing digital privacy regulations to safeguard user data, for example by requiring consent before data is collected and holding companies accountable for their data management practices.

It is not only individual nations paying attention: international bodies such as the United Nations and the OECD are also developing frameworks for ethical AI and cross-border data management. Their aim is to establish worldwide norms for privacy and security, so that tech firms have clear expectations regardless of where they operate.

What Could Happen Next?

In the future, the AI sector will probably need to adapt to stricter rules and guidelines. Practices such as conducting privacy impact assessments, ensuring algorithmic transparency, and minimizing data retention could become standard.

To gain users’ confidence, businesses must go beyond creating technology and demonstrate a genuine commitment to privacy and security, with effective safeguards in place.

How Can We Safeguard Digital Rights?

Protecting digital rights in the digital era is widely recognized as essential. But I’d prefer to look at this subject from a personal angle, as just a regular user.

- 1. An essential step is to handle app permissions with caution and avoid granting access that isn’t required.

- 2. Go into your phone settings and turn off permissions for apps that you don’t use often.

- 3. Removing apps that run in the background can also help minimize data collection.

- 4. When using social media or AI platforms like Facebook, ChatGPT or DeepSeek, think about what you’re sharing before you post it, and be careful not to overshare personal details or sensitive information.

- 5. Manage who can see what you share, and take care when discussing sensitive subjects.

- 6. To reduce the risk of data exposure, limit the information you share in public settings or on messaging apps.

- 7. Read privacy policies carefully whenever you can.

- 8. Before using an app, check how it collects and shares your data.

- 9. Favor websites that openly share their privacy policies and promptly disclose any security incidents.

- 10. Build your digital literacy and adopt habits such as reducing how much personal information you share.

Personally, I believe that AI platforms like DeepSeek are going to keep evolving, and that is not necessarily a bad thing. At the same time, we will have to deal with challenges around privacy, security, and digital rights as technology moves forward, and I think facing these issues head-on will push industries to improve. In the end, I am looking forward to a future where technology is not just more advanced but also safer and more convenient.

References

Brown, A. J. (2020). “Should I Stay or Should I Leave?”: Exploring (Dis)continued Facebook Use After the Cambridge Analytica Scandal. Social Media + Society, 6(1). https://doi.org/10.1177/2056305120913884

CaptainofCaucasia. (2025, February). After trying DeepSeek last night, the first thing that came to mind was the same as what everyone else seems to have thought [Online forum post]. Reddit. https://www.reddit.com/r/privacy/comments/1ic3390/after_trying_deepseek_last_night_the_first_thing/

Cybersecurity Dive. (2025, February 5). DeepSeek surge hits companies, posing security risks. Cybersecurity Dive. https://www.cybersecuritydive.com/news/deepseek-companies-security-risks/739308/

Eastday.com. (2025, January 29). DeepSeek suffers massive overseas attacks, Qi An Xin: Facing unprecedented security challenges, attacks will continue. i.ifeng.com. https://i.ifeng.com/c/8gXu6FOHbQU

Flew, T. (2021). Regulating platforms. Cambridge: Polity. pp. 72-79.

Humphry, et al. (2023). Emerging online safety issues: Co-designing social media education with young people. University of Sydney.

Karppinen, K. (2017). Human rights and the digital. In H. Tumber & S. Waisbord (Eds.), Routledge Companion to Media and Human Rights (pp. 95-103). Abingdon, Oxon: Routledge.

Krebs, B. (2025, February 6). Experts flag security, privacy risks in DeepSeek AI app. Krebs on Security. https://krebsonsecurity.com/2025/02/experts-flag-security-privacy-risks-in-deepseek-ai-app/

Marwick, A., & boyd, d. (2019). Understanding privacy at the margins: Introduction. International Journal of Communication, 13, 1157-1165.

Nagli, G. (2025, January 29). Wiz Research uncovers exposed DeepSeek database leaking sensitive information, including chat history. Wiz. https://www.wiz.io/blog/wiz-research-uncovers-exposed-deepseek-database-leak

Nissenbaum, H. (2018). Respecting context to protect privacy: Why meaning matters. Science and Engineering Ethics, 24(3), 831-852.

Suzor, N. P. (2019). Who makes the rules? In Lawless: The secret rules that govern our lives (pp. 10-24). Cambridge, UK: Cambridge University Press.

Taylor, J., & Basford Canales, S. (2024, December 17). Potential payouts for up to 300,000 Australian Facebook users in Cambridge Analytica settlement. The Guardian. https://www.theguardian.com/australia-news/2024/dec/17/facebook-cambridge-analytica-scandal-settlement-australia

Xu, N. (2025, February 10). Has DeepSeek fallen from grace? Multiple institutions question its security, and several countries have banned it. Voice of America Chinese. https://www.voachinese.com/a/deepseek-security-vulnerabilities-20250209/7968083.html
