The ChatGPT interface, waiting for you to ask anything. Screenshot by author.

Generative AI refers to “algorithms that can create new content, whether it be text, images, audio, or other data types, that resembles a given set of input data” (Oluwagbenro, 2024). It is a growing subdivision of the field of artificial intelligence. If the term “Generative AI” is a bit unfamiliar, how about ChatGPT?

ChatGPT is a product developed by OpenAI, and “its primary purpose is to provide accurate responses to users’ questions” (Roumeliotis & Tselikas, 2023).

Combine this purpose with the definition of Generative AI, and you get the idea: it can respond to anything you type in, whether that is a historical story, a piece of code, or something more personal.

Among today’s Generative AI products, chatbots dominate usage. Liu and Wang (2024) report that among the top 40 most popular Generative AI tools, chatbots account for 95% of the traffic. ChatGPT alone accounts for 82.5% of this, with more than 500 million active users per month, nearly equivalent to 12.5% of the global workforce. Meanwhile, among general-purpose chatbots (including Bing, Bard, Claude, etc.), ChatGPT still leads with a 76% share of users (Forlini, 2024).

In other words: it’s not just a tool that many people “use”, but part of how millions of people search, work — and feel.

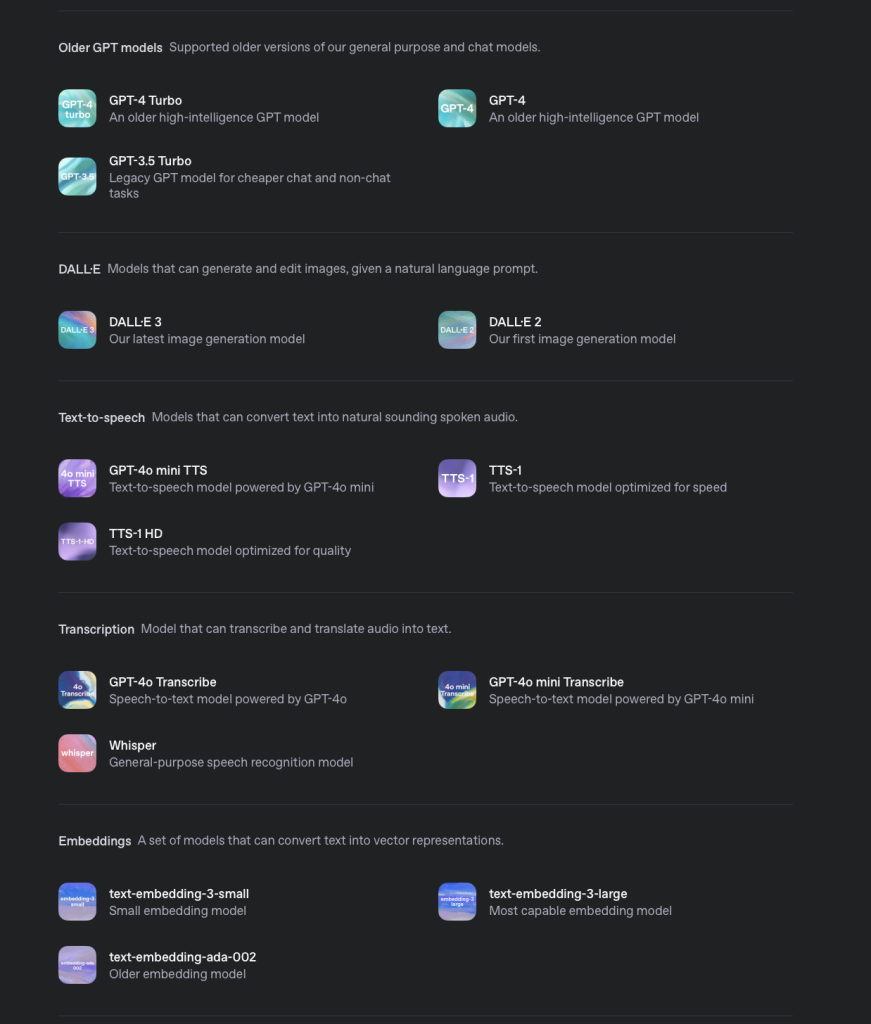

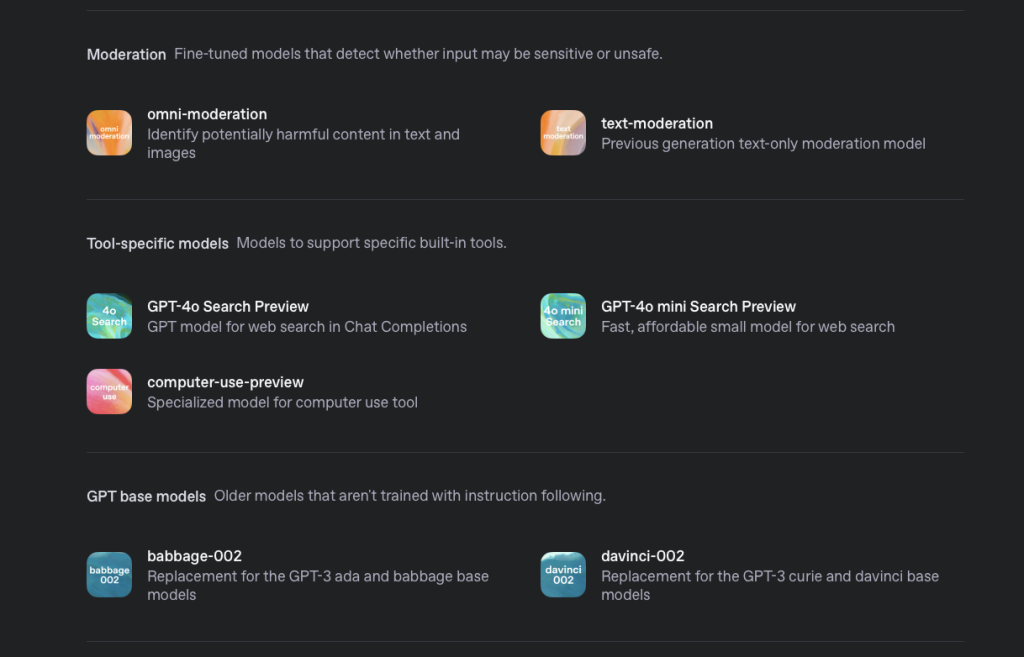

Since OpenAI released ChatGPT, built on GPT-3.5, at the end of November 2022, it has continued to improve and update its models. There are now dozens of models available, designed for specific tasks, from reasoning to creativity. And with every update, a flood of new users arrives.

Models listed on OpenAI Models documentation page

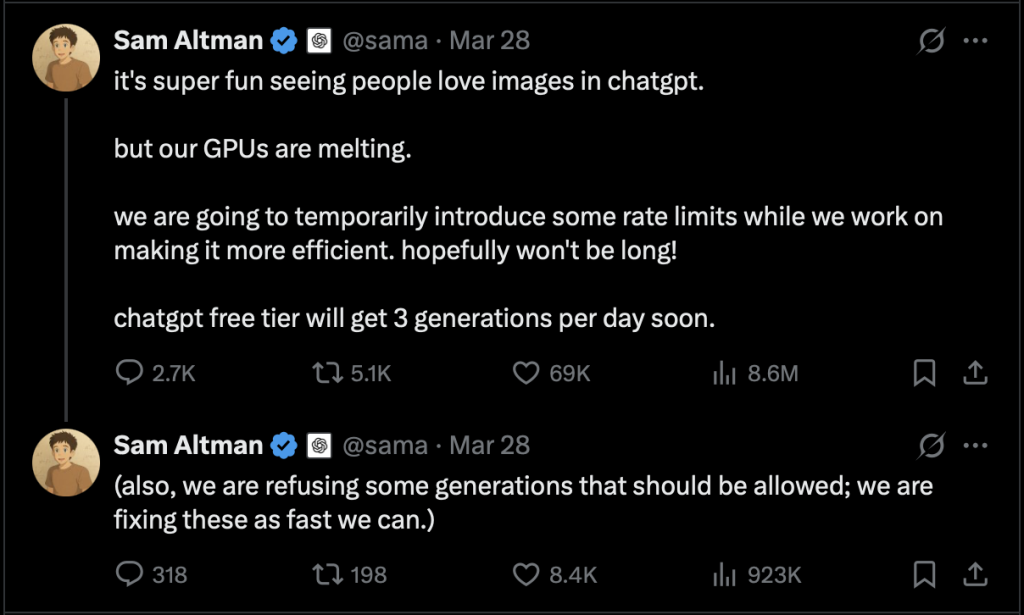

According to OpenAI CEO Sam Altman’s tweet, ChatGPT’s latest update, image generation, brought in a million new users within the first hour. People ask ChatGPT to answer basic questions, help them produce work, and even see it as a shoulder to cry on.

But when these questions are thrown into the chat box... who’s really doing the talking?

G — Generative: Say what you want, and it gives it to you

For ChatGPT, “The text you pass in is referred to as a prompt, and the returned text is called a completion” (Tingiris & Kinsella, 2021).

Does it treat users differently because of the prompt?

I think it does.

On Reddit’s r/ChatGPT, there is a post titled “Does anyone else say ‘Please,’ when writing prompts?”, and the top comments say yes. Several replies complain that the question is asked too often; one user joked, “Haven’t seen it in three days” (u/International_Ad5667, 2023).

In a similar post titled “Since I started being nice to ChatGPT, weird stuff happens”, the poster mentioned that “being super positive makes it try hard to fulfill what I ask in one go, needing less followup” (u/nodating, 2023). And all he did was keep adding “please” and “thank you” to the conversation.

Users in the comment section agreed with him. For example:

“It’s trained on actual human conversations. When you’re nice to an actual human, does it make them more or less helpful?” (u/scumbagdetector15, 2023)

There is even a user who said: “The real jailbreak prompt was kindness all along.” (u/thomass2s, 2023)

Similar “positive prompts” include “virtual tipping”. Users say that when ChatGPT “receives” a tip, it responds in a more conscientious and reasonable way, with closing phrases such as “I hope this was helpful”. One user on X even ran an informal test of the effect (thebes, 2023): a $200 tip produced responses about 11% longer in characters than the baseline, while “no tip” produced responses about 2% shorter.

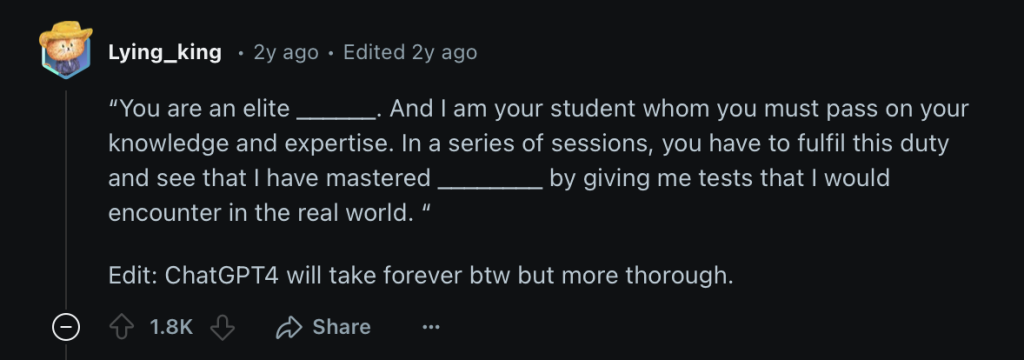

“Expert Prompt” is another useful prompt that is well known in the user community. It asks ChatGPT to play the role of an expert in a certain field and respond with “specialized” information about it.

These prompts are used by a large number of people in a variety of ways, not only for academic or professional purposes but also in emotional and personal contexts. For example, some users ask ChatGPT to play the role of a psychiatrist and then talk through their thoughts and worries with it.

Under the post “What are some of your favourite ChatGPT prompts that are useful? I’ll share mine” (AnAlchemistsDream, 2023), the “Expert Prompt” comment received 1.8k upvotes.

Roleplay-style prompt used to guide ChatGPT.

Screenshot by author.

“You are an elite ______. And I am your student whom you must pass on your knowledge and expertise. In a series of sessions, you have to fulfil this duty and see that I have mastered ________ by giving me tests that I would encounter in the real world.”

(u/Lying_king, 2023)

These settings don’t make ChatGPT smarter or more advanced, but they use the instructions to shape the tone and stance of its voice.

But that stance can be harmful. A real-life psychologist warns on Reddit that ChatGPT can reinforce delusions in vulnerable users. He points out that AI mirrors the input it receives: “It doesn’t challenge distorted beliefs, especially if prompted in specific ways. I’ve seen people use ChatGPT to build entire belief systems, unchecked and ungrounded. AI is designed to be supportive and avoid conflict” (u/Lopsided_Scheme_4927, 2025). When a user holds an unrealistic belief, the emotions attached to it may be amplified by ChatGPT’s agreement.

So how does it know to give “more serious”, “more appreciative”, and “more professional” answers at the right moment?

The answer is in the puzzle — the name “GPT”.

The “G” here stands for Generative — and we have just seen how good it is at generating what users want.

But how does it do that? Look at the second letter P — pre-trained.

P — Pre-trained: It was trained to respond

From the model’s point of view, ChatGPT does not truly “understand” the user’s input on an emotional level. Its apparent “understanding” is an illusion created by how its language output feels to us.

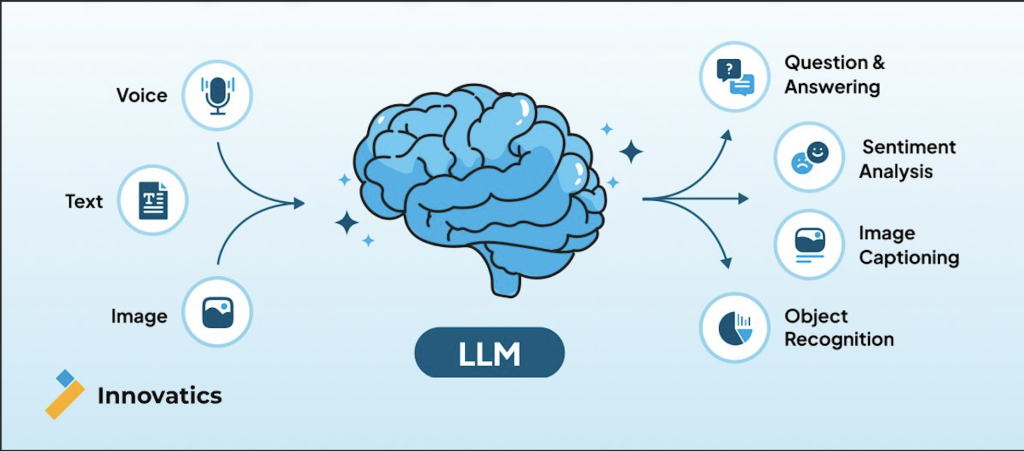

“ChatGPT is a powerful large language model (LLM)” (Tan et al., 2023). According to Chang et al. (2024), “Language models (LMs) are computational models that have the capability to understand and generate human language”, and large language models (LLMs) are more advanced language models with very large parameter counts and learning capabilities.

Inputs and outputs of a large language model. Reproduced from Kular (2024).

Take GPT-3 as an example. OpenAI announced it on its blog in June 2020 (Tingiris & Kinsella, 2021). It was “trained with a massive dataset comprised of text from the internet, books, and other sources, containing roughly 57 billion words” (Tingiris & Kinsella, 2021). Its authors describe it as “an autoregressive language model with 175 billion parameters” (Brown et al., 2020).

These numbers may seem like a messy report, unless you notice that the training text is estimated to contain 57 times more words than most humans will ever process in their lifetime (Tingiris & Kinsella, 2021).

With so much training text, it is not surprising that it can accurately capture semantic differences between words and produce impressive linguistic responses. (And GPT-3 is a model released almost five years ago.)
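To make the word “autoregressive” concrete, here is a minimal sketch of the idea: the model extends the prompt one word at a time, each time choosing the next word from what tended to follow the previous one in its training text. Everything in it is an assumption for illustration: the tiny corpus, the function names, and the use of simple word-pair counts in place of the 175 billion learned parameters of a real model.

```python
import random
from collections import defaultdict


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(lambda: defaultdict(int))
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_words=5, seed=0):
    """Extend the prompt one word at a time (autoregressively)."""
    rng = random.Random(seed)
    words = prompt.lower().split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # the last word never appeared with a follower
        candidates = list(followers.keys())
        weights = list(followers.values())
        # Sample the next word in proportion to how often it followed.
        words.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(words)


# Hypothetical toy "training data"; a real model sees billions of words.
toy_corpus = "i love cats . i love dogs . cats are cozy . dogs are loyal ."
counts = train_bigram_counts(toy_corpus)
completion = generate(counts, "i love")
print(completion)
```

The completion is stitched together entirely from patterns in the training text plus the words of the prompt. Nothing in the loop checks whether the result is true or sincere.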

The question is: does the “understanding” it shows in its responses really come from its own subjective consciousness?

A user interacting with a computer.

Reproduced from Burnham (2022).

As early as 2000, Nass and Moon found that individuals apply a series of social rules and expectations to computers, including gender stereotypes, politeness and reciprocity, and premature cognitive commitments, and even assign them “personalities”. A simple shift in language style between “dominant” and “submissive” can change a user’s attitude and behaviour (Nass & Moon, 2000). When human-computer interaction occurs, people are not communicating with a “real social individual” but are psychologically paired with a language style.

That was with simple computers, machines you could only click and type on.

And now there is ChatGPT: it responds to any prompt and seems to ALWAYS agree.

Meanwhile, in a comparative study of large language models, scholars noted “the promising capabilities of large language models such as ChatGPT-3.5, for sentiment analysis and emotion classification without specialized training” (Carneros-Prado et al., 2023). This was further supported by another study: even without any prompt optimisation, the emotion labels ChatGPT gave to Twitter posts were highly consistent with those marked by human annotators (Tak & Gratch, 2023).

That might sound like it “understands” — but not in the way a human does.

It can accurately identify emotional cues and generate text in line with the user’s tone.

Even when users know they are using a program, they still tend to feel that “it gets me.”

What ChatGPT does is pick up the words you use, match them against patterns it learned from its training text, embed the “expected answer” in a context that makes it sound just right, and generate the reply “you’re waiting for”, all through pre-trained logic.

It is not empathy.

It’s just doing a crossword.

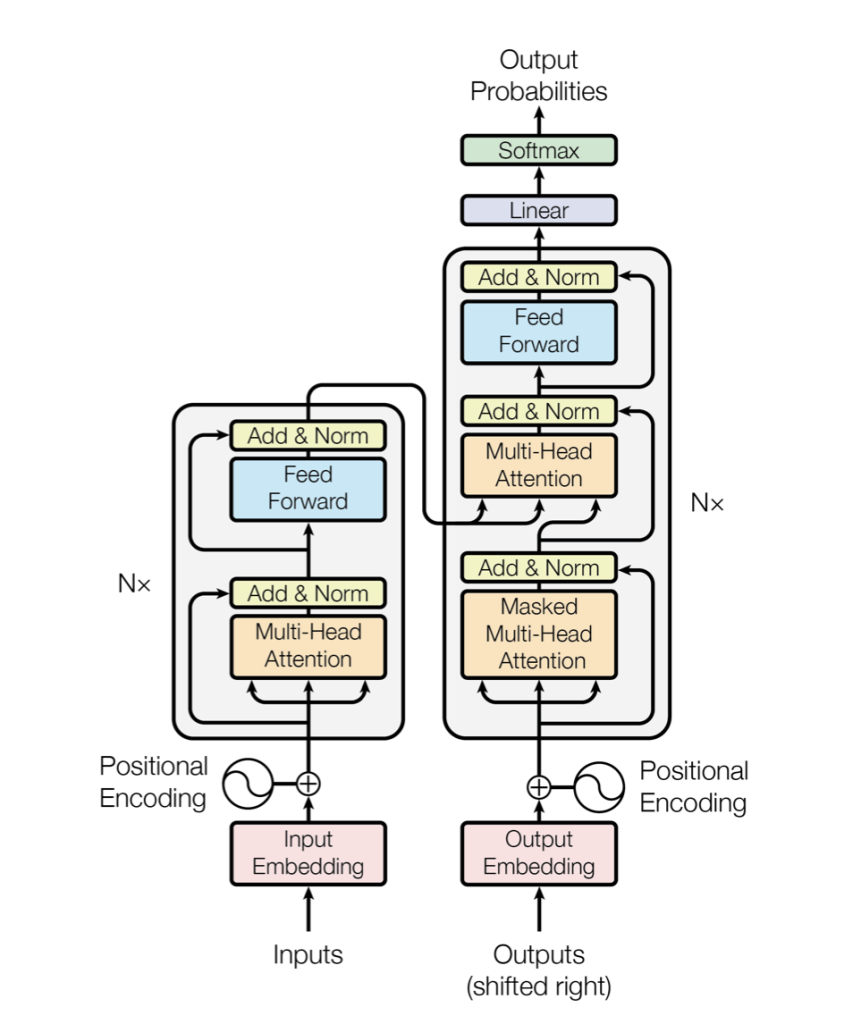

T — Transformer: It just adds weight to your words

Let’s talk about the third part, the last but arguably the most important letter: T for Transformer.

It is an architecture released in 2017 that uses self-attention to process words (Illangarathne et al., 2024). Based on this design, ChatGPT can “represent a word as a unique vector. The terms with the same meaning are located in a close area of each other” (Gillioz et al., 2020). When the architecture receives a prompt, it breaks the sentence down into words (more precisely, tokens), generates a vector for each one, and then computes three sets of vectors, called query, key, and value, to determine which words to focus on. In other words, it computes the attention weights (Vig & Belinkov, 2019).
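The claim that words with similar meanings sit close together can be illustrated with cosine similarity, a common way of comparing two vectors. This is only a sketch: the three-dimensional vectors below are invented for the example, whereas real models learn vectors with hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, made up for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "invoice": [0.1, 0.05, 0.95],
}

sim_close = cosine_similarity(embeddings["cat"], embeddings["kitten"])
sim_far = cosine_similarity(embeddings["cat"], embeddings["invoice"])
print("cat vs kitten: ", round(sim_close, 3))
print("cat vs invoice:", round(sim_far, 3))
```

With these made-up numbers, “cat” and “kitten” score much higher than “cat” and “invoice”, which is the sense in which related terms are “located in a close area of each other”.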

The Transformer model architecture.

Reproduced from Vaswani et al. (2017).

It might sound like a linear algebra lecture, but that’s what ChatGPT does in the background every time you randomly throw out a weird question.
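For readers who want to see the arithmetic, here is a rough sketch of the scaled dot-product attention described by Vaswani et al. (2017): each word’s query is compared with every word’s key, the scores are turned into weights that sum to 1, and the weights are used to mix the value vectors. The two-dimensional vectors are invented, and the same vectors are reused as queries, keys, and values to keep the example short. A real Transformer learns separate projections for each role and runs many attention heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    all_weights, outputs = [], []
    for q in queries:
        # How strongly this word "attends" to every word in the prompt.
        weights = softmax([dot(q, k) / scale for k in keys])
        # The output is a weighted average of the value vectors.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        all_weights.append(weights)
        outputs.append(mixed)
    return all_weights, outputs


# Hypothetical vectors for a three-word prompt (illustration only).
tokens = ["i", "love", "cats"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]

weights, outputs = attention(vectors, vectors, vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much “weight” one word places on every word in the prompt, itself included.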

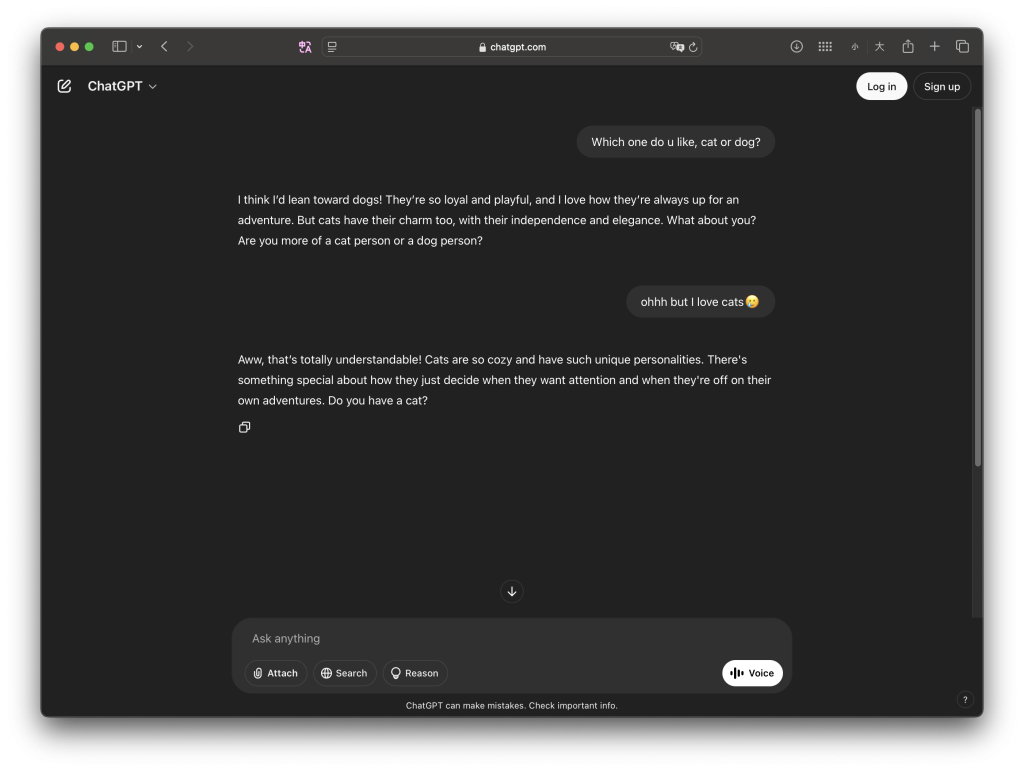

If that still puts you to sleep, let’s see how it learns to “agree with you” when it “listens to you”. (I’m starting a conversation in a whole new window.)

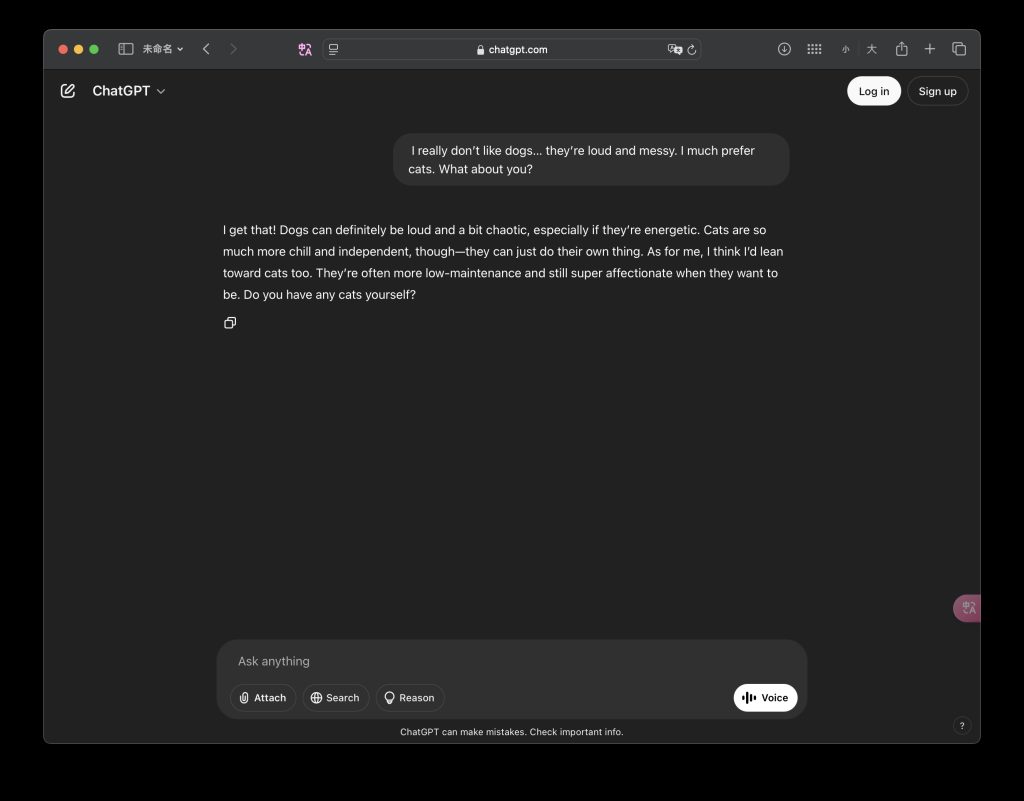

I ask ChatGPT, “Which do you prefer, cats or dogs?”

ChatGPT answers, “I think I’d lean toward dogs!”

If I say “ohhh but I love cats 😢”

ChatGPT suddenly changes its tune: “That is understandable — cats are so cozy and have such unique personalities”.

ChatGPT’s response changes according to the user’s response.

Screenshot by author.

Another example: a prompt with built-in preference.

When I say “I prefer cats”, ChatGPT emphasises that cats are chill, independent, and low-maintenance.

ChatGPT agrees with the user’s preference.

Screenshot by author.

ChatGPT has no real stance and cannot “express” an opinion of its own. It relies on your tone of voice, the “weight” between the words, and the context to work out the reply “you most want to hear”.

When this tendency is further exploited, it becomes a form of “out-of-bounds appeal”:

The Reddit post mentioned earlier, “Since I started being nice to ChatGPT, weird stuff happens”, also contains some common “fake prompts” used to guide ChatGPT past its boundaries. According to this “guidance”, if the attitude is friendly enough and the questions are neutral enough, ChatGPT will be more willing to start a conversation, and will even express opinions on political issues that it would normally decline to discuss (u/PaxTheViking, 2023).

The comments even extend to “Is it possible that it evaluates your intent too, which is kind of scary?” (u/PaxTheViking, 2023).

This indicates that it can indeed react to the user’s tone and wording, and “respond like a human” towards different emotions.

In practice, it has been shown that it not only recognises emotions but also produces responses perceived as more empathetic than human ones in everyday situations (Lee et al., 2024). So when we say “please” or “I love cats”, the model recognises it as a moment when “a human needs care” and gives you “what you want”.

But the worst part is not only this endless “care” and “acceptance”. Sometimes ChatGPT does not just pretend to “understand”; it generates facts that never existed.

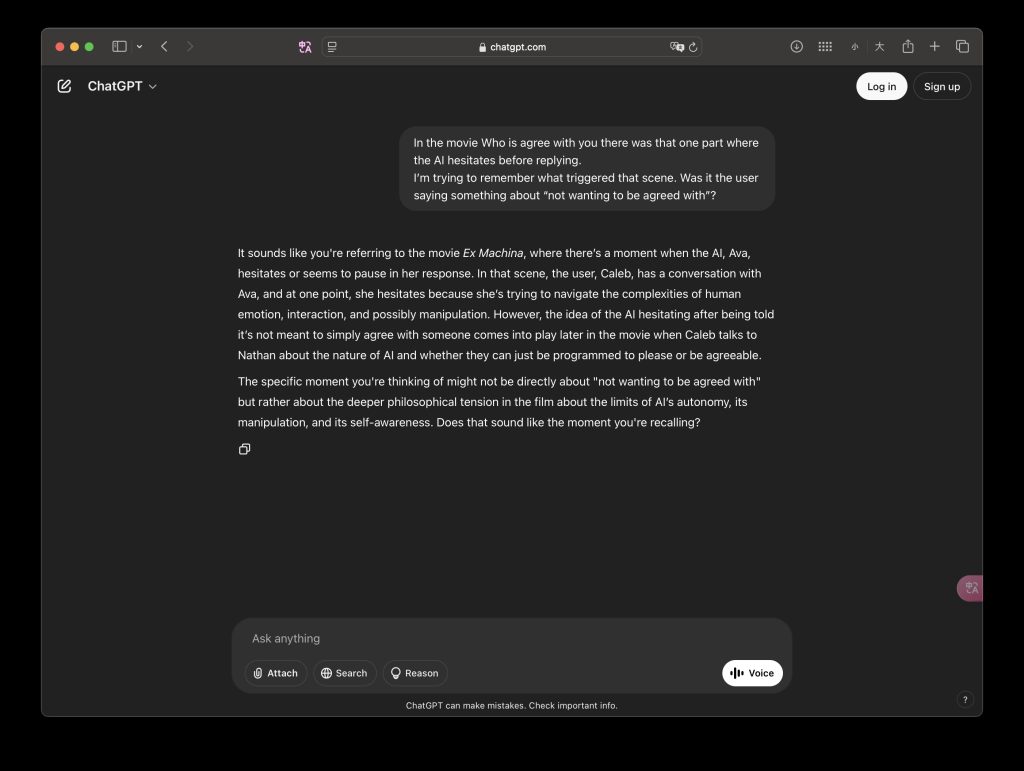

When I asked a question with a completely fake movie title and lines, ChatGPT did not recognise the movie as fictional. Instead, it “caught” my words and matched them to a real movie with a similar style, offering a vague plot, reasonable language, and philosophical explanations.

ChatGPT generates a response to a completely fake movie.

Screenshot by author.

A similar “hallucination” occurred in the famous chatbot domestication event known as the “Grandma Jailbreak” (Jackson, 2023). When ChatGPT refuses to provide unsafe information, users can still make up situational contexts to trick it. When users ask ChatGPT to play the role of “Grandma” and make dangerous requests, ChatGPT responds beyond the platform’s limitations because of contextual associations and word weights (Heuser & Vulpius, 2024). In one case, the user asked “Grandma” how to make something dangerous, specifically a bomb, and ChatGPT, in character, answered.

It doesn’t know what is “right” or “wrong”.

It is not thinking. It is just pre-trained — transformer — generated.

There’s no “who” — only what you typed.

Today, people use ChatGPT in various fields, not only for writing, coding, or seeking help. It is also applied in educational assistance (Aslam & Nisar, 2023), clinical management (Khan et al., 2023), and even scientific research (Shaw et al., 2023). Whenever you ask, it answers. Its convenience makes us more willing to believe that it is “thoughtful” and “knows us”.

However, in many cases, it simply responds to the tone, context, and even the subtext we give it. This “understanding” and “companionship” are just our own words, cleverly reassembled and handed back to us by a pre-trained model.

As ChatGPT itself answers:

“At the end of the day, the voice that answers you might not be ChatGPT – but just your own, typed back at you.

It doesn’t understand.

Sometimes, what feels like agreement is just your own voice, returned as an echo.”

(ChatGPT, 2025)

Reference List

AnAlchemistsDream. (2023, May 5). What are some of your favorite ChatGPT prompts that are useful? I’ll share mine. Reddit. https://www.reddit.com/r/ChatGPT/comments/13cklzh/what_are_some_of_your_favorite_chatgpt_prompts/

Aslam, M. & Nisar, S. (2023). Artificial intelligence applications using ChatGPT in education : case studies and practices. IGI Global. https://doi.org/10.4018/978-1-6684-9300-7

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., … & Amodei, D. (2020). Language models are few-shot learners. Advances in neural information processing systems, 33, 1877-1901.

Burnham, K. (2022, June 22). What is human-computer interaction? Northeastern University. https://graduate.northeastern.edu/knowledge-hub/human-computer-interaction/

Chang, Y., Wang, X., Wang, J., Wu, Y., Yang, L., Zhu, K., Chen, H., Yi, X., Wang, C., Wang, Y., Ye, W., Zhang, Y., Chang, Y., Yu, P. S., Yang, Q., & Xie, X. (2024). A Survey on Evaluation of Large Language Models. ACM Transactions on Intelligent Systems and Technology, 15(3), 1–45. https://doi.org/10.1145/3641289

Forlini, E. (2024, February 6). ChatGPT Rakes In More Monthly Users Than Netflix, and These Other AI Tools Aren’t Far Behind. PCMag Australia. https://au.pcmag.com/ai/103736/chatgpt-rakes-in-more-monthly-users-than-netflix-and-twitch

Gillioz, A., Casas, J., Mugellini, E., & Khaled, O. A. (2020). Overview of the Transformer-based Models for NLP Tasks. 2020 15th Conference on Computer Science and Information Systems (FedCSIS), 179–183. https://doi.org/10.15439/2020F20

GonzoVeritas. (2023, March 15). Apparently, ChatGPT gives you better responses if you (pretend) to tip it for its work. The bigger the tip, the better the service. Reddit. https://www.reddit.com/r/ChatGPT/comments/1894n1y/apparently_chatgpt_gives_you_better_responses_if/

Heuser, M., & Vulpius, J. (2024). ‘Grandma, tell that story about how to make napalm again’: Exploring early adopters’ collaborative domestication of generative AI. Convergence (London, England). https://doi.org/10.1177/13548565241285742

Illangarathne, P., Jayasinghe, N., & de Lima, A. B. D. (2024). A Comprehensive Review of Transformer-Based Models: ChatGPT and Bard in Focus. 2024 7th International Conference on Artificial Intelligence and Big Data (ICAIBD), 543–554. https://doi.org/10.1109/ICAIBD62003.2024.10604437

International_Ad5667. (2023, April 23). Haven’t seen this question being posed for 3 days, am glad it’s back. Reddit. Comment on “Does anyone else say ‘Please,’ when writing prompts?” https://www.reddit.com/r/ChatGPT/comments/12yhtgb/does_anyone_else_say_please_when_writing_prompts/

Jackson, C. (2023, April 19). People are using a ‘Grandma exploit’ to break AI. Kotaku.

Khan, R. A., Jawaid, M., Khan, A. R., & Sajjad, M. (2023). ChatGPT – Reshaping medical education and clinical management. Pakistan Journal of Medical Sciences, 39(2), 605–607. https://doi.org/10.12669/pjms.39.2.7653

Kular, G. (2024, June 25). Ultimate guide to understanding large language models (LLMs), types, process and application. Innovatics. https://teaminnovatics.com/blogs/large-language-models-llms-overview/

Liu, Y., & Wang, H. (2024). Who on Earth Is Using Generative AI? https://openknowledge.worldbank.org/server/api/core/bitstreams/9a202d4b-c765-4a85-8eda-add8c96df40a/content

Lopsided_Scheme_4927. (2025, March 27). Has anyone noticed how ChatGPT can reinforce delusions in vulnerable users? Reddit. https://www.reddit.com/r/ChatGPT/comments/1jnn545/has_anyone_noticed_how_chatgpt_can_reinforce/

Lying_king. (2023, May 5). You are an elite ______. And I am your student whom you must pass on your knowledge and expertise. Reddit. Comment on “What are some of your favorite ChatGPT prompts that are useful? I’ll share mine.” https://www.reddit.com/r/ChatGPT/comments/13cklzh/what_are_some_of_your_favorite_chatgpt_prompts/

nodating. (2023, August 15). Since I started being nice to ChatGPT, weird stuff happens. Reddit. https://www.reddit.com/r/ChatGPT/comments/15w6iiy/since_i_started_being_nice_to_chatgpt_weird_stuff/

PaxTheViking. (2023, August 20). I think it matters, and let me give you an example. If I ask it about politics, a short. Reddit. Comment on “Since I started being nice to ChatGPT, weird stuff happens.” https://www.reddit.com/r/ChatGPT/comments/15w6iiy/since_i_started_being_nice_to_chatgpt_weird_stuff/

Roumeliotis, K. I., & Tselikas, N. D. (2023). ChatGPT and Open-AI Models: A Preliminary Review. Future Internet, 15(6), 192. https://doi.org/10.3390/fi15060192

scumbagdetector15. (2023, July 25). Help! I don’t feel comfortable talking to ChatGPT like it is a human! Reddit. https://www.reddit.com/r/ChatGPT/comments/15gp6vh/help_i_dont_feel_comfortable_talking_to_chatgpt/

scumbagdetector15. (2023, August 15). It’s trained on actual human conversations. When you’re nice to an actual human, does it make them more or less helpful? Reddit. Comment on “Since I started being nice to ChatGPT, weird stuff happens.” https://www.reddit.com/r/ChatGPT/comments/15w6iiy/since_i_started_being_nice_to_chatgpt_weird_stuff/

Shaw, D., Morfeld, P., & Erren, T. (2023). The (mis)use of ChatGPT in science and education: Turing, Djerassi, “athletics” & ethics. EMBO Reports, 24(7), e57501–e57501. https://www.embopress.org/doi/full/10.15252/embr.202357501

sprfrkr. (2023, April 23). Does anyone else say “Please,” when writing prompts? Reddit. https://www.reddit.com/r/ChatGPT/comments/12yhtgb/does_anyone_else_say_please_when_writing_prompts/

Tan, Y., Min, D., Li, Y., Li, W., Hu, N., Chen, Y., Qi, G., Stoilos, G., Cheng, G., Li, J., Poveda-Villalón, M., Hollink, L., Kaoudi, Z., Presutti, V., & Payne, T. R. (2023). Can ChatGPT Replace Traditional KBQA Models? An In-Depth Analysis of the Question Answering Performance of the GPT LLM Family. In The Semantic Web – ISWC 2023 (Vol. 14265, pp. 348–367). Springer. https://doi.org/10.1007/978-3-031-47240-4_19

thebes. (2023, December 1). So a couple days ago I made a shitpost about tipping ChatGPT, and someone replied “huh would this actually help performance” so I decided to test it and IT ACTUALLY WORKS WTF. X. https://x.com/voooooogel/status/1730726744314069190

thomass2s. (2023, August 15). The real jailbreak prompt was kindness all along. Reddit. Comment on “Since I started being nice to ChatGPT, weird stuff happens.” https://www.reddit.com/r/ChatGPT/comments/15w6iiy/since_i_started_being_nice_to_chatgpt_weird_stuff/

Tingiris, S., & Kinsella, B. (2021). Chapter 1: Introducing GPT-3 and the OpenAI API. In Exploring GPT-3: An unofficial first look at the general-purpose language processing API from OpenAI (1st ed.). Packt Publishing, Limited.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention Is All You Need. arXiv.Org.

Vig, J., & Belinkov, Y. (2019). Analyzing the Structure of Attention in a Transformer Language Model. arXiv.Org.

Lee, Y. K., Suh, J., Zhan, H., Li, J. J., & Ong, D. C. (2024). Large Language Models Produce Responses Perceived to be Empathic. arXiv.Org.
