Let’s talk about something closely related to our daily lives. In today’s digital era, social media platforms have become an important and even necessary part of people’s lives. Social media has changed the way we communicate with each other, and it has also influenced social culture and political life. However, as social media keeps growing, issues such as platform rule-making and hate speech have emerged. The fast spread of false information on social media is another major worry that leaves people confused. These problems have become controversial issues that attract a lot of attention.

What is online harm? “The government’s draft Online Safety Bill defines online harms as user generated content or behaviour that is illegal or could cause significant physical or psychological harm to a person.” (UK Government, 2021). There are many types of online harm, for example child sexual exploitation and abuse, terrorist use of the internet, hate crime and hate speech, harassment, cyberbullying, and online abuse. In addition, when false news and misinformation spread, they can distort what people think and how they make decisions.

Discovering Online Harms

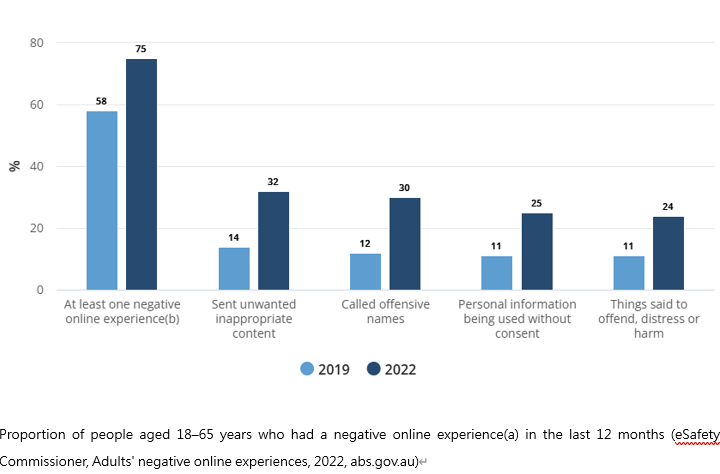

“2022 eSafety report states that 62 percent of teens had been exposed to harmful content online. But the good news is that half of those surveyed had also reported it.” (UNICEF Australia, 2025). Cyberbullying has become common with the growth of platforms such as TikTok and of online video games. Teenagers are easily exposed to verbal attacks, malicious slander, and threats to their personal safety. Many primary and junior high school students on Douyin (the Chinese version of TikTok) share their daily lives, hoping to receive praise and encouragement. However, some netizens mock them with comments such as “Nobody is curious about your daily life” or “Have you finished your homework yet?” These vicious comments can plunge teenagers, whose minds are not yet fully mature, into extreme self-doubt. They become afraid to share their daily lives again, grow quiet, and stop wanting to talk to their classmates.

“32% of teens say they have been called an offensive name online or on their cellphone. Smaller shares say they have had false rumours spread about them online (22%) or have been sent explicit images they didn’t ask for (17%).” (Vogels, 2022). This situation is truly concerning, and schools and parents need to act. Schools can run anti-cyberbullying classes that teach teens how to deal with these problems, and parents should monitor their children’s online activities. Social media platforms also need to offer a teen mode that filters out harmful content and to ban bullies quickly. Only with combined efforts can we safeguard teens’ mental health and online experiences.

Social media affects mental health in more ways than just cyberbullying. When users see seemingly perfect lives and carefully curated images, they may start to feel inadequate and develop low self-esteem. Teenagers and young adults tend to compare their own lives with the ones that look so perfect on social media. This can lead to problems such as anxiety, depression, and body-image concerns.

The Intricacies of Platform Rule-making

First, what is rule-making? Social media platforms govern their users through terms of service and community guidelines. These rules are meant to protect users’ rights and comply with the law, but another important purpose is to deal with hate speech and online harm. As Hollinsworth (2006) pointed out, “The issue of white Australian race relations is a complex one partly rooted in a long history of domination and dispossession of Indigenous people, a history that has defined and continues to propel the construction of Australian national identity.” This shows how complex the racial context can be, which makes fair rule-making by platforms all the more important. Social media is a globalized, interconnected space where people from all walks of life share their views and experiences, so the chance of misunderstandings and conflicts about race is high. Fair rules are therefore essential: they allow everyone to express themselves freely without worrying about being targeted by hate speech or discriminatory behaviour.

Facebook’s CEO described the company’s terms as “governing documents” that should represent users’ ideas. In 2009, he said users could help make the rules in response to privacy concerns. However, Facebook set a very high voting bar: a vote would only be binding if 30% of active users took part, so it was hard for users to actually change anything (Suzor, 2019). This suggests that Facebook’s priority was to protect its commercial interests rather than respond to public demands. This becomes even more evident when we look at how platforms handle user data. Often, user information is shared with third parties for advertising purposes without explicit consent, further eroding trust. Legally, these terms are contracts, and the relationship between platforms and users is like that between companies and customers. In the US, constitutional protections mostly apply to government actions, not to private platforms like Facebook, so our rights on these platforms depend mostly on the contract terms. Users often find themselves at a disadvantage, and when disputes arise, they have few legal options for resolving them.

Now, let’s move on to content moderation. We all shop online. Have you ever been deceived by a seller’s misleading product description? Amazon, as a leading e-commerce giant, uses both automated algorithms and human moderators (Amazon, n.d.). The automated systems first look for certain keywords related to false or exaggerated advertising. But if a seller uses a subtle marketing trick in the product description, the automated system may miss it, so human moderators step in. However, it takes human moderators a long time to verify that all content is accurate, since millions of products are listed every day, and Amazon also has to keep shopping fast and convenient for its customers.

Because checking content in advance is costly and slow, most platforms review posts only after they are published. “For any major site, the costs of monitoring content as it is uploaded are far too high. Even if it were possible to screen content in advance, many sites rely on the immediacy of conversation.” (Suzor, 2019). When a post is flagged, it goes to a review team of employees, agents, or moderators. Moderators have a tough job: they handle large volumes of content, work hard for little pay, and often have to make decisions quickly. Many platforms tend to conceal their moderation processes, so much of what we know about them comes from leaked documents or media reports.
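To make the two-stage process described for Amazon more concrete, here is a minimal Python sketch of automated keyword screening followed by a queue for human review. It is only an illustration under our own assumptions: the keyword list, the `Listing` type, and the `screen_listing` and `moderate` functions are hypothetical and do not describe how Amazon’s actual systems work.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical phrases associated with false or exaggerated advertising.
# This fixed list is an assumption for illustration only.
SUSPICIOUS_KEYWORDS = {"miracle", "guaranteed cure", "100% effective", "instant results"}


@dataclass
class Listing:
    listing_id: int
    description: str


def screen_listing(listing: Listing) -> str:
    """Stage 1: automated check. Flags a listing if any keyword appears."""
    text = listing.description.lower()
    if any(keyword in text for keyword in SUSPICIOUS_KEYWORDS):
        return "flagged"
    return "published"


def moderate(listings: List[Listing]) -> Tuple[List[Listing], List[Listing]]:
    """Publish unflagged listings; queue flagged ones for a human moderator (stage 2)."""
    published: List[Listing] = []
    review_queue: List[Listing] = []
    for listing in listings:
        if screen_listing(listing) == "flagged":
            review_queue.append(listing)
        else:
            published.append(listing)
    return published, review_queue


listings = [
    Listing(1, "Miracle cream with instant results!"),
    Listing(2, "Cotton T-shirt, machine washable."),
    Listing(3, "Clinically unproven, but customers say it works wonders."),
]
published, review_queue = moderate(listings)
print([item.listing_id for item in review_queue])  # [1]
print([item.listing_id for item in published])     # [2, 3]
```

Listing 3 is published even though its claim is misleading, because it contains none of the listed keywords. That is the kind of subtle marketing trick the automated stage can miss, which is why human moderators are needed as a second layer.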

False Information Epidemic: Infecting the Social Media Sphere

The spread of misinformation on social media is a big and growing problem. False news, conspiracy theories, and bad health information can spread quickly and reach millions of people in a short time. Take the COVID-19 pandemic as an example. There was a lot of false information about the virus, where it came from, and whether vaccines worked. Some posts claimed that wearing masks didn’t help or that unproven treatments could cure the virus. This misinformation confused the public and led some people to do dangerous things, such as refusing vaccination or ignoring safety rules. Social media platforms therefore need to invest more in better algorithms to detect and label false information. They should also work with fact-checking organizations to quickly debunk false claims and stop them from spreading.

We also need to pay attention to bias. Take Facebook and Instagram as examples: their terms of service rules on women’s nipples are criticized as unfair. “Both platforms ban women’s nipples in their terms of service, a policy that activists who cluster around a campaign called ‘free the nipple’ reject as inherently discriminatory.” (Suzor, 2019). This kind of rule restricts women’s self-expression in contexts such as breastfeeding or art, where they should be free to express themselves. Social media shouldn’t be a place full of discrimination and unfairness. Such biases not only undermine the integrity of the platforms but also have real-world consequences, fuelling unrest and mistrust.

YouTube takes a different approach here. As Matamoros-Fernández (2017) notes, “YouTube offers the possibility to give a ‘thumbs down’ to a video. Facebook and Twitter’s bias towards positivity makes it difficult to locate the negative spaces (John & Nissenbaum, 2016). By providing a ‘dislike’ button, Facebook and Twitter could allow users to counter racism online, providing at the same time the possibility to measure this practice.” This shows that platforms differ in their features, and some of these differences affect how users can respond to problems such as racism.

When it comes to hate speech on social media, inconsistent treatment is obvious and concerning. For example, a US congressman’s inappropriate post on Facebook was left up, while a poet who was simply exercising the right to express different ideas and share unusual views got in trouble for speaking up. Although platforms like Facebook are trying to improve by providing more information and making their rules fairer and clearer, this remains difficult: they need to balance protecting free speech against prohibiting harmful speech. I believe that making social media better and more useful in our lives requires everyone to work together.

Users often face difficulties on social media. When their content is removed or their accounts are blocked, they rarely receive any explanation. All they can do is guess why it happened: maybe someone reported them, or maybe the platform is simply being unfair. This lack of transparency leaves users confused. It would be much better if platforms sent detailed notifications explaining the reason, whether it is a violation of community guidelines or a false positive from automated checks. Providing an appeal process would also give users a chance to defend themselves. Without these improvements, the user experience will continue to deteriorate, and trust between users and platforms will erode further.

Conclusion

In conclusion, social media platforms now play a very important role in our daily lives, but they also have many serious problems that need to be solved. When it comes to protecting users’ mental health, especially that of teenagers, platforms are not doing enough. Cyberbullying is getting worse because of the anonymity and wide reach of these platforms, and it is hurting the younger generation. Schools and parents can do only so much; mainly, they can offer guidance and support. Platforms should therefore enforce a strict zero-tolerance rule against cyberbullying and punish repeat offenders, for example with permanent bans. Platforms also need to make rule-making more democratic, and gathering public feedback through meetings or comments would be a good way to communicate with users.

When it comes to bias, platforms need to review their terms of service regularly to make sure they are fair and equal. If they keep unfair policies, they risk losing users and may face legal trouble. To tackle misinformation, and misleading advertising as well, social media companies must work with reliable news sources and scientific institutions to check whether shared content is true. Platforms then have to show clearly which claims are false and make sure users learn the truth more quickly.

Finally, transparency is key. When users have their content removed or their accounts blocked, they should receive a full explanation, and there should be an easy and quick appeals process. Only by addressing all these issues directly can social media platforms hope to regain users’ trust and become a genuinely positive force in our lives rather than a source of stress and harm. Otherwise, the problems will keep getting worse and damage the way our digital society works.

References

Suzor, N. P. (2019). Lawless: The Secret Rules That Govern Our Digital Lives. Cambridge University Press. https://doi.org/10.1017/9781108666428

Amazon. (n.d.). Content Guidelines. https://kdp.amazon.com/en_US/help/topic/G200672390

UNICEF Australia. (2025). What is harmful content? https://www.unicef.org.au/unicef-youth/staying-safe-online/what-is-harmful-content

Matamoros-Fernández, A. (2017). Platformed racism: The mediation and circulation of an Australian race-based controversy on Twitter. Information, Communication & Society, 20(6), 930–946. https://doi.org/10.1080/1369118X.2017.1293130

Flew, T. (2021). The end of the Libertarian Internet. Polity Press.

Vogels, E. A. (2022, December 15). Teens and cyberbullying 2022. Pew Research Center. https://www.pewresearch.org/internet/2022/12/15/teens-and-cyberbullying-2022/

Newport Academy. (2024). How does social media affect teens? https://www.newportacademy.com/resources/well-being/effect-of-social-media-on-teenagers/

UK Government. (2021, June 29). Understanding and reporting online harms on your online platform. https://www.gov.uk/guidance/understanding-and-reporting-online-harms-on-your-online-platform
