As the world becomes more digitized, have you ever wondered how well digital platforms know you? From social media to all kinds of online services, we interact with these platforms almost every minute of every day. Almost anything we do can be silently recorded and analyzed, turning these platforms into ‘invisible monitors.’

This kind of tracking makes personalization possible, but it also poses profound challenges to personal privacy and digital rights. As we enjoy the convenience of customized experiences, do we realize what we are paying for? Let’s put privacy at the front of our minds, consider how digital platforms act as ‘invisible monitors’ in our lives, and explore how we can keep our digital information safe.

Two Platforms You Already Know: Facebook and TikTok

Let’s look at two of the biggest digital platforms in our daily lives, Facebook and TikTok. Both collect data on you without your noticing and learn about your every move, and in doing so they become ‘invisible monitors.’

Facebook: deep customisation you didn’t know existed

Have you ever noticed that the more you like, share, and comment on Facebook, the more the platform seems to know about you? Facebook runs a vast data collection system that turns the details of our daily interactions into a precise user profile. It then uses that profile to build an ‘information cocoon’ around us. The platform filters what you see according to your interests and behavior. Over time your view narrows, until you see almost nothing but information that matches your viewpoint and confirms your biases.

The best-known example is the Cambridge Analytica scandal, which broke in 2018. The personal data of tens of millions of Facebook users had been harvested without authorization and used for political advertising aimed at influencing elections. The figure was initially reported as 50 million; Facebook later put it at up to 87 million. The incident exposed loopholes in the platform’s data protection. It also made the public realize how data-driven targeting and the information cocoon can quietly shape public opinion and the democratic process (Confessore, 2018; Wong, 2019). In other words, Facebook’s data collection does two things. It turns users’ information into commercial profit, and it makes it easier to lock users into a single information environment, feeding polarization and social division.

TikTok: So Fine-Grained It Knows Your Every Reaction

Now let’s turn to TikTok. The magic of this short-video platform is that it keeps you swiping all day long. As soon as you start watching, the platform quickly learns what you love and constantly adjusts its recommendations. TikTok pays attention to more than which videos you click on. It also tracks how long you keep watching, how often you like videos, and what you comment on. Its privacy policy has even stated that it may collect biometric identifiers, such as faceprints and voiceprints, from the content you upload. The result is that it can pick your next video with remarkable precision, as if each one were made just for you.

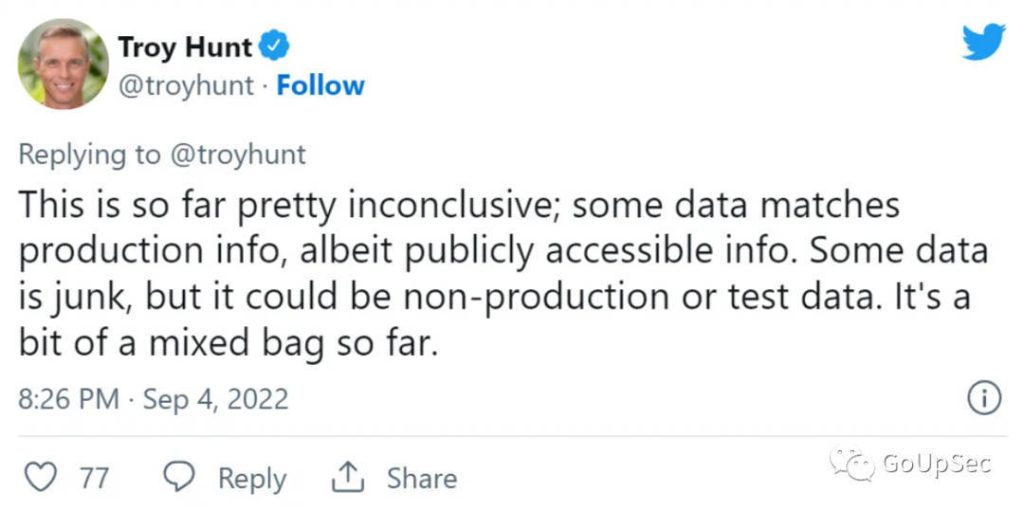

It’s amazing, but also a bit scary. With so many subtle signals about you being recorded and analyzed, doesn’t it know you a little too well? Young people, and anyone sensitive about data privacy, can easily feel that TikTok’s tracking has violated their privacy without their noticing. They may ask, ‘I just want to watch a random video; why is my every tiny move being counted?’ This is the so-called ‘invisible surveillance’: you hardly notice it, yet it silently records your behavior. If such a platform is ever breached, the privacy consequences could be enormous. In 2022, for example, a hacker claimed to have stolen some 2 billion database records from TikTok, a breach the company denied (Winder, 2022).

It’s these real-life examples that make us reflect: while enjoying personalized recommendations and customized services, are we also being stealthily manipulated at the expense of control over our own information?

Understanding Privacy in the Digital World

“What is privacy in an age when data platforms act as ‘invisible monitors’?”

“What are digital rights?”

The concept of privacy now extends far beyond traditional ‘personal invisibility.’ It is closely tied to data protection, and the human rights bound up with digital privacy need to be redefined.

What is privacy?

In the digital environment, privacy is largely a matter of data protection. It requires that the collection, storage, and use of user data be reasonably controlled and regulated so that data is not leaked or misused. In this way it protects the safety and dignity of every individual.

The Role of Data

Personal data on social platforms is constantly being collected, stored, and analyzed. This data helps platforms deliver personalized services, but it can also be used for commercial analysis, market predictions, and even political manipulation.

Digital Rights

Digital rights are the new human rights that have emerged in the digital world, closely intertwined with traditional human rights. They require platforms and governments to respect and protect users’ data security and their right to choose. The aim is that everyone enjoys equal and dignified freedom of information in the digital environment.

The Veil of Invisible Surveillance

The Double-Edged Sword of Digital Life

Have you ever experienced this? You’re chatting with friends about something, or perhaps you’ve just seen something you like, and the next thing you know, you’re getting ads for that very thing on your go-to platforms. It feels like your life is being monitored, doesn’t it?

Take Facebook and TikTok as examples. On one hand, they tailor ads to your interests and keep refining content recommendations based on your viewing history. On the other hand, precise algorithms collect and analyze our daily activities. They turn those activities into valuable data assets that generate profit in commercial competition. These data are exactly what our digital rights should protect. While we enjoy the convenience these platforms provide, we often overlook the risks lurking behind the scenes. Every data point we create can become raw material for what Zuboff (2015) calls “surveillance capitalism,” and a potential violation of our privacy.

Privacy Invasion: The Hidden Cost

You might think your daily activities are insignificant. Through “invisible surveillance,” however, digital platforms can gain insight into your behavior patterns, your interests, and even your political leanings. Your information is used for targeted advertising and for predicting social behavior. You may even be drawn into political battles without realizing it.

At the same time, this invasion of privacy can narrow the range of information you are exposed to, trapping you in an ‘information cocoon’ that restricts your thinking. Platforms know a great deal about us, while we know very little about how they collect and use our data. This imbalance of data power is one of the root causes of privacy violations.

Returning Privacy to Its Essential Place in Our Lives

So when we talk about privacy today, it is no longer just about “what I do is none of your business.” It is about the security and proper use of our online information. You may be happy to share your life with friends without wanting it used for other purposes, such as targeted advertising or political manipulation. Privacy requires that this information flow only where you want it to, rather than becoming a tool for someone else’s profit.

Respecting the Original Context of Our Lives

Just as we adjust our words and actions to different occasions, our private information deserves to be treated appropriately within its original context. Online data is like clothing chosen for a specific event: worn in the right setting, it causes no awkwardness. Imagine broadcasting intimate family conversations to the public; such an act would be jarring. Privacy protection therefore requires platforms to honor the original context and cultural background of our data when they use it (Nissenbaum, 2018).

Taking Control of Our Digital Destiny

In addition, each of us should have a say in what happens to our data, which is part of what we mean by ‘digital rights.’ You should be in control of every piece of data you generate online, just as you are in control of your wallet. The privacy settings that digital platforms offer act as a kind of gatekeeper. They let you choose what others can see and what stays in your own little world. In the end, the key to privacy is knowing where your data is and how others are using it (Yee, 2006).

Ensuring Transparency from Platforms and Policies

Of course, individual efforts can only go so far. Digital platforms should make it easy for us to manage our privacy. They should also operate in the open, so that everyone knows how they collect and use data. Governments and regulators must step in to set stricter rules and establish a standardized regulatory framework. Only when platforms, users, and governments work together in a multistakeholder governance model can we truly enjoy a safe and transparent online environment in this digital age (Gleckman, 2018).

Outlook and Practical Tips

Building a digital ecosystem that respects privacy

From examples to theory, we have seen that the spread of digital platforms has dramatically changed the way we live. It has also created unprecedented challenges for privacy protection.

Looking ahead, we need to build an ecosystem that fosters technological innovation while respecting individuals’ privacy and digital rights. That ecosystem must do three things:
- recognize that digital technologies are redefining human rights;
- ensure that privacy protection respects the social context of information;
- pay attention to the particular privacy risks that marginalized groups face in the digital age.

The key to all of this is multi-party collaboration, requiring the combined efforts of individuals, platforms and governments:

- Platform responsibility: While pursuing economic efficiency, digital platforms must enhance transparency, accept external oversight, and collect and use data ethically.

- Government regulation: Governments should adopt and enforce strict data protection regulations, learning from advanced international practice such as the European Union’s General Data Protection Regulation (GDPR). The law should give users practical privacy protection.

- User engagement and education: Continuously improve public digital literacy so that users can manage their data proactively. Public scrutiny can also push platforms to strengthen their privacy protections.

- Protection of marginalised groups: Develop specialised policies for vulnerable groups to ensure equal privacy and information security for all in the digital world.

Tips

- Sharing Wisely: Think before you post on social media. Avoid posting private information that could be used for identity theft. Consider how a post might affect your offline life, and use audience groups or lists to control who sees specific content.

- Apps and Your Data: Be careful about granting apps permission to access your personal data. Some apps need your data for good reasons, but others may abuse it.

- Online Presence: Your online presence reflects who you are. Keep it positive and private by reviewing your posts and photos, and do a digital clean-up from time to time. Be careful, too, about the personal details you include in a CV you share online. Small details can be risky in the wrong hands.

Should We Trust Digital Platforms?

The development of digital platforms has made our world more interconnected. These platforms are enablers of modern social progress, but they are also ‘invisible monitors’ of our lives. We now have a fuller understanding of how they affect our privacy, from the mechanisms of surveillance capitalism to the redefinition of human rights in the digital age.

In the future, how can technological innovation be balanced with privacy protection? How can we enjoy the convenience of these services while safeguarding basic human rights? These are questions every citizen of the digital age must face. I hope this article prompts more thinking about digital rights and privacy protection. Together, we can push society toward greater transparency, fairness, and security.

References

Bessadi, N. (2023, April 20). How can we balance security and privacy in the digital world? Diplo. https://www.diplomacy.edu/blog/how-can-we-balance-security-and-privacy-in-the-digital-world/

Confessore, N. (2018, April 4). Cambridge Analytica and Facebook: The scandal and the fallout so far. The New York Times. https://www.nytimes.com/2018/04/04/us/politics/cambridge-analytica-scandal-fallout.html

EMB Blogs. (2024, January 3). Safeguarding digital life: Data privacy and protection. https://blog.emb.global/data-privacy-and-protection/

European Union. (2018). General Data Protection Regulation (GDPR). https://gdpr-info.eu/

Flew, T. (2021). Regulating platforms. Polity Press.

Gleckman, H. (2018). Multistakeholder governance and democracy: A global challenge (1st ed.). Routledge.

Goggin, G., Vromen, A., Weatherall, K., Martin, F., Webb, A., Sunman, L., & Bailo, F. (2017). Digital rights in Australia.

Human rights and the digital. (2017). In The Routledge companion to media and human rights (pp. 95–103). Routledge. https://doi.org/10.4324/9781315619835

IGI Global. (2022). What is information cocoon. https://www.igi-global.com/dictionary/research-on-social-media-advertising-in-china/111021

Leenes, R., van Brakel, R., Gutwirth, S., & De Hert, P. (Eds.). (2017). Data protection and privacy: The age of intelligent machines. Hart Publishing.

McCulloch, D. (2019). Watchdog to monitor digital platforms. AAP General News Wire. Australian Associated Press.

Multistakeholderism toolkit. (2020). Blogspot.com. https://learninternetgovernance.blogspot.com/p/multistakeholderism-toolkit.html

Nissenbaum, H. (2018). Respecting context to protect privacy: Why meaning matters. Science and Engineering Ethics, 24(3), 831–852. https://doi.org/10.1007/s11948-015-9674-9

Suzor, N. P. (2019). Who makes the rules? In Lawless: The secret rules that govern our digital lives (pp. 10–24). Cambridge University Press.

Understanding privacy at the margins: Introduction. (2018). International Journal of Communication, 12, 1157–1165.

Winder, D. (2022, September 6). TikTok denies breach after hacker claims “2 billion data records” stolen. Forbes. https://www.forbes.com/sites/daveywinder/2022/09/06/has-tiktok-us-been-hacked-and-2-billion-database-records-stolen/

Wong, J. C. (2019, March 18). The Cambridge Analytica scandal changed the world – but it didn’t change Facebook. The Guardian. https://www.theguardian.com/technology/2019/mar/17/the-cambridge-analytica-scandal-changed-the-world-but-it-didnt-change-facebook

Yee, G. (2006). Privacy protection for e-services. IGI Global. https://doi.org/10.4018/978-1-59140-914-4

Zuboff, S. (2015). Big Other: Surveillance capitalism and the prospects of an information civilization. Journal of Information Technology, 30(1), 75–89. https://doi.org/10.1057/jit.2015.5