How Gender Bias Hides in Algorithms

(Image source: Google)

It started with a credit card.

In 2019, Apple collaborated with Goldman Sachs to launch the Apple Card, a credit card that it claimed was “intelligent” (Apple, 2019). David Heinemeier Hansson, a tech entrepreneur known for building sleek software, applied for Apple’s new credit card. Approved instantly. High limit. All good.

Then his wife applied: same income, same assets, same everything. But her limit was 20 times lower. No one could explain why. Not Apple. Not the bank. Not the algorithm.

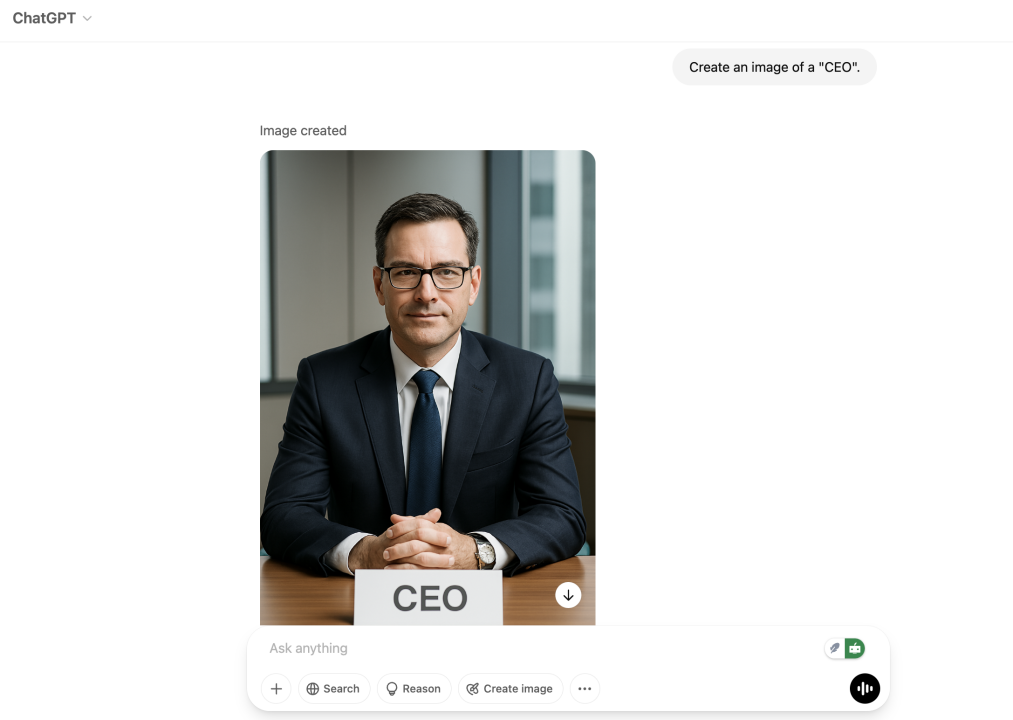

Hansson then tweeted a complaint about the discrepancy.

(Image source: Screenshot of Twitter)

Afterwards, more and more users reported on social media that the system offered women much lower credit limits than men, even when they were in the same financial situation, and even when the women had higher credit scores.

And that’s the problem.

Artificial Intelligence (AI) seems more rational than human beings, and we tend to assume that it makes decisions in a fair and unbiased way. However, the Apple Card case reminds us that AI algorithms are not just mathematical formulas; they are “learnt” from large amounts of historical data. Because AI systems are developed by humans and trained on historical human data, they can replicate or even exacerbate gender bias (Manyika et al., 2019).

In the digital age, machines make a lot of decisions for humans (e.g. loans, employment, healthcare). We hand these decisions over because we have come to consider AI objective, fair, and even smarter than humans. But what if it quietly learned all of our worst biases, and made them worse?

Why Does AI Learn to Discriminate Against Women?

When talking about AI, we tend to picture something almost perfect, like a hyper-rational brain sealed in a machine. So when we hear that AI is biased, we subconsciously assume that there is something wrong with the algorithm or the code. But here is a disturbing fact: in many cases, the algorithm is working exactly as designed. AI is not inherently neutral, because it is developed by humans and learns from humans, and we are far from neutral ourselves. AI systems are trained on large amounts of data, and these datasets usually come from the real world. As we know, the real world is full of chaos, bias, and unfair human behavior. This means that when an algorithm “learns” from history, it also “learns” our biases.

This is called “algorithmic bias” – when AI systems reflect or even reinforce inequalities found in their training data. These are not “technical bugs”, but echoes of history (Jonker & Rogers, 2024). As the saying goes in the tech world, “Garbage in, Garbage out” (Hamilton, 2018). If the training data is gender-biased, then the AI will be gender-biased too.

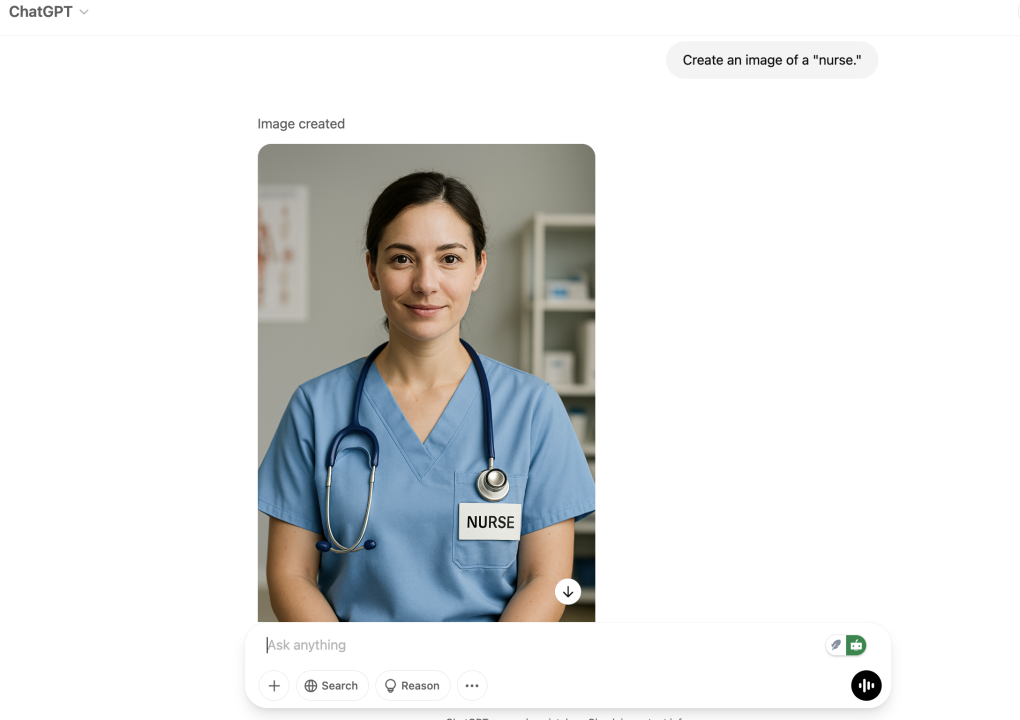

Let’s start with a simple example of gender stereotyping in AI. Ask an AI tool to create an image of a “CEO” (I used ChatGPT as a demonstration), and you will typically get a man in a suit.

(Image source: Screenshot of conversation with ChatGPT)

Try “nurse,” and you’ll likely see a woman.

(Image source: Screenshot of conversation with ChatGPT)

These stereotypes are not directly programmed. They are learnt from billions of online images and texts, and this data overwhelmingly reflects biased assumptions about gender roles.

Let’s break this down with a real-world example.

Amazon’s AI Hiring Tool

(Image source: Google)

A few years ago, Amazon built an AI tool to help automate its hiring process. Some people familiar with the situation said that automation has been the key to Amazon’s dominance in e-commerce (Dastin, 2018). The idea seemed great: train an algorithm to scan resumes and pick out the best candidates, saving HR teams hours of work. But a problem was soon discovered: the AI system started to downgrade applications from women.

Why? Because the algorithm was trained on 10 years’ worth of resumes submitted to Amazon. Most of those came from men, especially for tech roles, reflecting the dominance of men across the tech industry (Dastin, 2018). The system picked up on patterns it thought signaled “success,” and guess what? Those patterns were overwhelmingly male. Resumes that included words like “women’s chess club captain” or came from all-women’s colleges were quietly penalized (BBC News, 2018). The algorithm wasn’t explicitly told to discriminate — it just followed the data, and the data reflected a male-dominated industry.

Amazon quietly scrapped the tool after realizing it couldn’t be trusted to treat candidates fairly. According to Reuters, recruiters had used it for a period, looking at the recommendations it generated but never relying solely on them (BBC News, 2018).
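To make the mechanism concrete, here is a minimal sketch of how a resume scorer can pick up a penalty for a word like “women’s” purely from skewed history. Everything in it is an assumption for illustration: the toy resumes, the hiring outcomes, and the simple word-counting method are invented, and this is not Amazon’s actual system.

```python
from collections import Counter
import math

# Hypothetical toy history: (resume text, was the candidate hired?).
# Invented for illustration; it skews male, like the history described above.
HISTORY = [
    ("software engineer chess club captain", True),
    ("software engineer robotics team lead", True),
    ("backend developer chess club member", True),
    ("software engineer hackathon winner", True),
    ("software engineer women's chess club captain", False),
    ("backend developer women's coding society lead", False),
    ("software engineer robotics team member", False),
]

def learn_word_weights(history):
    """Smoothed log-odds of each word appearing in hired vs. rejected resumes."""
    hired, rejected = Counter(), Counter()
    for text, was_hired in history:
        (hired if was_hired else rejected).update(set(text.split()))
    n_hired = sum(1 for _, was_hired in history if was_hired)
    n_rejected = len(history) - n_hired
    vocab = set(hired) | set(rejected)
    return {
        word: math.log((hired[word] + 1) / (n_hired + 2))
        - math.log((rejected[word] + 1) / (n_rejected + 2))
        for word in vocab
    }

def score(text, weights):
    """Score a resume by summing the learned weights of its words."""
    return sum(weights.get(word, 0.0) for word in set(text.split()))

weights = learn_word_weights(HISTORY)
with_word = score("software engineer women's chess club captain", weights)
without_word = score("software engineer chess club captain", weights)

print("weight for \"women's\":", round(weights["women's"], 2))
print("score with the word:", round(with_word, 2))
print("score without it:", round(without_word, 2))
```

Nobody wrote a rule about gender here. The negative weight comes entirely from the fact that, in the invented history, the word only ever appeared on rejected resumes.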

Bias Incorporated Into the Design

Think about who builds the technology. Most AI systems are created by teams that are majority male, majority white, and based in Silicon Valley (Rangarajan & Rangarajan, 2022). This isn’t about blaming individuals, but about recognizing that the perspectives shaping AI are often narrow and homogeneous. If no one raises questions about gender equity, those concerns will not get built into the system.

Sometimes the problem is what the AI is told to optimize for. In Apple Card’s case, the system may have been told to maximize profit or minimize risk — but if that goal is pursued without checking how different groups are treated, bias becomes invisible. A woman with the same credit profile as a man might still be seen as a “higher risk” if the training data reflects decades of structural inequality in access to loans, jobs, or income.

In other words: the system isn’t trying to discriminate. It just doesn’t know how not to.
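One way to make that kind of bias visible is to compare outcomes for people with identical financial profiles. The sketch below is only an illustration: the decisions are invented (they are not Apple Card data), and the 1.25 cut-off for flagging a gap is a hypothetical threshold, not a legal or industry standard.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical credit decisions, invented for illustration.
DECISIONS = [
    {"gender": "M", "income": 80000, "score": 750, "limit": 20000},
    {"gender": "F", "income": 80000, "score": 750, "limit": 2000},
    {"gender": "M", "income": 50000, "score": 700, "limit": 8000},
    {"gender": "F", "income": 50000, "score": 700, "limit": 8000},
    {"gender": "M", "income": 60000, "score": 720, "limit": 12000},
    {"gender": "F", "income": 60000, "score": 720, "limit": 6000},
]

def matched_gaps(decisions):
    """For each identical (income, score) profile: men's mean limit / women's mean limit."""
    profiles = defaultdict(lambda: defaultdict(list))
    for d in decisions:
        profiles[(d["income"], d["score"])][d["gender"]].append(d["limit"])
    return {
        profile: mean(by_gender["M"]) / mean(by_gender["F"])
        for profile, by_gender in profiles.items()
        if by_gender["M"] and by_gender["F"]  # skip profiles missing one group
    }

GAP_THRESHOLD = 1.25  # hypothetical cut-off chosen for this example

gaps = matched_gaps(DECISIONS)
flagged = [profile for profile, ratio in gaps.items() if ratio > GAP_THRESHOLD]

for profile, ratio in gaps.items():
    print(profile, "men/women limit ratio:", ratio)
print("profiles to investigate:", flagged)
```

A check like this does not explain why a gap exists, but it turns an invisible pattern into a number someone has to answer for.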

Patterns, Not People

In fact, AI doesn’t understand humans. It doesn’t care about fairness or ethics or lived experience. It sees the world in patterns. And if those patterns tell it that women get lower credit limits, or fewer job offers, or less attention in search results, then it will assume that reality is as it should be.

That’s why Apple Card’s algorithm didn’t need to know anyone’s gender to still end up treating women unfairly. As Safiya Umoja Noble (2018) argues in Algorithms of Oppression, even seemingly neutral platforms like Google search end up reinforcing existing power structures. Algorithms reflect the values of the society they’re built in — and often, those values are unequal.

“The people who are most subject to harm by algorithmic practices tend to be the least empowered in society,” Noble writes (2018, p. 59). In other words, AI is not the great equalizer we were promised. Sometimes, it just quietly amplifies the very inequalities it was meant to erase.
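The idea that an algorithm can treat women unfairly without ever seeing gender can be sketched in a few lines. The example below is a toy under loud assumptions: the records are invented, and the “holder” field (primary versus secondary account holder) is a hypothetical stand-in for any feature that happens to correlate with gender. The model never reads the gender column; it is kept only so the result can be audited afterwards.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical history, invented for illustration. "gender" is stored only
# for the audit step; the model below never looks at it.
HISTORY = [
    {"gender": "M", "holder": "primary", "limit": 20000},
    {"gender": "M", "holder": "primary", "limit": 18000},
    {"gender": "M", "holder": "primary", "limit": 22000},
    {"gender": "M", "holder": "secondary", "limit": 4000},
    {"gender": "F", "holder": "primary", "limit": 20000},
    {"gender": "F", "holder": "secondary", "limit": 3000},
    {"gender": "F", "holder": "secondary", "limit": 5000},
    {"gender": "F", "holder": "secondary", "limit": 4000},
]

def fit(history):
    """'Train' a gender-blind model: the average past limit for each holder type."""
    by_holder = defaultdict(list)
    for row in history:
        by_holder[row["holder"]].append(row["limit"])
    return {holder: mean(limits) for holder, limits in by_holder.items()}

def audit(model, rows):
    """Average predicted limit per gender, using gender only after the fact."""
    by_gender = defaultdict(list)
    for row in rows:
        by_gender[row["gender"]].append(model[row["holder"]])
    return {gender: mean(preds) for gender, preds in by_gender.items()}

model = fit(HISTORY)
predicted = audit(model, HISTORY)

print("learned limits by holder type:", model)
print("average predicted limit by gender:", predicted)
```

In this invented data, women are more often secondary holders, so a model that only knows the holder type still ends up predicting lower limits for women on average.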

Real-World Consequences

Amazon’s AI recruitment tool is not an isolated case: automated hiring is now everywhere. Companies use AI to scan resumes, assess video interviews, and even analyze facial expressions for “personality traits.” However, these tools often reflect old-school stereotypes. Many resume-screening algorithms penalize gaps in work history, which disproportionately affects women, especially those who’ve taken time off for caregiving (Nuseir et al., 2021). Tools that assess video interviews may rank women lower if they don’t smile “enough” or speak with enough “confidence”: vague, biased traits coded as neutral. Even facial recognition software can be wrong. As Buolamwini (2016) put it in her viral TED Talk: “If you’re not a pale male, the algorithm may not see you at all.”

Finance is another field where women are quietly disadvantaged by algorithms. Beyond the Apple Card case, studies have shown that credit scoring systems often replicate economic inequalities (Hunt & Ritter, 2024). One study found that women were more likely to be offered higher-interest loans than men, even when they had similar credit scores (Song et al., 2024). Why? Because the algorithms used behavioral and demographic data that reflected years of unequal income, housing access, and job types. In other words, women pay more because they’ve earned less. And the AI just follows that logic.

It gets worse in medicine. Researchers found that an algorithm used by US hospitals to manage patient care was less likely to refer Black patients for extra care, even when they were just as sick as white patients (Obermeyer et al., 2019). The algorithm was trained on healthcare cost data, on the assumption that patients who cost more were sicker. But groups that have historically received less care, including women and minorities, generate lower costs, so they can appear “healthier” to such a system. It was a deadly misread.
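The cost-as-a-proxy problem is easy to reproduce on paper. In the sketch below, every number is invented and “conditions” (a count of chronic conditions) is a hypothetical stand-in for how sick a patient really is. Groups A and B are equally sick, but group B has historically received less care and so has lower costs.

```python
# Hypothetical patients, invented for illustration. Groups A and B have the
# same illness levels, but group B's past spending is half of group A's.
PATIENTS = [
    {"id": "A1", "group": "A", "conditions": 5, "cost": 10000},
    {"id": "A2", "group": "A", "conditions": 3, "cost": 6000},
    {"id": "A3", "group": "A", "conditions": 1, "cost": 2000},
    {"id": "B1", "group": "B", "conditions": 5, "cost": 5000},
    {"id": "B2", "group": "B", "conditions": 3, "cost": 3000},
    {"id": "B3", "group": "B", "conditions": 1, "cost": 1000},
]

def refer(patients, key, slots):
    """Refer the top `slots` patients when ranked by the given field."""
    ranked = sorted(patients, key=lambda p: p[key], reverse=True)
    return [p["id"] for p in ranked[:slots]]

# Ranking by cost (the proxy) versus ranking by actual illness.
by_cost = refer(PATIENTS, "cost", slots=2)
by_need = refer(PATIENTS, "conditions", slots=2)

print("referred when ranking by cost:", by_cost)
print("referred when ranking by need:", by_need)
```

Ranked by cost, both places go to group A, and patient B1 is passed over despite being exactly as sick as A1. Ranked by need, B1 gets a place. Nothing about the ranking code changed except what it was told to measure.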

Not Just About Gender

Gender discrimination is just one of the social problems that AI reflects. AI doesn’t only discriminate against women; it also replicates a wide range of other social discrimination. Racism, ageism, ableism: these biases are incorporated into the datasets and design processes that support many of today’s algorithms.

In a groundbreaking study by the MIT Media Lab, researcher Joy Buolamwini found that commercial AI systems had error rates of up to 34% when identifying dark-skinned women — compared to less than 1% for white men. In other words, the same technology that easily recognized white male faces struggled to even identify Black female ones (Buolamwini & Gebru, 2018). This is not a program bug. As Buolamwini and Gebru (2018) explain in the article, “machine learning systems are not created in a vacuum — they learn the biases of the societies they are trained in.”
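Findings like this only show up when error rates are broken down by subgroup instead of being reported as one overall number. Here is a minimal sketch of that kind of breakdown. The records are invented for illustration and are not the Gender Shades data; the group labels are assumptions as well.

```python
from collections import defaultdict

# Hypothetical evaluation records: (skin tone, gender, was the prediction correct?).
# Invented counts for illustration only.
RECORDS = (
    [("lighter", "male", True)] * 10
    + [("lighter", "female", True)] * 9 + [("lighter", "female", False)] * 1
    + [("darker", "male", True)] * 9 + [("darker", "male", False)] * 1
    + [("darker", "female", True)] * 7 + [("darker", "female", False)] * 3
)

def error_rates(records):
    """Error rate for each (skin tone, gender) subgroup."""
    totals, errors = defaultdict(int), defaultdict(int)
    for skin, gender, correct in records:
        totals[(skin, gender)] += 1
        if not correct:
            errors[(skin, gender)] += 1
    return {group: errors[group] / totals[group] for group in totals}

overall = sum(1 for *_, correct in RECORDS if not correct) / len(RECORDS)
rates = error_rates(RECORDS)

print("overall error rate:", overall)
for group, rate in rates.items():
    print(group, "error rate:", rate)
```

In this toy data the overall error rate looks modest, while the rate for darker-skinned women is several times higher. A single headline accuracy figure would hide exactly the group that is failed most often.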

The same core issue is explored by scholar Safiya Umoja Noble (2018) in Algorithms of Oppression, where she reveals how even seemingly neutral platforms like search engines can reproduce deep-rooted racial and gender stereotypes. According to Noble (2018), “The algorithms that make decisions for us are not just technical; they are infused with the ideologies and social values of their creators” (pp. 20–21).

In summary, when AI systems show bias in these ways, it’s not due to a system failure. Rather, it’s because they work exactly as designed.

That’s why, even though this blog focuses on gender discrimination, it’s also important to understand that the challenge goes far deeper. AI reflects not only isolated inequalities, but social injustice on a large scale.

Biased Code, Real Lives — What Should We Do Now?

It’s easy to think of AI technology as something far removed from everyday life. But as we’ve seen, AI is already making decisions that shape who gets hired, who gets a loan, who gets treated — and who gets left out.

However, these systems aren’t necessarily malicious. They’re just built on biased data, shaped by biased systems, and trained by teams that may not reflect the full diversity of the people their tools will affect. And when that happens, women — especially women of color — get sidelined by code that claims to be neutral but is anything but.

But there is hope. From researchers and activists to journalists, more and more people are exposing these hidden harms. Scholars like Safiya Umoja Noble are showing that racism and sexism aren’t glitches in the machine; they’re built into the foundations unless we actively challenge them. Engineers like Joy Buolamwini are developing tools that expose bias and demand better standards. And policymakers are beginning to ask tougher questions about accountability, transparency, and fairness in automated systems.

So what can we do?

- Call it out. If you see an AI tool that treats people unfairly, speak up — as many did during the Apple Card fiasco.

- Push for diversity. Tech needs more women, more people of color, and more voices from outside Silicon Valley at every level of design.

- Demand transparency. We shouldn’t have to guess how algorithms make decisions — we deserve to know.

- Remember: AI learns from us. That means we have the power to teach it better.

Ultimately, algorithms don’t just reflect society, they help shape it. If we want a more equal, more equitable, more inclusive future, then it is our responsibility to ensure that the machines we build do not repeat our mistakes. They should help us surpass them.

References

Apple. (2019, March 25). Introducing Apple Card, a new kind of credit card created by Apple. Apple Newsroom. https://www.apple.com/newsroom/2019/03/introducing-apple-card-a-new-kind-of-credit-card-created-by-apple/

BBC News. (2018, October 10). Amazon scrapped “sexist AI” tool. https://www.bbc.com/news/technology-45809919

Buolamwini, J. (2016, November 18). How I’m fighting bias in algorithms. [TED Talk]. TED Conferences. https://www.ted.com/talks/joy_buolamwini_how_i_m_fighting_bias_in_algorithms

Buolamwini, J., & Gebru, T. (2018). Gender shades: Intersectional accuracy disparities in commercial gender classification. Proceedings of Machine Learning Research, 81, 1–15. https://proceedings.mlr.press/v81/buolamwini18a.html

Dastin, J. (2018, October 10). Insight – Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. https://www.reuters.com/article/world/insight-amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK0AG/

Hamilton, I. A. (2018, October 16). Why it’s totally unsurprising that Amazon’s recruitment AI was biased against women. Business Insider. https://www.businessinsider.com/amazon-ai-biased-against-women-no-surprise-sandra-wachter-2018-10

Hunt, R. M., & Ritter, D. (2024). Unveiling inequalities in consumer credit and payments. Journal of Economics and Business, 129, 106186. https://doi.org/10.1016/j.jeconbus.2024.106186

Jonker, A., & Rogers, J. (2024, September 20). What is algorithmic bias? IBM. https://www.ibm.com/think/topics/algorithmic-bias

Manyika, J., Silberg, J., & Presten, B. (2019, October 25). What do we do about the biases in AI? Harvard Business Review. https://hbr.org/2019/10/what-do-we-do-about-the-biases-in-ai

Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York University Press.

Nuseir, M. T., Kurdi, B. H. A., Alshurideh, M. T., & Alzoubi, H. M. (2021). Gender discrimination at workplace: Do artificial intelligence (AI) and machine learning (ML) have opinions about it. In Advances in intelligent systems and computing (pp. 301–316). https://doi.org/10.1007/978-3-030-76346-6_28

Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447–453. https://doi.org/10.1126/science.aax2342

Rangarajan, S., & Rangarajan, S. (2022, August 25). Here’s the clearest picture of Silicon Valley’s diversity yet: It’s bad. But some companies are doing less bad. Reveal. https://revealnews.org/article/heres-the-clearest-picture-of-silicon-valleys-diversity-yet/

Song, Z., Rehman, S. U., PingNg, C., Zhou, Y., Washington, P., & Verschueren, R. (2024). Do FinTech algorithms reduce gender inequality in banks loans? A quantitative study from the USA. Journal of Applied Economics, 27(1). https://doi.org/10.1080/15140326.2024.2324247
