Have you ever seen some malicious posts or comments against a certain group while using social media?

Do you remember how you felt?

Dr Ally Louks, a student at the University of Cambridge, completed her PhD in English Literature on November 28, 2024. She shared the news on the social media platform X, posting a photo of herself holding a copy of her dissertation. She wrote: “Thrilled to say I passed my viva with no corrections and am officially PhDone.”

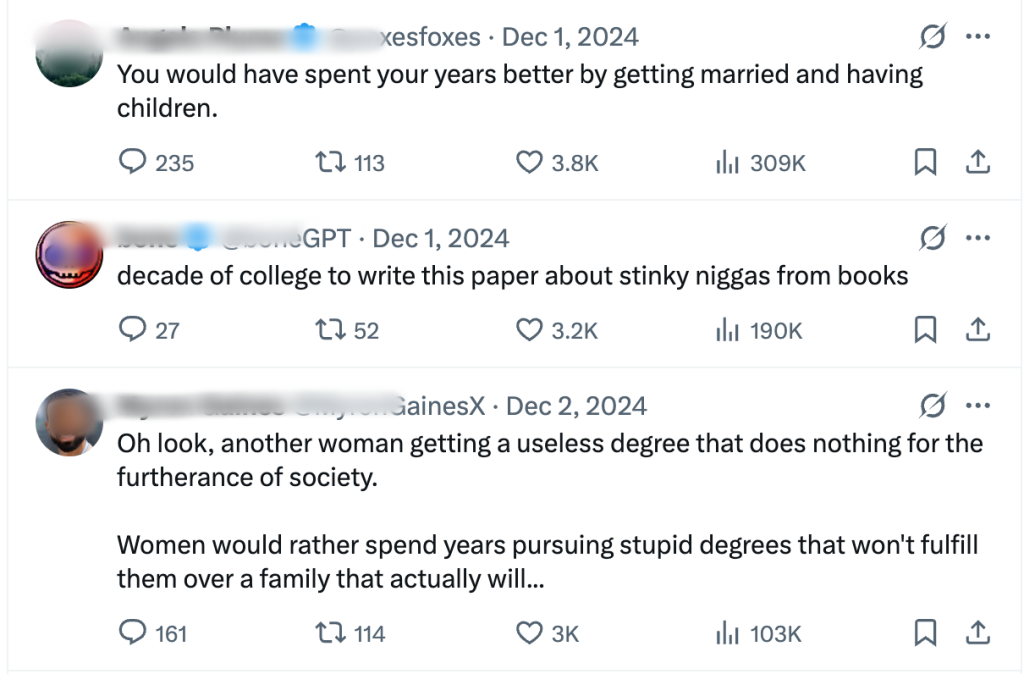

The post was viewed more than 100 million times in a few hours. However, as it continued to spread, the celebration spiraled out of control and turned into a nightmare. After around 48 hours, a wave of vicious comments appeared. Dr Ally Louks was ridiculed by right-wing posters, who called her work leftist academic garbage with no social value and subjected her to sexist insults and even threats of sexual assault and death.

These misogynistic comments flooded her post. They were full of discrimination and hostility toward women and academics. They spread virally, with the initial post growing to over 120 million views on platform X.

Clearly, this kind of hate speech on digital media platforms has turned a gratifying moment into an online battlefield filled with violence and harm. This prompts the question of whether platform X is to blame for the online harm caused by hate speech, and what should be done to reduce hate speech.

Before that, we should first understand what hate speech is.

Hate speech has been defined as speech that “expresses, encourages, stirs up, or incites hatred against a group of individuals distinguished by a particular feature or set of features such as race, ethnicity, gender, religion, nationality, and sexual orientation” (Parekh, 2012, p. 40). Hate speech stigmatizes certain groups, labeling them negatively, treating them as a social threat and creating antagonism (Parekh, 2012).

Hate speech is also accompanied by online harassment and online harm. It does not merely offend people or hurt their feelings; it can harm them both immediately and over time (Sinpeng et al., 2021).

Therefore, policies and regulations are necessary, yet platform X does not implement them well. The relaxation of content moderation on platform X has led to an increase in hate speech. I propose that the platform company should take responsibility and strengthen content moderation.

The Influence and Essence of Moderation Relaxation

In 2022, American entrepreneur Elon Musk acquired the social media company Twitter, which he later renamed X. He insists that free speech is essential to a functioning democracy and believes that, once reformed, the company will become the world’s premier platform for free speech.

Musk relaxed content moderation. He ended lifetime account suspensions and dismissed many content moderators. Moreover, he dissolved the Trust and Safety Council, which had advised on Twitter’s policies on hate speech, child sexual exploitation and self-harm content.

The relaxation of moderation creates a tolerant environment for abuse. The Center for Countering Digital Hate (CCDH) studied a sample of 300 posts containing hate speech on platform X. It found that 90% of the accounts in the sample remained active and 86% of the posts were not deleted, even though each post apparently violated at least one of X’s anti-hate policies (X Content Moderation Failure, 2023).

Multiple organizations have since reported an increase in hate speech on the platform, and harassment has become more intense. Misogynistic online hate has increased since the takeover of Twitter, and there has been a 69% rise in new accounts following misogynistic and abusive profiles (Spring, 2023).

What is more, although Musk claims that easing moderation serves free speech, the move is not neutral in essence. It pursues political interests under the guise of free speech.

After Musk acquired Twitter, the platform went from a slightly left-leaning platform to a megaphone promoting Make America Great Again (MAGA), which Musk uses several times a day to spread his political views (Fukuyama, 2025).

MAGA is a symbol of conservative political movements, so this idea of freedom of expression also serves these specific groups. They hold to what they see as a natural, biological division of labor between men and women, and they oppose women stepping outside traditional roles to pursue lifestyles of their own choosing.

This helps explain the surge of right-wing commenters in the Dr Ally Louks case who oppose women entering academia and argue that women should return to the family.

These voices promote misogyny and anti-intellectualism. Musk’s actions have dramatically expanded freedom of expression for the right wing. With bans on certain extremist accounts lifted and content moderation relaxed, hate speech can spread more freely on social media.

Dr Ally Louks is an accomplished woman in academia who presents herself on social media as confident and successful, which also explains why she was targeted.

Platforms’ Responsibility for Strengthening Moderation

While social media platforms break down barriers to message dissemination and allow everyone to freely express their opinions and participate in mass communication, they can also easily become a breeding ground for hate speech.

Because of the features of social media platforms, they invisibly support toxic technocultures, which often hold backward ideas about gender, sexual orientation and race, oppose pluralistic and progressive values, and use socio-technical platforms as channels for harassment (Massanari, 2017).

Consider the features of platform X. Anonymity without real-name authentication weakens the moral constraints users feel when they post: it shields them from direct attacks in return and lowers the cost of accountability for inappropriate speech.

Also, the openness of the platform allows people with similar ideas to quickly assemble and form group attacks.

Moreover, under the influence of the platform’s algorithm, content that conforms to the tone of the platform is more likely to be pushed to the audience, which relates to the political interests discussed above.

Platforms are not neutral, because of how they are designed and run. As a content gatekeeper, a platform determines what can circulate on it.

In the case of Dr Ally Louks, platform X relaxed moderation and loosened regulations on abusive posts in the name of promoting freedom of expression. This amplified right-wing voices through the platform’s algorithm, fueling the further spread of misogyny and anti-intellectualism, with non-mainstream groups as the main victims.

Therefore, because of these characteristics, social media platforms should be responsible for the foreseeable risks brought about by their choices. Platform governance is the key.

In contrast to the case of Dr Ally Louks, hate speech related to gender issues and directed at minorities had a different outcome before Twitter was acquired.

In 2022, the Twitter account of the conservative satire site The Babylon Bee was suspended for referring to a transgender woman as “Man of the Year” and using male pronouns and words to describe her. Twitter said the post violated its policy on hateful behavior (Dodds, 2022).

The incident sparked protests among some people about interference with freedom of expression and excessive moderation. It was also a key catalyst for Musk’s acquisition of Twitter and the reform of content moderation policies.

However, the tremendous amount of hate speech and the online abuse that follows show that this free speech absolutism needs to change considerably in the current legal and regulatory environment (Flew, 2021). Large social media platforms with a wide user base must adopt stricter content moderation and tighter control over hate speech and similar behavior (Woods and Perrin, 2021).

Moreover, platforms often hold contradictory attitudes toward the trade-off between content regulation and freedom of expression. Platforms need content moderation, which is a key part of the production chain of commercial websites and social media platforms. Yet companies’ working methods and standards are often opaque, and they are reluctant to disclose their moderation policies publicly, which contradicts the image of the social media platform as a zone of free expression (Roberts, 2019).

Therefore, content moderation on platform X should be strengthened, and it should be overseen by an independent body.

Possible Directions for Future Governance

Woods and Perrin (2021) proposed a Duty of Care as a general regulatory approach to online harms.

It is backed by an independent regulator, which limits the erosion of the free speech environment caused by platform companies’ opaque and undisclosed policies.

This approach emphasizes that governance should not focus directly on specific content. It is more important to pay attention to the design of the service, the tools that the platform offers to users, and the resources that the platform devotes to user complaints and user safety, because these aspects affect communication across the whole platform (Woods and Perrin, 2021).

The core of the Duty of Care is the concept of risk: the platform should actively identify and assess risks and take timely measures to reduce them (Woods and Perrin, 2021).

According to the theory of Duty of Care, the specific improvement directions of platform X should be as follows.

Platform Design

- User identities should be regulated to reduce anonymous abuse. For example, real-name authentication or additional identity verification could be required for users with high interaction and many followers. This would reinforce behavioral norms for these users and make them less likely to sway other users’ opinions recklessly.

- A stricter community management system should be adopted for users who harass others through private messages on platform X. For example, a user could be limited to sending one private message until the recipient replies.

Content Distribution

- Tag moderation can be enhanced. When platforms find that hashtags are being exploited to spread hate speech, they should reduce the hashtags’ visibility or keep them out of the recommendation stream. They should also lower the recommendation weight of reported hate content and the frequency of hashtags filled with extreme emotion, to reduce interaction with related hate speech.

Interaction Between the User and the Content

- Stronger personalized security settings should be provided. For example, users could choose whether to accept likes and comments from anonymous users or people they do not follow, reducing the risk of harassment from unfamiliar accounts.

Finally, complaint and deletion mechanisms are also important as the last line of defense. Faster and more transparent complaint and reporting systems should be established to improve the efficiency of handling hate speech.

Conclusion

From the case of Dr Ally Louks, I find that the relaxation of moderation on platform X has, to a certain extent, fueled the growth of hate speech and resulted in online harm. Moreover, platform governance is complicated. After the takeover of Twitter, platform X turned to pursuing freedom of expression. However, the relaxation of content moderation is not really a move to promote free speech but a strategy for pursuing political benefits. Because of the platform’s right-wing tendency, sexist hate speech is rampant, and women and academics have become targets. Weak platform regulation makes it difficult to truly promote open and equal communication.

The anonymity and openness of social media platforms suggest that platform companies should be responsible for the online harm caused by hate speech. Platform X should strengthen content moderation while still protecting a reasonable degree of freedom of speech. Moreover, because platforms are not neutral, setting up an independent regulator is a viable strategy for the future. The Duty of Care approach, which focuses on platform design and risk mitigation, can effectively guide the strengthening of content moderation.

References

Dodds, I. (2022). Conservative satire site The Babylon Bee locked out of Twitter for misgendering trans White House official. The Independent. https://web.archive.org/web/20220322023704/https://www.independent.co.uk/news/world/americas/us-politics/babylon-bee-twitter-rachel-levine-b2040895.html

Flew, T. (2021). Issues of Concern. In Regulating platforms (pp. 91–96). Polity.

Fukuyama, F. (2025, January 14). Elon Musk and the Decline of Western Civilization. Persuasion. https://web.archive.org/web/20250304084809/https://www.persuasion.community/p/elon-musk-and-the-decline-of-western

Massanari, A. (2017). #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346.

Parekh, B. (2012). Is there a case for banning hate speech? In The Content and Context of Hate Speech: Rethinking Regulation and Responses (pp. 37–56). Cambridge: Cambridge University Press.

Roberts, S. T. (2019). Understanding Commercial Content Moderation. In Behind the Screen: Content Moderation in the Shadows of Social Media (pp. 33–72). Yale University Press.

Sinpeng, A., Martin, F. R., Gelber, K., & Shields, K. (2021). Facebook: Regulating hate speech in the Asia pacific. Facebook Content Policy Research on Social Media Award: Regulating Hate Speech in the Asia Pacific.

Spring, M. (2023, March 6). Twitter insiders: We can’t protect users from trolling under Musk. BBC. https://www.bbc.com/news/technology-64804007

Woods, L., & Perrin, W. (2021). Obliging Platforms to Accept a Duty of Care. In Regulating Big Tech: Policy Responses to Digital Dominance (pp. 93–109). Oxford University Press, Oxford.

X Content Moderation Failure. (2023). Center for Countering Digital Hate | CCDH.
