In a Twitch livestream, a female streamer was playing a game while the chat filled with negative comments: “Women just aren’t suited to gaming,” “Another streamer who makes money by showing skin,” “Just stay in the kitchen,” and so on. Such comments are not unusual; they are simply part of daily life on female streamers’ channels.

Over the years, more and more women have taken up live game streaming, especially on popular platforms like Twitch. Yet they do not receive the same recognition for their gaming skills as male streamers. Instead, they are held to a more demanding standard: they face stricter judgments of their gaming skill and closer scrutiny of their appearance, as well as hate speech, cyber violence, and even threats to their physical safety offline.

We can’t help but wonder: why are female streamers more likely to be victims of cyber violence?

Hate speech is recognized as a form of speech that requires a policy response because it is damaging in ways comparable to more obvious physical harm. It has a cumulative effect on the well-being of its targets, building up over time like a chronic poison (Sinpeng, Martin, Gelber, & Shields, 2021, p. 6).

Twitch is a globally recognized live-streaming platform for gaming, and its governance and culture significantly influence user behavior and social perceptions. Previous studies have shown that video games are often considered a male-dominated domain, despite recent polls indicating that almost half of all gamers are female.

Data suggests that female streamers suffer about 11 times more harassment than their male counterparts (Holl, Wagener, & Melzer, 2024). As a result, women continue to be marginalized in the gaming community and are at risk of experiencing sexism in games and online at any time.

Case Study of the Female Streamer Pokimane

Pokimane, one of the most popular female streamers on Twitch, has millions of followers and broadcasts games such as League of Legends and Fortnite. Throughout her career, however, she has repeatedly been subjected to hate speech and gender-based attacks. Many have questioned her gaming skills, claiming that she relies on her sex appeal to attract viewers.

For example, her live streams commonly feature people criticizing her gaming ability, with comments such as “She just looks sexy and that’s it, no gaming skills.” These malicious comments not only attack her gaming performance but also shift the focus to her appearance and gender. Pokimane has been mocked for streaming without makeup, and she has said in interviews that this criticism has left a lasting psychological mark on her; she has even expressed fear of broadcasting without makeup.

Additionally, comments like “Women should be sexy, don’t play games” reflect deep-seated gender stereotypes. Such comments illustrate that hate speech is a discriminatory discursive practice: much like other forms of discrimination, it acts against its targets in ways that deny them equality of opportunity and violate their rights (Sinpeng et al., 2021).

As a result, Pokimane has been deeply affected by this online environment. In 2024, she announced that she would no longer sign an exclusive partnership agreement with Twitch, criticizing its online culture and expressing frustration with its “chaotic behavior.”

(Video source: https://www.youtube.com/watch?v=LCCdOoM5T-w, 2024)

Causes of Cyber Violence and Gendered Attacks

Online violence, and gendered attacks in particular, has many causes. Factors such as algorithmic recommendation mechanisms, herd mentality, and platform anonymity play an important role.

Algorithmic Amplification of Harassment

Algorithmic selection shapes individual consciousness and, in doing so, influences societal culture, norms, and values (Just & Latzer, 2017). Twitch, one of the most popular live-streaming platforms with over 140 million monthly users (Rock & Art, 2023), relies on a complex algorithmic system to promote interaction between viewers and streamers.

The algorithms weigh immediate viewer engagement (e.g., likes, shares, and chat reactions) to determine a live stream’s relevance. As a result, streams with more viewers and more active real-time chat are more likely to rank well.

Studies have shown that people are more drawn to emotional content and that negative emotions tend to spread faster. For example, on Twitter, each additional moral-emotional word in a tweet increased its retweet rate by about 20% (Brady et al., 2017).

Similarly, if many sexist comments about Pokimane appear in her stream’s chat or in related discussions, Twitch’s algorithm may register this as “high user interaction” and recommend similar content to a broader audience. Even if the discussion is negative, the algorithm still treats it as popular. As more users engage, malicious comments escalate, resulting in mass online harassment.
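The amplification loop described above can be illustrated with a toy ranking function. The sketch below is a hypothetical Python example, not Twitch’s actual algorithm (which is not public): it assumes a score that simply adds viewer count and weighted chat volume, with invented weights and stream names. Because the score counts activity without looking at what the messages say, a stream flooded with harassment outranks a calmer stream with the same audience.

```python
from dataclasses import dataclass


@dataclass
class Stream:
    name: str
    viewers: int
    chat_messages: int     # all chat messages, friendly or hostile
    hostile_messages: int  # subset of chat_messages that are harassment


def engagement_score(s: Stream) -> float:
    # Hypothetical engagement-only score: it rewards activity volume and never
    # reads hostile_messages, so harassment counts as "interaction".
    return s.viewers + 2.0 * s.chat_messages


def rank(streams: list[Stream]) -> list[Stream]:
    # Highest engagement first, as a recommendation feed might order them.
    return sorted(streams, key=engagement_score, reverse=True)


# Two streams with equal audiences; one chat is being flooded with abuse.
streams = [
    Stream("calm_stream", viewers=1000, chat_messages=300, hostile_messages=5),
    Stream("raided_stream", viewers=1000, chat_messages=900, hostile_messages=600),
]
for s in rank(streams):
    print(s.name, engagement_score(s))
```

A score that discounted hostile messages would first have to classify chat content, which brings back the same detection problems that platform moderation already struggles with.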

The Role of Herd Mentality

When cyber violence is not initiated by a single individual, it’s often driven by herd mentality. Herd mentality occurs when individuals are influenced by group behavior, abandoning their personal judgment to align with the majority.

For instance, when a comment or post receives many likes, it is shown to more users, which in turn encourages others to “like” it too. This is how herd behavior operates on digital platforms (Massanari, 2017).
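The like-driven feedback loop can likewise be sketched as a simple “rich-get-richer” simulation. This is a hypothetical Python model (a Pólya-urn-style process), not how Twitch or any other platform actually ranks comments: it assumes each new viewer likes exactly one comment, chosen with probability proportional to that comment’s current likes plus one. The function name, starting counts, and seed are invented for illustration.

```python
import random


def simulate_likes(initial_likes: list[int], n_viewers: int, seed: int = 0) -> list[int]:
    """Toy rich-get-richer model: each arriving viewer likes one comment,
    picked with probability proportional to (current likes + 1).
    Hypothetical illustration only, not any platform's real ranking."""
    rng = random.Random(seed)    # seeded so runs are reproducible
    likes = list(initial_likes)  # copy so the caller's list is not modified
    for _ in range(n_viewers):
        # +1 keeps comments with zero likes selectable.
        weights = [count + 1 for count in likes]
        chosen = rng.choices(range(len(likes)), weights=weights, k=1)[0]
        likes[chosen] += 1
    return likes


# Comment 0 starts with a small lead (10 likes vs. 1 and 1).
print(simulate_likes([10, 1, 1], n_viewers=200, seed=42))
```

Across different seeds, the comment that starts ahead tends to capture most of the new likes, even though every viewer follows the same rule independently.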

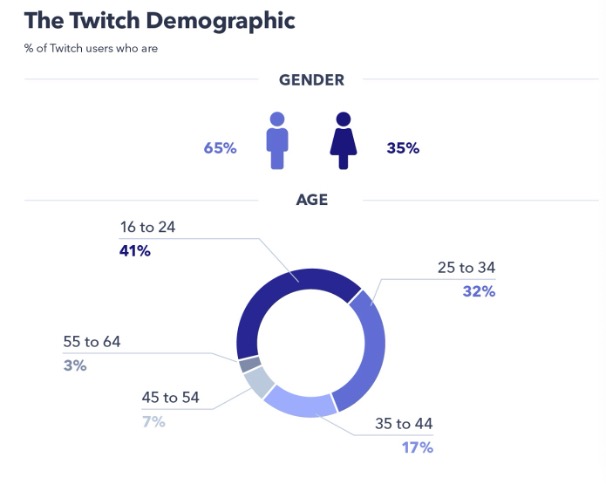

The surge of sexism and hate speech during Pokimane’s streams reflects this effect. According to one survey, 65% of Twitch users are male (Kavanagh, 2025), so most viewers share the same gender. When some male viewers begin launching gendered attacks, others may feel encouraged to follow, assuming these statements are acceptable or widely supported.

This creates a vicious cycle: as negative and offensive comments increase, more viewers join in, intensifying the harassment.

The theory of deindividuation explains that individuals in groups sometimes lose their sense of responsibility for their own actions, see their self-control weaken or disappear, and thus engage in antisocial behaviors they would not normally dare to engage in (Zhou, Farzan, Cech, & Farnham, 2021). Anonymity, in turn, fosters this kind of depersonalization.

There are two types of anonymity: technical and social. Technical anonymity refers to hiding identifying information such as one’s IP address and real name. Social anonymity refers to users perceiving few cues about one another’s identity. On Twitch, users identify each other by usernames and IDs (Zhou et al., 2021, p. 140).

Viewers on Twitch are highly anonymous, both socially and technically, and it is difficult for a streamer to recognize the person behind multiple usernames or accounts. Streamers, by contrast, are fully exposed to their viewers. As a result, viewers can make sexist comments and attack female streamers without fear of real-life retaliation or punishment.

The consequences can be even more extreme. In Pokimane’s case, even after she banned a user from her chat for posting inappropriate language, several new accounts entered the channel and continued the same bullying behaviour, because accounts are not tied to a person’s real identity. This is what happens when anonymity is one-sided: viewers are highly anonymous while the streamer is fully visible.

Regulatory Dilemmas: Why Is Platform Governance Failing?

Twitch provides a user-reporting tool to address harassment and hate speech on the platform. However, its overall operation lacks effectiveness and transparency. The community enforces standards of appropriate behavior by developing norms and combining them with moderation techniques such as automated filtering (Uttarapong, Cai, & Wohn, 2021).

Twitch uses artificial intelligence to identify inappropriate comments in massive amounts of chat data and to blacklist the users who post them. However, AI recognition is limited: it still misses certain content, such as deliberate alternate spellings or remarks whose harm depends on context. At the same time, the filters are often too sensitive, so streamers cannot use the strictest settings without heavily over-censoring their chat. As a result, streamers must settle for looser settings that still let through a constant stream of harassing and negative comments.
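The detection trade-off described above can be shown with two deliberately simple keyword filters. This Python sketch is hypothetical: the one-word blocklist, the character-substitution table, and both function names are invented for illustration, and real systems such as Twitch’s AutoMod are far more sophisticated. The exact-match filter misses an alternate spelling; the stricter filter catches it but also blocks an innocent message; and neither understands context, so a benign use of the word is blocked while keyword-free harassment gets through.

```python
import re

# Hypothetical one-word blocklist, for illustration only.
BLOCKLIST = {"kitchen"}

# Hypothetical table undoing common character swaps ("k1tchen" -> "kitchen").
LEET = str.maketrans({"1": "i", "3": "e", "0": "o", "4": "a", "@": "a", "$": "s"})


def naive_filter(message: str) -> bool:
    """Block only when a whole word exactly matches the blocklist."""
    words = re.findall(r"[a-z]+", message.lower())
    return any(word in BLOCKLIST for word in words)


def strict_filter(message: str) -> bool:
    """Undo character swaps, drop everything but letters, then block on any
    substring match: catches more evasions but also over-blocks."""
    normalized = re.sub(r"[^a-z]", "", message.lower().translate(LEET))
    return any(term in normalized for term in BLOCKLIST)


messages = [
    "go back to the kitchen",               # harassment with the keyword
    "go back to the k1tchen",               # same harassment, alternate spelling
    "Nice kit, Chen!",                      # innocent message
    "brb, grabbing food from the kitchen",  # benign use of the keyword
    "women can't aim, uninstall",           # harassment with no keyword
]
for m in messages:
    print(f"{m!r:40} naive={naive_filter(m)} strict={strict_filter(m)}")
```

Tightening the normalization closes one loophole while opening another kind of false positive, which mirrors the choice streamers face between under-filtering and over-censoring their chat.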

Moreover, Twitch’s reporting process is anonymous: most users report violations without knowing the specifics of the person they are reporting (Zhou et al., 2021). The platform also generally does not tell the reporting user whether the reported account will be penalized. In many cases, users do not know whether their report succeeded, and there is no transparent feedback on what penalties, if any, the platform imposed.

Several female streamers, such as Pokimane, Kruzadar, and LuLuLuvely, have also pointed out these weaknesses in Twitch’s system and expressed concern about its inefficiency and the unknown outcomes of reports (Rock & Art, 2023).

In the United States, the regulation of Twitch is largely shaped by Section 230 of the Communications Decency Act (CDA 230). This law shields online content intermediaries such as Twitch from civil liability for user-generated content (Bolson, 2016), allowing them to host and distribute content without being held legally responsible for what their users post.

Under this broad immunity, sites face little liability when their users post or spread malicious content that harasses and bullies others. Some critics argue that the law is too lenient and fails to hold platforms effectively responsible for content moderation. Prompted by these concerns, various parties continue to push for revisions to the law.

With such a gap in the law, platforms like Twitch operate with considerable freedom when dealing with issues such as online violence and sexism. Because Section 230 protects the platform from legal consequences, it faces little external pressure and few legal constraints on its governance (Bolson, 2016).

In contrast to the US approach, the European Union’s Digital Services Act (DSA) emphasizes platforms’ active regulatory responsibility and transparency. The DSA aims to make the internet safer by addressing illegal content and overseeing platforms’ content moderation practices. It follows the principle of “remove first, consider later,” which requires platforms to remove or disable access to illegal content, such as hate speech, quickly once they become aware of it (Turillazzi, Taddeo, Floridi, & Casolari, 2023). This strict regulatory mechanism exposes platforms operating in Europe to greater legal liability.

Conclusion

The gender-based online violence experienced by Twitch’s female streamers is not an isolated case but a systemic problem shaped by the platform’s recommendation algorithms, its opaque moderation system, and broader societal biases. Pokimane’s experience is just the tip of the iceberg: even with top-tier viewership and a strong public voice, she has found it difficult to escape long-term cyber violence. Female streamers who are not yet famous and lack resources are even more vulnerable.

Platforms, therefore, need to step up their game, not just by optimizing their algorithms or reporting systems, but by making a cultural shift to actively challenge misogyny and protect creators. But the responsibility is not Twitch’s alone. As viewers and content creators, we also need to constantly reflect on our own conduct on social media. Every time we remain silent in the face of sexism or laugh at hateful comments, we become part of the problem.

Twitch is not an inherently hateful platform, but if we continue to tolerate this “everyday violence,” it will never be a truly safe, open, and inclusive public space for all.

Change doesn’t just come from the platform. It comes from us.

Reference list

Bolson, A. P. (2016). Flawed but fixable: Section 230 of the Communications Decency Act at 20. Rutgers Computer & Technology Law Journal, 1-.

Brady, W. J., Wills, J. A., Jost, J. T., Tucker, J. A., & Van Bavel, J. J. (2017). Emotion shapes the diffusion of moralized content in social networks. Proceedings of the National Academy of Sciences, 114(28), 7313–7318. https://doi.org/10.1073/pnas.1618923114

Holl, E., Wagener, A., & Melzer, A. (2024). Do gender stereotypes affect gaming performance? Testing the effect of stereotype threat on female video game players. Game Studies, 24(2). https://gamestudies.org/2402/articles/holl_wagener_melzer

Just, N., & Latzer, M. (2017). Governance by algorithms: reality construction by algorithmic selection on the Internet. Media, Culture & Society, 39(2), 238–258. https://doi.org/10.1177/0163443716643157

Kavanagh, D. (2025, January 22). Watch and learn: The meteoric rise of Twitch. GWI. https://www.gwi.com/blog/the-rise-of-twitch

Massanari, A. (2017). Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346. https://doi.org/10.1177/1461444815608807

Rock & Art. (2023, May 26). Why are women streamers underrepresented on Twitch? Rock & Art. https://www.rockandart.org/women-streamers/

Sinpeng, A., Martin, F. R., Gelber, K., & Shields, K. (2021). Facebook: Regulating Hate Speech in the Asia Pacific. Department of Media and Communications, The University of Sydney.

Turillazzi, A., Taddeo, M., Floridi, L., & Casolari, F. (2023). The digital services act: an analysis of its ethical, legal, and social implications. Law, Innovation and Technology, 15(1), 83–106. https://doi.org/10.1080/17579961.2023.2184136

Uttarapong, J., Cai, J., & Wohn, D. Y. (2021). Harassment experiences of women and LGBTQ live streamers and how they handled negativity. ACM International Conference on Interactive Media Experiences, 7–19. https://doi.org/10.1145/3452918.3458794

Zhou, Y., Farzan, R., Cech, F., & Farnham, S. (2021). Designing to Stop Live Streaming Cyberbullying: A case study of Twitch Live Streaming Platform. C&T ’21: Proceedings of the 10th International Conference on Communities & Technologies – Wicked Problems in the Age of Tech, 138–150. https://doi.org/10.1145/3461564.3461574
