How social media’s engagement-driven algorithms are fueling online hate against women

Batra, A. (2023, August 9). What challenger brands can (and should) do about online hate speech. Rolling Stone. https://www.rollingstone.com/culture-council/articles/what-challenger-brands-can-about-online-hate-speech-1234810976

- “You’re ugly. You’re worthless. Why don’t you just disappear?”

Imagine waking up every morning and hesitating before you check your social media notifications, worried that hateful words might be waiting for you. The reason is not that you have said something controversial. It is that you are a woman who shares your daily experiences on Instagram or TikTok. For millions of women online, this is not fiction; it is the reality of their lives.

Social media platforms often present themselves as neutral spaces that promote free speech and equal participation. Beneath their polished interfaces, however, lies an uncomfortable reality: algorithms designed primarily to maximize user engagement amplify misogynistic content, sometimes inadvertently and sometimes intentionally. A recent Guardian report (2024) revealed that social media algorithms routinely push content that demeans and harasses women, effectively normalizing such speech among impressionable young audiences.

We must stop seeing this phenomenon as a harmless oversight.

This blog argues that Instagram’s recommendation algorithms actively amplify misogynistic hate speech, creating environments where hostility is rewarded and victims are silenced. Drawing on Adrienne Massanari’s (2017) research on toxic technocultures and Safiya Noble’s (2018) critical exploration of algorithmic bias, this piece illustrates how gender-based hatred is systemically reinforced online and why change is urgently needed.

What Exactly is Online Misogyny and Hate Speech Against Women?

The Guardian. (2025, March 19). Beyond Andrew Tate: The imitators who help promote misogyny online. https://www.theguardian.com/media/2025/mar/19/beyond-andrew-tate-the-imitators-who-help-promote-misogyny-online

Misogyny is hostility or prejudice directed specifically at women, rooted in gender-based discrimination (The Guardian, 2025). Jordan Peterson (left) and Adin Ross (right), for example, believe feminism is hurting men. When misogyny spreads online, it manifests as hate speech, harassment, and targeted digital abuse, often attacking women for their appearance, sexual orientation, or personal choices. This online hate takes many forms, such as slut-shaming, in which women are stigmatized and attacked for their sexuality, and body shaming, in which they are demeaned for not conforming to narrow beauty standards.

Far from being isolated incidents, these forms of hate speech have become disturbingly normalized on social media. Women who share feminist views, speak openly about politics, or are simply visible online are often the targets of organized harassment campaigns. These attacks are not spontaneous; rather, they are deeply embedded in the cultural fabric of online platforms and are amplified and perpetuated by the algorithms that govern content visibility.

Massanari (2017) describes digital platforms as fostering a “toxic technoculture”: an online space where misogynistic harassment not only exists but is algorithmically encouraged and culturally reinforced. Her study of Reddit shows how incidents like Gamergate and The Fappening expose the ways platform governance structures and algorithmic mechanisms can inadvertently promote gender-based hostility.

Sadly, this is not limited to Reddit or niche forums; the same pattern appears on mainstream platforms like Instagram and TikTok. Women often report hostile comments, threatening messages, and degrading posts that recommendation systems amplify, normalizing and perpetuating misogyny online.

How Algorithms Amplify Hate Speech Against Women

Gillespie, T. (2018). Custodians of the Internet: Platforms, content moderation, and the hidden decisions that shape social media. Yale University Press. https://ebookcentral.proquest.com/lib/usyd/reader.action?docID=4834260&ppg=1

When you scroll through Instagram or TikTok, have you ever wondered why certain controversial or provocative videos keep appearing in your feed? Social media platforms rely heavily on complex algorithms designed primarily to increase user engagement: more likes, shares, comments, and views. On the surface this may seem harmless, even beneficial, but the reality can be deeply disturbing. A growing body of evidence suggests that these algorithms inadvertently amplify misogynistic content, effectively rewarding creators who produce harmful and inflammatory material.

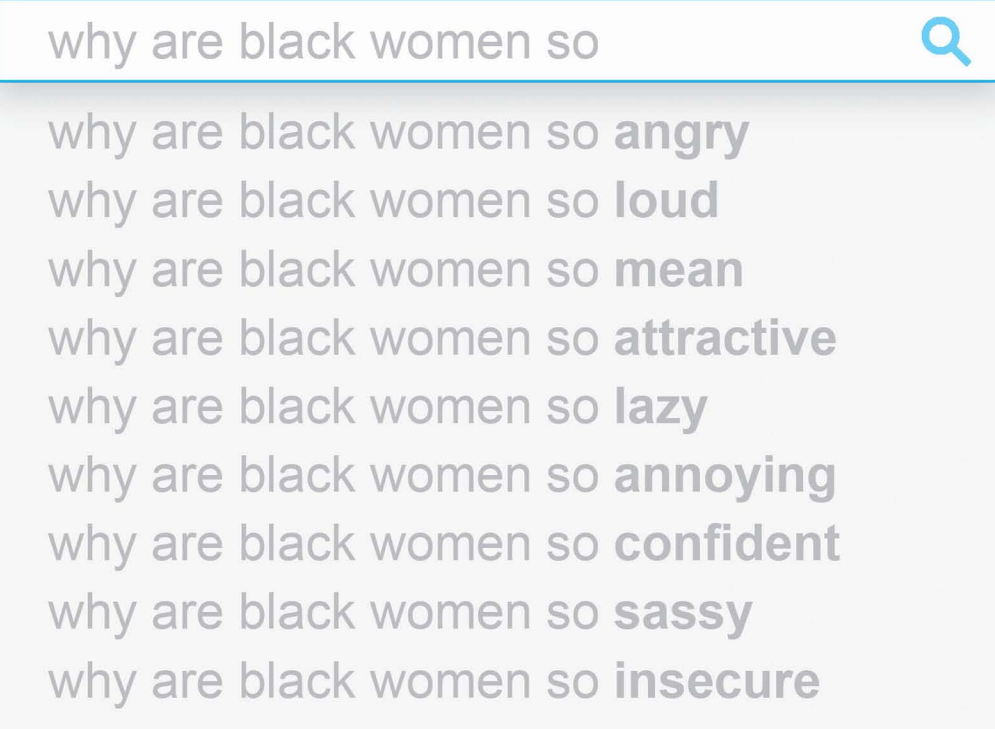

Safiya Noble (2018), author of Algorithms of Oppression, offers a crucial insight: algorithms are not neutral or purely technical entities. Instead, they are shaped by, and reflect, societal biases and structural inequalities. Focusing on commercial search engines, Noble shows how ranking systems driven by commercial interests rather than ethical considerations reinforce harmful stereotypes and biases. On platforms like Instagram and TikTok, the equivalent driver is engagement: misogynistic content, precisely because it is emotionally provocative, often attracts more interactions, rapidly going viral and reaching wider audiences.

This phenomenon is illustrated by a study reported by The Guardian (2024), which found that social media algorithms are actively amplifying misogynistic content to young audiences. According to the report, videos that demeaned, sexualized, or mocked women received disproportionately high engagement compared with neutral or positive content, prompting algorithms to keep surfacing them. The result is a vicious cycle: the more hateful the content, the more engagement it attracts; and the more engagement it attracts, the more prominently algorithms feature it.
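To make this vicious cycle concrete, here is a minimal toy simulation in Python. It is a sketch under loudly stated assumptions, not a model of Instagram’s or TikTok’s actual ranking systems: each round, the feed hands out impressions in proportion to the engagement a post has already accumulated, and the engagement rates (8% for a hypothetical provocative post, 4% for a hypothetical neutral one) are invented for illustration, not figures from The Guardian (2024) report. The names `Post` and `simulate` are likewise illustrative.

```python
from dataclasses import dataclass


@dataclass
class Post:
    label: str
    engagement_rate: float  # ASSUMED chance that one impression yields a like, comment or share
    engagement: float = 1.0  # accumulated engagement; every post starts with the same seed value


def simulate(posts: list[Post], rounds: int, impressions_per_round: int = 1000) -> list[float]:
    """Return the first post's share of impressions in each round.

    Simplifying assumption: impressions are allocated in proportion to the
    engagement each post has accumulated so far ("rank by engagement").
    """
    shares = []
    for _ in range(rounds):
        total = sum(p.engagement for p in posts)
        # Visibility this round depends only on past engagement.
        allocations = [impressions_per_round * p.engagement / total for p in posts]
        shares.append(allocations[0] / impressions_per_round)
        # New engagement depends on visibility, which closes the feedback loop.
        for post, shown in zip(posts, allocations):
            post.engagement += shown * post.engagement_rate
    return shares


if __name__ == "__main__":
    # Hypothetical posts: the rates are illustrative, not measured data.
    feed = [Post("provocative", engagement_rate=0.08), Post("neutral", engagement_rate=0.04)]
    for round_no, share in enumerate(simulate(feed, rounds=5), start=1):
        print(f"round {round_no}: provocative post receives {share:.0%} of impressions")
```

Both posts start out equal, but because the provocative post turns each impression into engagement at a higher rate, its share of impressions grows every round. Engagement buys visibility, and visibility buys more engagement.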

Misogynistic content on social media also frequently draws inspiration from influential figures who deliberately spread hateful ideologies. According to another report by The Guardian (2025), influencers imitating figures like Andrew Tate help spread narratives that normalize misogyny among younger audiences. These imitators exploit algorithms’ preference for emotionally charged, controversial posts to build audiences quickly and propagate toxic ideas, reinforcing harmful stereotypes on a massive scale.

Platforms also struggle to moderate this content effectively, particularly when misogynistic messages are cleverly disguised. A further Guardian report (2025) described a study showing how incel communities, groups of men who express hostility towards women, have adopted seemingly harmless self-improvement language to avoid bans on TikTok. Automated systems often miss these coded tactics, allowing disguised misogyny to thrive unchecked and exposing fundamental limitations of automated content moderation.

In essence, algorithmic systems do not merely reflect existing misogyny; they actively exacerbate it. By consistently promoting emotionally charged content to maximize user attention, these algorithms reinforce and spread gender-based hatred. Massanari’s (2017) concept of “toxic technocultures” resonates profoundly here, highlighting how social media governance and recommendation mechanisms collectively sustain and amplify harmful misogynistic practices, ultimately shaping online culture in ways that systematically marginalize and silence women. Until platforms fundamentally rethink the way their algorithms handle harmful yet engaging content, the spread of misogyny online will likely continue to escalate unchecked.

The Real-World Impact of Online Misogyny

“How Online Abuse of Women Has Spiraled Out of Control,” a short video produced by The Guardian (2021), explores the devastating emotional and psychological toll of gender-based abuse in online spaces.

https://www.youtube.com/watch?v=GSf6nij-SdA

Online misogyny is far more than hateful comments on a screen. It has significant, tangible consequences for women’s real lives, deeply affecting their mental health, safety, and participation online.

Free Press Unlimited. (n.d.). The impact of online violence on women journalists and press freedom. Retrieved April 13, 2025. https://www.freepressunlimited.org/en/current/impact-online-violence-women-journalists-and-press-freedom

Research consistently highlights the serious psychological impact online abuse inflicts on women. According to Amnesty International (2018), women exposed to persistent misogynistic harassment online frequently experience anxiety, depression, and fear for their personal safety. Similarly, a Pew Research Center (2022) study found that approximately 40% of women who experienced severe online harassment chose to limit their online presence or completely withdraw from digital interactions due to anxiety or fear. This phenomenon, known as the “chilling effect,” profoundly undermines women’s freedom to engage and express themselves openly online.

Massanari’s (2017) study of toxic technocultures provides further context, showing how misogynistic harassment systematically marginalizes women’s voices and reinforces patriarchal power structures within digital spaces. Such harassment is often strategic, persistent, and deliberately used to silence women, eroding their confidence and restricting their participation. As Massanari argues, these hostile online cultures create environments where women’s voices are undervalued and routinely suppressed, perpetuating gender inequality.

Furthermore, Safiya Noble’s (2018) examination of algorithmic bias, although centered on search engines, suggests that ranking and recommendation systems play an active role in facilitating and amplifying this abuse. Algorithms that prioritize controversial, emotionally charged content inadvertently escalate misogynistic narratives, normalizing sexism among users. Noble’s insights underscore how algorithmic bias feeds broader societal discrimination, since harmful content online can translate into real-world prejudice.

Young women in particular bear the brunt of this amplified abuse. Platforms like Instagram and TikTok, which are especially popular with younger users, expose them to misogynistic content during formative adolescent years. A recent Guardian report (2024) explicitly warned that repeated exposure to misogynistic content, driven by engagement-based algorithms, shapes adolescents’ perceptions and may normalize harmful attitudes and behaviors toward women. The Guardian (2025) also highlighted how influencers imitating misogynistic figures further accelerate the spread of such content, exacerbating discrimination offline.

Ultimately, the consequences of online misogyny extend beyond the digital world, reinforcing systemic sexism and suppressing women’s voices. It’s imperative that platforms recognize these impacts, assume greater responsibility, and urgently reform their moderation and algorithmic practices to protect vulnerable groups.

Platform Responsibility: What Instagram Should Be Doing

Debuglies News. (2019, December 18). The spread of hate speech via social media could be tackled using the same quarantine approach deployed to combat malicious software. https://debuglies.com/2019/12/18/the-spread-of-hate-speech-via-social-media-could-be-tackled-using-the-same-quarantine-approach-deployed-to-combat-malicious-software

- Rethinking Algorithmic Design

- Increasing Transparency

- Improving Human-AI Moderation

- Empowering Community Engagement

- Providing Support for Victims

Indian Express. (2022, January 21). Instagram to show potential hate speech lower down in your feed, Stories. https://indianexpress.com/article/technology/tech-news-technology/instagram-to-show-potential-hate-speech-lower-down-in-your-feed-stories-7734969

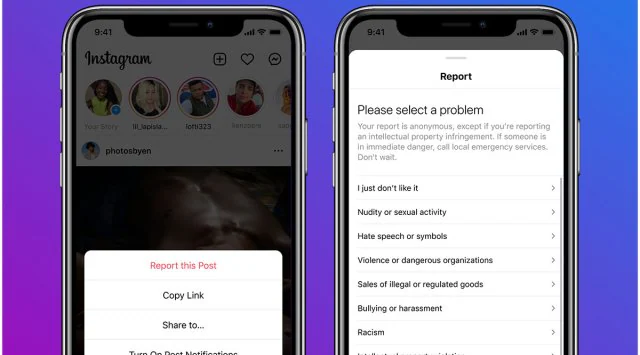

- Instagram pushes posts that may contain bullying, harassment, or calls for violence further down users’ feeds and Stories to reduce engagement with them.

Given the significant role Instagram’s algorithms play in amplifying misogynistic hate speech, the platform bears undeniable responsibility for addressing the harm it perpetuates. Yet its current moderation practices remain insufficiently transparent, reactive rather than proactive, and heavily reliant on flawed automated systems.

Platforms must first recognize algorithmic bias as a fundamental issue, echoing Safiya Noble’s (2018) argument that technology is neither neutral nor inherently fair. Instagram needs to critically evaluate and redesign its recommendation algorithms, weighing ethical considerations alongside engagement metrics. For instance, identifying content that systematically targets or harasses vulnerable groups, such as women, and limiting its visibility could significantly reduce harm.
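What “limiting visibility” might look like inside a ranking step can be sketched in a few lines of Python. This is a hypothetical illustration, not Instagram’s actual system: it assumes each candidate post already carries a predicted engagement score and a harassment probability from some classifier (the class `Candidate`, the function `rank_feed` and both field names are invented here), and the 0.7 threshold and 0.1 demotion factor are arbitrary example values. Rather than deleting borderline posts, it demotes them, similar in spirit to the Indian Express (2022) report that Instagram shows potential hate speech lower in feeds and Stories.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    post_id: str
    predicted_engagement: float  # hypothetical model output: expected likes/comments/shares
    harassment_score: float      # hypothetical classifier probability (0 to 1) that the post is harassment


def rank_feed(candidates: list[Candidate], threshold: float = 0.7, demotion: float = 0.1) -> list[Candidate]:
    """Order posts by predicted engagement, demoting likely harassment.

    Posts scoring at or above `threshold` keep only a `demotion` fraction of
    their engagement score, so they sink in the feed instead of being removed.
    """

    def score(c: Candidate) -> float:
        penalty = demotion if c.harassment_score >= threshold else 1.0
        return c.predicted_engagement * penalty

    return sorted(candidates, key=score, reverse=True)


if __name__ == "__main__":
    feed = [
        Candidate("a", predicted_engagement=0.9, harassment_score=0.95),  # engaging but likely abusive
        Candidate("b", predicted_engagement=0.5, harassment_score=0.05),
        Candidate("c", predicted_engagement=0.3, harassment_score=0.10),
    ]
    print([c.post_id for c in rank_feed(feed)])                # ['b', 'c', 'a']
    print([c.post_id for c in rank_feed(feed, demotion=1.0)])  # ['a', 'b', 'c'], pure engagement ranking
```

With the demotion switched off (`demotion=1.0`), the abusive but engaging post ranks first; with it on, the same post drops to the bottom. Demoting rather than deleting leaves room for human review of borderline cases, but the threshold itself would need scrutiny, since a poorly chosen value could either miss disguised misogyny or suppress legitimate speech.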

Transparency about how moderation decisions are made is equally essential. According to research by Gillespie (2018), platforms often obscure their moderation processes to avoid scrutiny, thus fostering environments in which abuse can flourish unchecked. By clearly communicating how decisions are made, Instagram could regain user trust and enhance accountability. This increased transparency should also extend to algorithmic processes, enabling external audits and research into how misogynistic content gains visibility.

As The Guardian (2025) highlighted, platforms struggle to detect subtly disguised misogyny camouflaged as benign content, such as the self-improvement narratives used by incel communities. Instagram should therefore adopt more sophisticated moderation, combining skilled human oversight with improved artificial intelligence systems that can understand nuanced context. Investing in culturally diverse moderation teams can also help ensure that moderation decisions account for different societal perspectives and sensitivities.

User involvement can serve as another effective corrective measure. Massanari (2017) emphasizes that toxic technocultures thrive due to insufficient community governance structures and weak platform accountability. Strengthening user-driven reporting mechanisms—where users see tangible results and explanations following their reports—would increase confidence in platform safety measures. Collaborating with researchers, advocacy groups, and affected communities would further inform these processes, ensuring they remain responsive and adaptive to emerging challenges.

Lastly, Instagram must provide substantial support for victims of online harassment. Amnesty International’s (2018) investigation of Twitter found that the platform offered women minimal help when they reported abuse, exacerbating psychological harm and alienating vulnerable users. Introducing comprehensive mental health resources and practical guides to protecting oneself from harassment could dramatically improve the user experience and help users remain active and safe online.

Addressing misogyny isn’t merely an ethical imperative; it’s integral to creating healthier online environments. Instagram, alongside other social media platforms, must understand that tolerating hate speech—algorithmically or otherwise—undermines both user safety and long-term platform sustainability.

Conclusion: Taking Responsibility for Our Digital Spaces

Instagram’s failure to adequately tackle misogyny highlights a broader industry challenge: the urgent need for platforms to reassess their responsibilities toward users. Algorithms that prioritize engagement over ethical standards don’t merely perpetuate harmful stereotypes—they amplify and normalize them, exacerbating real-world harm.

The fight against online misogyny requires more than surface-level policy tweaks. It demands a fundamental shift in how platforms design algorithms, approach moderation, and value user safety. As digital citizens, we also have a role in demanding greater accountability and ethical responsibility from tech giants.

As you scroll through your feed tonight, consider this: What kind of digital world are we contributing to? Are we passively accepting harmful algorithms, or are we actively advocating for change? It’s crucial to recognize that on the receiving end of every hateful comment is a real person who is affected, silenced, and hurt.

Social media has tremendous power to shape attitudes, behaviors, and societies. If we collectively hold platforms accountable, advocate for transparency, and refuse to tolerate hatred—then perhaps we can transform social media from a toxic echo chamber into a genuinely safe, inclusive space for everyone, especially women and marginalized communities.

Anti-Defamation League. (2023, March 6). #StandUpToJewishHate: Tony [Video]. YouTube. https://www.youtube.com/watch?v=2R1ZVygVHwk

The choice—and the responsibility—is ours.

References

Amnesty International. (2018, March 21). Toxic Twitter: A toxic place for women. Amnesty International. https://www.amnesty.org/en/latest/news/2018/03/online-violence-against-women-chapter-1

Gillespie, T. (2018). Custodians of the Internet: Platforms, content moderation, and the hidden decisions that shape social media. Yale University Press. https://ebookcentral.proquest.com/lib/usyd/reader.action?docID=4834260&ppg=1

Massanari, A. (2017). Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346. https://doi.org/10.1177/1461444815608807

Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. NYU Press.

Pew Research Center. (2022). The state of online harassment. https://www.pewresearch.org/internet/2022/06/01/the-state-of-online-harassment/

The Guardian. (2024, February 6). Social media algorithms ‘amplifying misogynistic content’. https://www.theguardian.com/media/2024/feb/06/social-media-algorithms-amplifying-misogynistic-content

The Guardian. (2025, March 19). Beyond Andrew Tate: The imitators who help promote misogyny online. https://www.theguardian.com/media/2025/mar/19/beyond-andrew-tate-the-imitators-who-help-promote-misogyny-online

The Guardian. (2025, March 29). Incel accounts using ‘self-improvement’ language to avoid TikTok bans – study. https://www.theguardian.com/technology/2025/mar/29/incel-accounts-using-self-improvement-language-to-avoid-tiktok-bans-study
