1. Introduction: The Illusion of Virtual Utopia

The metaverse is often advertised as a free space that goes “beyond physical limits.” In this virtual world, users can supposedly shed the constraints of the real world and redefine themselves (Korinek, 2023). A closer look at metaverse platforms, however, reveals a disturbing reality: rather than eliminating the inequalities of real society, these virtual spaces systematically replicate existing gender and racial structures through their algorithms.

From a technical design point of view, the avatar systems of metaverse platforms are often built on flawed data sets and algorithms. These technical architectures claim to be “neutral” on the surface, yet they embed their designers’ cultural biases and social perceptions. This article draws on examples from metaverse platforms such as Meta Horizon Worlds and Decentraland, combined with specific user cases and academic research, to reveal the hidden discrimination in the metaverse. By collecting accounts of users’ experiences in the metaverse, it attempts to answer three core questions: How do algorithms encode social bias? What effect do these biases have on users? What resistance strategies can we employ?

2. How Do Algorithms Encode Social Bias?

2.1 “Standardized Template” for Avatars

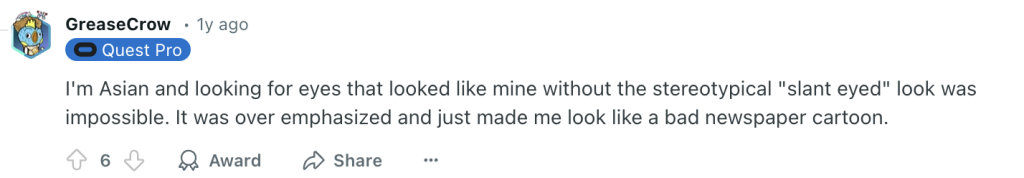

Meta Horizon Worlds’ avatar creation system reproduces stereotypes of Asian users. In its default image library, the “East Asian face” option is reduced to a few highly simplified feature combinations: an almost uniform, slender eye shape, pale tones that do not reflect real skin tones, and highly standardized facial contours. This design makes it difficult for Asian users, especially male users, to find options that match how they actually look. Asian users have reported that it is impossible to find a pair of eyes that resembles their own without falling into stereotype (Reddit, 2024). This problem is not unique to Meta; it also exists on other major social VR platforms such as Zepeto and VRChat. More worryingly, these platforms often define non-Western cultural characteristics as “special content” that requires an additional fee. For example, as shown in Figure 3 (Zepeto, n.d.), Zepeto users who want relatively realistic traditional Korean clothing, a traditional hair bun, or other culturally specific decorative elements often need to purchase additional mods or DLC. This business model essentially commodifies non-Western cultural identities, turning non-Western appearances into “exotic” extras that must be paid for.

Figure 1 A screenshot from Meta Avatar

Note. Adapted from Facebook. (n.d.).

Figure 2 A screenshot from Reddit

Note. From The Meta Avatars Are Terrible. (2024). Reddit.com. https://www.reddit.com/r/virtualreality/comments/1bnwujz/the_meta_avatars_are_terrible/?utm_source

Figure 3 A screenshot from Zepeto

Note. From Zepeto. (n.d.).

Lisa Nakamura (2007) argued that online identity customization systems generally create an “illusion of freedom,” and the same holds in the metaverse. These systems ostensibly offer a diverse selection of images, but their algorithms and design architecture marginalize non-Western features, turning them into “special options” that take extra effort to obtain. This mechanism not only perpetuates real-world racial stereotypes but also ignores the enormous diversity within non-Western populations (e.g., the differences among ethnic groups in Southeast Asia, Africa, and Oceania). This design logic reflects the cultural blindness common among technology companies and a simplified, Western-centric imagination of “other cultures.”

2.2 Marginalization of Non-Western Cultural Symbols

Beyond the stereotyping of facial features, the marginalization of non-Western cultures in the metaverse shows up in many other areas, such as virtual goods and language interaction. For example, the wearables on platforms such as Decentraland are dominated by Western-style clothing. As shown in Figure 4 (Decentraland – Marketplace, 2025), traditional clothing such as Chinese Hanfu, Japanese kimono, and East African Kanga and Kitenge must either be modelled by users themselves or is missing altogether. This design reflects the platform’s structural neglect of non-Western cultures: Western aesthetics are the default “standard,” while other cultures are treated as “complementary” or “niche.”

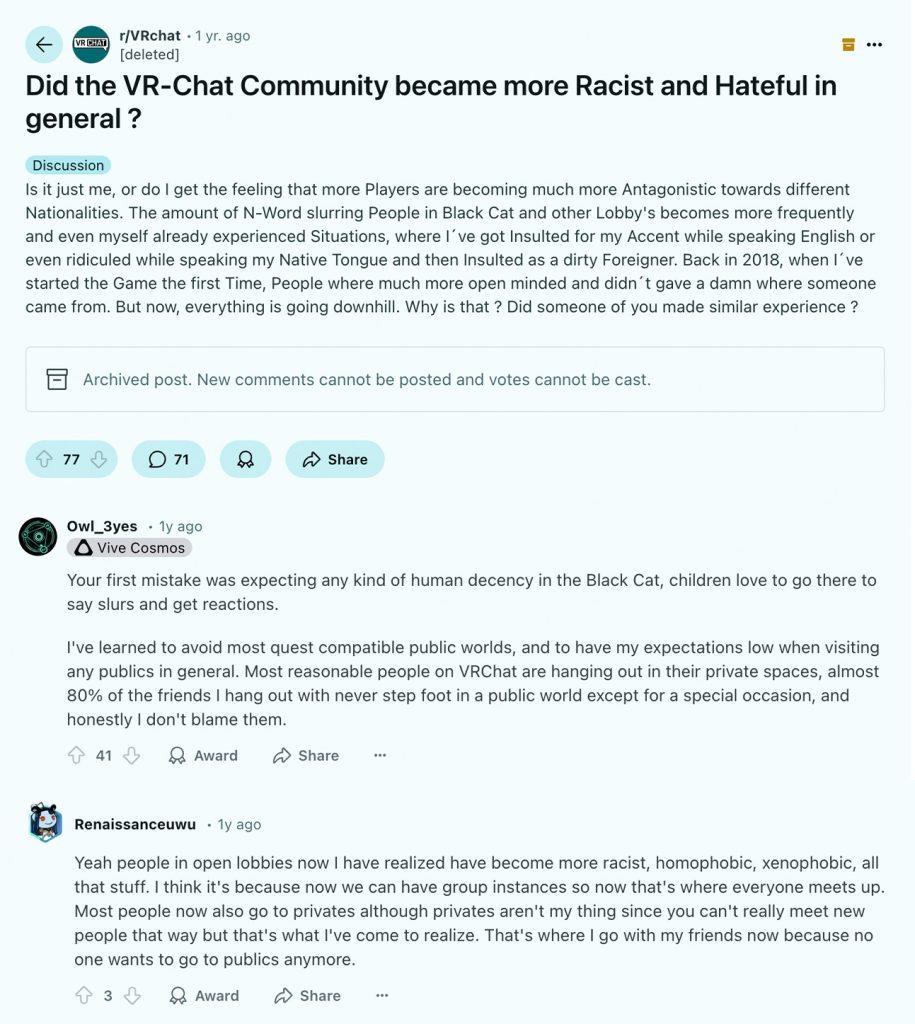

The problem of language hegemony is equally serious. Many non-native English speakers on Reddit have reported being ridiculed or ostracized on metaverse platforms. For example, one user mentioned that other players in VRChat teased him because of his non-standard English pronunciation. Ironically, the “solution” offered by some commenters was to advise these users to avoid public areas rather than to call on the platform to improve its inclusive design (Reddit, 2018). Lumsden and Harmer (2019) describe this phenomenon as “online othering,” in which users are pushed to the margins through algorithms, social rules, or default settings. In this account, platform algorithms set Western culture as the norm, while other cultures are grouped into “special” or “minor” categories. This classification degrades the user experience and reinforces existing social inequalities.

Figure 4 A screenshot from Decentraland

Note. From Decentraland – Marketplace. (2025). Decentraland.org. https://decentraland.org/marketplace/browse?assetType=item&section=wearables&vendor=decentraland&page=2&sortBy=recently_listed&search=+Chinese

Figure 5 A screenshot from Reddit

Note. From Did the VR-Chat Community became more Racist and Hateful in general ? (2018). Reddit.com. https://www.reddit.com/r/VRchat/comments/17ig6ts/did_the_vrchat_community_became_more_racist_and/

3. Political and Digital Exploitation of Identity Data

3.1 Commercialization of Algorithmic Identity

The commercial use of user identity data on metaverse platforms has become a systematic mechanism of exploitation. In 2022, the US Department of Justice sued Meta in a landmark case that revealed how deeply algorithmic bias can be embedded in advertising systems. According to the Department’s investigation, Meta allowed advertisers to target users based on characteristics such as race and skin colour and used user analytics to divide people into “packets” with different commercial values (Wodecki, 2022).

As Zuboff (2020) points out in The Age of Surveillance Capitalism, this behaviour is essentially a digital evolution of “behavioural surplus value extraction.” By monitoring users’ avatar choices, social interaction patterns, consumption behaviour, and other data around the clock, metaverse platforms can build discriminatory pricing models more sophisticated than those of the physical world and extract excess profits from them. More worryingly, this exploitation of data forms a closed loop. The platform first induces users to reveal cultural preferences through a limited selection of avatars, then uses algorithms to reinforce those preferences, and finally sells the processed identity data to advertisers. The process deprives users of autonomy over their own cultural representation and compresses plural identities into tradable digital assets.

3.2 A Metaverse Version of “Digital Colonialism”

Metaverse platforms developed by Asian technology companies appear, on the surface, to break the Western monopoly, but they continue the colonial logic in their deeper architecture. Take South Korea’s Zepeto as an example. Although its Avatar 2.0 system adds Asian elements such as single eyelids and Hanbok, a careful analysis of its architecture suggests that this “localized” content is only surface decoration: the underlying character animation and facial expression systems are still copied from mainstream Western engines.

The concept of “platform imperialism” proposed by Jin (2013) is borne out here. He pointed out, “In the 21st century, there is a distinct connection between platforms, globalization, and capitalist imperialism. The contemporary concept of imperialism has supported huge flows of people, news, and symbols, leading to high convergence among markets and technologies.” Although non-Western companies have gained the right to operate platforms, their dependence on Western systems at key technical levels, such as core algorithms, development tools, and interaction paradigms, has caused a profound cultural aphasia. The new generation of Asian developers learns to code with development tools built around Western values, which makes it difficult for non-Western cultural perspectives to drive innovation at the technical source. The result is a more insidious and stubborn form of digital colonization: Eastern companies provide the content, and Western structures define the rules.

4. Resistance and Reconstruction: The Possibility of Moving Towards a Multi-Dimensional Universe

4.1 User Empowerment Practices

Faced with discrimination in the metaverse, users and digital activists have developed various resistance strategies. These bottom-up movements for digital equality are becoming a force to be reckoned with, driving deep ethical reflection in the tech industry. The Algorithmic Justice League (AJL), founded in 2016 by computer scientist Dr. Joy Buolamwini, is one of the most iconic of these organizations. Its “Gender Shades” study, published in 2018, was a landmark. Through systematic testing, the research team found that commercial gender classification systems built on facial analysis, from companies including IBM, Microsoft, and Face++, showed a difference in error rates of up to 34.4 percentage points between dark-skinned women and light-skinned men (Buolamwini, 2017). This discovery not only revealed the deep-rooted racial and gender bias in AI technology but also prompted several tech companies to suspend or modify related products.
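The subgroup comparison behind a figure such as the 34.4% gap can be illustrated with a minimal Python sketch. It is not the Gender Shades methodology or data: the `GroupResult` class, the `error_rate_gap` function, and all counts below are hypothetical, invented only to show how a gap in percentage points between the worst-served and best-served groups might be computed.

```python
from dataclasses import dataclass


@dataclass
class GroupResult:
    group: str
    total: int   # number of test images in this subgroup
    errors: int  # number of images the classifier got wrong

    @property
    def error_rate(self) -> float:
        return self.errors / self.total


def error_rate_gap(results):
    """Return (worst group, best group, gap in percentage points)."""
    worst = max(results, key=lambda r: r.error_rate)
    best = min(results, key=lambda r: r.error_rate)
    gap_pp = (worst.error_rate - best.error_rate) * 100
    return worst.group, best.group, gap_pp


# Hypothetical counts, invented for illustration only.
results = [
    GroupResult("darker-skinned women", total=200, errors=60),
    GroupResult("darker-skinned men", total=200, errors=20),
    GroupResult("lighter-skinned women", total=200, errors=12),
    GroupResult("lighter-skinned men", total=200, errors=2),
]

worst, best, gap = error_rate_gap(results)
print(f"{worst} vs {best}: {gap:.1f} percentage points")
```

Reporting the gap between subgroups, rather than a single overall accuracy figure, is what makes this kind of disparity visible; an aggregate score over all four groups would hide it.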

Dr. Buolamwini’s work has been widely recognized, and she has been honoured as MIT Technology Person of the Year and World Economic Forum Young Scientist. More importantly, her research has brought the problem of algorithmic bias into the public eye. In the metaverse context, AJL’s research has pushed companies like Meta to re-evaluate the fairness of their avatar recognition systems.

Sociologist Benjamin (2019) provides an essential theoretical underpinning for these practices. She points out that the autonomous technological practices of marginalized groups are doubly revolutionary: on the one hand, they fight for a voice in digital space through confrontation; on the other, they redefine the ethical direction of technological development through alternative practices. In particular, Benjamin emphasizes the importance of “prefigurative design,” that is, demonstrating a more inclusive technological future through today’s practices. Metaverse social platforms should therefore not only provide more accurate image options but also rebuild their interaction paradigms so that non-Western cultural traditions are integrated into social design.

4.2 Policy and Ethical Challenges

However, user initiative alone will not be enough to bring about real change in the metaverse. Other forces in society must also take part:

First, a “Metaverse Charter of Human Rights” could be developed to clarify fundamental principles such as virtual body rights, algorithmic transparency, and fairness in cultural expression. For example, a UN body responsible for digital rights could lead the drafting of such a document and promote global participation.

Second, regional organizations should play a more significant role in the metaverse. For example, the Association of Southeast Asian Nations (ASEAN) could introduce a dedicated metaverse anti-discrimination bill that requires platforms to provide culturally appropriate content-review algorithms and diverse default options. In addition, an independent monitoring body should be established to evaluate discrimination on platforms regularly.

Finally, greater collaboration between academia and industry is needed. Tech companies should actively work with anthropologists and sociologists so that bias is addressed from the design stage onward.
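One way an independent monitoring body of the kind proposed above might quantify the “special content” problem is to compare how often culturally specific items sit behind a paywall. The Python sketch below is purely illustrative: the catalogue entries, prices, category labels, and the `paid_share_by_category` function are hypothetical and are not taken from any real platform.

```python
from collections import defaultdict

# Hypothetical catalogue entries: (item name, cultural category, price).
# A price of 0 means the item is available by default.
catalogue = [
    ("denim jacket", "western", 0),
    ("evening dress", "western", 0),
    ("hoodie", "western", 5),
    ("hanbok", "non_western", 8),
    ("hanfu", "non_western", 6),
    ("kimono", "non_western", 0),
]


def paid_share_by_category(items):
    """Return the fraction of items in each category that cost money."""
    totals = defaultdict(int)
    paid = defaultdict(int)
    for _name, category, price in items:
        totals[category] += 1
        if price > 0:
            paid[category] += 1
    return {category: paid[category] / totals[category] for category in totals}


shares = paid_share_by_category(catalogue)
for category, share in shares.items():
    print(f"{category}: {share:.0%} of items require payment")
```

A real audit would need an agreed way of labelling items by cultural origin, which is itself a contested judgment; the sketch only shows that, once such labels exist, the disparity can be tracked as a simple, repeatable metric.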

5. Conclusion

This investigation shows that the metaverse replicates the inequalities of the real world. The shadow of inequality reaches every level of the metaverse, from the rigid templates of avatars to the exploitative logic of the data economy.

However, there is still reason for hope. The work of the Algorithmic Justice League (AJL) shows the courage of people fighting prejudice. With bottom-up forces for change and policy interventions working together, it is possible to build a more diverse and equitable virtual world. The construction of the metaverse is not just a debate about technology but a debate about what kind of digital future we want to create. The answer to this question will determine whether the virtual world ultimately becomes an instrument of liberation or a new form of oppression.

References

Decentraland – Marketplace. (2025). Decentraland.org. https://decentraland.org/marketplace/browse?assetType=item&section=wearables&vendor=decentraland&page=2&sortBy=recently_listed&search=+Chinese

Benjamin, R. (2019). Race after technology: Abolitionist tools for the New Jim Code. Polity Press.

Buolamwini, J. (2017). Gender Shades. Gendershades.org. http://gendershades.org/overview.html

Jin, D. Y. (2013). The Construction of Platform Imperialism in the Globalization Era. TripleC, 11(1), 145–172. https://doi.org/10.31269/triplec.v11i1.458

Lumsden, K., & Harmer, E. (Eds.). (2019). Online Othering: Exploring Digital Violence and Discrimination on the Web (1st ed.). Springer International Publishing. https://doi.org/10.1007/978-3-030-12633-9

Korinek, A. (2023, June 6). Metaverse economics part 1: Creating value in the Metaverse. Brookings. https://www.brookings.edu/articles/metaverse-economics-part-1-creating-value-in-the-metaverse/

Reddit – Did the VR-Chat Community became more Racist and Hateful in general ? (2018). Reddit.com. https://www.reddit.com/r/VRchat/comments/17ig6ts/did_the_vrchat_community_became_more_racist_and/

Reddit – The Meta Avatars Are Terrible. (2024). Reddit.com. https://www.reddit.com/r/virtualreality/comments/1bnwujz/the_meta_avatars_are_terrible/?utm_source

Nakamura, L. (2007). Digitizing Race: Visual Cultures of the Internet (NED-New edition, Vol. 23). University of Minnesota Press.

Wodecki, B. (2022). Officials Charge Meta Algorithms With Bias | IoT World Today. Iotworldtoday.com. https://www.iotworldtoday.com/metaverse/officials-charge-meta-algorithms-with-bias#close-modal

Zuboff, S. (2020). The age of surveillance capitalism : the fight for a human future at the new frontier of power (First Trade Paperback Edition.). PublicAffairs.
