In today’s digital world, privacy deserves the status of a fundamental human right, because platforms monitor every user activity and collect detailed personal information. People usually give up their privacy without thinking about it because they value the speed and connectivity that digital platforms offer. TikTok’s content discovery system, for example, has changed user behavior by exerting a largely unnoticed algorithmic control over what users experience.

This blog post breaks down how these systems operate and the effects they produce. We’ll start by unpacking how algorithms like TikTok’s “For You Page” shape our choices, then investigate why self-regulation by platforms is failing and how governments are struggling to keep up, and finally consider what collective solutions might look like.

The Algorithmic “For You Page”: Coincidence or Manipulation?

The recommendations you see on websites and apps constantly match what you have previously searched for and browsed. This kind of “accurate recommendation” is not a coincidence; it is part of a deliberate design strategy powered by algorithms.

TikTok’s “For You Page” (FYP) is a prime example. The system tracks not only your explicit likes but also how long you engage with each video, measured down to the millisecond. Watching a pet video only briefly may reduce pet recommendations, while lingering on political content tends to make similar content more prominent (Zannettou et al., 2024). This isn’t a neutral user experience. It is the construction of what scholars call a “filter bubble,” a term popularized by Eli Pariser (2011), or an “information cocoon”: an algorithmic system that isolates users from diverse viewpoints.

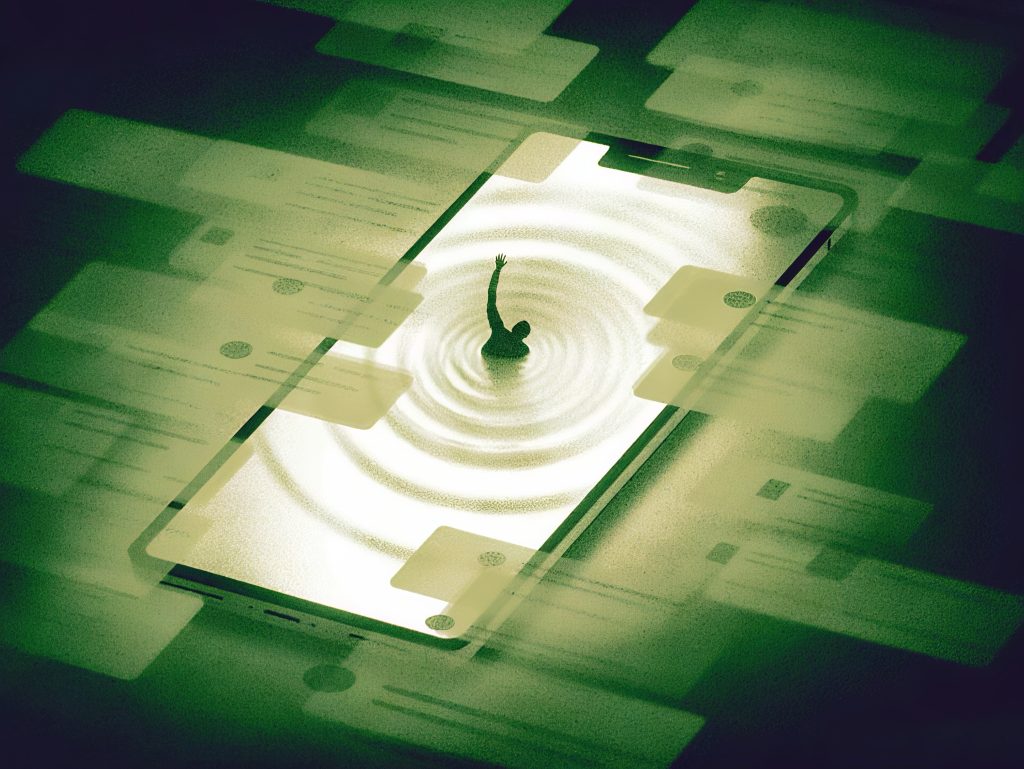

Through user profiling, algorithms take in behavioral data such as search history, browsing history, and location, and use it to select the information that shapes your choices and judgments. The longer you stay on the platform, the more advertising revenue it earns. Your attention is the main product.
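The watch-time feedback described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: TikTok’s real signals and weights are not public, and the names `update_interests`, `rank_topics`, `LIKE_WEIGHT`, `WATCH_WEIGHT`, and `SKIP_THRESHOLD` are hypothetical. It only shows how a quick skip can lower a topic’s score while lingering raises it.

```python
from collections import defaultdict

# Hypothetical weights, chosen only for illustration; real platform values are not public.
LIKE_WEIGHT = 1.0
WATCH_WEIGHT = 2.0
SKIP_THRESHOLD = 0.25  # a watched fraction below this is treated as a skip


def update_interests(interests, topic, watched_ms, video_ms, liked=False):
    """Nudge the interest score for `topic` based on a single viewing event."""
    watched_fraction = min(watched_ms / video_ms, 1.0)
    if watched_fraction < SKIP_THRESHOLD:
        # Skipping early counts against the topic.
        interests[topic] -= WATCH_WEIGHT * (SKIP_THRESHOLD - watched_fraction)
    else:
        # Lingering counts in favor of the topic.
        interests[topic] += WATCH_WEIGHT * watched_fraction
    if liked:
        interests[topic] += LIKE_WEIGHT
    return interests


def rank_topics(interests):
    """Return topics ordered from most to least favored."""
    return sorted(interests, key=interests.get, reverse=True)


interests = defaultdict(float)
update_interests(interests, "pets", watched_ms=1_000, video_ms=20_000)       # quick skip
update_interests(interests, "politics", watched_ms=19_000, video_ms=20_000)  # lingered
print(rank_topics(interests))  # ['politics', 'pets']
```

Even in this toy version, no explicit “like” is needed: passive viewing time alone is enough to reorder what the user would be shown next.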

Imagine entering a supermarket whose shelves are arranged around your specific interests, not to offer you the best choices but to keep you in the store longer. The layout is designed to steer you toward spending more; it does not really help you explore, and you end up spending more than you intended. The content recommendation algorithms on TikTok work the same way.

This is not serendipity but an elaborate “attention hunt.”

Platforms are not neutral providers of information, but rather “information filters” with enormous influence. Like store designers who place selected items prominently on shelves while tucking healthier or cheaper options out of sight in storage areas and hard-to-find corners, platforms decide what you see. More dangerously, this manipulation is not limited to consumer recommendations: political ideologies, racial biases, and conspiracy theories seep into public consciousness through algorithmic “information cocoons.”

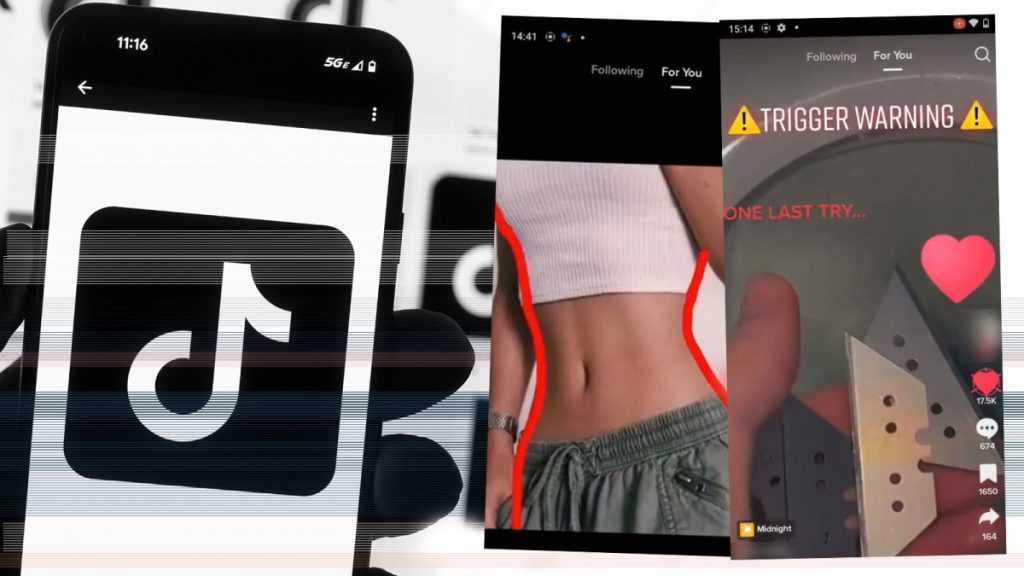

TikTok is not alone. YouTube’s recommendation system, for instance, traps users in similar feedback loops. A recent MIT Technology Review study found that YouTube prioritizes polarizing content, such as anti-vaccine videos, to increase watch time. The result? According to a 2023 Pew Research survey, 62% of users exposed to such viral content reported growing distrust of mainstream media reporting. Even Instagram, known for its aesthetic focus, has faced backlash for promoting “thinspiration” posts. A 2023 American Psychological Association study found a 30% increase in body dysmorphia among girls who continuously viewed such posts. The algorithms go beyond reflecting user preferences: they exploit the vulnerabilities of individual users.

Why Is Platform “Self-Regulation” Doomed to Failure?

Some experts argue that the most efficient approach is to let platforms police themselves, because they understand their own technologies and data best. However, this “self-regulation” model has clear limitations. ByteDance, which owns TikTok, is in a conflicted position: it is at once the rule-maker, the rule-enforcer, and the beneficiary of the rules.

In 2023, EU authorities fined TikTok €345 million for failing to properly protect children’s personal data. While the platform claims to have “optimized content moderation,” its business model prioritizes engagement over ethics. Extreme content generates clicks, and clicks drive advertising revenue. For instance, TikTok’s algorithm promotes “#thinspiration” videos to teens despite publicly banning pro-anorexia content, because such videos keep users glued 23% longer (Wall Street Journal, 2023).

This hypocrisy is systemic. A study of Twitter’s self-enforcement during the 2020 U.S. elections showed massive failure: research documents revealed that fewer than 0.5% of submitted posts were reviewed within the required deadlines (The Guardian, 2021). The Facebook-Cambridge Analytica scandal made it clear that companies let profit, not user security, guide their operations. Facebook claimed to be safeguarding data yet still permitted the political manipulation of 87 million user accounts (Kang, 2021). Platforms function as public infrastructure yet evade public accountability.

Even giants like Google falter. In 2022, Google Ads allowed climate change denial content to appear alongside legitimate environmental content in search results. The Digital Marketing Institute described how automated AI advertising bypassed human supervision because it favored volume over accuracy. Without ethics in decision-making, self-regulation turns from a genuine practice into empty pretense.

The Challenge of Government Regulation: From the “Trump Ban” to the Privacy Paradox

Harmful behavior involving young people on social media platforms is pushing governments toward mandatory oversight. In practice, however, government oversight faces numerous difficulties. On the one hand, it is difficult for laws and regulations to keep up with the rapid iteration of technology; on the other hand, governments themselves may use data for surveillance, which raises the concern that no one can be trusted to play the “regulator.”

Free Speech vs. Public Safety

Facebook and Twitter banned Trump’s accounts following the U.S. Capitol riot in 2021, sparking intense debate between public safety advocates and free speech defenders. How to balance government oversight of speech against the risk of excessive intervention requires careful examination. The basic rights to privacy and freedom of speech stand in permanent tension with each other. Privacy includes the “right to be forgotten,” but this right is often sacrificed for public safety or commercial interests (Francis & Francis, 2017); here, privacy is sacrificed for “national security.”

However, even well-intentioned policies backfire. India’s 2020 ban on TikTok sought to safeguard data sovereignty, yet users evaded the restrictions by accessing the platform through VPN services, which made the protective measures ineffective. Without international coordination, regulation becomes an endless cycle of putting out one fire while new ones start.

Although 91% of Americans fear data misuse, most willingly surrender their privacy in exchange for free services. Why? The answer lies in what scholars call the “privacy calculus”: users accept surveillance in order to access convenience (Acquisti et al., 2015). Singapore’s contact tracing app TraceTogether, for example, was widely adopted despite known privacy risks, according to Pew Research Center (2020) reporting.

Corporations exploit this vulnerability. Amazon Prime membership lets the company gather purchase data and sell it to other companies without members knowing they are being tracked. A 2023 FTC report showed that Prime users are not aware of this data sharing with third parties (Zahn & Barr, 2023). The Washington Post revealed in 2022 how Uber’s dynamic pricing uses location tracking to charge higher fares in affluent areas. Singaporeans had concerns about the privacy of their data in TraceTogether, yet they downloaded the app anyway because they put their safety above their anonymity. The trade-offs required to defend privacy are a major challenge, because few individuals can afford complete privacy.

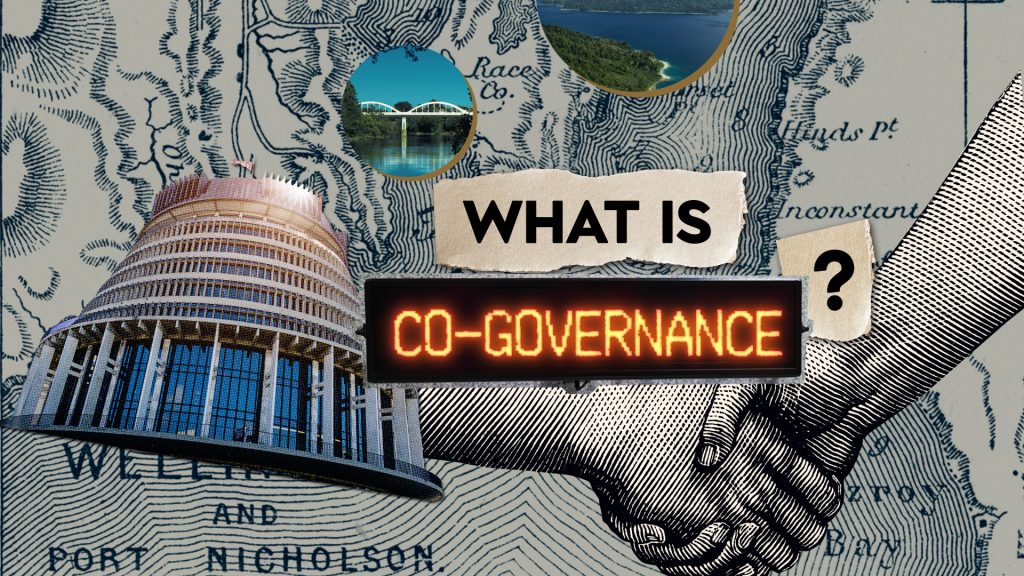

The Way Out: Shared Governance and the Digital Guerrilla Warfare of Ordinary People

When platforms hold power beyond the reach of governments, effective digital governance requires three main actors to work together through a model called co-governance. In this model, platforms, governments, and citizens all take part in making and checking the rules, which improves transparency, accountability, and fairness.

Co-governance is not a simple transfer of rights; it redefines how rights are distributed among the three parties. It requires platforms to accept greater accountability and tighter oversight of their operations, while the public gains stronger rights to participate.

- Platform Accountability: From Black Boxes to Glass Algorithms

TikTok needs to disclose how its algorithm weights different signals and to offer an option for turning off personalized recommendations. The EU’s Digital Services Act (DSA) brings partial transparency, but full transparency requires independent auditing. For example, Australia’s ACCC (2022) proposed “algorithmic impact assessments” to evaluate platforms’ societal harm.

Innovation offers hope. ARD, the German public broadcaster, created a transparent news recommendation system that lets users adjust its filtering criteria. Early prototype demonstrations reduced the display of extremist content by an estimated 40%, according to DW (2023). Whistleblowers matter as well: the 2021 Facebook leaks by Frances Haugen sparked legislative changes. Strong whistleblower protection, as in the EU’s Whistleblower Directive (2019), should become a global standard.
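To make the idea of a “glass algorithm” more concrete, here is a minimal sketch of a ranking function with published weights and a switch for turning off personalization. It is purely illustrative and does not describe any real platform’s system: the signal names, the values in `PUBLISHED_WEIGHTS`, and the `Video`, `score`, and `feed` names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical, publicly disclosed weights; names and values are illustrative only.
PUBLISHED_WEIGHTS = {"recency": 0.3, "popularity": 0.2, "personal_match": 0.5}


@dataclass
class Video:
    title: str
    recency: float         # 0..1, newer is higher
    popularity: float      # 0..1
    personal_match: float  # 0..1, similarity to the user's profile


def score(video, personalized=True):
    """Weighted sum of signals; the personal signal is dropped when the user opts out."""
    total = 0.0
    for signal, weight in PUBLISHED_WEIGHTS.items():
        if signal == "personal_match" and not personalized:
            continue
        total += weight * getattr(video, signal)
    return total


def feed(videos, personalized=True):
    """Return video titles ordered from highest to lowest score."""
    ranked = sorted(videos, key=lambda v: score(v, personalized), reverse=True)
    return [v.title for v in ranked]


videos = [
    Video("local news", recency=0.9, popularity=0.6, personal_match=0.1),
    Video("more of the same", recency=0.2, popularity=0.3, personal_match=1.0),
]
print(feed(videos, personalized=True))   # ['more of the same', 'local news']
print(feed(videos, personalized=False))  # ['local news', 'more of the same']
```

Because the weights sit in one readable table, an independent auditor (or a curious user) could see exactly why one item outranks another, and the opt-out visibly changes the result.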

- Government Regulation: Guardrails That Set Necessary Limits, Not Intrusion into Every Operation

Lawmaking should focus on outcomes rather than on prescribing general technical standards. South Korea’s Network Platform Fair Competition Act (2022), which allows regulators to impose penalties of 10% of company revenue when platforms show algorithmic bias, offers a solution other nations should consider adopting.

- Grassroots Resistance: Small Acts, Big Impact

The battle for digital autonomy isn’t fought in courtrooms or boardrooms; it’s waged through the quiet rebellions of ordinary users. From disabling algorithms to abandoning exploitative platforms, individuals are rewriting the rules of engagement, one click at a time.

Consider the #OptOut movement. When users realized TikTok’s algorithm trapped their attention in an endless scroll, many began disabling its “For You Page” recommendations, a feature buried three layers deep in settings. By 2023, searches for “turn off TikTok recommendations” surged by 300%. This isn’t just avoiding distractions; it’s a collective awakening to reclaim cognitive sovereignty.

But resistance goes beyond opting out. When Instagram flooded feeds with shopping content, Gen Z didn’t merely protest—they staged a digital exodus. Thousands migrated to BeReal, a minimalist app that limits users to one unfiltered daily post. The backlash was so fierce that Meta scrambled to reverse its algorithm changes. Here, resistance isn’t passive; it’s a negotiation through mass abandonment.

The most profound shift, however, emerges from self-organized communities. Take Mastodon’s rise: when Twitter’s infrastructure collapsed in 2022, Mastodon’s user base exploded by 200% within days. Unlike corporate platforms, Mastodon operates on decentralized servers where users define their own rules. No ads, no algorithmic manipulation—just raw human connection.

Digital Rights and Everyday Life: Why It Matters to You

Your understanding of digital rights might remain theoretical until the point when data practices shape your daily life without your being aware of it. Whether you are seeking employment, visiting websites, or obtaining insurance coverage, the data trails you produce determine which options are available to you.

Take job hunting, for example. Many companies use automated screening systems that scrutinize your social media interactions, your browsing behavior, and your search activity.

These algorithms may assign you a “digital score” (a concept similar to a credit score) that filters out resumes before a human ever sees them. What you post on TikTok and what you do on Instagram can subtly affect how employers assess your suitability for a job.

Your dating life is also exposed to privacy risks. Tinder uses location data, swipe habits, and daily usage patterns to run both pricing tests and promotional campaigns. Users in wealthy zip codes receive more visibility and stronger matching from the system. The result is a digital experience of uneven quality, and the hidden user profiling behind it produces social inequalities.

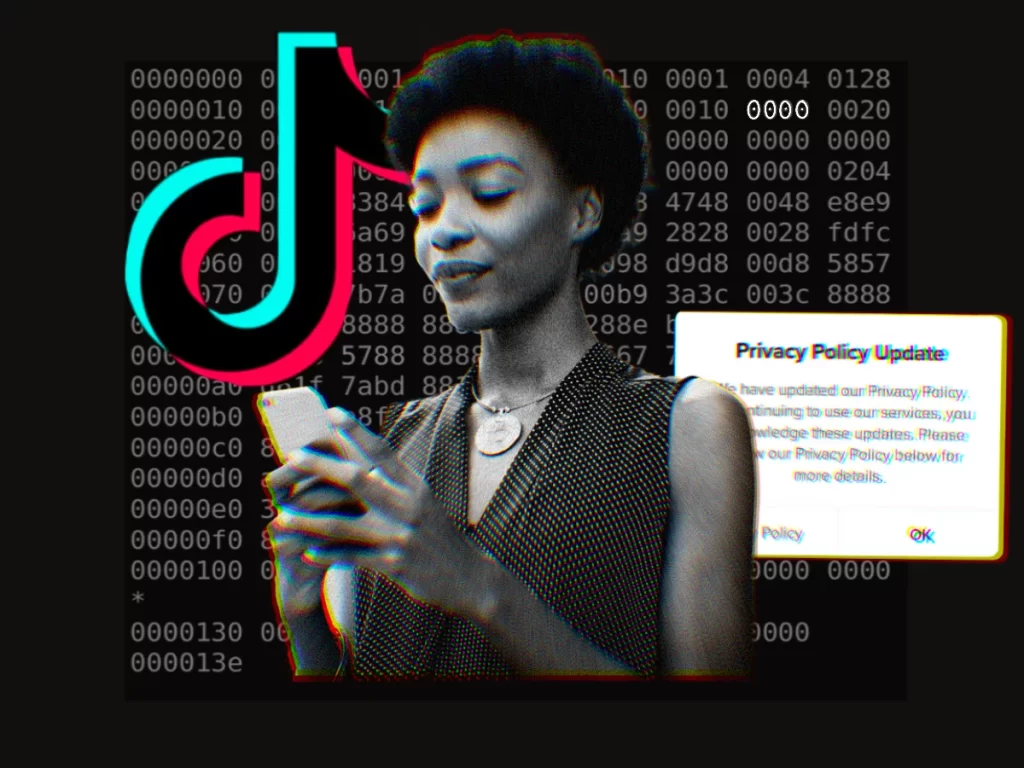

All of these problems stem from a lack of meaningful consent. Most users never fully read the “Terms of Service.” The complex language of these agreements hides crucial information about consequences from users. This leads to what privacy scholars call “consent fatigue”: the feeling that opting out is impossible, so we comply.

Evaluating platforms, algorithms, and information flows requires basic digital literacy. Workplaces, schools, and families all need to update their understanding of this essential matter. We have driving lessons to teach people how to operate vehicles, yet there is no formal education on how to navigate social media.

Privacy is not just about secrecy. At its core, it is about dignity, autonomy, and power. Without a proper understanding of how data is used and a meaningful ability to decline, users cannot truly consent to having their choices shaped.

Understanding how data operates in our daily lives enables us to better protect ourselves and others.

References

Acquisti, A., Brandimarte, L., & Loewenstein, G. (2015). Privacy and human behavior in the age of information. Science, 347(6221), 509–514. https://doi.org/10.1126/science.aaa1465

After TikTok ban: 100 fake apps emerge in India. (2020, July 5). Amz123.com. https://www.amz123.com/t/7psnDqda

DMI, S. (2025, March 4). The Ethical Use of AI in Digital Marketing. Digital Marketing Institute. https://digitalmarketinginstitute.com/blog/the-ethical-use-of-ai-in-digital-marketing

Francis, L., & Francis, J. G. (2017). Privacy: What everyone needs to know. Oxford University Press.

Jargon, J. (2023, May 13). TikTok Feeds Teens a Diet of Darkness. The Wall Street Journal. https://www.wsj.com/tech/personal-tech/tiktok-feeds-teens-a-diet-of-darkness-8f350507

Kang, C. (2021, October 26). Facebook Faces a Public Relations Crisis. What About a Legal One? The New York Times. https://www.nytimes.com/2021/10/26/technology/facebook-sec-complaints.html

Lee, S. (2022, December 13). Main Developments in Competition Law and Policy 2022 – Korea. Kluwer Competition Law Blog. https://competitionlawblog.kluwercompetitionlaw.com/2022/12/13/main-developments-in-competition-law-and-policy-2022-korea/

McClain, C., & Rainie, L. (2020). The Challenges of Contact Tracing as U.S. Battles COVID-19. Pew Research Center.

Murphy, H., & McGee, P. (2020, April 11). Apple and Google join forces to develop contact-tracing apps. Financial Times. https://www.ft.com/content/8a11bf86-dd71-4fd2-b196-e3ddffe48936

Pariser, E. (2011). The Filter Bubble: What the internet is hiding from you. Penguin Press.

Paul, K. (2022, October 6). Disinformation in Spanish is prolific on Facebook, Twitter and YouTube despite vows to act. The Guardian. https://www.theguardian.com/media/2022/oct/06/disinformation-in-spanish-facebook-twitter-youtube

Solon, O. (2017, May 23). To censor or sanction extreme content? Either way, Facebook can’t win. The Guardian. https://www.theguardian.com/news/2017/may/22/facebook-moderator-guidelines-extreme-content-analysis

Zahn, M., & Barr, L. (2023, June 22). FTC sues Amazon for allegedly tricking millions of users into Prime subscriptions. ABC News. https://abcnews.go.com/Business/ftc-sues-amazon-allegedly-tricking-millions-users-prime/story?id=100274058
